
A comprehensive and systematic literature review on the big data management techniques in the internet of things

- Original Paper

- Published: 15 November 2022

- Volume 29, pages 1085–1144 (2023)


- Arezou Naghib nAff1 ,

- Nima Jafari Navimipour 2 , 3 ,

- Mehdi Hosseinzadeh 4 , 5 , 6 &

- Arash Sharifi 1


The Internet of Things (IoT) is a communication paradigm and a collection of heterogeneous interconnected devices. It produces large-scale, distributed, and diverse data called big data. Big Data Management (BDM) in the IoT is used for knowledge discovery and intelligent decision-making and is one of the most significant research challenges today. There are several mechanisms and technologies for BDM in the IoT. This paper systematically studies the important mechanisms in this area, covering articles published between 2016 and August 2022. Initially, 751 articles were identified, and a paper selection process reduced them to 110 significant studies. BDM mechanisms in the IoT are studied in four categories: BDM processes, BDM architectures/frameworks, quality attributes, and big data analytics types. The paper also presents a detailed comparison of the mechanisms in each category. Finally, the development challenges and open issues of BDM in the IoT are discussed. The results show that predictive analysis and classification methods are used in many articles. On the other hand, some quality attributes, such as confidentiality, accessibility, and sustainability, receive less attention. Also, none of the articles uses key-value databases for data storage. This study can help researchers develop more effective BDM methods for the IoT in complex environments.


1 Introduction

The Internet of Things (IoT) is an emerging information technology model and a dynamic network that enables interaction between self-configuring, smart, interconnected devices and humans [ 1 ]. The IoT's ubiquitous data collection devices (such as Radio-Frequency Identification (RFID) tags, sensors, Global Positioning Systems (GPS), Geographical Information Systems (GIS), drives, Near-Field Communication (NFC), actuators, and mobile phones) collect and share real-time, mobile, and environmental data for automatic monitoring, identification, processing, maintenance, and control [ 2 , 3 , 4 ]. The IoT ecosystem generally has five main components: IoT devices, including sensors and actuators, which collect data and perform actions on things; IoT connectivity, including protocols and gateways, which is responsible for communication between smart devices, gateways, and the cloud; an IoT cloud, which is responsible for data storage, processing, analysis, and decision-making; IoT analytics and data management, which are responsible for processing the data; and end-user devices and user interfaces, which help to control and configure the system [ 5 ]. The most important applications of IoT include environmental monitoring, disaster management, smart homes/buildings, smart farms, healthcare, smart cities, urban planning, smart manufacturing, intelligent transport systems, smart floods, financial risk management, supply chain management, water management, enterprise culture, cultural heritage, smart surveillance, military tracking and environment, digital forensics, underwater environments, and understanding social phenomena [ 6 , 7 , 8 , 9 , 10 , 11 , 12 , 13 , 14 , 15 , 16 , 17 , 18 , 19 , 20 , 21 , 22 ]. The IoT devices and sensors in Wireless Sensor Networks (WSN) generate large volumes of data. According to an International Data Corporation (IDC) forecast, there will be 41.6 billion IoT devices generating 79.4 zettabytes of data in 2025.
This massive volume of structured, semi-structured, and unstructured data, which expands rapidly over time, constitutes "Big Data" [ 23 ]. "Big data" technologies are a new generation of distributed architectures and technologies that provide distributed data mining capabilities to extract value inexpensively and effectively from huge datasets characterized by volume, velocity, variety, variability, veracity, and value [ 24 ]. Big data provides both opportunities and problems for organizations and enterprises. It can improve data precision, support forecasting and decision-making, and give stakeholders more in-depth analytical findings [ 2 ]. However, traditional data processing systems cannot collect, process, manage, and interpret such data effectively using conventional mechanisms. Big data therefore requires a scalable architecture or framework for effective capture, storage, management, and analysis [ 25 ].

A major challenge in implementing IoT in real and complex environments is analyzing large volumes of heterogeneous data that contain a wide variety of knowledge content [ 26 ]. Various platforms, tools, and technologies have been developed for big data monitoring, collection, ingestion, storage, processing, analysis, and visualization [ 10 , 27 ]. They include Apache Hadoop, MapReduce, 1010data, Apache Storm, Cloudera, Cassandra, HP HAVEn, SAP HANA, Hortonworks, MongoDB, Apache Kafka, Apache Spark, and Infobright. Industries and enterprises use Big Data Analytics (BDA) with IoT technologies to handle the timely analysis of information streams and intelligent decision-making [ 28 , 29 , 30 ]. BDM in the IoT involves different analytic types [ 31 ]. Marjani et al. [ 29 ] discussed analytic types at the real-time, offline, memory, business intelligence, and massive levels. Singh and Yassine [ 28 ] divided analytic types into preprocessing, pattern mining, and classification. Gandomi and Haider [ 32 ] divided big data processing into two major phases: data management and data analytics. Ahmed et al. [ 33 ] described five aspects of big data: acquisition and storage; programming model; benchmark process; analysis; and application. Finally, ur Rehman et al. [ 34 ] divided BDA into five main steps: data ingestion, cleaning, conformation, transformation, and shaping.

However, despite the importance of BDM in the IoT and the rising challenges in this area, to the best of our knowledge, no complete and detailed systematic review exists in this field. Hence, this paper analyzes the mechanisms of BDM in the IoT. The main contributions of this paper are as follows:

Presenting a study of the existing methods for BDM in the IoT.

Dividing BDM methods in the IoT into four main categories: BDM processes, BDM architectures/frameworks, quality attributes, and big data analytics types.

Dividing the BDM process in the IoT into six main steps, including data collection, communication, data ingestion, data storage, processing and analysis, and post-processing.

Dividing the BDM architecture/framework in the IoT into two main subcategories: BDM architectures/frameworks in IoT-based applications and BDM architectures/frameworks in the IoT paradigms.

Exploring the primary challenges, issues, and future works for BDM in the IoT.

The following subsection discusses related work to show the main differences between this review and similar studies. Also, the abbreviations used in this paper are presented in Table 1 .

1.1 Related work and contributions of this review

This section studies review and survey articles on BDM in the IoT to highlight the need for the present review. It also describes the main advantages and disadvantages of each article to distinguish this review from them.

Ahmed et al. [ 27 ] analyzed several techniques for IoT-based big data. This article categorizes the literature based on parameters, including big data sources, system components, big data enabling technologies, functional elements, and analytics types. The authors also discussed connectivity, storage, quality of services, real-time analytics, and benchmarking as the critical requirements for big data processing and analytics.

Constante Nicolalde et al. [ 35 ] overviewed the technical tools used to process big data and discussed the relationship between BDA and IoT. The big data challenges are divided into four general categories: data storage and analysis; the discovery of knowledge and computational complexities; information security; and scalability and data visualization.

Talebkhah et al. [ 36 ] investigated the architecture, challenges, and opportunities of big data systems in smart cities. This article suggested a 4-layer architecture for BDM in smart cities. The layers of this architecture are data acquisition, data preprocessing, data storage, and data analytics. This article also considered the opportunities and challenges for smart cities, such as heterogeneity, design and maintenance costs, failure management, and throughput.

Bansal et al. [ 37 ] investigated state-of-the-art research on IoT and BDM. This article proposed a taxonomy based on BDM in the IoT applications, including smart transport, smart cities, smart buildings, and smart living. BDM steps are considered as data acquisition, communication, storage, processing, and retrieval. Also, the related surveys on BDM were divided into three general categories: surveys on IoT BDA, domain-specific surveys on IoT big data, and surveys on challenges in IoT big data. The authors classified the articles based on four major vendor services (Google, Amazon, Microsoft, and IBM) to integrate IoT and IoT big data with case studies. The big data management challenges in the IoT are considered based on 13 V’s challenges.

Marjani et al. [ 29 ] investigated state-of-the-art research efforts directed toward big IoT data analytics and proposed a new architecture for big IoT data analytics. This article discusses big IoT data analytic types under real-time, offline, memory-level, business intelligence, and massive level analytics categories.

Simmhan and Perera [ 38 ] presented the analytics requirements of IoT applications. They defined the relationship between data volume capacity and processing latency of new big data platforms. This article divided decision systems into visual analytics, alerts and warnings, reactive systems, control and optimization, complex systems, knowledge-driven intelligent systems, and behavioral and probabilistic systems.

Shoumy et al. [ 39 ] discussed frameworks and techniques for multimodal big data analytics. They divided multimodal big data analytics techniques into four topics: affective framework; multimodal framework; big data and analytics framework; and fusion techniques. Furthermore, Ge et al. [ 40 ] discussed the similarities and differences among big data technologies used in IoT domains and developed a conceptual framework. This article interpreted big data research and application opportunities in eight IoT domains (healthcare, energy, transportation, building automation, smart cities, agriculture, industry, and military) and discussed the advantages and disadvantages of big data technologies. In addition, it examined four aspects of big data processes: storage, cleaning/cleansing, analysis/analytics, and visualization.

Siow et al. [ 41 ] considered the analytics infrastructure from data generation, collection, integration, storage, and computing. This article presented a comprehensive classification of analytical capabilities consisting of five categories: descriptive, diagnostic, discovery, predictive, and prescriptive analytics. In addition, a 3-layered taxonomy of data analytics was presented, including data, analytics, and applications.

Fawzy et al. [ 42 ] investigated the techniques and technologies of IoT systems from BDA architectures and software engineering perspectives. This article proposed a taxonomy based on BDA systems in the IoT, including smart environments, human, network, energy, and environmental analytics. It also considered the BDA target, approach, technology, challenges, software architecture and design, model-driven engineering, separation of concerns, and system validation and verification. The authors presented the IoT data features as multidimensional, massive, timely, heterogeneous, inconsistent, traded, valuable, and spatially correlated. The proposed domain-independent BDA-based IoT architecture has six layers: data manager, system resources controller, system recovery manager, BDA handler, software engineering handler, and security manager.

Zhong et al. [ 43 ] investigated using BDA and data mining techniques in the IoT. This article divided the review articles into four categories: architecture and platform, framework, applications, and security. The data mining methods for BDA in the IoT were discussed in these four categories. The challenges investigated in the article are as follows: data volume, data diversity, speed, data value, security, data visualization, knowledge extraction, and real-time analysis.

Hajjaji et al. [ 44 ] discussed applications, tools, technologies, architectures, current developments, challenges, and opportunities in big data and IoT-based applications in smart environments. This article divided the benefits of combining the IoT and big data into six categories: multi-source and heterogeneous data; connectivity; data storage; data analysis; and cost-effectiveness.

Ahmadova et al. [ 45 ] discussed big data applications in the IoT. They proposed a taxonomy of big data in the IoT that includes healthcare, smart cities, security, big data algorithms, industry, and general view. In the article, the authors discussed big data technologies' advantages and disadvantages for IoT domains. Also, the evaluation factors that are considered in the article are security, throughput, cost, energy consumption, reliability, response time, and availability.

Table 2 summarizes the contributions of the related survey articles. For each article, the table shows the publication year, methodology, discussion, and disadvantages. Given the weaknesses of the existing review articles, this paper presents a systematic literature review and a proper categorization of BDM mechanisms in the IoT that addresses these shortcomings as follows:

This paper provides a complete research methodology that includes research questions and the article selection process.

This paper discusses the newly proposed mechanisms for BDM in the IoT between 2016 and August 2022.

This paper considers the architectures/frameworks of IoT-based applications, including healthcare, smart cities, smart homes/buildings, intelligent transport, traffic control and energy, urban planning, and other IoT applications (smart IoT systems, smart flood, smart farms, disaster management, laundry, digital manufacturing, and smart factory).

This paper investigates the quality attributes and categorizes the review articles based on the quality attributes used and the reference model of standard software quality attributes, i.e., ISO 25010.

This paper classifies the review articles based on BDA types in the IoT and their tactics.

This paper considers the big data storage systems and tools in the IoT based on relational databases, NoSQL databases, distributed file systems, and cloud/edge/fog/mist storage.

This paper discusses the BDM process in six steps: data collection, communication, data ingestion, data storage, processing and analysis, and post-processing, and proposes the main tools in each step.

This paper presents open issues and challenges on BDM in the IoT and divides challenges into two categories: BDM in the IoT and quality attributes challenges.

The rest of the paper is structured as follows: Sect. 2 explains the research methodology and the article selection process. The categories of the BDM methods in the IoT and their comparison are described in Sect. 3 . Section 4 discusses the challenges and some open issues. Finally, Sect. 5 presents the conclusion and the paper’s limitations.

2 Research methodology

Systematic literature review (SLR) is a research methodology that examines data and findings of the researchers relative to specified questions [ 46 , 47 ]. It aims to find as much relevant research on the defined questions as possible and to use explicit methods to identify what can reliably be said based on these studies [ 48 , 49 ]. This section provides an SLR to understand the BDM techniques in the IoT. The following subsection will explain the research questions and the article selection process.

2.1 Research questions

This study focuses on articles related to BDM in the IoT, specifically their advantages and disadvantages, architectures, processing and analysis methods, storage systems, evaluation metrics, and tools. To achieve these goals, the following research questions are posed.

RQ1: What is BDM in IoT?

Section 1 answered this question.

RQ2: What is the importance of BDM in the IoT?

This question aims to show the number of published articles about BDM in IoT between 2016 and August 2022.

Section 2 answers this question.

RQ3: How are the articles searched and chosen to be assessed?

Section 2.2 discusses the question.

RQ4: What are the classifications of BDM methods in the IoT?

This question aims to show the existing methods of BDM in the IoT environment. Section 3 will discuss this answer.

RQ5: What are the challenges and technical issues of BDM in the IoT?

This question identifies the challenges for BDM in the IoT and provides open issues for future research. Section 4 will discuss this answer.

2.2 Article selection process

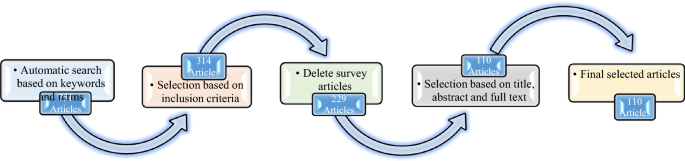

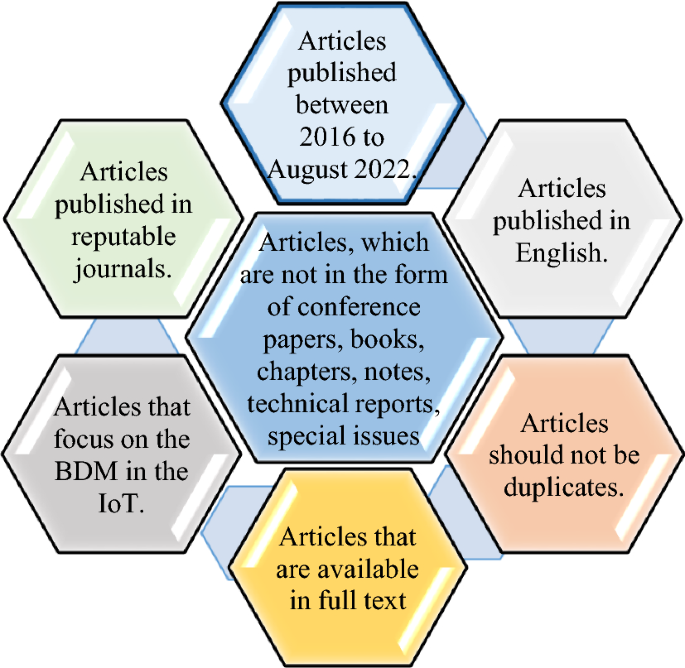

In this study, the article search and selection process consists of three stages, shown in Fig. 1 . In Stage 1, articles published between 2016 and August 2022 were searched using the keywords and terms presented in Table 3 . The search covered popular electronic databases: Google Scholar, Elsevier, ACM, IEEE Xplore, Emerald Insight, MDPI, Springer Link, Taylor and Francis, Wiley, JST, Dblp, DOAJ, and ProQuest. The articles include journals, chapters, conference papers, books, notes, technical reports, and special issues. Stage 1 yielded 751 articles. Stage 2 has two steps. First, the articles are filtered using the inclusion criteria in Fig. 2 , which leaves 314 articles. Next, the review articles are removed; of these 314 articles, 85 (27.07%) were review articles. Elsevier has the highest number of review articles (31.76%, 27 articles). Emerald and Taylor and Francis have the lowest number of review articles (2.35%, one article). The highest number of review articles was published in 2019 (24.71%), and the lowest in 2022 (8.24%). After this step, 229 articles remain. In Stage 3, the title and abstract of each article are reviewed. To ensure that the articles are relevant to the study, we also reviewed the methodology, evaluation, discussion, and conclusion sections. This stage retains 110 selected articles. Elsevier published most of the selected articles (30.91%, 34 articles), and ACM the fewest (0.91%, one article). The year 2018 has the highest number of published articles (26.36%, 29 articles). Among journals, Future Generation Computer Systems published the highest number of articles (11.82%, 13 articles).

Fig. 1 Article search and selection process stages

Fig. 2 Inclusion criteria in the article selection process
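The counts and percentages reported for the selection process can be re-derived with simple arithmetic. The following is a minimal Python sketch; the variable names and the helper `share` are illustrative only and are not part of the original study.

```python
# Counts reported in the article selection process (Sect. 2.2).
identified = 751        # Stage 1: articles found by keyword search
after_inclusion = 314   # Stage 2, step 1: inclusion criteria applied
review_articles = 85    # Stage 2, step 2: review articles to remove
selected = 110          # Stage 3: final set of selected articles


def share(part, whole):
    """Return part/whole as a percentage rounded to two decimals."""
    return round(100 * part / whole, 2)


# Articles remaining once the review articles are removed.
after_review_removal = after_inclusion - review_articles

print(after_review_removal)                     # 229
print(share(review_articles, after_inclusion))  # 27.07
print(share(27, review_articles))               # 31.76 (Elsevier review articles)
print(share(34, selected))                      # 30.91 (Elsevier selected articles)
print(share(29, selected))                      # 26.36 (articles from 2018)
print(share(13, selected))                      # 11.82 (Future Generation Computer Systems)
```

Each percentage is taken relative to the set it describes: review-article shares use the 85 review articles as the denominator, and selected-article shares use the final 110.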

3 Big data management approaches in the IoT

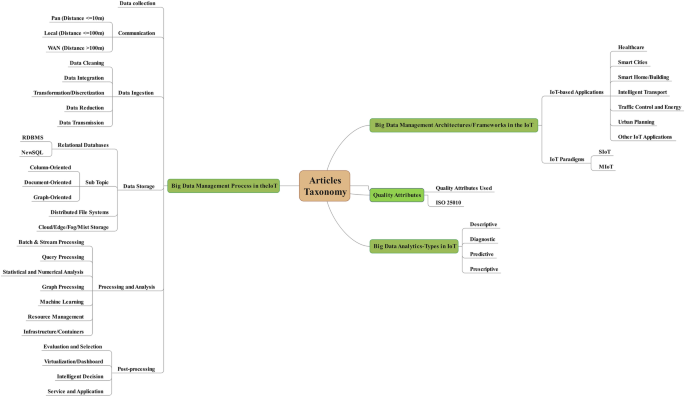

This section presents four different categories for the reviewed articles. These categories include the BDM process in the IoT (Sect. 3.1 ), BDM architectures/frameworks for IoT applications (Sect. 3.2 ), quality attributes (Sect. 3.3 ), and big data analytics types (Sect. 3.4 ). Each category has subcategories that will be considered in its relevant section. Figure 3 shows this taxonomy.

Fig. 3 Taxonomy of the selected articles

3.1 Big data management process in the IoT

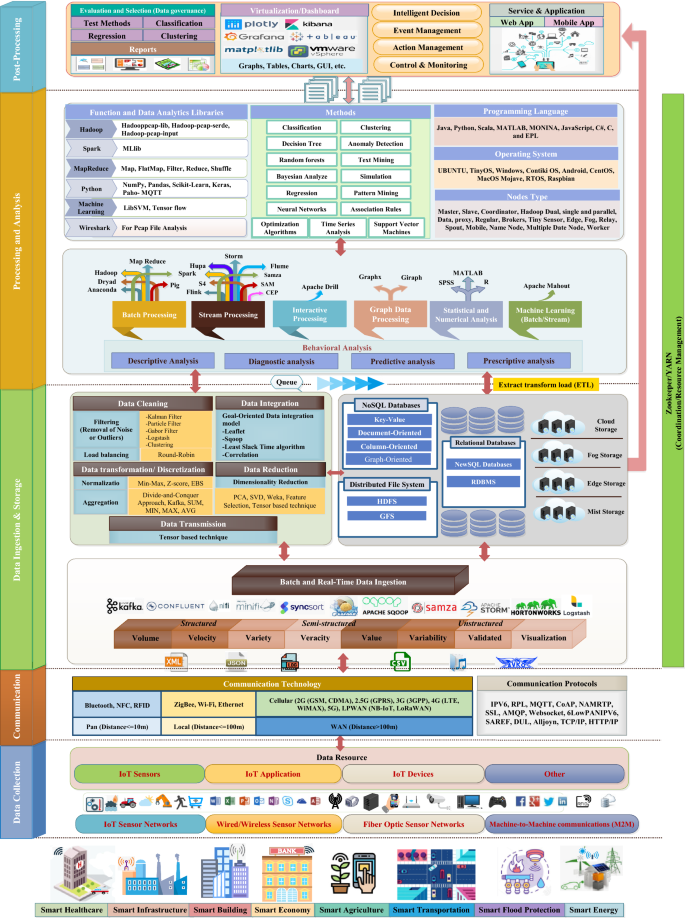

This section categorizes articles based on BDM process mechanisms and presents a comprehensive framework for BDM in the IoT. The comprehensive framework for BDM in the IoT is shown in Fig. 4 . The steps of BDM in IoT include data collection, communication, data ingestion, storage, processing and analysis, and post-processing.

Fig. 4 Big data management framework in IoT

3.1.1 Data collection

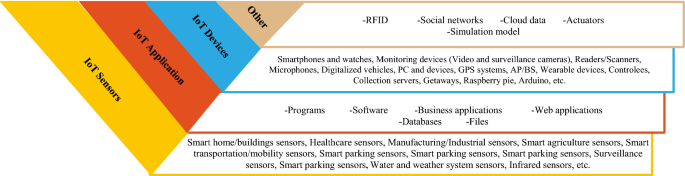

A variety of sources generates IoT data. There are different mechanisms for IoT data collection, but there is still no fully efficient and adaptive mechanism for IoT data collection [ 50 ]. This paper divides IoT sources into sensors, applications, devices, and other resources. Figure 5 shows the classification of the sources based on these four categories.

Fig. 5 Big data sources categories in IoT

3.1.2 Communication

The data sources are located on various networks, such as IoT sensor networks, wired and wireless sensor networks, fiber-optic sensor networks, and machine-to-machine communications. Communication technologies are required to transfer the data from these sources for processing and analysis [ 51 , 52 ]. There are several communication technologies and protocols in the IoT. The communication protocols used in the articles are IPV6, RPL, MQTT, CoAP, SSL, AMQP, Websocket, 6LowPANIPV6, Alljoyn, TCP/IP, and HTTP/IP. Communication technologies are compared based on frequency, data rate, range, power usage, cost, latency, and similar criteria, and they can be categorized in several ways. This paper divides big data communication technologies in the IoT by distance into three categories: Personal Area Network (PAN), Local Area Network (LAN), and Wide Area Network (WAN). Table 4 shows the articles' classification based on these three categories. Wi-Fi, ZigBee, Bluetooth, and 4G LTE are the most frequently used communication technologies, appearing in 29, 19, 17, and 17 articles, respectively.

3.1.3 Data ingestion

Data ingestion is the process of importing and transporting data in different formats from various sources (shown in Fig. 4 ) to a storage medium, a processing and analysis platform, and decision support engines [ 93 , 94 ]. The quality of the dataset used by ML-based prediction models (classification) plays a vital role in BDM in the IoT. A prediction model requires a large amount of correctly labeled data for correct construction, assessment, and accurate result generation [ 95 ]. Therefore, the data ingestion layer should handle the enormous volume, high velocity, variety, value, variability, and validity of the data before the processing and analysis step. The tasks assigned to this layer differ between articles. The data ingestion layer in [ 96 ] includes identification, filtration, validation, noise reduction, integration, transformation, and compression. The data ingestion layer in [ 97 ] provides data synchronization, data slicing, data splitting, and data indexing. The data ingestion layer in [ 98 ] includes data stream acquisition, data stream extraction, enrichment, integration, and data stream distribution. Finally, the data ingestion layer in [ 99 ] includes data cleaning, data integration, and data compression.

There are three categories of data ingestion technologies: real-time data ingestion, batch data ingestion, and a combination of both. Real-time data ingestion is used for time-sensitive data and real-time intelligent decision-making. Batch data ingestion is used for collecting data from sources at regular intervals (daily reports and schedules) [ 100 ]. There are many tools and platforms for data ingestion, such as Apache Kafka, Apache NiFi, Apache Storm, Apache Flume, Apache Sqoop, Apache Samza, Apache MiNiFi, Confluent Platform, and Elastic Logstash. These tools can be compared based on throughput, latency, scalability, and security [ 98 ]. The data ingestion layer in this paper includes data cleaning, data integration, data transformation/discretization, and data reduction. Each of these steps uses its own tools, methods, and algorithms. Table 5 categorizes the articles by the tools used for data ingestion and compares these tools based on ingestion type, throughput, reliability, latency, scalability, security, and fault tolerance. Platforms in some articles combine several of these tools, such as the Hortonworks DataFlow platform in [ 101 ], which includes Apache NiFi/MiNiFi, Apache Kafka, Apache Storm, and Druid. As Table 5 shows, Apache Kafka is the most frequently used data ingestion tool, appearing in 8 articles. Table 6 categorizes the articles by the big data preprocessing stage in the IoT.
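The four ingestion steps named above (data cleaning, data integration, data transformation/discretization, and data reduction) can be sketched on a toy batch of sensor readings. This is a minimal illustration in plain Python, not the method of any reviewed article; the record layout, the 30 °C threshold, and all function names are assumptions made for the example.

```python
from statistics import mean

# Hypothetical readings from two sources; None marks a missing value.
source_a = [
    {"sensor": "t1", "ts": 0, "temp_c": 21.0},
    {"sensor": "t1", "ts": 1, "temp_c": None},
    {"sensor": "t1", "ts": 2, "temp_c": 35.5},
]
source_b = [
    {"sensor": "t2", "ts": 0, "temp_c": 19.5},
    {"sensor": "t2", "ts": 1, "temp_c": 20.5},
]


def clean(records):
    """Data cleaning: drop records whose measurement is missing."""
    return [r for r in records if r["temp_c"] is not None]


def integrate(*sources):
    """Data integration: merge sources into one list ordered by timestamp."""
    merged = [r for src in sources for r in src]
    return sorted(merged, key=lambda r: (r["ts"], r["sensor"]))


def discretize(records, threshold=30.0):
    """Transformation/discretization: map each temperature to a label."""
    return [
        {**r, "level": "high" if r["temp_c"] >= threshold else "normal"}
        for r in records
    ]


def reduce_by_sensor(records):
    """Data reduction: keep one mean value per sensor."""
    by_sensor = {}
    for r in records:
        by_sensor.setdefault(r["sensor"], []).append(r["temp_c"])
    return {sensor: mean(values) for sensor, values in by_sensor.items()}


cleaned = integrate(clean(source_a), clean(source_b))
labeled = discretize(cleaned)
summary = reduce_by_sensor(labeled)
print(summary)
```

In a batch setting the functions run over a stored interval of data, as here; in a real-time setting the same steps would be applied per record or per small window as data arrives.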

3.1.4 Data storage

This subsection categorizes articles based on storage mechanisms. The articles use various methods and tools to store big data. This study divides these mechanisms into four categories: relational, NoSQL, Distributed File Systems (DFS), and cloud/edge/fog/mist storage. Each of these categories has subcategories. One of the most critical big data challenges is categorization and scalability, which traditional relational databases such as MySQL, SQL Server, and Postgres cannot overcome. Therefore, NoSQL databases are used to store big data. NoSQL technologies are divided into four categories: key-value, column-oriented, document-oriented, and graph-oriented [ 102 ]. Many platforms support these NoSQL technologies. Key-value storage is the most straightforward and highly flexible type of NoSQL database and stores all data as pairs of keys and values. A column-oriented database stores data as a set of columns, whereas a relational database stores data in rows and reads it row by row. A document-oriented database stores data as semi-structured documents. A graph database focuses on the relationships between data elements, and each element is stored as a node. Tables 7 and 8 show the types of storage methods used in the articles. Table 7 classifies the articles based on relational databases, NoSQL databases, and DFS. None of the 110 selected articles uses a key-value database. The most commonly used tools are Hive among relational databases, HBase among NoSQL databases, and HDFS among distributed file systems. Table 7 also compares these storage tools and platforms based on in-memory or disk-based storage, data type, scalability, security, availability, flexibility, performance, fault tolerance, ease of use, and replication.
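The four NoSQL data models can be contrasted by expressing the same kind of sensor data in each. The sketch below uses plain Python containers as stand-ins for the storage layouts; it does not use any real database API, and all keys, field names, and values are hypothetical.

```python
import json

# Key-value: an opaque value looked up by a single key.
key_value = {"sensor:42:latest": '{"temp_c": 21.5, "ts": 1700000000}'}

# Column-oriented: the values of each column are stored together.
column_store = {
    "sensor_id": [42, 42, 43],
    "temp_c": [21.5, 22.0, 19.0],
}

# Document-oriented: self-describing, possibly nested documents.
documents = [
    {"_id": 1, "sensor_id": 42, "reading": {"temp_c": 21.5}, "tags": ["lab"]},
]

# Graph-oriented: nodes plus explicit relationships (edges) between them.
nodes = {"s42": {"type": "sensor"}, "gw1": {"type": "gateway"}}
edges = [("s42", "CONNECTED_TO", "gw1")]

# Key-value access: fetch by key, then decode the value on the client side.
latest = json.loads(key_value["sensor:42:latest"])

# Column layout: a per-column aggregate scans one list only.
avg_temp = sum(column_store["temp_c"]) / len(column_store["temp_c"])

# Document layout: nested fields are addressed inside the document.
lab_docs = [d for d in documents if "lab" in d["tags"]]

# Graph layout: relationships are traversed directly.
neighbors = [dst for src, _rel, dst in edges if src == "s42"]

print(latest["temp_c"], avg_temp, len(lab_docs), neighbors)
```

The access patterns at the end hint at why the models suit different workloads: key lookups, column scans, nested-field queries, and relationship traversals, respectively.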

Table 8 shows the classification of articles based on cloud/edge/fog/mist storage. Cloud computing provides scalable computing, large storage capacity, and processing power, and it ensures the quality of applications. However, it faces challenges such as latency, network overhead, bandwidth, data privacy, lower real-time responsiveness, location awareness, security, reliability, data availability, and accessibility [ 103 ]. Network architectures such as fog, edge, and mist computing emerged to overcome these challenges; they move data and computation closer to the consumer and offload some of the workload from the cloud [ 104 ].

Fog computing is a type of decentralized computing that sits between cloud storage and IoT devices. Fog computing reduces service latency, bandwidth, energy consumption, storage, and computing costs and improves the QoS [ 149 ]. The fog computing model for the IoT supports real-time services, mobility, and geographic distribution [ 150 ]. Another alternative to cloud computing is edge computing. In edge computing, data storage and processing occur closer to the device or data source to improve data locality, performance, and decision-making [ 151 ]. Edge computing is less scalable than fog computing but provides near real-time analytics and high-speed data access and reduces data leakage during transmission [ 104 , 152 ]. Mist computing is an intermediate layer between fog/cloud and edge computing. It can mitigate fog/cloud challenges such as response time, location awareness, data privacy, local decision-making, network overhead, latency, and computing and storage costs. Mist nodes have low processing power and storage [ 153 ]. Some articles use HDFS and NoSQL databases alongside cloud/edge/fog/mist storage. The goal is to overcome the disadvantages of each technology by using them together.

3.1.5 Processing and analysis

Big data processing and analysis in the IoT are techniques or programming models for extracting knowledge from large amounts of data to support intelligent decisions [ 154 ]. Efficient big data processing and analysis in the IoT can help mitigate many challenges in event management, action management, control and monitoring, customer service, cost savings, and business relationships [ 155 ]. This paper divides the big data processing and analysis step in the IoT into a set of sub-steps: batch and stream processing, query processing, statistical and numerical analysis, graph processing, ML, resource management, and infrastructure/containers. Table 9 shows the articles' classification and compares the tools based on the following criteria: throughput, reliability, availability, latency, scalability, security, flexibility, ease of use, and cost-effectiveness. Big data processing in the IoT is generally done at both batch and stream levels. Many tools, platforms, and frameworks exist for batch and stream processing. The tools used in the articles are Apache Hadoop, Apache Spark, MapReduce, Apache Storm, Apache Flink, Anaconda, Apache S4, Weka, streaming analytics manager, and CEP.

As Table 9 shows, Apache Hadoop, MapReduce, and Apache Spark are the most frequently used tools, appearing in 45, 32, and 31 articles, respectively. Some of these tools include a set of libraries and procedures for efficient processing and analysis. The libraries and functions used by the articles are Hadoop-pcap-lib, Hadoop-pcap-serde, Hadoop-pcap-input (Apache Hadoop), MLlib, GraphX, Spark Streaming, Spark SQL, Spark Core (Apache Spark), Map, FlatMap, Filter, Reduce, Shuffle (MapReduce), Gelly, FlinkML, Table and SQL, FlinkCEP (Apache Flink), NumPy [ 132 ], Keras [ 108 ], Pandas [ 59 ], Scikit-Learn, and Paho-MQTT (Anaconda). Various algorithms and methods are also used to process and analyze data, such as classification, clustering, regression, optimization algorithms, and SVM. Most of these tools provide implementations of these algorithms.
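The Filter, Map, Shuffle, and Reduce functions listed for MapReduce can be illustrated without a cluster. The following single-process Python sketch mimics the phases on a handful of records; it does not use Hadoop or Spark, and the log format, function names, and data are assumptions made for the example.

```python
from collections import defaultdict
from functools import reduce

# Hypothetical log lines of the form "<device_id> <event>".
lines = ["d1 temp", "d2 temp", "d1 humidity", "d1 temp"]


def map_record(line):
    """Map: emit one (key, 1) pair per input record."""
    device, _event = line.split()
    return (device, 1)


def shuffle(pairs):
    """Shuffle: group the emitted values by key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return dict(groups)


def reduce_values(values):
    """Reduce: fold all values of one key into a single result."""
    return reduce(lambda a, b: a + b, values)


# Filter: keep only temperature events before mapping.
temp_lines = filter(lambda line: line.endswith("temp"), lines)
pairs = map(map_record, temp_lines)
grouped = shuffle(pairs)
counts = {device: reduce_values(values) for device, values in grouped.items()}
print(counts)  # {'d1': 2, 'd2': 1}
```

In a distributed framework the map and reduce functions run in parallel on different nodes and the shuffle moves data across the network; here all phases run in one process purely to show the data flow.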

3.1.6 Post-processing

The post-processing step is another vital task in knowledge discovery from big data in the IoT. This paper divides the post-processing step into evaluation and selection (data governance), virtualization/dashboard, intelligent decision, and service and application. The evaluation and selection stage evaluates the results obtained using test methods on different types of datasets. There are various criteria for assessing the results. In this section, the articles are categorized by the methods they used for testing. These methods are divided into four categories: test methods, classification, clustering, and regression. Each of them uses various evaluation criteria. Table 10 shows the articles’ classification based on these four categories. The virtualization/dashboard stage uses tools, graphs, tables [ 75 ], graphical user interfaces [ 59 ], and charts [ 92 ] to display the results. The tools used are Kibana, Plotly, Tableau, Microsoft Power BI, Grafana, vSphere, NodeJS, and Matplotlib [ 59 , 105 , 106 , 109 , 110 , 113 , 140 ]. Intelligent decisions can be made using stochastic binary decisions [ 156 ], ML, pattern recognition, soft computing, and decision models [ 51 , 53 , 74 ].
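As an illustration of the evaluation and selection stage, the sketch below computes a few widely used criteria for classification (accuracy, precision, recall, F1) and for regression (RMSE). These are common choices and are assumed here for the example; the exact criteria used by each article are those listed in Table 10. The labels and predictions are made-up values.

```python
from math import sqrt


def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 for a binary task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    correct = sum(t == p for t, p in pairs)

    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if precision + recall
        else 0.0
    )
    return {
        "accuracy": correct / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


def rmse(y_true, y_pred):
    """Root mean squared error for a regression task."""
    squared = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return sqrt(sum(squared) / len(squared))


# Hypothetical ground truth and model output.
labels = [1, 0, 1, 1, 0, 0]
predictions = [1, 0, 0, 1, 1, 0]
metrics = classification_metrics(labels, predictions)
error = rmse([2.0, 4.0], [1.0, 6.0])
print(metrics, error)
```

With these inputs there are two true positives, one false positive, and one false negative, so precision and recall coincide; on real datasets the two usually differ, which is why both are reported.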

Tables 11 and 12 show the relevant datasets that the articles used for investigating/numerically assessing techniques for BDM in the IoT. These datasets are divided into two categories: 1) datasets described by dataset name, repository, dataset characteristics, attribute characteristics, number of instances/size, and number of attributes; and 2) datasets described by dataset name, website address, and size. As you can see, the UCI machine learning repository has been repeatedly used in articles as a repository to assess techniques for BDM in the IoT.

3.2 Big data management architectures/frameworks in the IoT

This subsection investigates and analyzes the articles (71 articles) that presented frameworks and architectures for BDM techniques in the IoT. These articles are divided into two categories: BDM architectures/frameworks in IoT-based applications (63 articles) and BDM architectures/frameworks in IoT paradigms (8 articles).

3.2.1 Big data management architectures/frameworks in the IoT applications

The architectural models used in the selected articles are layered, component-based, and cloud/fog-based architecture. A layered architecture is organized hierarchically, and each layer performs a service. The layered architecture ensures the system is more adaptable to emerging technologies at each layer and improves the acquisition and integration of data processes [ 167 ]. Component-based architecture is a framework that decomposes the system into reusable and logical components. The advantages of component-based architecture are increased quality, reliability, and component reusability, and reduced development time. In cloud-based or fog-based architectures, operations and components related to processing or storage are placed in the cloud or fog. Most of the proposed architectures are layered, and the most common types of BDM architectures in the IoT are 3-layer and 4-layer (22 and 20 articles, respectively). Also, most of the proposed architectures are in IoT-based healthcare (33.33%), followed by IoT-based smart cities (22.22%). The selected articles in this study used nine different OSs for BDM in the IoT. Ubuntu is the most frequently used OS, with 18 articles. Articles used programming languages to analyze and process big data in the IoT. Java, Python, and MATLAB are the major programming languages. In the following, these architectures and frameworks will be examined. For a better presentation, we have divided these architectures and frameworks into seven categories in terms of IoT applications: healthcare, smart cities, smart home/building, intelligent transport, traffic control and energy, urban planning, and other IoT applications (smart IoT systems, smart flood, smart farms, disaster management, laundry, digital manufacturing, and smart factory). Then we review the attributes of the architectures and frameworks, including layers, the functions of the layers, the operating system, the programming language, and the advantages and disadvantages of each.

3.2.1.1 BDM architectural/framework for IoT-based healthcare

Predicting health and disease and preventing deaths are essential in our modern world [ 168 , 169 ]. Healthcare IoT (e.g., electronic and mobile health) uses wireless body sensor networks for monitoring the patients’ environmental, physiological, and behavioral parameters [ 170 ]. Wearables and other IoT devices within the healthcare industry generate a large amount of data. The health data must be collected, stored, processed, and analyzed for future intelligent decision-making. BDA plays a vital role in minimizing computation time, predicting the future status of individuals, providing reliable health services, prevention, healthy living, population health, early detection, and optimal management [ 133 , 158 , 171 ]. BDM mechanisms have different objectives and requirements for different types of medical data [ 172 ]. Various studies have presented many mechanisms for BDM in IoT-based healthcare, each with advantages and disadvantages. Therefore, this subsection examines the articles (21 articles; 33.33%) that discussed the architectures or frameworks of BDM in IoT-based healthcare.

Rathore et al. [ 58 ] proposed Hadoop-based intelligent healthcare using a BDA approach. This system collected the big data and directed them to a 3-unit smart building for storing and processing. The units of this system are big data collection, Hadoop processing, and analysis and decision. This system used the 5-layer architecture for parallel, real-time, and offline processing. The layers of this architecture are the data collection, communication, processing, management, and service. The data collection layer includes data sensing, acquisition, buffering, and filtration. The big data are divided into small pieces in the processing layer, processed in parallel using HDFS and MapReduce, and stored. The management layer uses medical expert systems for processing the results and recommending corresponding actions.

Chui et al. [ 126 ] proposed a 6-layer architecture for patient behavior monitoring based on big data and IoT. Message queue, Apache Hadoop, behavior analytics, Mongo database, distributed stream processing, and exposer are the layers of this architecture. This architecture uses Hadoop for processing (descriptive, diagnostic, predictive, and prescriptive analytics), MongoDB for storing, Spark/Flink/Storm for stream processing, and Apache Kafka for breaking up the data stream into several partitions. Also, the authors have discussed the challenges of trust, security, privacy, and interoperability in the healthcare research field.
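The idea of breaking a data stream into several partitions can be sketched as key-based hashing: every message with the same key lands in the same partition, so per-patient ordering is preserved while partitions are consumed in parallel. The sketch below is plain Python and does not use the Kafka client; the CRC32 hash, the function names, and the sample messages are assumptions for illustration (Kafka's default partitioner uses a different hash function).

```python
import zlib
from collections import defaultdict


def partition_for(key: str, num_partitions: int) -> int:
    """Map a message key to a partition index with a stable hash (CRC32 here)."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def split_stream(messages, num_partitions):
    """Distribute (key, value) messages across partitions, keeping arrival order."""
    partitions = defaultdict(list)
    for key, value in messages:
        partitions[partition_for(key, num_partitions)].append((key, value))
    return partitions


# Hypothetical patient-behavior events keyed by patient identifier.
events = [
    ("patient-1", "sitting"),
    ("patient-2", "walking"),
    ("patient-1", "standing"),
    ("patient-3", "sleeping"),
    ("patient-2", "running"),
]
for index, batch in sorted(split_stream(events, num_partitions=3).items()):
    print(index, batch)
```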

Ullah et al. [ 140 ] proposed a lightweight Semantic Interoperability Model for Big-Data in IoT (SIMB-IoT). The SIMB-IoT model has two main components: user interface and semantic interoperability. The semantic interoperability component is divided into three subcomponents: semantic interoperability, cloud services, and big data analytics. IoT data is collected and directed into an intelligent health cloud for online storage and processing. After processing, it sends suitable medicines to the patient’s IoT devices. This article used the SPARQL query to find hidden patterns.

Elhoseny et al. [ 173 ] presented a Parallel Particle Swarm Optimization (PPSO) algorithm for IoT big data analysis in cloud computing healthcare applications. The aims of this article are to optimize virtual machine selection and storage by using GA, PSO, and PPSO algorithms; to support real-time processing; and to reduce the execution time. This architecture has four components: stakeholders’ devices; tasks; cloud broker; and network administrator. The cloud broker sends and receives requests to and from the cloud. The network administrator finds the optimal selection of virtual machines in the cloud for task scheduling.

Manogaran et al. [ 141 ] proposed a secured cloud-fog-based architecture for storing and processing real-time data for healthcare applications. This architecture has two sub-architectures: the meta fog-redirection architecture and the grouping and choosing architecture. The meta fog-redirection architecture has three phases: data collection, data transfer, and big data storage. The data collection phase collects data from sensors in fog computing. The data transfer phase uses the ‘s3cmd utility’ method for transferring data to Amazon S3. The big data storage phase uses Apache Pig and Apache HBase for storage. The grouping and choosing architecture protects data and provides security services in fog and cloud environments. Also, this architecture uses MapReduce for prediction.

García-Magariño et al. [ 156 ] proposed an agent-based simulation framework for IoT BDA in smart beds. This framework has two layers: the primary mechanism for simulating sleepers' postures and the information analyzer. The first layer simulates the sleepers' postures. The second layer analyzes the data collected from the first layer. The agent types in this framework are sleeper agent, weight sensor agent, bed agent, observer agent, analyzer agent, stochastic sleeper agent, bed sleeper agent, restless sleeper agent, and healthy sleeper agent. This framework helps researchers test different sleeper posture recognition algorithms, study other sleeper behaviors, and perform online or offline detection mechanisms.

Yacchirema et al. [ 59 ] proposed a 3-layer architecture for sleep monitoring based on IoT and big data at the network's edge. The layers of this architecture are the IoT layer, the fog layer, and the cloud layer. The IoT layer collected and aggregated the big data and directed them to the fog layer. The fog layer is responsible for connectivity and interoperability between heterogeneous devices, preprocessing the collected data, and sending notifications to react in real-time. The big data is stored, processed, and analyzed in the cloud layer for intelligent decision-making. This layer has three modules: data management, big data analyzer, and web application. This architecture used HDFS for data storage and Spark for offline and real-time processing.

BigReduce [ 137 ] is a cloud-based IoT framework for big data reduction for health monitoring in smart cities that focuses on reducing energy costs. This framework has two schemes: real-time big data reduction and intelligent big data decision-making. The big data reduction is made in two phases: at the time of acquisition and before transmission using an event-insensitive frequency content process.

Ma et al. [ 33 ] proposed a 3-layer architecture for the IoT big health system based on cloud-to-end fusion. The layers of this architecture are the big health perception layer, transport layer, and big health cloud service layer. In the big health perception layer, data are collected and preprocessed. The transport layer sends data to sensor nodes and receives data from the perception layer using network technologies. The big health cloud service layer has two sub-layers: the cloud service support and the cloud service application. The cloud service support sub-layer is responsible for compressing, storing, processing, and analyzing the real-time data. The cloud service application sub-layer is the interface between users and health networking. This sub-layer controls the sensor nodes and visualizes the big data.

Rathore et al. [ 61 ] proposed the 5-layer architecture for big data IoT analytics-based real-time medical emergency response systems. The data collection layer is responsible for data sensing, acquisition, buffering, filtration, and processing. This layer collected and aggregated data using a coordinator or relay node and transmitted them to a polarization mode dispersion. The communication layer provides device-to-device communication to various smart devices. The processing layer divides big data into small chunks. Each chunk is processed separately, aggregated, and stored. This article used MapReduce, HDFS, and Spark for data processing and analysis. The management layer is responsible for managing all types of outcomes using a medical expert system. The service layer is the interface between end-users and health networking. This architecture minimized the processing time and increased the throughput.

El‐Hasnony et al. [ 84 ] proposed a hybrid real-time remote patient monitoring framework based on mist, fog, and cloud computing. This article provided a 5-layer architecture for near real-time data analysis. The layers are the perception layer, the mist layer, the fog layer, the cloud layer, and the service provider layer. The mist layer is responsible for data filtering, data fusion, anomaly detection, and data transmission to the fog layer. The fog layer performs local monitoring and analysis, data aggregation, local storage, data pre-analysis, and data transmission to the cloud layer. The cloud layer implements several data analytics techniques for intelligent decision-making and storage. This article presented a case study comparing traditional data mining techniques, including REPtree, MLP, Naive Bayes (NB), and sequential minimal optimization algorithms. The results showed that the REPtree algorithm achieved the best accuracy, and NB required the least time.

Harb et al. [ 106 ] proposed a 4-layer architecture for real-time BDA for patient monitoring and decision-making in healthcare applications. The layers of this platform are real-time patient monitoring; real-time decision and data storage; patient classification and disease diagnosis; and data retrieval and visualization. The first layer is responsible for data ingestion using the Kafka and Sqoop tools. The second layer processes and stores data using Spark and Hadoop HDFS. This layer preprocesses data and finds the missing records using MissRec (a script for Spark). The third layer is responsible for classifying data using stability-based K-means, an adapted version of K-means clustering, and for disease diagnosis using a modified version of the association rule mining algorithm. The last layer retrieves and visualizes data to understand the patient’s situation using Hive, SparkSQL, and Matplotlib.
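For readers unfamiliar with the clustering step, the sketch below shows standard K-means on one-dimensional values in plain Python. It is not the stability-based variant used by Harb et al., whose details are not given here; the function name `kmeans_1d`, the deterministic initialization, and the sample body-temperature readings are assumptions for illustration.

```python
def kmeans_1d(values, k, iterations=100):
    """Standard K-means on scalar values; returns (centroids, clusters)."""
    if k < 1 or k > len(values):
        raise ValueError("k must be between 1 and the number of values")

    # Deterministic initialization: spread the centroids over the sorted data.
    ordered = sorted(values)
    centroids = [ordered[(i * (len(ordered) - 1)) // max(k - 1, 1)] for i in range(k)]

    clusters = [[] for _ in range(k)]
    for _ in range(iterations):
        # Assignment step: attach each value to its nearest centroid.
        clusters = [[] for _ in range(k)]
        for v in values:
            nearest = min(range(k), key=lambda c: abs(v - centroids[c]))
            clusters[nearest].append(v)

        # Update step: move each centroid to the mean of its cluster.
        updated = [
            sum(cluster) / len(cluster) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
        if updated == centroids:  # converged
            break
        centroids = updated
    return centroids, clusters


# Hypothetical body-temperature readings (degrees Celsius).
temperatures = [36.5, 36.7, 36.6, 39.1, 39.4, 39.0]
centers, groups = kmeans_1d(temperatures, k=2)
print(centers, groups)
```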

Zhou et al. [ 62 ] proposed a data mining technology based on the IoT. The layers of the proposed functional architecture are the data acquisition layer, data transmission layer, data storage layer, and cloud service center layer. This article used the WIT120 system for data collection, the adaptive k-means clustering method based on the MapReduce framework for data preprocessing, HDFS for storing, and the GM (1,1) grey model for users’ health status prediction.
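The GM (1,1) grey model mentioned above can be sketched as follows. This is the textbook formulation (accumulate the series, fit the development coefficient a and grey input b by least squares, then difference the fitted accumulated series), written in plain Python; it is not taken from Zhou et al., and the function names and the sample series are assumptions for illustration.

```python
import math


def gm11_fit(x0):
    """Estimate GM(1,1) parameters (a, b) from a positive series x0."""
    if len(x0) < 4:
        raise ValueError("GM(1,1) is usually fitted on at least four points")

    # Accumulated generating operation (1-AGO).
    x1 = []
    total = 0.0
    for value in x0:
        total += value
        x1.append(total)

    # Background values z(k) = mean of consecutive accumulated values.
    z = [0.5 * (x1[k] + x1[k - 1]) for k in range(1, len(x1))]
    y = x0[1:]

    # Least squares for y = -a * z + b (ordinary simple linear regression).
    m = len(z)
    sz, sy = sum(z), sum(y)
    szz = sum(v * v for v in z)
    szy = sum(zi * yi for zi, yi in zip(z, y))
    slope = (m * szy - sz * sy) / (m * szz - sz * sz)
    a = -slope
    b = (sy - slope * sz) / m
    return a, b


def gm11_forecast(x0, steps):
    """Forecast the next `steps` values of x0 with the fitted GM(1,1) model."""
    a, b = gm11_fit(x0)
    if abs(a) < 1e-12:  # degenerate case: no growth or decay
        return [b] * steps

    def x1_hat(k):
        # Fitted accumulated series at 0-based index k.
        return (x0[0] - b / a) * math.exp(-a * k) + b / a

    n = len(x0)
    return [x1_hat(k) - x1_hat(k - 1) for k in range(n, n + steps)]


# Hypothetical health indicator growing by roughly 10% per period.
series = [100.0, 110.0, 121.0, 133.1, 146.41]
print(gm11_fit(series), gm11_forecast(series, steps=2))
```

GM (1,1) suits short series with roughly exponential trends, which is why it appears in status prediction on small samples.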

Hong-Tan et al. [ 90 ] proposed a real-time Ambient Intelligence assisted Student Health Monitoring System (AmIHMS). The data required by time ambient intelligence environments are collected from the WSN and sent to the cloud for handling. Their work developed a framework for real-time effective alerting of student health information. The AmIHMS architecture has three layers. The IoT layer collects health data from medical devices and sensors and saves it on one mobile computer or smartphone. The cloud layer receives the data through internet platforms such as 4G, 5G, LTE, etc., and executes the mining algorithms to extract relevant data for processing. The student health monitoring layer performs four stages to provide information and warnings about student health status. These stages include data retrieval, preprocessing, normalization, and classification/health status recognition.

Li [ 30 ] designed the fog-based Smart and Real-time Healthcare Information Processing (SRHIP) system. The SRHIP architecture has three layers. The IoT body sensor network layer performs data collection (health, environment, and locality), aggregation, compression, and encryption. The fog processing and computation layer uses Spark and the Hadoop ecosystem for information extraction, data normalization, rule engine, data filtration, and data processing. This layer performs the classification using the NB classifier. The cloud computation layer performs in-depth data analysis, storage, and decision-making. SRHIP minimizes the delay, transmission cost, and data size. This article uses hierarchical symmetric key data encryption to increase confidentiality.

The Improved Bayesian Convolution Network (IBCN) was proposed for human activity recognition [ 87 ]. The system architecture includes Wi-Fi and clouds onboard applications. The combination of a variable autoencoder with a standard deep net classifier is used to improve the performance of IBCN. This article used the convolution layers to extract the features and Enhanced Deep Learning (EDL) for security issues. IBCN provided the ability to download data via traditional radio frequency or low-power back-distribution communication. According to the experimental analysis, the proposed method allows the network to be continuously improved as new training sets are added and distinguishes between data-dependency and model-dependency. This architecture has high accuracy, versatility, flexibility, and reliability.

Sengupta and Bhunia [ 88 ] implemented a 3-layer IoT-enabled e-health framework for secure real-time data management using Cloudlet. The IoT layer uses IoT Hub for communicating with IoT devices. The Cloudlet layer is an intermediate layer between the IoT and cloud layers. This layer performs in-depth healthcare data analytics and processing. The cloud layer performs various analytics applications and processes queries. This framework uses SQLite for data storage in IoT Hub and Cassandra for future storing of sensed data. The results demonstrated that this framework has high efficiency and low data transmission time, communication energy, data-packet loss, and query response time.

IBDAM [ 133 ] is an Intelligent BDA Model for efficient cardiac disease prediction in the IoT using multi-level fuzzy rules and valuable feature selection. This article used the open-source UCI database. First, it performs preprocessing on the UCI database, and the next step uses multi-level fuzzy rule generation for feature selection. IBDAM uses an optimized Recurrent Neural Network (RNN) to train the features. Finally, the features are classified into labeled classes according to the risk of evaluation by a medical practitioner. The results of this article demonstrate that this architecture has high performance and is quick and accurate.

Ahmed et al. [ 158 ] proposed an IoT-based health monitoring framework for pandemic disease analysis, prediction, and detection, such as COVID-19, using BDA. In this framework, the COVID-19 data set is collected from different data sources. Four data analysis techniques are performed on these data, including descriptive, diagnostic, predictive, and prescriptive. The experts opine on the results, and then users receive the results of these analyses through the internet and cloud servers. This article uses a neural network-based model for diagnosing and predicting the pandemic. The results of this article indicated that the accuracy, precision, F-score, and recall of the proposed architecture are better than AdaBoost, k-Nearest Neighbors (KNN), logistic regression, NB, and linear Support Vector Machine (SVM).

Ahanger et al. [ 71 ] proposed an IoT-based healthcare architecture for real-time COVID-19 data monitoring and prediction based on fog and cloud computing. This architecture has four layers. The data collection layer collects data from sensors and uses protocols to guarantee information security. The information classification layer classifies the information into four classes: health data, meteorological data, location data, and environmental data. The COVID-19-mining and extraction layer is responsible for splitting information into two groups using a fuzzy C-means procedure in the fog layer. The COVID-19 prediction and decision modeling layer uses a temporal RNN for estimating the results of the COVID-19 measure and a self-organization map-based technique to increase the perceived viability of the model. Compared with existing methods, this architecture has high classification efficiency, viability, precision, and reliability.

Oğur et al. [ 109 ] proposed a real-time data analytics architecture for smart healthcare in IoT. This architecture has two domains. The software-defined networking-based WSN and RFID technology are used in the vertical domain, and data analytics tools, including Kafka, Spark, MongoDB, and NodeJS, are used in the horizontal domain. The data collected from the WSN using RFID are transmitted to the Kafka platform using TCP sockets. Kafka sends the data to three consumers: the Apache Spark analysis engine, which analyzes data in real-time; the NodeJS web application, which visualizes patient data; and the MongoDB database, which stores data. This article uses logistic regression and Apache Spark MLlib for data classification. The results demonstrated that this architecture has high performance and accuracy and is appropriate for a time-saving experimental environment.

Table 13 shows the result of the analysis of the articles. This table shows each article's architecture or framework name, OS name, programming language, advantages, and disadvantages. As you can see, layered architecture is the most common, with 14 articles.

3.2.1.2 BDM architectural/framework for IoT-based smart cities

According to United Nations forecasts, about 67% of the world population will live in urban areas by 2050, resulting in environmental pollution, ecosystem destruction, energy shortage, emission reduction, and resource limitation [ 36 , 174 , 175 ]. Smart cities are large-scale distributed systems that could be a solution to overcoming these problems and improving intelligent services for residents [ 112 , 176 ]. Smart cities have many deployed sensing devices that generate large amounts of data. These data must be stored, processed, and analyzed to extract valuable information [ 177 ]. BDM plays a significant role in this context and facilitates better resource management and decision-making [ 176 ]. Many studies have focused on BDM mechanisms in IoT-based smart cities with different objectives, including improving monitoring and communication, real-time control, and improving quality attributes (such as reliability, throughput, energy conservation, accuracy, scalability, delay, bandwidth usage, etc.). Therefore, this subsection examines the articles (14 articles; 22.22%) that have discussed the architectures or frameworks of BDM in IoT-based smart cities.

Jindal et al. [ 85 ] propose a tensor-based big data processing technique for energy consumption in smart cities. This article aims to reduce the dimensionality of data and decrease the overall complexity. The proposed framework has two phases. The first phase is the 3-layer data gathering and processing architecture. The layers of this architecture are data acquisition, transmission, and processing. In the second phase, the collected data was represented in tensor form, and SVM was used to identify the loads to manage the demand response services in smart cities. The technique reduces data storage by 38%.

ESTemd [ 105 ] is a distributed stream processing middleware framework for real-time analysis using big data techniques on Apache Kafka. The layers of this framework are the data ingestion layer, the data broker layer (source), the stream data processing engine and services, the data broker layer (sink), and the event hub. The data broker layer is responsible for data processing and transformation, with the support of multiple transport protocols. The third layer does stream processing and consists of the predictive data analytics model and Kafka CEP operators. This framework helps with performance improvement through data integration and distributed applications' interoperability.

CPSO [ 115 ] is a self-adaptive preprocessing approach for big data stream classification. This approach handles four mechanisms: sub-window processing; feature extraction; feature selection; and optimization of the window size and feature picking. CPSO uses clustering-based PSO for data stream mining; the sliding window technique for data segmentation; statistical feature extraction for variable partitioning; and correlation feature selection and information gain for feature selection. The proposed approach improves classification accuracy.
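The sliding window segmentation and statistical feature extraction steps can be sketched in a few lines of Python. This is a generic illustration rather than the CPSO implementation: the window size and step are fixed here, whereas CPSO optimizes the window size, and the function names and sample stream are assumptions.

```python
from statistics import mean, pstdev


def sliding_windows(stream, size, step):
    """Yield consecutive windows of `size` items, advancing by `step` items."""
    for start in range(0, len(stream) - size + 1, step):
        yield stream[start:start + size]


def window_features(window):
    """Compute simple statistical features for one window."""
    return {
        "mean": mean(window),
        "std": pstdev(window),  # population standard deviation
        "min": min(window),
        "max": max(window),
    }


# Hypothetical sensor stream.
stream = [1, 2, 3, 4, 5, 6]
for window in sliding_windows(stream, size=4, step=2):
    print(window, window_features(window))
```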

Rani and Chauhdary [ 72 ] proposed a novel approach for smart city applications based on BDA and a new protocol for mobile IoT. They presented a 5-layer architecture whose layers are data source, technology, data management, application, and utility programs. The data source layer collects, compresses, and filters data. The technology layer is responsible for communication between sensor nodes, edge nodes, and the base station. The data management layer used MapReduce, SQL, and HBase for analyzing, storing, and processing. The utility program layer used WSN and IoT protocols to work with the other layers. Also, this article presented a new protocol that reduces energy consumption, increases throughput, and reduces the delay and transmission time.

SCDAP [ 107 ] is the 3-layer BDA architecture for smart cities. The first layer is the platform that includes hardware clusters, the operating system, communication protocols, and other required computing nodes. The second layer is security. The last layer is the data processing layer that supports online and batch data processing. This layer has ten components: data acquisition; data preprocessing; online analytics; real-time analytics; batch data repository; batch data analytics; model management; model aggregation; smart application; and user interface. This architecture used Hadoop and Spark for data analysis. Also, this article presented a taxonomy of literature reviews based on six characteristics: focus, goal, organization, perspective, audience, and coverage.

Chilipirea et al. [ 80 ] proposed a data flow-based architecture for big data processing in smart cities. The architecture has seven steps: data sources; data normalization; data brokering; data storage; data analysis; data visualization; and decision support systems. This article used Extract, Transform, and Load (ETL) and Electronic Batchload Service (EBS) for normalizing the real-time and batch data. The data brokering step created the links between the collected data and the relevant context. This architecture used Hadoop for batch data processing and Storm for real-time data processing.

Gohar et al. [ 92 ] proposed a four-layer architecture for analyzing and storing data on the Internet of Small Things (IoST). The layers of this architecture are the small things layer, the infrastructure layer, the platform layer, and the application layer. The first layer collected data by using the LoRa gateway from LoRa devices. The infrastructure layer provides connectivity to devices by using the Internet. The platform layer is responsible for data preprocessing. For processing, this layer employs Max–Min normalization, the Kalman filter, the Round-Robin load balancing technique, the Least Slack Time algorithm (LST), the divide-and-conquer approach for aggregation, and NoSQL databases for storage. In the last layer, data is visualized for decision-making. This article implemented the architecture by using Hadoop, Spark, and GraphX. In this article, throughput has increased with the rise in data size.
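Two of the preprocessing steps named above, Max-Min normalization and Kalman filtering, can be illustrated with a short plain-Python sketch. It uses a scalar (one-dimensional) Kalman filter with a constant-state model; the noise variances, function names, and sample readings are assumptions for illustration and are not taken from Gohar et al.

```python
def min_max_normalize(values):
    """Rescale values linearly into [0, 1]; constant input maps to all zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def kalman_filter_1d(measurements, process_var=1e-3, measurement_var=0.5):
    """Smooth noisy scalar measurements, assuming a slowly varying true value."""
    if not measurements:
        return []
    estimate = measurements[0]  # initial state estimate
    error = 1.0                 # initial estimate variance (assumed)
    smoothed = [estimate]
    for z in measurements[1:]:
        # Predict: the state is assumed constant, so only uncertainty grows.
        error += process_var
        # Update: blend prediction and measurement using the Kalman gain.
        gain = error / (error + measurement_var)
        estimate += gain * (z - estimate)
        error *= 1.0 - gain
        smoothed.append(estimate)
    return smoothed


# Hypothetical noisy readings from a LoRa device.
raw = [20.1, 19.7, 20.4, 25.0, 20.2, 19.9]
print(min_max_normalize(raw))
print(kalman_filter_1d(raw))
```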

Farmanbar and Rong [ 113 ] proposed an interactive cloud-based dashboard for online data visualization and a data analytics toolkit for smart city applications. The proposed architecture has three layers: the data layer, application and analysis layer, and presentation layer. The data layer is the core of the architecture and contains data acquisition units, data ingestion, data storage, and data access. This architecture used Logstash for data ingesting, Elasticsearch for storing, and Kibana for accessing and real-time monitoring. This platform has been tested on five datasets, including transportation data, electricity consumption, cargo e-bikes, parking, vacancies, and energy. The results showed this architecture is robust, scalable, and improves communication between users and urban service providers.

He et al. [ 116 ] proposed a big data architecture to achieve high Quality of Experience (QoE) performance in smart cities. This architecture has three planes: the data storage plane, the data processing plane, and the data application plane. This article used MongoDB and HDFS for data storage and Spark and a deep-learning-based greedy algorithm for data processing. The simulation results indicated that the proposed architecture's accuracy, precision, and recall are better than those of SVM and KNN.

Khan et al. [ 128 ] proposed an SDN-based 3-tier architecture that includes data collection, data processing and management, and an application layer for real-time big data processing in smart cities with two intermediate levels that work on SDN principles. This architecture uses Spark and GraphX with Hadoop for offline and real-time data analysis and processing. Also, this article proposed an adaptive job scheduling mechanism for load balancing and achieving high performance. The results showed that when clusters and processing time increase, the proposed system's performance also increases.

IoTDeM [ 73 ] is an IoT big data-oriented multiple edge-cloud architecture for MapReduce performance prediction with varying cluster scales. This architecture consists of three parts: multiple edge cloud redirectors, an edge cloud-based big data platform, and a centralized cloud-based big data platform. This architecture used historical job execution records and the Locally Weighted Linear Regression (LWLR) technique for predicting jobs' execution times and Ceph for storing them. Because of Ceph, there was no need to transfer data to a newly added slave node. This article validated the accuracy of the proposed model by using the TestDFSIO and Sort benchmark applications in a general implementation scenario based on Hadoop2 and Ceph and achieved an average relative error of less than 10%.
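Locally Weighted Linear Regression fits a separate weighted line around each query point, giving nearby historical records more influence than distant ones. The sketch below shows the technique for a single input feature in plain Python with a Gaussian kernel; the choice of feature (input size in GB), the bandwidth `tau`, the function name, and the sample records are assumptions for illustration and do not reproduce the IoTDeM model.

```python
import math


def lwlr_predict(x_query, xs, ys, tau=1.0):
    """Predict y at x_query with locally weighted linear regression (1 feature)."""
    # Gaussian kernel: records close to the query get weights near 1.
    weights = [math.exp(-((x - x_query) ** 2) / (2.0 * tau ** 2)) for x in xs]

    sw = sum(weights)
    if sw == 0.0:
        raise ValueError("all weights underflowed; increase tau")
    swx = sum(w * x for w, x in zip(weights, xs))
    swy = sum(w * y for w, y in zip(weights, ys))
    swxx = sum(w * x * x for w, x in zip(weights, xs))
    swxy = sum(w * x * y for w, x, y in zip(weights, xs, ys))

    # Closed-form weighted least squares for y = theta0 + theta1 * x.
    denom = sw * swxx - swx * swx
    if abs(denom) < 1e-12:
        return swy / sw  # fall back to the weighted mean
    theta1 = (sw * swxy - swx * swy) / denom
    theta0 = (swy - theta1 * swx) / sw
    return theta0 + theta1 * x_query


# Hypothetical history: input size (GB) versus job execution time (s).
sizes = [1.0, 2.0, 3.0, 4.0]
times = [3.0, 5.0, 7.0, 9.0]
print(lwlr_predict(2.5, sizes, times))
```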

Ahab [ 112 ] is a generic, scalable, fault-tolerant, and cloud-based framework for online and offline big data processing. This framework has four components: the user API, repositories, messaging infrastructure, and stream processing. The API directs the published data streams from different sources. Ahab uses the component, stream, policy, and action repositories for storing data streams, management policies, and actions. Ahab uses distributed messaging for handling data streams, minimizing unnecessary network traffic. Also, it allows the components to choose an appropriate communication point freely. The Ahab architecture has two layers: the streaming and service layers. The streaming layer is implemented as a lambda architecture. This layer has three sub-layers for data stream processing: the batch layer, the speed layer, and the serving layer. The HDFS and Apache Spark are used for data storing and stream processing. The service layer is responsible for analyzing, managing, and adapting components.
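The batch, speed, and serving sub-layers of a lambda architecture can be illustrated with a small in-memory sketch. It is not Ahab's implementation, which relies on HDFS and Apache Spark; the class name `LambdaPipeline`, its methods, and the per-sensor mean query are assumptions chosen to show how the serving layer merges a precomputed batch view with a real-time view.

```python
class LambdaPipeline:
    """Toy lambda architecture that serves the mean reading per sensor."""

    def __init__(self):
        self.master_log = []   # append-only dataset read by the batch layer
        self.batch_view = {}   # sensor_id -> (sum, count), rebuilt in batch
        self.speed_view = {}   # sensor_id -> (sum, count) since the last batch

    def ingest(self, sensor_id, value):
        """Speed layer: record the event and update the real-time view."""
        self.master_log.append((sensor_id, value))
        total, count = self.speed_view.get(sensor_id, (0.0, 0))
        self.speed_view[sensor_id] = (total + value, count + 1)

    def run_batch(self):
        """Batch layer: recompute the view from the full log, then reset speed."""
        view = {}
        for sensor_id, value in self.master_log:
            total, count = view.get(sensor_id, (0.0, 0))
            view[sensor_id] = (total + value, count + 1)
        self.batch_view = view
        self.speed_view = {}

    def query_mean(self, sensor_id):
        """Serving layer: merge the batch and speed views to answer a query."""
        b_total, b_count = self.batch_view.get(sensor_id, (0.0, 0))
        s_total, s_count = self.speed_view.get(sensor_id, (0.0, 0))
        count = b_count + s_count
        return (b_total + s_total) / count if count else None


pipeline = LambdaPipeline()
pipeline.ingest("s1", 10.0)
pipeline.ingest("s1", 20.0)
pipeline.run_batch()          # batch view now covers the first two events
pipeline.ingest("s1", 30.0)   # visible immediately through the speed view
print(pipeline.query_mean("s1"))
```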

Mobi-Het [ 81 ] is a mobility-aware optimal resource allocation architecture for remote big data task execution in mobile cloud computing. This article uses the SMOOTH random mobility model to model the free movement of mobile devices and estimate their speed and direction. Mobi-Het has three layers: mobile devices, cloudlets, and the master cloud. The mobile devices layer has a decision-maker module that decides whether tasks should be executed remotely or locally. The master cloud layer implements the resource allocation algorithm. This architecture achieves low execution time, high execution reliability, and efficiency in timeliness.

Hossain et al. [ 132 ] proposed a knowledge-driven framework that automatically selects suitable data mining and ML algorithms for a dynamic IoT smart city dataset. The system architecture has four units: data Knowledgeextraction, extractGoalKnowledge, extractAlgoKnowledge, and matchKnowledge. The framework's inputs are three key factors: datasets, goals, and data mining and ML algorithms. This article discussed both supervised and unsupervised data mining. The results show that this framework reduces computational time and complexity and increases performance and flexibility while dynamically choosing a high-accuracy solution.

Table 14 shows the result of the analysis of the articles. This table shows the architecture or framework name, OS name, programming language, advantages, and disadvantages of each article. As you can see, layered architecture is the most common, with 13 articles.

3.2.1.3 BDM architectural/framework for IoT-based smart home/building

BDM mechanisms and IoT (architectures/frameworks) have a crucial role in smart homes/buildings, including processing data collected by the home sensors; analyzing, classifying, monitoring, and managing energy consumption and saving; intelligently identifying user behavior patterns and home activities; and increasing safety and comfort at home [ 76 ]. This subsection presents a review of the articles (8 articles; 12.70%) that have discussed the architectures or frameworks of BDM in the IoT-based smart home/building.

Al-Ali et al. [ 68 ] proposed a smart home energy management architecture using IoT and BDA approaches. This architecture is divided into two sub-architectures: hardware architecture and software architecture. The hardware architecture includes sensors and actuators, high-end microcontrollers, and server blocks. The software architecture comprises the data acquisition module on the edge device, a middleware module, and a client application module. The first module monitors and collects data and transmits them to the middleware module. The second module uses several tools to provide different services, including facilitating communication between edge devices and middleware, data storage, data analysis, and sending results to the requester. The third module develops the front-end mobile user interface using a cross-platform integrated development environment. This article is evaluated using a prototype. The results showed the proposed architecture has high scalability, security, privacy, throughput, and speed.

Silva et al. [ 55 ] proposed a real-time BDA embedded architecture for the smart city with the RESTful web of things. The article integrated the web and smart control systems using a smart gateway system. The proposed architecture consists of four levels: data creation and collection; data processing and management; event and decision management; and application. The data processing and management level used HDFS for primary data storage, MapReduce for processing, HBase to speed up processing, and Hive for data querying and management. The event and decision management level classified events into two types, service events and resource events, based on the processed information. The application level provides remote access to the smart city services and has three sub-layers: departmental layer, services layer, and sub-services layer. The proposed architecture achieves high performance and throughput, low processing time, and reduced energy consumption.

Khan et al. [ 57 ] proposed a scheduling algorithm, an IoT BDA architecture, and a real-time platform for managing sensors' energy consumption. The architecture has four steps: appliance discovery, sensor configuration and deployment, event management and scheduling, and information gathering and processing. In the first step, appliances are identified and classified based on user availability and usage time. The second step uses a Poisson distribution for sensor distribution in an IoT environment. The third step presents an appliance sleep-scheduling mechanism for job scheduling. In the last step, the data collected from sensors are directed to Hadoop, Spark, and GraphX for processing and analysis, with HDFS used for data storage. The proposed approach minimized total execution time and energy consumption.
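
To illustrate what Poisson-based sensor deployment might look like, the sketch below draws the number of sensors per room from a Poisson distribution using Knuth's multiplication method. The rate, the room names, and both function names are hypothetical; the source does not say how the article parameterizes the distribution.

```python
import math
import random


def poisson_sample(lam: float, rng: random.Random) -> int:
    """Draw one Poisson(lam) count with Knuth's multiplication method."""
    limit = math.exp(-lam)
    count, product = 0, 1.0
    while True:
        product *= rng.random()
        if product <= limit:
            return count
        count += 1


def deploy_sensors(rooms, mean_per_room, seed=0):
    """Assign a Poisson-distributed sensor count to each room (assumed model)."""
    rng = random.Random(seed)
    return {room: poisson_sample(mean_per_room, rng) for room in rooms}


# Usage: an average of three sensors per room.
plan = deploy_sensors(["kitchen", "hall", "garage"], mean_per_room=3.0)
```

Knuth's method is adequate for small rates like this one; for large rates a library sampler would be the more sensible choice.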

HEMS-IoT [ 76 ] is a 7-layer architecture based on big data and ML for in-home energy management. The layers of this architecture are the presentation layer, IoT services layer, security layer, management layer, communication layer, data layer, and device layer. The management layer uses the J48 ML algorithm (Weka's implementation of C4.5) and the Weka API for energy consumption reduction and user behavior pattern extraction. This layer also classifies the data and houses based on energy consumption using the C4.5 algorithm. The IoT services layer provides different REST-based web services. The security layer guarantees data confidentiality and has two components: authorization and authentication. The article uses RuleML and Apache Mahout to generate energy-saving recommendations.
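
C4.5 chooses the attribute to split on by gain ratio, that is, information gain divided by the entropy of the split itself. The sketch below computes that criterion for one categorical attribute. It is not the Weka API, and the attribute values and class labels are made-up examples rather than data from the article.

```python
import math
from collections import Counter


def entropy(items):
    """Shannon entropy (in bits) of a list of discrete values."""
    n = len(items)
    return -sum(c / n * math.log2(c / n) for c in Counter(items).values())


def gain_ratio(values, labels):
    """Gain ratio of splitting `labels` by the categorical attribute `values`."""
    n = len(labels)
    groups = {}
    for v, y in zip(values, labels):
        groups.setdefault(v, []).append(y)
    # Weighted entropy of the labels that remains after the split.
    remainder = sum(len(g) / n * entropy(g) for g in groups.values())
    gain = entropy(labels) - remainder
    split_info = entropy(values)
    return gain / split_info if split_info > 0 else 0.0


# Usage with hypothetical household records.
usage_level = ["high", "high", "low", "low"]
house_class = ["wasteful", "wasteful", "efficient", "efficient"]
ratio = gain_ratio(usage_level, house_class)
```

An attribute that separates the classes perfectly, as in the usage example, gets the highest ratio; one that is unrelated to the class gets zero.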

Yassine et al. [ 56 ] proposed a platform for IoT smart homes based on fog and cloud computing. The components of the proposed platform are smart home components, IoT management and integration services, fog computing nodes, and cloud systems. The smart home component is divided into three tiers: 1) the cyber-physical tier, which interacts with the outside world through the second tier; 2) the connectivity tier, which handles communication with the smart home; and 3) the context-aware tier, which consists of user-defined rules and policies that create a privacy and security configuration. The IoT management and integration services component is in charge of providing interoperability, handling requests, authentication, and service registration. The fog computing nodes perform preprocessing, pattern mining, event detection, behavioral and predictive analytics, and visualization functions. The cloud system is responsible for data storage and historical data analytics.

Luo et al. [ 131 ] proposed a 4-layer ML-based predictive model for smart building energy demand. First, the sensitization layer collects data and transfers them to the storage layer. The storage layer performs data cleaning and storage. The model’s smart core is in the analytics support layer, where an Artificial Neural Network (ANN) and k-means clustering are used to identify features in weather profile patterns. The service layer is an interface between the proposed model and the smart building management system. The proposed model improved accuracy and decreased the mean absolute percentage error (MAPE).
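
For reference, the mean absolute percentage error averages the absolute prediction errors relative to the actual values. A minimal sketch follows; the demand figures in the usage lines are invented for illustration and are not results from the article.

```python
def mape(actual, predicted):
    """Mean absolute percentage error, in percent.

    Actual values must be non-zero, since each error is divided by them.
    """
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    total = sum(abs((a - p) / a) for a, p in zip(actual, predicted))
    return 100.0 * total / len(actual)


# Usage with hypothetical hourly demand values (kWh).
measured = [100.0, 200.0]
forecast = [110.0, 180.0]
error_percent = mape(measured, forecast)
```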

Bashir et al. [ 110 ] proposed an Integrated Big Data Management and Analytics (IBDMA) framework for smart buildings. The framework has two phases: the reference architecture and the metamodel. The reference architecture has eight layers: data monitoring, sourcing, ingestion, storage, analysis, visualization, decision-making, and action. People, processes, technology, information, and facility are the components of the metamodel phase. The core component of the metamodel is people (IoT policymakers, developers, and residents of intelligent buildings). The process component includes data monitoring, sourcing, ingestion, storage, decision-making, analytics, and action/control. The technology component consists of the tools and software packages used to implement the IBDMA. Some of these tools are Apache Flume for data ingestion; HDFS for data storage; Apache Spark for data analysis; Microsoft Power BI for static data visualization; and Elasticsearch and Kibana for near-real-time data visualization. The information component manages disasters and controls various facilities based on results obtained by using the technology stack. The last component is the facility, which improves the comfort, safety, and living conditions of the people in the building.

Table 15 summarizes the analysis of the articles. It lists each article's architecture or framework name, OS name, programming language, advantages, and disadvantages. Layered architecture is the most common, with five articles.

3.2.1.4 BDM architectural/framework for IoT-based intelligent transport

Safety, reliability, fault diagnosis, data transmission, and early warning in the intelligent transport system are critical for decision-making [ 178 ]. The intelligent transport system uses digital technologies, sensor networks, ML, and BDA mechanisms to address challenges such as accident prevention, road safety, pollution reduction, automated driving, traffic control, intelligent navigation, and parking systems [ 179 ]. This subsection reviews the articles (2 articles; 3.17%) that have discussed the architectures or frameworks of BDM in IoT-based intelligent transport.

SMART TSS [ 129 ] is a BDA modular architecture for intelligent transportation systems. The architecture has four units: a big data acquisition and preprocessing unit, a big data processing unit, a big data analytics unit, and a data visualization unit. The big data processing unit stores the offline data in the cloud system for future analysis. The online data are sent to the extraction and filtration unit for load balancing on NoSQL databases. The big data analytics unit uses the MapReduce mechanism for analysis. The article uses Hadoop, Spark, and GraphX for big data processing and analysis. The throughput of the proposed system increases with data size, but the system has low accuracy and security.

Babar and Arif [ 89 ] proposed a real-time IoT big data analytics architecture for the smart transportation system. The architecture has three phases: big data organization and management, big data processing and analysis, and big data service management. The first phase performs data preprocessing, including big data detection, logging, integration, reduction, transformation, and cleaning. This phase uses the divide-and-conquer technique for data aggregation, the Min–Max method for data transformation, and the Kalman filter technique for data cleaning. The second phase uses Hadoop for big data processing; HDFS, Hive, and HBase for data storage; and Spark for data stream analysis. Load balancing in this phase increased throughput, minimized processor use, and reduced response time. The third phase is responsible for intelligent decision-making and event management.
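
As an illustration of Kalman-filter-based cleaning, the sketch below applies a one-dimensional Kalman filter with a constant-state model to a noisy sensor series. The function name, the variance settings, and the initial error value are assumptions chosen for the example; the source does not report the filter configuration used in the article.

```python
def kalman_smooth(measurements, process_var=1e-3, meas_var=1.0):
    """Smooth a 1-D series with a scalar Kalman filter (constant-state model).

    process_var and meas_var are assumed noise variances, not values
    taken from the reviewed article.
    """
    if not measurements:
        return []
    estimate, error = float(measurements[0]), 1.0
    smoothed = [estimate]
    for z in measurements[1:]:
        error += process_var                 # predict: uncertainty grows
        gain = error / (error + meas_var)    # Kalman gain
        estimate += gain * (z - estimate)    # update with the new reading
        error *= 1.0 - gain                  # uncertainty shrinks after update
        smoothed.append(estimate)
    return smoothed


# Usage: hypothetical speed readings with one spike.
speeds = [10.0, 10.0, 50.0, 10.0]
cleaned = kalman_smooth(speeds)
```

The spike at the third reading is pulled toward the running estimate instead of being passed through unchanged, which is the damping effect one wants from a cleaning step.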

Table 16 summarizes the analysis of the articles. It lists the architecture or framework name, OS name, programming language, advantages, and disadvantages of each article. Layered architecture is the most common, with two articles.

3.2.1.5 BDM architectural/framework for IoT-based traffic control and energy

Two reviewed articles discussed the architectures or frameworks of BDM in IoT-based traffic control and energy, and both used ML for this purpose. ML4IoT [ 108 ] is a container-based ML framework for IoT data analytics and for coordinating ML workflows. The framework aims to define and automate the execution of ML workflows and uses several types of ML algorithms. ML4IoT has two layers: ML4IoT data management and ML4IoT core. The ML4IoT core layer trains and deploys ML models and consists of five components: a workflow designer, a workflow orchestrator, a workflow scheduler, container-based components, and a distributed data processing engine. ML4IoT data management is responsible for data ingestion and storage and has three sub-components: a messaging system, a distributed file system, and a NoSQL database. The results reveal that the framework has high elasticity, scalability, robustness, and performance.

Furthermore, Chhabra et al. [ 111 ] proposed a scalable and flexible cyber-forensics framework for IoT BDA with high precision and sensitivity. The framework consists of four modules: the data collector and information generator; feature analytics and extraction; designing ML models; and analyzing models on various efficiency metrics. The article used Google’s programming model, MapReduce, as the core for traffic translation, extraction, and analysis of dynamic traffic features. The authors also presented a comparative study of globally accepted ML models for peer-to-peer malware analysis in mocked real time.
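
The MapReduce pattern applied to traffic feature extraction can be sketched in plain Python as three phases: map, shuffle, and reduce. This is an in-memory imitation of the programming model, not the Hadoop API, and the packet fields (`src`, `bytes`) and the per-source features are hypothetical.

```python
from collections import defaultdict


def map_phase(packets):
    """Emit one (source address, byte count) pair per packet."""
    for packet in packets:
        yield packet["src"], packet["bytes"]


def shuffle_phase(pairs):
    """Group the emitted values by key, as the framework would do."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups):
    """Aggregate each group into per-source traffic features."""
    return {src: {"packets": len(sizes), "bytes": sum(sizes)}
            for src, sizes in groups.items()}


# Usage with made-up packet records.
packets = [
    {"src": "10.0.0.1", "bytes": 500},
    {"src": "10.0.0.2", "bytes": 40},
    {"src": "10.0.0.1", "bytes": 1500},
]
features = reduce_phase(shuffle_phase(map_phase(packets)))
```

In a real deployment the map and reduce functions would run in parallel across nodes, with the shuffle handled by the framework.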

Table 17 summarizes the analysis of the articles. It lists the architecture or framework name, OS name, programming language, advantages, and disadvantages of each article. Component-based architecture is the most common, with two articles.

3.2.1.6 BDM architectural/framework for IoT-based urban planning

In urbanization, BDM is responsible for the offline and online aggregation, management, processing, and analysis of large amounts of data, with the aim of improving the quality, planning, design, sustainability, living standards, dynamic organization, and mobility of urban space and structure and of maintaining urban services [ 180 , 181 , 182 ]. Rathore et al. [ 51 ] proposed a 4-layer IoT-based BDA architecture for smart city development and urban planning. The first layer generates, aggregates, registers, and filters data from various IoT sources. The second layer uses communication technologies to connect the sensors and the relay node. The third layer uses HDFS, HBase, Hive, and SQL for storage; MapReduce for offline analysis; and Spark, VoltDB, and Storm for real-time analysis. The last layer presents the analysis results to support intelligent and fast decision-making. The results show that the architecture provides efficient outcomes even on large IoT data sets: throughput increased as data size grew, and processing time decreased.

Silva et al. [ 63 ] proposed a reliable 3-layer BDA-embedded architecture for urban planning. The layers of this architecture are data aggregation, data management, and service management. The purpose of the article is to increase throughput and minimize processing time. The real-time data management layer is the main layer and performs data filtration, analysis, processing, and storage. This layer used data filtration and min–max normalization techniques to improve energy data. The architecture used MapReduce for offline data processing, Spark for online data processing, and HBase for storage.
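
Min–max normalization rescales each value x to (x - min) / (max - min), so that the series falls in the range 0 to 1. The sketch below pairs it with a simple range-based filtration step. The validity bounds and the sample readings are assumptions; the source does not describe the filtration rule the article applies.

```python
def filter_valid(readings, low, high):
    """Drop readings outside an assumed plausible range [low, high]."""
    return [r for r in readings if low <= r <= high]


def min_max_normalize(values):
    """Rescale values linearly to [0, 1]; a constant series maps to all zeros."""
    if not values:
        return []
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# Usage: hypothetical energy readings with one sensor fault (-999.0).
raw = [2.0, -999.0, 4.0, 6.0]
normalized = min_max_normalize(filter_valid(raw, low=0.0, high=100.0))
```

Filtering before normalizing matters here: a single faulty value left in the series would become the minimum and squash all valid readings toward 1.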

Table 18 summarizes the analysis of the articles. It lists the architecture or framework name, OS name, programming language, advantages, and disadvantages of each article. Layered architecture is the most common, with two articles.

3.2.1.7 BDM architectural/framework for other IoT-based applications

This subsection reviews the articles (14 articles) that have discussed the architectures or frameworks of BDM in other IoT-based applications. These IoT applications are smart IoT systems (4 articles), smart flood (1 article), smart farms (2 articles), disaster management (1 article), laundry (1 article), smart pipeline (1 article), network traffic (1 article), digital manufacturing (1 article), and smart factory (2 articles).

Al-Osta et al. [ 121 ] proposed an event-driven and semantic rules-based approach for IoT data processing. The system has three main levels: sensor, edge, and cloud. The article has two purposes: reducing the required resources and reducing the volume of data before transfer to the cloud for storage. The collected data are first aggregated, filtered, and classified at the gateway level, which saves bandwidth and minimizes network traffic. The approach uses semantic rules for data filtering. It also employs a complex event processing module to analyze input events and determine processing priority.
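
A simplified view of rule-based filtering with priority-ordered event handling at the gateway is sketched below. The rules are plain predicates standing in for semantic rules, and the event labels, thresholds, and priorities are all hypothetical; the source does not give the rule language or the priority scheme.

```python
import heapq

# Each rule: (event label, priority, predicate). Lower number = more urgent.
RULES = [
    ("fire_risk", 0, lambda r: r["type"] == "temperature" and r["value"] > 60),
    ("cold_room", 1, lambda r: r["type"] == "temperature" and r["value"] < 5),
]


def filter_and_prioritize(readings):
    """Keep only readings that match a rule, ordered by event priority."""
    queue = []
    for index, reading in enumerate(readings):
        for label, priority, predicate in RULES:
            if predicate(reading):
                # index breaks ties, so readings themselves are never compared
                heapq.heappush(queue, (priority, index, label, reading))
                break
    ordered = []
    while queue:
        _, _, label, reading = heapq.heappop(queue)
        ordered.append((label, reading["value"]))
    return ordered


# Usage: only two of four readings match a rule and are forwarded.
readings = [
    {"type": "temperature", "value": 21},
    {"type": "temperature", "value": 3},
    {"type": "humidity", "value": 80},
    {"type": "temperature", "value": 75},
]
events = filter_and_prioritize(readings)
```

Readings that match no rule are dropped at the gateway, which is where the bandwidth saving described above would come from.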