Data Science Interview Practice: Machine Learning Case Study

A common interview type for data scientists and machine learning engineers is the machine learning case study. In it, the interviewer will ask how the candidate would build a certain model. These questions can be challenging for new data scientists because the interview is open-ended and they often lack practical experience building and shipping production-quality models.

I have a lot of practice with these types of interviews as a result of my time at Insight, my many experiences interviewing for jobs, and my role in designing and implementing Intuit’s data science interview. Similar to my last article, where I put together an example data manipulation interview practice problem, this time I will walk through a practice case study and how I would work through it.

My Approach

Case study interviews are just conversations. This can make them tougher than they need to be for junior data scientists because they lack the obvious structure of a coding interview or data manipulation interview. I find it’s helpful to impose my own structure on the conversation by approaching it in this order:

- Problem: Dive in with the interviewer and explore what the problem is. Look for edge cases or simple and high-impact parts of the problem that you might be able to close out quickly.

- Metrics: Once you have determined the scope and parameters of the problem you’re trying to solve, figure out how you will measure success. Focus on what is important to the business and not just what is easy to measure.

- Data: Figure out what data is available to solve the problem. The interviewer might give you a couple of examples, but ask about additional information sources. If you know of some public data that might be useful, bring it up here too.

- Labels and Features: Using the data sources you discussed, what features would you build? If you are attacking a supervised classification problem, how would you generate labels? How would you check whether they are useful?

- Model: Now that you have a metric, data, features, and labels, what model is a good fit? Why? How would you train it? What do you need to watch out for?

- Validation: How would you make sure your model works offline? What data would you hold out to test that your model works as expected? What metrics would you measure?

- Deployment and Monitoring: Having developed a model you are comfortable with, how would you deploy it? Does it need to be real-time, or is it sufficient to batch inputs and periodically run the model? How would you check performance in production? How would you monitor for model drift, where its performance changes over time?

Here is the prompt:

At Twitter, bad actors occasionally use automated accounts, known as “bots”, to abuse our platform. How would you build a system to help detect bot accounts?

At the start of the interview I try to fully explore the bounds of the problem, which is often open-ended. My goal with this part of the interview is to:

- Understand the problem and all the edge cases.

- Come to an agreement with the interviewer on the scope of the problem to solve (narrower is better!).

- Demonstrate any knowledge I have on the subject, especially from researching the company beforehand.

Our Twitter bot prompt has a lot of angles from which we could attack it. I know Twitter has dozens of types of bots, ranging from my harmless Raspberry Pi bots, to “Russian Bots” trying to influence elections, to bots spreading spam. I would pick one problem to focus on, using my best guess as to business impact. In this case, spam bots are likely a problem that causes measurable harm (they drive users and advertisers away). Russian bots are probably a bigger issue in terms of public perception, but that impact is much harder to measure.

After deciding on the scope, I would ask more about the systems they currently have to deal with it. Twitter likely has an ops team that helps identify spam and block accounts, and they may even have a rules-based system. Those systems will be a good source of data about the bad actors, and the team likely also tracks metrics for this problem.

Having agreed on what part of the problem to focus on, we now turn to how we are going to measure our impact. There is no point shipping a model if you can’t measure how it’s affecting the business.

Metrics and model use go hand-in-hand, so first we have to agree on what the model will be used for. For spam, we could use the model just to mark suspected accounts for human review and tracking, or we could outright block accounts based on the model result. If we pick the human review option, it’s probably more important to catch all the bots (high recall), even if some good customers are affected. If we go with immediate action, it is likely more important to ban only truly bad accounts (high precision). I covered this way of thinking about metrics in detail in another post, What Machine Learning Metric to Use. Take a look!

I would argue the automatic blocking model will have higher impact because it frees our ops people to focus on other bad behavior. We want two sets of metrics: offline for when we are training and online for when the model is deployed.

Our offline metric will be precision because, based on the argument above, we want to be really sure we’re only banning bad accounts.
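Because the model would block accounts automatically, one way to act on the precision goal is to choose the decision threshold as the lowest score that still reaches a target precision on held-out data. This is a minimal sketch, assuming the model outputs a spam score per account; the scores, labels, and 0.95 target are invented, and `precision_at` and `threshold_for_precision` are hypothetical helpers, not a library API.

```python
def precision_at(scores, labels, threshold):
    """Precision of the rule 'flag if score >= threshold' (label 1 = spam bot)."""
    flagged = [y for s, y in zip(scores, labels) if s >= threshold]
    if not flagged:
        return None  # nothing flagged, so precision is undefined
    return sum(flagged) / len(flagged)


def threshold_for_precision(scores, labels, target):
    """Return the lowest candidate threshold whose precision meets `target`.

    Lower thresholds flag more accounts (more bots caught), so we want the
    smallest one that still keeps precision at or above the target.
    """
    for t in sorted(set(scores)):
        p = precision_at(scores, labels, t)
        if p is not None and p >= target:
            return t
    return None  # target not reachable on this data


# Invented held-out scores and ground-truth labels (1 = spam bot)
scores = [0.1, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9, 0.95]
labels = [0, 0, 1, 0, 1, 1, 1, 1]
print(threshold_for_precision(scores, labels, target=0.95))  # 0.7
```

On this toy data, any lower threshold would also flag the good account scored 0.6 and drop precision below the target.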

Our online metrics are more business focused:

- Ops time saved: Ops is currently spending some amount of time reviewing spam; how much can we cut that down?

- Spam fraction: What percent of Tweets are spam? Can we reduce this?

It is often useful to normalize metrics, like the spam fraction metric, so they don’t go up or down just because we have more customers!
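To see why normalization matters, compare the raw count of spam Tweets with spam as a fraction of all Tweets. The daily totals below are invented for illustration, and `spam_fraction` is a hypothetical helper.

```python
def spam_fraction(total_tweets, spam_tweets):
    """Share of all Tweets that are spam, so growth in overall volume cancels out."""
    return spam_tweets / total_tweets


# Invented daily totals: (total Tweets, spam Tweets)
daily = {
    "day_1": (1_000_000, 20_000),
    "day_2": (2_000_000, 30_000),  # overall volume doubled
}

for day, (total, spam) in daily.items():
    print(f"{day}: {spam:,} spam Tweets, spam fraction = {spam_fraction(total, spam):.1%}")
```

Here the raw spam count rises by half while the spam fraction falls from 2.0% to 1.5%, which is the improvement the normalized metric is meant to reveal.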

Now that we know what we’re doing and how to measure its success, it’s time to figure out what data we can use. Just based on how a company operates, you can make a really good guess as to the data it has. For Twitter, we know they have to track Tweets, accounts, and logins, so they must have databases with that information. Here is what I think they contain:

- Tweets database: Sending account, mentioned accounts, parent Tweet, Tweet text.

- Interactions database: Account, Tweet, action (retweet, favorite, etc.).

- Accounts database: Account name, handle, creation date, creation device, creation IP address.

- Following database: Account, followed account.

- Login database: Account, date, login device, login IP address, success or fail reason.

- Ops database: Account, restriction, human reasoning.

And a lot more. From these we can learn a lot about an account: the Tweets it sends, who it sends them to, who those recipients react to, and possibly how login events tie different accounts together.

Labels and Features

Having figured out what data is available, it’s time to process it. Because I’m treating this as a classification problem, I’ll need labels to tell me the ground truth for accounts, and I’ll need features which describe the behavior of the accounts.

Since there is an ops team handling spam, I have historical examples of bad behavior which I can use as positive labels.[1] If there aren’t enough, I can use tricks to expand my labels, for example looking at IP addresses or devices that are associated with spammers and labeling other accounts with the same login characteristics.
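The label-expansion trick can be sketched as a lookup over login records: collect the IP addresses and devices seen on known spam accounts, then flag other accounts that share them as candidate positives. The record layout loosely mirrors the guessed login database above; the data and the `expand_labels` helper are hypothetical. In practice these expanded labels would need review, because shared IPs (for example behind a mobile carrier or NAT) also connect innocent users.

```python
# Hypothetical login records: (account, login_ip, login_device)
logins = [
    ("spam_1", "10.0.0.1", "dev_A"),
    ("spam_2", "10.0.0.2", "dev_B"),
    ("acct_3", "10.0.0.1", "dev_C"),  # shares an IP with spam_1
    ("acct_4", "10.0.0.9", "dev_B"),  # shares a device with spam_2
    ("acct_5", "10.0.0.7", "dev_D"),  # no overlap with any spammer
]
known_spam = {"spam_1", "spam_2"}


def expand_labels(logins, known_spam):
    """Return accounts that share a login IP or device with a known spammer."""
    bad_ips = {ip for acct, ip, _ in logins if acct in known_spam}
    bad_devices = {dev for acct, _, dev in logins if acct in known_spam}
    return {
        acct
        for acct, ip, dev in logins
        if acct not in known_spam and (ip in bad_ips or dev in bad_devices)
    }


print(sorted(expand_labels(logins, known_spam)))  # ['acct_3', 'acct_4']
```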

Negative labels are harder to come by. I know Twitter has verified users who are unlikely to be spam bots, so I can use them. But verified users are very different from “normal” good users because they have far more followers, so a negative set made only of verified users would not represent the accounts the model will actually see.

It is a safe bet that there are far more good users than spam bots, so randomly selecting accounts can be used to build a negative label set.
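Building the negative set by random sampling might look like the sketch below, assuming we can list all account IDs and exclude the ones already labeled as spam. A few sampled accounts will be undetected bots, so the negatives are slightly noisy; the large ratio of good users to bots is what keeps that noise small. The IDs, sample size, and `sample_negatives` helper are illustrative.

```python
import random


def sample_negatives(all_accounts, known_spam, n, seed=0):
    """Randomly sample n accounts that are not already labeled as spam bots."""
    candidates = [a for a in all_accounts if a not in known_spam]
    rng = random.Random(seed)  # fixed seed so the sample is reproducible
    return rng.sample(candidates, n)


# Hypothetical account universe and known spam labels
all_accounts = [f"acct_{i}" for i in range(1000)]
known_spam = {"acct_1", "acct_2", "acct_3"}
negatives = sample_negatives(all_accounts, known_spam, n=100)
```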

To build features, it helps to think about what sort of behavior a spam bot might exhibit, and then try to codify that behavior into features. For example:

- Bots can’t write truly unique messages; they must use a template or language generator. This should lead to similar messages, so looking at how repetitive an account’s Tweets are is a good feature.

- Bots are used because they scale. They can run all the time and send messages to hundreds or thousands (or millions) of users. The number of unique Tweet recipients and the number of minutes per day with a Tweet sent are likely good features.

- Bots have a controller. Someone is benefiting from the spam, and they have to control their bots. Features around logins might help here like number of accounts seen from this IP address or device, similarity of login time, etc.
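The three behaviors above can be turned into per-account features. This sketch assumes a simple in-memory list of Tweets (account, recipient, timestamp, text) and of login records (account, IP); a real pipeline would compute these in the data warehouse. The specific definitions, such as measuring repetitiveness as the share of duplicate texts, are one illustrative choice rather than a prescribed method, and `account_features` is hypothetical.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical records: (account, recipient, timestamp, text), all on one day
tweets = [
    ("bot", "u1", datetime(2024, 1, 1, 9, 0), "Buy cheap followers!"),
    ("bot", "u2", datetime(2024, 1, 1, 9, 1), "Buy cheap followers!"),
    ("bot", "u3", datetime(2024, 1, 1, 9, 2), "Buy cheap followers!"),
    ("human", "u1", datetime(2024, 1, 1, 12, 0), "Lunch was great"),
    ("human", "u1", datetime(2024, 1, 1, 12, 0), "Heading back to work"),
]
# Hypothetical login records: (account, ip)
logins = [("bot", "10.0.0.1"), ("bot2", "10.0.0.1"), ("human", "10.0.0.5")]


def account_features(tweets, logins):
    texts = defaultdict(list)
    recipients = defaultdict(set)
    active_minutes = defaultdict(set)
    for acct, to, ts, text in tweets:
        texts[acct].append(text)
        recipients[acct].add(to)
        # Truncate to the minute to count distinct minutes with a Tweet sent
        active_minutes[acct].add(ts.replace(second=0, microsecond=0))

    # Controller signal: how many accounts log in from the same IP
    accounts_per_ip = defaultdict(set)
    for acct, ip in logins:
        accounts_per_ip[ip].add(acct)
    max_ip_sharing = defaultdict(int)
    for accts in accounts_per_ip.values():
        for acct in accts:
            max_ip_sharing[acct] = max(max_ip_sharing[acct], len(accts))

    features = {}
    for acct, msgs in texts.items():
        features[acct] = {
            # Repetitiveness: fraction of Tweets that repeat an earlier text
            "duplicate_ratio": 1 - len(set(msgs)) / len(msgs),
            "unique_recipients": len(recipients[acct]),
            "active_minutes": len(active_minutes[acct]),
            "accounts_on_same_ip": max_ip_sharing[acct],
        }
    return features


for acct, feats in account_features(tweets, logins).items():
    print(acct, feats)
```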

Model Selection

I try to start with the simplest model that will work when starting a new project. Since this is a supervised classification problem and I have written some simple features, logistic regression or a random forest is a good candidate. I would likely go with a random forest because forests tend to “just work” and are a little less sensitive to feature processing.[2]
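Footnote 2’s point about scaling shows up directly in how the two candidates might be set up in scikit-learn: regularized logistic regression sits behind a `StandardScaler` in a pipeline, while the random forest takes raw features. This is a minimal sketch on synthetic data from `make_classification`, standing in for the account features; the hyperparameters are illustrative, not tuned.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import precision_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for the account feature matrix (1 = spam bot)
X, y = make_classification(n_samples=2000, n_features=8, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=0)

models = {
    # LogisticRegression is L2-regularized by default, so standardize features first
    "logistic_regression": make_pipeline(StandardScaler(), LogisticRegression(C=1.0)),
    # Tree splits do not depend on feature scale, so no scaler is needed
    "random_forest": RandomForestClassifier(n_estimators=200, random_state=0),
}

scores = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    scores[name] = precision_score(y_test, model.predict(X_test))
    print(f"{name}: test precision = {scores[name]:.3f}")
```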

Deep learning is not something I would use here. It’s great for image, video, audio, or NLP, but for a problem where you have a set of labels and a set of features that you believe to be predictive it is generally overkill.

One thing to consider when training is that the dataset is probably going to be wildly imbalanced. I would start by down-sampling the majority class (since we likely have millions of events), but would be ready to discuss other methods and trade-offs.
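Down-sampling the majority class can be as simple as keeping every spam example and a random subset of the good accounts. This sketch assumes examples are `(features, label)` pairs and uses an illustrative ratio of five negatives per positive; `downsample` is a hypothetical helper. A model trained on rebalanced data will score bots more generously than the real class ratio warrants, so precision should still be measured on held-out data with the natural ratio.

```python
import random


def downsample(examples, neg_per_pos=5, seed=0):
    """Keep all positives (label 1) and at most neg_per_pos negatives per positive."""
    positives = [ex for ex in examples if ex[1] == 1]
    negatives = [ex for ex in examples if ex[1] == 0]
    rng = random.Random(seed)
    n_neg = min(len(negatives), neg_per_pos * len(positives))
    kept = positives + rng.sample(negatives, n_neg)
    rng.shuffle(kept)  # avoid all positives appearing first
    return kept


# Hypothetical imbalanced data: 10 bots among 10,000 accounts
data = [((i,), 1 if i < 10 else 0) for i in range(10_000)]
balanced = downsample(data, neg_per_pos=5)
```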

Validation is not too difficult at this point. We focus on the offline metric we decided on above: precision. We don’t have to worry much about leaking data between our holdout sets if we split at the account level, although if bots from the same botnet end up in different sets there will be a little data leakage. I would start with a simple training/validation/test split with fixed fractions of the dataset.
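Splitting at the account level, and keeping each suspected botnet entirely within one split, amounts to assigning whole groups to splits rather than individual rows. This sketch assumes each account has a group ID: its own ID, or a botnet cluster ID if one is known (for example from the shared-login trick described earlier). The fractions and `group_split` helper are illustrative; scikit-learn’s `GroupShuffleSplit` provides a similar grouped split.

```python
import random


def group_split(groups, fractions=(0.7, 0.15, 0.15), seed=0):
    """Assign each distinct group wholly to train, validation, or test.

    `groups` maps account -> group ID. Fractions apply to groups, so the
    split sizes measured in accounts are only approximate.
    """
    unique_groups = sorted(set(groups.values()))
    random.Random(seed).shuffle(unique_groups)
    n = len(unique_groups)
    n_train = int(fractions[0] * n)
    n_val = int(fractions[1] * n)
    train_g = set(unique_groups[:n_train])
    val_g = set(unique_groups[n_train:n_train + n_val])
    train, val, test = set(), set(), set()
    for acct, g in groups.items():
        (train if g in train_g else val if g in val_g else test).add(acct)
    return train, val, test


# Hypothetical: three bots share botnet "net_1"; other accounts are their own group
groups = {"bot_a": "net_1", "bot_b": "net_1", "bot_c": "net_1"}
groups.update({f"acct_{i}": f"acct_{i}" for i in range(20)})
train, val, test = group_split(groups)
```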

Since we want to classify an entire account and not a specific Tweet, we don’t need to run the model in real time when Tweets are posted. Instead, we can run it in batches and decide on the time between runs by looking at something like the characteristic time a spam bot takes to send out its Tweets. We can also add rate limiting to Tweet sending to slow the spam bots down and give us more time to decide, without impacting normal users.
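The post doesn’t specify how the rate limit would work; a token bucket is one common choice. Each account earns tokens at a fixed rate up to a cap and spends one per Tweet, so normal users rarely notice while a bot posting continuously is throttled to the refill rate. The `TokenBucket` class, rate, and capacity below are a hypothetical sketch with made-up values.

```python
class TokenBucket:
    """Allow bursts of up to `capacity` Tweets, refilled at `rate` Tweets per second."""

    def __init__(self, rate, capacity):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity
        self.last = 0.0  # time of the last update, in seconds

    def allow(self, now):
        # Refill for the elapsed time, never exceeding capacity
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# Made-up limit: bursts of 5 Tweets, then one Tweet per minute
bucket = TokenBucket(rate=1 / 60, capacity=5)
# A bot tries to Tweet once per second for 10 seconds
results = [bucket.allow(now=float(t)) for t in range(10)]
print(results)  # first 5 allowed, the rest rejected
```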

For deployment, I would start in shadow mode, which I discussed in detail in another post. This would allow us to see how the model performs on real data without the risk of blocking good accounts. I would track its performance using our online metrics: spam fraction and ops time saved. I would compute these metrics twice, once assuming the model blocks flagged accounts and once assuming it does not, and then compare the two outcomes. If the comparison is favorable, the model should be promoted to action mode.
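In shadow mode the model scores accounts but takes no action, so the “blocked” scenario has to be estimated. One simple approach, sketched below, treats each flagged account as if it had been blocked before sending any of its Tweets (which overstates the benefit) and recomputes the spam fraction without those Tweets. The log layout and data are hypothetical; because nothing was actually blocked, the result is an estimate rather than a measurement.

```python
# Hypothetical shadow-mode log, one row per Tweet:
# (account, account_is_spam_bot, model_flagged_account)
tweets = [
    ("bot_1", True, True),
    ("bot_1", True, True),
    ("bot_2", True, False),    # a bot the model missed
    ("user_1", False, False),
    ("user_2", False, True),   # a good account the model would have blocked
    ("user_3", False, False),
]


def spam_fraction(rows):
    """Fraction of Tweets sent by spam bots."""
    return sum(is_bot for _, is_bot, _ in rows) / len(rows)


# Scenario 1: the model does not block (what actually happens in shadow mode)
without_blocking = spam_fraction(tweets)
# Scenario 2: assume flagged accounts were blocked, so their Tweets never appear
with_blocking = spam_fraction([row for row in tweets if not row[2]])
# Good accounts that blocking would have hit by mistake
wrongly_blocked = {acct for acct, is_bot, flagged in tweets if flagged and not is_bot}

print(f"spam fraction without blocking: {without_blocking:.1%}")
print(f"spam fraction with blocking:    {with_blocking:.1%}")
print(f"good accounts that would be blocked: {sorted(wrongly_blocked)}")
```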

Let Me Know!

I hope this exercise has been helpful! Please reach out and let me know at @alex_gude if you have any comments or improvements!

[1] In this case a positive label means the account is a spam bot, and a negative label means it is not.

[2] If you use regularization with logistic regression (and you should), you need to scale your features. Random forests do not require this.

Data Science Case Study Interview: Your Guide to Success

by Sam McKay, CFA | Careers

Ready to crush your next data science interview? Well, you’re in the right place.

This type of interview is designed to assess your problem-solving skills, technical knowledge, and ability to apply data-driven solutions to real-world challenges.

So, how can you master these interviews and secure your next job?

To master your data science case study interview:

Practice Case Studies: Engage in mock scenarios to sharpen problem-solving skills.

Review Core Concepts: Brush up on algorithms, statistical analysis, and key programming languages.

Contextualize Solutions: Connect findings to business objectives for meaningful insights.

Clear Communication: Present results logically and effectively using visuals and simple language.

Adaptability and Clarity: Stay flexible and articulate your thought process during problem-solving.

This article will delve into each of these points and give you additional tips and practice questions to get you ready to crush your upcoming interview!

After you’ve read this article, you can enter the interview ready to showcase your expertise and win your dream role.

Let’s dive in!


What to Expect in the Interview?

Data science case study interviews are an essential part of the hiring process. They give interviewers a glimpse of how you approach real-world business problems and let you demonstrate your analytical thinking, problem-solving, and technical skills.

Furthermore, case study interviews are typically open-ended, which means you’ll be presented with a problem that doesn’t have a single right or wrong answer.

Instead, you are expected to demonstrate your ability to:

Break down complex problems

Make assumptions

Gather context

Provide data points and analysis

This type of interview allows your potential employer to evaluate your creativity, technical knowledge, and attention to detail.

But what topics will the interview touch on?

Topics Covered in Data Science Case Study Interviews

In a case study interview, you can expect inquiries that cover a spectrum of topics crucial to evaluating your skill set:

Topic 1: Problem-Solving Scenarios

In these interviews, your ability to resolve genuine business dilemmas using data-driven methods is essential.

These scenarios reflect authentic challenges, demanding analytical insight, decision-making, and problem-solving skills.

Real-world Challenges: Expect scenarios like optimizing marketing strategies, predicting customer behavior, or enhancing operational efficiency through data-driven solutions.

Analytical Thinking: Demonstrate your capacity to break down complex problems systematically, extracting actionable insights from intricate issues.

Decision-making Skills: Showcase your ability to make informed decisions, emphasizing instances where your data-driven choices optimized processes or led to strategic recommendations.

Your adeptness at leveraging data for insights, analytical thinking, and informed decision-making defines your capability to provide practical solutions in real-world business contexts.

Topic 2: Data Handling and Analysis

Data science case studies assess your proficiency in data preprocessing, cleaning, and deriving insights from raw data.

Data Collection and Manipulation: Prepare for data engineering questions involving data collection, handling missing values, cleaning inaccuracies, and transforming data for analysis.

Handling Missing Values and Cleaning Data: Showcase your skills in managing missing values and ensuring data quality through cleaning techniques.

Data Transformation and Feature Engineering: Highlight your expertise in transforming raw data into usable formats and creating meaningful features for analysis.

Mastering data preprocessing—managing, cleaning, and transforming raw data—is fundamental. Your proficiency in these techniques showcases your ability to derive valuable insights essential for data-driven solutions.

Topic 3: Modeling and Feature Selection

Data science case interviews prioritize your understanding of modeling and feature selection strategies.

Model Selection and Application: Highlight your prowess in choosing appropriate models, explaining your rationale, and showcasing implementation skills.

Feature Selection Techniques: Understand the importance of selecting relevant variables, using methods such as correlation coefficients, to improve model accuracy.

Ensuring Robustness through Random Sampling: Use techniques like random sampling (for example, random train/test splits or bootstrap resampling) to check that the model generalizes beyond the data it was trained on.
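The feature selection item above mentions correlation coefficients. A minimal sketch of correlation-based screening computes each feature’s Pearson correlation with the target and keeps those whose absolute value clears a cutoff. The data, the 0.3 cutoff, and the helper names are illustrative; correlation only captures linear, one-feature-at-a-time relationships, so it is a screening step rather than a complete selection method.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def select_by_correlation(features, target, cutoff=0.3):
    """Return feature names with |Pearson r| >= cutoff, strongest first."""
    r = {name: pearson(col, target) for name, col in features.items()}
    keep = [name for name in r if abs(r[name]) >= cutoff]
    return sorted(keep, key=lambda name: abs(r[name]), reverse=True)


target = [1, 2, 3, 4, 5, 6]
features = {
    "strong": [2, 4, 6, 8, 10, 12],  # rises with the target
    "negative": [6, 5, 4, 3, 2, 1],  # falls as the target rises
    "noise": [1, 3, 2, 2, 3, 1],     # no linear relationship
}
print(select_by_correlation(features, target))
```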

Excel in modeling and feature selection by understanding contexts, optimizing model performance, and employing robust evaluation strategies.


Topic 4: Statistical and Machine Learning Approach

These interviews require proficiency in statistical and machine learning methods for diverse problem-solving. This topic is significant for anyone applying for a machine learning engineer position.

Using Statistical Models: Use logistic regression for classification tasks and linear regression for predicting continuous values.

Leveraging Machine Learning Algorithms: Employ models such as support vector machines (SVM), k-nearest neighbors (k-NN), and decision trees for complex pattern recognition and classification.

Exploring Deep Learning Techniques: Consider neural networks, such as convolutional neural networks (CNNs) for images and recurrent neural networks (RNNs) for sequential data, when the data has intricate patterns.

Experimentation and Model Selection: Experiment with various algorithms to identify the most suitable approach for specific contexts.

Combining statistical and machine learning expertise equips you to systematically tackle varied data challenges, ensuring readiness for case studies and beyond.

Topic 5: Evaluation Metrics and Validation

In data science interviews, understanding evaluation metrics and validation techniques is critical to measuring how well machine learning models perform.

Choosing the Right Metrics: Select metrics like precision, recall (for classification), or R² (for regression) based on the problem type. Picking the right metric defines how you interpret your model’s performance.

Validating Model Accuracy: Use methods like cross-validation and holdout validation to test your model across different data portions. These methods help detect overfitting and provide a more reliable estimate of performance on unseen data.

Importance of Statistical Significance: Evaluate whether your model’s performance reflects real predictive signal or random chance. Techniques like hypothesis testing and confidence intervals help quantify how likely the observed result is under chance alone and how much the estimate might vary.

Interpreting Results: Be ready to explain model outcomes, spot patterns, and suggest actions based on your analysis. Translating data insights into actionable strategies showcases your skill.
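The validation and significance ideas in this list can be tried with scikit-learn: `cross_val_score` estimates performance across folds, and `permutation_test_score` refits the model on shuffled labels to estimate how often chance alone would match the observed score. This is a minimal sketch on synthetic data; the model, fold count, and number of permutations are illustrative choices.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_val_score, permutation_test_score

X, y = make_classification(n_samples=500, n_features=10, random_state=0)
model = LogisticRegression(max_iter=1000)
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)

# Cross-validation: one precision score per held-out fold
fold_scores = cross_val_score(model, X, y, cv=cv, scoring="precision")
print(f"precision: {fold_scores.mean():.3f} +/- {fold_scores.std():.3f}")

# Permutation test: how often does a model trained on shuffled labels do as well?
score, perm_scores, p_value = permutation_test_score(
    model, X, y, cv=cv, scoring="precision", n_permutations=100, random_state=0
)
print(f"observed precision = {score:.3f}, p-value = {p_value:.3f}")
```

With 100 permutations the smallest possible p-value is 1/101, so more permutations are needed to resolve very small p-values.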

Finally, focusing on suitable metrics, using validation methods, understanding statistical significance, and deriving actionable insights from data underline your ability to evaluate model performance.

Also, being well-versed in these topics and having hands-on experience through practice scenarios can significantly enhance your performance in these case study interviews.

Prepare to demonstrate technical expertise, adaptability, problem-solving, and communication skills to excel in these assessments.

Now, let’s talk about how to navigate the interview.

Here is a step-by-step guide to get you through the process.

Step-by-Step Guide Through the Interview

This section discusses what you can expect during the interview process and how to approach case study questions.

Step 1: Problem Statement: You’ll be presented with a problem or scenario—either a hypothetical situation or a real-world challenge—emphasizing the need for data-driven solutions within data science.

Step 2: Clarification and Context: Seek clarity by actively engaging with the interviewer. Ask pertinent questions to thoroughly understand the objectives, constraints, and nuanced aspects of the problem statement.

Step 3: State your Assumptions: When crucial information is lacking, make reasonable assumptions to proceed with your final solution. Explain these assumptions to your interviewer to ensure transparency in your decision-making process.

Step 4: Gather Context: Consider the broader business landscape surrounding the problem. Factor in external influences such as market trends, customer behaviors, or competitor actions that might impact your solution.

Step 5: Data Exploration: Delve into the provided datasets meticulously. Cleanse, visualize, and analyze the data to derive meaningful and actionable insights crucial for problem-solving.

Step 6: Modeling and Analysis: Leverage statistical or machine learning techniques to address the problem effectively. Implement suitable models to derive insights and solutions aligning with the identified objectives.

Step 7: Results Interpretation: Interpret your findings thoughtfully. Identify patterns, trends, or correlations within the data and present clear, data-backed recommendations relevant to the problem statement.

Step 8: Results Presentation: Effectively articulate your approach, methodologies, and choices coherently. This step is vital, especially when conveying complex technical concepts to non-technical stakeholders.

Remain flexible throughout the process and be prepared to adapt your approach to each situation.

Now that you have a guide on navigating the interview, let us give you some tips to help you stand out from the crowd.

Top 3 Tips to Master Your Data Science Case Study Interview

Approaching case study interviews in data science requires a blend of technical proficiency and a holistic understanding of business implications.

Here are practical strategies and structured approaches to prepare effectively for these interviews:

1. Comprehensive Preparation Tips

To excel in case study interviews, a blend of technical competence and strategic preparation is key.

Here are concise yet powerful tips to equip yourself for success:

Practice with Mock Case Studies: Familiarize yourself with the process through practice. Online resources offer example questions and solutions, enhancing familiarity and boosting confidence.

Review Your Data Science Toolbox: Ensure a strong foundation in fundamentals like data wrangling, visualization, and machine learning algorithms. Comfort with relevant programming languages is essential.

Simplicity in Problem-solving: Opt for clear and straightforward problem-solving approaches. While advanced techniques can be impressive, interviewers value efficiency and clarity.

Interviewers also highly value someone with great communication skills. Here are some tips to highlight your skills in this area.

2. Communication and Presentation of Results

In case study interviews, communication is vital. Present your findings in a clear, engaging way that connects with the business context. Tips include:

Contextualize results: Relate findings to the initial problem, highlighting key insights for business strategy.

Use visuals: Charts, graphs, or diagrams help convey findings more effectively.

Logical sequence: Structure your presentation for easy understanding, starting with an overview and progressing to specifics.

Simplify ideas: Break down complex concepts into simpler segments using examples or analogies.

Mastering these techniques helps you communicate insights clearly and confidently, setting you apart in interviews.

Lastly, here are some preparation strategies to employ before you walk into the interview room.

3. Structured Preparation Strategy

Prepare meticulously for data science case study interviews by following a structured strategy.

Here’s how:

Practice Regularly: Engage in mock interviews and case studies to enhance critical thinking and familiarity with the interview process. This builds confidence and sharpens problem-solving skills under pressure.

Thorough Review of Concepts: Revisit essential data science concepts and tools, focusing on machine learning algorithms, statistical analysis, and relevant programming languages (Python, R, SQL) for confident handling of technical questions.

Strategic Planning: Develop a structured framework for approaching case study problems. Outline the steps and tools/techniques to deploy, ensuring an organized and systematic interview approach.

Understanding the Context: Analyze business scenarios to identify objectives, variables, and data sources essential for insightful analysis.

Ask for Clarification: Engage with interviewers to clarify any unclear aspects of the case study questions. For example, you may ask ‘What is the business objective?’ This exhibits thoughtfulness and aids in better understanding the problem.

Transparent Problem-solving: Clearly communicate your thought process and reasoning during problem-solving. This showcases analytical skills and approaches to data-driven solutions.

Blend technical skills with business context, communicate clearly, and prepare to systematically ace your case study interviews.

Now, let’s really make this specific.

Each company is different and may need slightly different skills and specializations from data scientists.

However, here is some of what you can expect in a case study interview with some industry giants.

Case Interviews at Top Tech Companies

As you prepare for data science interviews, it’s essential to be aware of the case study interview format utilized by top tech companies.

In this section, we’ll explore case interviews at Facebook, Twitter, and Amazon, and provide insight into what they expect from their data scientists.

Facebook predominantly looks for candidates with strong analytical and problem-solving skills. The case study interviews here usually revolve around assessing the impact of a new feature, analyzing monthly active users, or measuring the effectiveness of a product change.

To excel during a Facebook case interview, you should break down complex problems, formulate a structured approach, and communicate your thought process clearly.

Twitter, similar to Facebook, evaluates your ability to analyze and interpret large datasets to solve business problems. During a Twitter case study interview, you might be asked to analyze user engagement, develop recommendations for increasing ad revenue, or identify trends in user growth.

Be prepared to work with different analytics tools and showcase your knowledge of relevant statistical concepts.

Amazon is known for its customer-centric approach and data-driven decision-making. In Amazon’s case interviews, you may be tasked with optimizing customer experience, analyzing sales trends, or improving the efficiency of a certain process.

Keep in mind Amazon’s leadership principles, especially “Customer Obsession” and “Dive Deep,” as you navigate through the case study.

Remember, practice is key. Familiarize yourself with various case study scenarios and hone your data science skills.

With all this knowledge, it’s time to practice with the following practice questions.

Mockup Case Studies and Practice Questions

To better prepare for your data science case study interviews, it’s important to practice with some mockup case studies and questions.

One way to practice is by finding typical case study questions.

Here are a few examples to help you get started:

Customer Segmentation: You have access to a dataset containing customer information, such as demographics and purchase behavior. Your task is to segment the customers into groups that share similar characteristics. How would you approach this problem, and what machine-learning techniques would you consider?

Fraud Detection: Imagine your company processes online transactions. You are asked to develop a model that can identify potentially fraudulent activities. How would you approach the problem and which features would you consider using to build your model? What are the trade-offs between false positives and false negatives?

Demand Forecasting: Your company needs to predict future demand for a particular product. What factors should be taken into account, and how would you build a model to forecast demand? How can you ensure that your model remains up-to-date and accurate as new data becomes available?

By practicing case study interview questions, you can sharpen your problem-solving skills and walk into future data science interviews more confidently.

Remember to practice consistently and stay up-to-date with relevant industry trends and techniques.

Final Thoughts

Data science case study interviews are more than just technical assessments; they’re opportunities to showcase your problem-solving skills and practical knowledge.

Furthermore, these interviews demand a blend of technical expertise, clear communication, and adaptability.

Remember, understanding the problem, exploring insights, and presenting coherent potential solutions are key.

By honing these skills, you can demonstrate your capability to solve real-world challenges using data-driven approaches. Good luck on your data science journey!

Frequently Asked Questions

How would you approach identifying and solving a specific business problem using data?

To identify and solve a business problem using data, you should start by clearly defining the problem and identifying the key metrics that will be used to evaluate success.

Next, gather relevant data from various sources and clean, preprocess, and transform it for analysis. Explore the data using descriptive statistics, visualizations, and exploratory data analysis.

Based on your understanding, build appropriate models or algorithms to address the problem, and then evaluate their performance using appropriate metrics. Iterate and refine your models as necessary, and finally, communicate your findings effectively to stakeholders.

Can you describe a time when you used data to make recommendations for optimization or improvement?

Recall a specific data-driven project you have worked on that led to optimization or improvement recommendations. Explain the problem you were trying to solve, the data you used for analysis, the methods and techniques you employed, and the conclusions you drew.

Share the results and how your recommendations were implemented, describing the impact it had on the targeted area of the business.

How would you deal with missing or inconsistent data during a case study?

When dealing with missing or inconsistent data, start by assessing the extent and nature of the problem. Consider applying imputation methods, such as mean, median, or mode imputation, or more advanced techniques like k-NN imputation or regression-based imputation, depending on the type of data and the pattern of missingness.

For inconsistent data, diagnose the issues by checking for typos, duplicates, or erroneous entries, and take appropriate corrective measures. Document your handling process so that stakeholders can understand your approach and the limitations it might impose on the analysis.
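A short pandas sketch of these steps: normalize an obvious inconsistency (stray whitespace and letter case in a category column), drop exact duplicate rows, record which values were missing, then fill missing numeric values with the median. The column names and values are invented for illustration, and median imputation is only one of the options mentioned; scikit-learn’s `KNNImputer` would be an alternative for the k-NN approach.

```python
import pandas as pd

df = pd.DataFrame({
    "customer_id": [1, 2, 2, 3, 4],
    "region": ["North", " north", " north", "South", "SOUTH "],
    "monthly_spend": [120.0, None, None, 80.0, 100.0],
})

# Fix inconsistent formatting so " north" and "North" are the same category
df["region"] = df["region"].str.strip().str.title()

# Drop exact duplicate rows (customer 2 appears twice)
df = df.drop_duplicates()

# Flag missing values before imputing, so the handling stays documented
df["spend_was_missing"] = df["monthly_spend"].isna()
df["monthly_spend"] = df["monthly_spend"].fillna(df["monthly_spend"].median())
print(df)
```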

What techniques would you use to validate the results and accuracy of your analysis?

To validate the results and accuracy of your analysis, use techniques like cross-validation or bootstrapping, which can help gauge model performance on unseen data. Employ metrics relevant to your specific problem, such as accuracy, precision, recall, F1-score, or RMSE, to measure performance.

Additionally, validate your findings by conducting sensitivity analyses, sanity checks, and comparing results with existing benchmarks or domain knowledge.
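Bootstrapping can also put an uncertainty range around a metric measured on a single held-out set: resample the (true label, predicted label) pairs with replacement many times and take percentiles of the recomputed metric. This is a standard-library sketch of the percentile method; the holdout results, 1,000 resamples, and helper names are illustrative.

```python
import random


def precision(pairs):
    """Precision over (true_label, predicted_label) pairs; None if nothing is predicted positive."""
    predicted_pos = [t for t, p in pairs if p == 1]
    return sum(predicted_pos) / len(predicted_pos) if predicted_pos else None


def bootstrap_ci(pairs, metric, n_resamples=1000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for `metric` over `pairs`."""
    rng = random.Random(seed)
    stats = []
    for _ in range(n_resamples):
        sample = [rng.choice(pairs) for _ in pairs]  # resample with replacement
        value = metric(sample)
        if value is not None:
            stats.append(value)
    stats.sort()
    lo = stats[int(alpha / 2 * len(stats))]
    hi = stats[int((1 - alpha / 2) * len(stats)) - 1]
    return lo, hi


# Hypothetical holdout results: 40 predicted positives, 34 of them correct
pairs = [(1, 1)] * 34 + [(0, 1)] * 6 + [(0, 0)] * 50 + [(1, 0)] * 10
point = precision(pairs)
lo, hi = bootstrap_ci(pairs, precision)
print(f"precision = {point:.2f}, 95% CI ~ [{lo:.2f}, {hi:.2f}]")
```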

How would you communicate your findings to both technical and non-technical stakeholders?

To effectively communicate your findings to technical stakeholders, focus on the methodology, algorithms, performance metrics, and potential improvements. For non-technical stakeholders, simplify complex concepts and explain the relevance of your findings, the impact on the business, and actionable insights in plain language.

Use visual aids, like charts and graphs, to illustrate your results and highlight key takeaways. Tailor your communication style to the audience, and be prepared to answer questions and address concerns that may arise.

How do you choose between different machine learning models to solve a particular problem?

When choosing between different machine learning models, first assess the nature of the problem and the data available to identify suitable candidate models. Evaluate models based on their performance, interpretability, complexity, and scalability, using relevant metrics and techniques such as cross-validation, AIC, BIC, or learning curves.

Consider the trade-offs between model accuracy, interpretability, and computation time, and choose a model that best aligns with the problem requirements, project constraints, and stakeholders’ expectations.

Keep in mind that it’s often beneficial to try several models and ensemble methods to see which one performs best for the specific problem at hand.


[2023] Machine Learning Interview Prep

Got a machine learning interview lined up? Chances are that you are interviewing for an ML engineering and/or data scientist position. Companies with ML interview portions include Google, Meta, Stripe, McKinsey, and startups, and ML questions are peppered throughout the technical screen, take-home, and on-site rounds. So, what does the ML engineering interview entail? There are generally five areas👇

📚 ML Interview Areas

Area 1 – ML Coding

ML coding is similar to LeetCode-style problems, but the main difference is that you apply machine learning through code. Expect to write ML functions from scratch. In some cases, you will not be allowed to import third-party libraries like scikit-learn, as the questions are designed to assess your conceptual understanding and coding ability.
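As an illustration of this kind of question, here is a minimal from-scratch linear regression trained with batch gradient descent on mean squared error, using no third-party libraries. The function names, learning rate, and epoch count are hypothetical choices, not a prescribed interview answer.

```python
def fit_linear_regression(X, y, lr=0.01, epochs=5000):
    """Fit y ≈ X·w + b by batch gradient descent on mean squared error."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        preds = [sum(wj * xj for wj, xj in zip(w, x)) + b for x in X]
        errors = [p - t for p, t in zip(preds, y)]
        # MSE = (1/n) * sum(err^2), so d/dw_j = (2/n) * sum(err * x_j).
        grad_w = [2 / n * sum(e * x[j] for e, x in zip(errors, X)) for j in range(d)]
        grad_b = 2 / n * sum(errors)
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def predict(X, w, b):
    """Apply the learned weights and bias to each row."""
    return [sum(wj * xj for wj, xj in zip(w, x)) + b for x in X]
```

For example, fitting `X = [[0], [1], [2], [3]]` and `y = [1, 3, 5, 7]` recovers a weight close to 2 and a bias close to 1. Interviewers often follow up by asking about the learning rate, convergence, or the closed-form normal-equation alternative.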

Area 2 – ML Theory (“Breadth”)

These questions assess the candidate’s breadth of knowledge in machine learning. ML theory interviews test conceptual understanding of topics such as the bias-variance trade-off, handling imbalanced labels, and accuracy vs. interpretability.
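One common answer to the imbalanced-labels question is to reweight classes so that rare classes contribute as much to the loss as common ones. The sketch below uses the n_samples / (n_classes × class_count) heuristic, which is the formula behind scikit-learn's `class_weight="balanced"` option. The helper names are hypothetical.

```python
import math
from collections import Counter


def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {cls: n / (k * c) for cls, c in counts.items()}


def weighted_log_loss(y_true, p_pred, weights):
    """Binary cross-entropy where each example is scaled by its class weight.

    p_pred holds the predicted probability of class 1 for each example.
    """
    total = sum(weights[t] * -math.log(p if t == 1 else 1 - p)
                for t, p in zip(y_true, p_pred))
    return total / sum(weights[t] for t in y_true)
```

With 90 negatives and 10 positives, each positive gets weight 5.0 and each negative about 0.56, so both classes carry the same total weight of 50.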

Area 3 – ML Algorithms (“Depth”)

Don’t confuse ML algorithms (sometimes called “Depth”) with ML theory (“Breadth”). ML breadth covers the general understanding of machine learning, whereas ML depth assesses an in-depth understanding of a particular algorithm. For instance, you may have a dedicated round focused solely on random forests; Amazon, for example, may ask a whole set of questions on a single algorithm in one round.

Area 4 – Applied ML / Business Case

These questions assess how you solve ML cases in the context of a business problem. Scalability and productionization are not the main concern, as they are more relevant in ML system design rounds. Business cases can be assessed in various forms: a verbal explanation, or hands-on coding in Jupyter or Colab.

Area 5 – ML System Design

These assess the soundness and scalability of the ML system design. They are often assessed in the ML engineering interview, and you will be required to discuss the functional & non-functional requirements, architecture overview, data preparation, model training, model evaluation, and model productionization.

📚 ML Questions x Track (e.g. product analyst, data scientist, MLE)

Depending on the track, the type of ML questions you face will vary. Consider the following example questions posed in various roles:

- Product Analyst – Build a model that can predict the lifetime value of a customer

- Data Scientist (Generalist) – Build a fraud detection model using credit card transactions

- ML Engineering – Build a recommender system that can scale to 10 million daily active users

For product analyst roles, the emphasis is on applying ML to product analysis, user segmentation, and feature improvement. Rigor in scalable systems is not required, as most of the analysis is conducted on offline datasets.

For data scientist roles, you will most likely be assessed on ML breadth, depth, and business case challenges. Understanding scalable systems is not required unless the role is a more “full-stack” type of data science role.

For ML engineering roles, you will be asked coding, ML breadth and depth, and ML system design questions. You will most likely have dedicated rounds on ML coding and ML system design, with ML breadth and depth questions peppered throughout the interview process.

✍️ 7 Algorithms You Should Know

In general, you should have an in-depth understanding of the following algorithms, including their assumptions, applications, trade-offs, and parameter tuning. What matters most isn’t whether you understand 20+ ML algorithms; it’s whether you understand how to leverage these 7 algorithms in 20 different situations.

- Linear Regression

- Logistic Regression

- Decision Tree

- Random Forest

- Gradient Boosted Trees

- Dense Neural Networks
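Decision trees underpin three of the algorithms listed above (decision trees, random forests, and gradient boosted trees), so it helps to be able to explain how a single split is chosen. The sketch below finds the threshold on one numeric feature that minimizes weighted Gini impurity. The names `gini` and `best_split` are hypothetical, and real implementations search all features and add stopping rules.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_split(xs, ys):
    """Return (threshold, weighted_gini) for the best split `x <= threshold`.

    The threshold is None if no split improves on the unsplit node.
    """
    best = (None, gini(ys))
    for threshold in sorted(set(xs)):
        left = [y for x, y in zip(xs, ys) if x <= threshold]
        right = [y for x, y in zip(xs, ys) if x > threshold]
        if not left or not right:
            continue
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(ys)
        if score < best[1]:
            best = (threshold, score)
    return best
```

A tree applies this search recursively to each child node; random forests add bootstrapped rows and random feature subsets, while boosting fits successive trees to the previous trees' errors.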

📝 More Questions

- What is the difference between supervised and unsupervised learning?

- Can you explain the concept of overfitting and underfitting in machine learning models?

- What is cross-validation? Why is it important?

- Describe how a decision tree works. When would you use it over other algorithms?

- What is the difference between bagging and boosting?

- How would you validate a model you created to generate a predictive analysis?

- How does KNN work?

- What is PCA?

- How would you perform feature selection?

- What are the advantages and disadvantages of a neural network?

💡 Prep Tips

Tip 1 – Understand How ML Interviews Are Screened

The typical format is 20 to 40 minutes, either embedded in a technical phone screen or as a dedicated ML round within an onsite. You will be assessed by a Sr./Staff-level data scientist or ML engineer. You can also get coaching from an ML interviewer at FAANG companies: https://www.datainterview.com/coaching

Tip 2 – Practice Explaining Verbally

Interviewing is not a written exercise; it’s a verbal one. Whether the interviewer asks about ML concepts, a coding question, or ML system design, you will be expected to explain your thinking clearly and in detail. As you practice interview questions, practice answering them out loud.

Tip 3 – Join the Ultimate Prep

Get access to ML questions, cases and machine learning mock interview recordings when you join the interview program: Join the Data Science Ultimate Prep created by FAANG engineers/Interviewers


Top 15 Machine Learning Case Studies: Transforming Industries with Innovative Solutions

Last updated by Rishabh Dev Choudhary on Aug 30, 2024 at 05:16 PM | Reading time: 17 minutes


Data science case interviews (what to expect & how to prepare)

Data science case studies are tough to crack: they’re open-ended, technical, and specific to the company. Interviewers use them to test your ability to break down complex problems and your use of analytical thinking to address business concerns.

So we’ve put together this guide to help you familiarize yourself with case studies at companies like Amazon, Google, and Meta (Facebook), as well as how to prepare for them, using practice questions and a repeatable answer framework.

Here’s the first thing you need to know about tackling data science case studies: always start by asking clarifying questions, before jumping in to your plan.

Let’s get started.

- What to expect in data science case study interviews

- How to approach data science case studies

- Sample cases from FAANG data science interviews

- How to prepare for data science case interviews


1. What to expect in data science case study interviews

Before we get into an answer method and practice questions for data science case studies, let’s take a look at what you can expect in this type of interview.

Of course, the exact interview process for data scientist candidates will depend on the company you’re applying to, but case studies generally appear in both the pre-onsite phone screens and during the final onsite or virtual loop.

These questions may take anywhere from 10 to 40 minutes to answer, depending on the depth and complexity that the interviewer is looking for. During the initial phone screens, the case studies are typically shorter and interspersed with other technical and/or behavioral questions. During the final rounds, they will likely take longer to answer and require a more detailed analysis.

While some candidates may have the opportunity to prepare in advance and present their conclusions during an interview round, most candidates work with the information the interviewer offers on the spot.

1.1 The types of data science case studies

Generally, there are two types of case studies:

- Analysis cases, which focus on how you translate user behavior into ideas and insights using data. These typically center around a product, feature, or business concern that’s unique to the company you’re interviewing with.

- Modeling cases, which are more overtly technical and focus on how you build and use machine learning and statistical models to address business problems.

The number of case studies that you’ll receive in each category will depend on the company and the position that you’ve applied for. Facebook, for instance, typically doesn’t give many machine learning modeling cases, whereas Amazon does.

Also, some companies break these larger groups into smaller subcategories. For example, Facebook divides its analysis cases into two types: product interpretation and applied data.

You may also receive in-depth questions similar to case studies, which test your technical capabilities (e.g. coding, SQL), so if you’d like to learn more about how to answer coding interview questions, take a look here.

We’ll give you a step-by-step method that can be used to answer analysis and modeling cases in section 2. But first, let’s look at how interviewers will assess your answers.

1.2 What interviewers are looking for

We’ve researched accounts from ex-interviewers and data scientists to pinpoint the main criteria that interviewers look for in your answers. While the exact grading rubric will vary per company, this list from an ex-Google data scientist is a good overview of the biggest assessment areas:

- Structure: candidate can break down an ambiguous problem into clear steps

- Completeness: candidate is able to fully answer the question

- Soundness: candidate’s solution is feasible and logical

- Clarity: candidate’s explanations and methodology are easy to understand

- Speed: candidate manages time well and is able to come up with solutions quickly

You’ll be able to improve your skills in each of these categories by practicing data science case studies on your own, and by working with an answer framework. We’ll get into that next.

2. How to approach data science case studies

Approaching data science cases with a repeatable framework will not only add structure to your answer, but also help you manage your time and think clearly under the stress of interview conditions.

Let’s go over a framework that you can use in your interviews, then break it down with an example answer.

2.1 Data science case framework: CAPER

We've researched popular frameworks used by real data scientists, and consolidated them to be as memorable and useful in an interview setting as possible.

Try using the framework below to structure your thinking during the interview.

- Clarify: Start by asking questions. Case questions are ambiguous, so you’ll need to gather more information from the interviewer while eliminating irrelevant data. The types of questions you’ll ask will depend on the case, but consider: what is the business objective? What data can I access? Should I focus on all customers or just those in region X?

- Assume: Narrow the problem down by making assumptions and stating them to the interviewer for confirmation (e.g. the significance level is X%, users are segmented based on XYZ, etc.). By the end of this step, you should have constrained the problem to a clear goal.

- Plan: Now, begin to craft your solution. Take time to outline a plan, breaking it into manageable tasks. Once you’ve made your plan, explain each step that you will take to the interviewer, and ask if it sounds good to them.

- Execute: Carry out your plan, walking through each step with the interviewer. Depending on the type of case, you may have to prepare and engineer data, write code, apply statistical algorithms, build a model, etc. In the majority of cases, you will need to end with a business analysis.

- Review: Finally, tie your solution back to the business objectives you and the interviewer initially identified. Evaluate your solution, and consider whether there are any steps you could add or remove to improve it.

Now that you’ve seen the framework, let’s take a look at how to implement it.

2.2 Sample answer using the CAPER framework

Below you’ll find an answer to a Facebook data science interview question from the Applied Data loop. This example comes from Facebook’s data science interview prep materials, which you can find here.

Try this question:

Imagine that Facebook is building a product around high schools, starting with about 300 million users who have filled out a field with the name of their current high school. How would you find out how much of this data is real?

First, we need to clarify the question, eliminating irrelevant data and pinpointing what is most important. For example:

- What exactly does “real” mean in this context?

- Should we focus on whether the high school itself is real, or whether the user actually attended the high school they’ve named?

After discussing with the interviewer, we’ve decided to focus on whether the high school itself is real first, followed by whether the user actually attended the high school they’ve named.

Next, we’ll narrow the problem down and state our assumptions to the interviewer for confirmation. Here are some assumptions we could make in the context of this problem:

- The 300 million users are likely teenagers, given that they’re listing their current high school

- We can assume that a high school that is listed too few times is likely fake

- We can assume that a high school that is listed too many times (e.g. 10,000+ students) is likely fake

The interviewer has agreed with each of these assumptions, so we can now move on to the plan.

Next, it’s time to make a list of actionable steps and lay them out for the interviewer before moving on.

First, there are two approaches that we can identify:

- A high precision approach, which provides a list of people who definitely went to a confirmed high school

- A high recall approach, more similar to market sizing, which would provide a ballpark figure of people who went to a confirmed high school

As this is for a product that Facebook is currently building, the product use case likely calls for an estimate that is as accurate as possible. So we can go for the first approach, which will provide a more precise estimate of confirmed users listing a real high school.

Now, we list the steps that make up this approach:

- To find whether a high school is real: draw a distribution with the number of students on the X axis and the number of high schools on the Y axis, in order to find lower and upper bounds and eliminate schools that fall outside them

- To find whether a student really went to a high school: use a user’s friend graph and location to determine the plausibility of the high school they’ve named

The interviewer has approved the plan, which means that it’s time to execute.

4. Execute

Step 1: Determining whether a high school is real

Going off of our plan, we’ll first start with the distribution.

We can use x1 to denote the lower bound, below which the number of times a high school is listed would be too small for a plausible school. x2 then denotes the upper bound, above which the high school has been listed too many times for a plausible school.


Be prepared to answer follow-up questions. In this case, the interviewer may ask, “Looking at this graph, what do you think x1 and x2 would be?”

Based on this distribution, we could say that x1 is approximately the 5th percentile, or somewhere around 100 students. So, out of 300 million students, if fewer than 100 students list “Applebee” high school, then this is most likely not a real high school.

x2 is likely around the 95th percentile, or potentially as high as the 99th percentile. Based on intuition, we could estimate that number at around 10,000. So, if more than 10,000 students list “Applebee” high school, then it is most likely not real.

At this point, the interviewer may ask more follow-up questions, such as “how do we account for different high schools that share the same name?”

In this case, we could group by the schools’ name and location, rather than name alone. If the high school does not have a dedicated page that lists its location, we could deduce its location based on the city of the user that lists it.
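Step 1 can be sketched in code as follows, assuming each user contributes one `(school_name, city)` pair. The names `percentile` and `flag_implausible_schools` are hypothetical. Grouping by name and city keeps same-named schools in different places apart. The bounds default to the 5th and 95th percentiles of group sizes, but you can pass judgment-based values such as the 100 and 10,000 discussed above.

```python
import math
from collections import Counter


def percentile(sorted_values, q):
    """Nearest-rank percentile (q in [0, 100]) of an already sorted list."""
    if not sorted_values:
        raise ValueError("percentile of an empty list")
    rank = max(1, math.ceil(q * len(sorted_values) / 100))
    return sorted_values[rank - 1]


def flag_implausible_schools(listings, lower_q=5, upper_q=95, bounds=None):
    """Flag (name, city) groups whose listing count falls outside [x1, x2].

    `listings` is an iterable of (school_name, city) pairs, one per user.
    If `bounds` is given as (x1, x2), it overrides the percentile-based bounds.
    """
    counts = Counter((name.strip().lower(), city.strip().lower())
                     for name, city in listings)
    if bounds is None:
        sizes = sorted(counts.values())
        bounds = (percentile(sizes, lower_q), percentile(sizes, upper_q))
    x1, x2 = bounds
    flagged = {school for school, c in counts.items() if c < x1 or c > x2}
    return x1, x2, flagged
```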

Step 2: Determining whether a user went to the high school

A strong signal as to whether a user attended a specific high school would be their friend graph: a set number of friends would have to have listed the same current high school. For now, we’ll set that number at five friends.
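The friend-graph rule can be sketched as a simple count. The data structures here are assumptions: `friends` maps each user to a set of friend IDs, and `listed_school` maps each user to the `(name, city)` they listed. The function names are hypothetical, and the threshold of five comes from the case above.

```python
def friends_at_same_school(user, friends, listed_school):
    """Count the user's friends who list the same (school name, city) as the user."""
    school = listed_school.get(user)
    if school is None:
        return 0
    return sum(1 for friend in friends.get(user, ())
               if listed_school.get(friend) == school)


def plausibly_attends(user, friends, listed_school, min_friends=5):
    """Apply the 'at least five friends list the same school' rule."""
    return friends_at_same_school(user, friends, listed_school) >= min_friends
```

Making `min_friends` a parameter keeps the threshold easy to revisit if the interviewer pushes back on the choice of five.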

Don’t forget to call out trade-offs and edge cases as you go. In this case, there could be a student who has recently moved, and so the high school they’ve listed does not reflect their actual current high school.

To solve this, we could rely on users to update their location to reflect the change. If users do not update their location and high school, this would present an edge case that we would need to work out later.

To conclude, we could use the data from both the friend graph and the initial distribution to confirm the two signifiers: a high school is real, and the user really went there.

If enough users in the same location list the same high school, then it is likely that the high school is real, and that the users really attend it. If there are not enough users in the same location that list the same high school, then it is likely that the high school is not real, and the users do not actually attend it.

3. Sample cases from FAANG data science interviews

Having worked through the sample problem above, try out the different kinds of case studies that have been asked in data science interviews at FAANG companies. We’ve divided the questions into types of cases, as well as by company.

For more information about each of these companies’ data science interviews, take a look at these guides:

- Facebook data scientist interview guide

- Amazon data scientist interview guide

- Google data scientist interview guide

Now let’s get into the questions. This is a selection of real data scientist interview questions, according to data from Glassdoor.

Data science case studies

Facebook - Analysis (product interpretation)

- How would you measure the success of a product?

- What KPIs would you use to measure the success of the newsfeed?

- Friends acceptance rate decreases 15% after a new notifications system is launched - how would you investigate?

Facebook - Analysis (applied data)

- How would you evaluate the impact for teenagers when their parents join Facebook?

- How would you decide to launch or not if engagement within a specific cohort decreased while all the rest increased?

- How would you set up an experiment to understand feature change in Instagram stories?

Amazon - modeling

- How would you improve a classification model that suffers from low precision?

- When you have time series data by month, and it has large data records, how will you find significant differences between this month and previous month?

Google - Analysis

- You have a google app and you make a change. How do you test if a metric has increased or not?

- How do you detect viruses or inappropriate content on YouTube?

- How would you compare if upgrading the android system produces more searches?

4. How to prepare for data science case interviews

Understanding the process and learning a method for data science cases will go a long way in helping you prepare. But this information is not enough to land you a data science job offer.

To succeed in your data scientist case interviews, you're also going to need to practice under realistic interview conditions so that you'll be ready to perform when it counts.

For more information on how to prepare for data science interviews as a whole, take a look at our guide on data science interview prep.

4.1 Practice on your own

Start by answering practice questions alone. You can use the list in section 3 and interview yourself out loud. This may sound strange, but it will significantly improve the way you communicate your answers during an interview.

Play the role of both the candidate and the interviewer, asking questions and answering them, just like two people would in an interview. This will help you get used to the answer framework and get used to answering data science cases in a structured way.

4.2 Practice with peers

Once you’re used to answering questions on your own, a great next step is to do mock interviews with friends or peers. This will help you adapt your approach to accommodate follow-ups and answer questions you haven’t already worked through.

This can be especially helpful if your friend has experience with data scientist interviews, or is at least familiar with the process.

4.3 Practice with ex-interviewers

Finally, you should also try to practice data science mock interviews with expert ex-interviewers, as they’ll be able to give you much more accurate feedback than friends and peers.

If you know a data scientist or someone who has experience running interviews at a big tech company, then that's fantastic. But for most of us, it's tough to find the right connections to make this happen. And it might also be difficult to practice multiple hours with that person unless you know them really well.

Here's the good news. We've already made the connections for you. We’ve created a coaching service where you can practice 1-on-1 with ex-interviewers from leading tech companies. Learn more and start scheduling sessions today.


Machine learning (ML) has become central to every big company’s operations, whether Facebook, Google, or Microsoft. Its ability to automate tasks and solve complex problems has made it one of the most popular technologies in multiple fields, such as marketing, finance, software, transportation, and healthcare.

So machine learning specialists are in high demand. Recent statistics project that the global ML market will reach USD 79.29 billion by the end of 2024 and grow at an annual rate of 36.08%, resulting in a market volume of USD 503.4 billion by 2030.

Competition is high, and to stand out from millions, you’ll have to ace the machine learning interviews that come your way.

What are employers looking for?

Employers are looking for well-rounded professionals who are polished when it comes to their knowledge, adaptability, and ambition in the field of machine learning. Before the interview prep, look at the skills you should have on a broader spectrum.

Technical skills

A strong foundation in mathematics is necessary for machine learning. Linear algebra, statistics, probability, and calculus are just some concepts you must be proficient in—these are the backbone of most ML algorithms.

Fluency in programming languages such as Python and R is a requirement, and a grasp of ML libraries and frameworks such as TensorFlow, PyTorch, scikit-learn, and pandas is valuable, especially for statistical analysis, data manipulation, numerical computing, and more.

Technical skills also involve knowledge of programming, data processing, model evaluation, etc. Familiarity with end-to-end model deployment is also necessary, as is experience with big data technologies such as Hadoop and Spark and cloud platforms such as AWS, Google Cloud, and Azure.

Practical experience

Employers prefer candidates who don’t just hoard knowledge but know how to apply what they learn effectively. Practical experience demonstrates your expertise: end-to-end ML projects, domain-specific projects, big data, and distributed computing are all areas where you can prove it.

Collaborative projects within a team and participating in competitions and hackathons are other interesting ways you can showcase your passion and knack for problem-solving to your future employer.

Knowing the business

Whatever industry you are applying in, you need to understand it beyond the surface. Employers look for machine learning professionals who prioritize business goals, and you can only design effective machine learning solutions if you are familiar with the domain you are working in.

Ethical awareness

Machine learning and AI carry multiple ethical implications that employers are increasingly aware of, such as:

Bias and transparency: ML models can perpetuate or amplify biases present in training data, leading to various consequences, such as unfair treatment of individuals in areas like hiring and law enforcement. For this reason, AI decisions should be transparent, especially in high-stakes applications. Understanding responsible AI helps prevent such biases.

Legal and regulatory compliance: AI systems are increasingly under scrutiny by regulators, and aspiring ML professionals must ensure that the models they create comply with user privacy and other associated laws.

Long-term viability: Negative outcomes such as discrimination and harm are always possible with irresponsible AI. ML professionals must design robust systems that align with societal values. Responsible AI practices ensure that ML and AI technologies are sustainable for the long term, avoiding unethical practices.

Innovation: Responsible AI is a competitive advantage. Companies whose ML professionals push forward with strong values can differentiate themselves from competitors, driving innovation toward more reliable and resilient systems.

Risk management: AI is not invincible, and in cases where AI systems may fail or cause harm, a strong foundation that can help minimize damage is necessary.

Machine learning interview format

Companies may have their own formats for interviewing candidates for a machine learning interview . This brief overview can help you know what to expect once you have been shortlisted.

Initial screening

The initial screening round is usually non-technical and conducted by a recruiter or a hiring manager. The main objective is to check whether the candidate qualifies for the minimum requirements for the role. You will be asked about yourself, your work history, and other relevant qualifications.

You may be asked basic technical questions about ML concepts such as algorithm types, evaluation metrics, or statistics. For this stage, you must be familiar with the information you’ve put down in your resume and be honest about your skills.

Technical screening

The technical screening, also known as the algorithm design or coding round, aims to identify candidates who can write and optimize code.

You may face a coding challenge where you must solve programming problems within a time limit on a shared coding platform such as HackerRank, CodeChef, or LeetCode. In this case, the interviewer will not be involved.

Alternatively, you could end up with a coding interview where you are asked questions on machine learning topics such as decision trees, neural networks, and regularization. The questions may also cover math and statistics to gauge your foundational understanding; your problem-solving approach, fluency, and skills will be tested.

Machine learning round

If you make it to this round, be prepared for a deep dive. Here, you will be tested on both your fundamental and in-depth knowledge of machine learning.

The focus could be on designing a model, explaining how you would handle a data-related challenge, or you may be given a dataset and asked to analyze it and explain your approach. You must be familiar with machine learning libraries such as Keras, NumPy, and Scikit-learn to help you with the tests in this round.

Some commonly asked questions will revolve around machine learning concepts such as linear regression, logistic regression, SVM, KNN, ensemble learning methods, artificial neural networks (ANN), clustering, recurrent neural networks (RNN), feature engineering, data processing and visualization, loss functions, error metrics, and more.

Don’t forget system design and its associated concepts, such as data ingestion, model training, deployment, and monitoring. You may also be given coding and algorithm tasks and tested on your ability to implement machine learning algorithms from scratch.

Project presentation

Depending on the industry you are applying to, you may face a technical presentation round where you present a past project to a panel of experts, or you may be given a machine learning case study rooted in a real-world problem to solve. Besides your technical insight and problem-solving skills, your communication will be a key part of the evaluation.

Final round

Pat yourself on the back for reaching the final round, but don’t get too comfortable just yet. Besides the interviewer evaluating your career goals and whether you’ll be a cultural fit, you may be faced with a technical discussion around your approach to machine learning, knowledge of industry trends, and what your strategic approach to projects looks like.

Common machine learning interview questions

What are the different types of machine learning (ML)?

There are three main types of machine learning: supervised learning, unsupervised learning, and reinforcement learning.

Supervised: The model learns a function that maps an input to an output based on example input-output pairs. It is trained on labeled data and then used to make predictions on new, unseen data.

Unsupervised: The model analyzes unlabeled datasets without human intervention. It is trained on data that contains only inputs, without corresponding output labels, and is primarily used to extract features, identify structures and trends, and group similar data points.

Reinforcement: Software agents and machines learn the optimal behavior in a specific context or environment by taking actions and receiving feedback in the form of rewards or penalties, with the goal of maximizing their cumulative reward.
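The difference between the first two types shows up directly in code. The sketch below assumes scikit-learn and a tiny made-up dataset (neither comes from this article): the classifier is fit on inputs and labels, while k-means receives only the inputs. Reinforcement learning needs an environment and a reward loop, so it is left out of this minimal example.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.linear_model import LogisticRegression

# Tiny synthetic dataset: two well-separated groups of 2-D points.
X = np.array([[0.0, 0.0], [0.2, 0.1], [0.1, 0.3],
              [5.0, 5.0], [5.2, 4.9], [4.8, 5.1]])
y = np.array([0, 0, 0, 1, 1, 1])  # labels, used only by the supervised model

# Supervised: learn a mapping from inputs to known labels.
clf = LogisticRegression().fit(X, y)
supervised_pred = clf.predict([[0.1, 0.2], [5.1, 5.0]])

# Unsupervised: group the same inputs without ever seeing y.
km = KMeans(n_clusters=2, n_init=10, random_state=0).fit(X)
cluster_ids = km.labels_

print("supervised predictions:", supervised_pred)
print("cluster assignments:", cluster_ids)
```

k-means numbers its clusters arbitrarily, so only the grouping matters, not whether a group is called 0 or 1.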

Differentiate between training sets and test sets.

Creating a model is typically a three-step process: training, testing, and deployment. The training set provides the examples the machine learning model learns from, while the test set is held out from training and used to judge the accuracy of the hypothesis the model has learned, giving an unbiased estimate of how it will perform on unseen data.
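A minimal sketch of holding out a test set, assuming scikit-learn, its bundled Iris dataset, and an 80:20 split chosen only for illustration:

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

X, y = load_iris(return_X_y=True)

# Hold out 20% of the rows; the model never sees them during fitting.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

model = LogisticRegression(max_iter=1000).fit(X_train, y_train)

# Training accuracy is optimistic; test accuracy estimates performance on unseen data.
train_acc = model.score(X_train, y_train)
test_acc = model.score(X_test, y_test)
print(f"train accuracy: {train_acc:.3f}, test accuracy: {test_acc:.3f}")
```

Fixing `random_state` makes the split reproducible, and `stratify=y` keeps the class proportions the same in both sets.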

What’s the difference between deep learning, AI, and machine learning?

Artificial intelligence (AI) is the concept of simulated human intelligence in machines that can be programmed to think, learn, and solve problems. Machine learning is a subset of AI that creates algorithms and statistical models to learn patterns from data and make predictions or decisions based on them. Lastly, deep learning is a subset of machine learning that uses neural networks with multiple layers to model complex patterns in large amounts of data.

What is overfitting, and how do you avoid it?

Overfitting occurs when a machine learning model learns the training set so closely that it treats noise and random fluctuations in the training data as meaningful patterns. As a result, the model fails to generalize to new, unseen data and performs poorly on the test set and in real-world applications.

Common ways to avoid overfitting include regularization techniques such as LASSO, simpler models with fewer variables and parameters, data augmentation, dropout (for neural networks), cross-validation methods such as k-fold, and pruning decision trees.
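To see overfitting and one remedy side by side, the sketch below (assuming scikit-learn and a synthetic dataset with deliberately noisy labels) compares an unrestricted decision tree with one whose depth is capped, a simple form of pre-pruning, and reports 5-fold cross-validation accuracy alongside train and test accuracy:

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import cross_val_score, train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic data; flip_y=0.2 assigns random classes to about 20% of samples (noise).
X, y = make_classification(n_samples=500, n_features=20, n_informative=5,
                           flip_y=0.2, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0)

results = {}
for name, depth in [("unlimited depth", None), ("max_depth=3", 3)]:
    tree = DecisionTreeClassifier(max_depth=depth, random_state=0)
    # Mean accuracy over 5 cross-validation folds of the training split.
    cv_acc = cross_val_score(tree, X_train, y_train, cv=5).mean()
    tree.fit(X_train, y_train)
    results[name] = (tree.score(X_train, y_train), tree.score(X_test, y_test), cv_acc)
    train_acc, test_acc, _ = results[name]
    print(f"{name}: train={train_acc:.2f}, test={test_acc:.2f}, cv={cv_acc:.2f}")
```

The unrestricted tree scores perfectly on the training data because it memorizes the noisy labels; the gap between its training accuracy and its test or cross-validation accuracy is the signature of overfitting. Exact numbers depend on the data and seed.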

When will you use classification over regression?

Classification is used when the goal is to predict a categorical outcome, while regression is used when the target variable is continuous. Both are supervised machine learning tasks.

Final words

If you are confident in your machine learning knowledge and skills, the only course of action is to stay calm and walk into that interview with your head high.

Do a last review of the machine learning fundamentals with Educative’s Machine Learning Theory and Practice module.


Copyright © 2024 Educative, Inc. All rights reserved.


Machine Learning Interview Questions & Answers

Machine learning is a subfield of artificial intelligence that involves developing algorithms and statistical models that enable computers to improve their performance on tasks through experience. As a result, machine learning is expected to be one of the most in-demand careers in the coming years.

If you are preparing for your next machine learning interview, this article is a one-stop destination for you. We will discuss the top 50+ most frequently asked machine learning interview questions for 2024, focusing on real-life situations and questions commonly asked by companies like Google, Microsoft, and Amazon.

In this article, we’ve covered a wide range of machine learning questions for both freshers and experienced individuals, ensuring thorough preparation for your next ML interview.

Table of Contents

Machine Learning Interview Questions For Freshers

1. How is machine learning different from general programming?
2. What are some real-life applications of clustering algorithms?
3. How do you choose the optimal number of clusters?
4. What is feature engineering? How does it affect the model’s performance?
5. What is a hypothesis in machine learning?
6. How do we measure the effectiveness of the clusters?
7. Why do we use smaller values of the learning rate?
8. What is overfitting in machine learning, and how can it be avoided?
9. Why can’t we use linear regression for a classification task?
10. Why do we perform normalization?
11. What is the difference between precision and recall?
12. What is the difference between upsampling and downsampling?
13. What is data leakage, and how can we identify it?
14. Explain the classification report and the metrics it includes.
15. What are some of the hyperparameters of the random forest regressor that help to avoid overfitting?
16. What is the bias-variance tradeoff?
17. Is it always necessary to use an 80:20 ratio for the train-test split?
18. What is principal component analysis?
19. What is one-shot learning?
20. What is the difference between Manhattan distance and Euclidean distance?
21. What is the difference between covariance and correlation?
22. What is the difference between one-hot encoding and ordinal encoding?
23. How can you identify whether the model has overfitted the training data?
24. What can you conclude about the model’s performance from the confusion matrix?
25. What is the use of the violin plot?
26. What are the five statistical measures represented in a boxplot?
27. What is the difference between stochastic gradient descent (SGD) and gradient descent (GD)?
28. What is the central limit theorem?

Advanced Machine Learning Interview Questions

29. Explain the working principle of SVM.
30. What is the difference between the k-means and k-means++ algorithms?
31. Explain some measures of similarity that are generally used in machine learning.
32. What happens to the mean, median, and mode when your data distribution is right-skewed and left-skewed?
33. Which is more robust to outliers: a decision tree or a random forest?
34. What is the difference between L1 and L2 regularization, and what is their significance?
35. What is a radial basis function? Explain its use.
36. Explain the SMOTE method used to handle data imbalance.
37. Is the accuracy score always a good metric to measure the performance of a classification model?
38. What is KNN imputer?
39. Explain the working procedure of the XGB model.
40. What is the purpose of splitting a given dataset into training and validation data?
41. Explain some methods to handle missing values in the data.
42. What is the difference between k-means and the KNN algorithm?
43. What is linear discriminant analysis?
44. How can we visualize high-dimensional data in 2D?
45. What is the reason behind the curse of dimensionality?
46. Which metric is more robust to outliers: MAE, MSE, or RMSE?
47. Why is removing highly correlated features considered a good practice?
48. What is the difference between content-based and collaborative filtering algorithms in recommendation systems?

These ML questions are also useful for anyone looking for a quick revision of machine learning concepts.

1. How is machine learning different from general programming?

In general programming, we have the data and the logic, and we use the two to produce the answers. In machine learning, we have the data and the answers, and we let the machine learn the logic from them so that the same logic can be used to answer questions it will face in the future.

Also, for some problems it is not practical to write the logic by hand. In those cases, machine learning comes to the rescue by learning the logic itself.
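The Celsius-to-Fahrenheit conversion is a convenient toy case for this contrast (our example, not the article’s). The sketch assumes NumPy and scikit-learn; the example answers are generated with the known rule only so that there is data to learn from, whereas in practice they would come from measurements.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# General programming: a human writes the rule explicitly.
def fahrenheit_rule(celsius):
    return celsius * 1.8 + 32

# Machine learning: we supply inputs (data) and outputs (answers)...
celsius = np.array([[-40.0], [0.0], [10.0], [25.0], [37.0], [100.0]])
fahrenheit = fahrenheit_rule(celsius).ravel()

# ...and the model infers the rule (slope and intercept) from the examples.
model = LinearRegression().fit(celsius, fahrenheit)
print("learned slope:", round(model.coef_[0], 3))        # close to 1.8
print("learned intercept:", round(model.intercept_, 3))  # close to 32.0

# The learned logic can then answer new questions.
print("predicted F for 50 C:", model.predict([[50.0]])[0])
```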

2. What are some real-life applications of clustering algorithms?

Clustering can be used in many domains of data science, such as image classification, customer segmentation, and recommendation engines. One of the most common uses is in market research and customer segmentation, where the resulting segments are used to target particular market groups to grow the business and improve profitability.

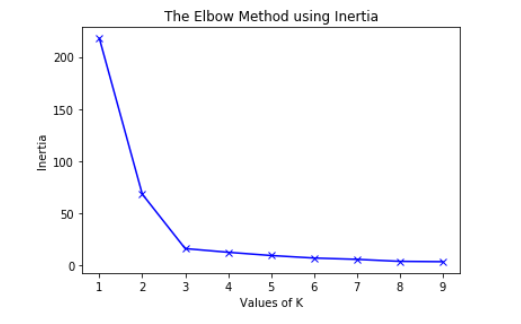

3. How do you choose the optimal number of clusters?

We can use the elbow method to decide the optimal number of clusters the clustering algorithm should try to form. The main principle behind this method is that as we increase the number of clusters, the error value decreases.

However, beyond an optimal number of clusters, the decrease in the error value becomes insignificant. The point after which this starts to happen is chosen as the optimal number of clusters for the algorithm to form.

Figure: Elbow method

The optimal number of clusters from the above figure is 3.
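A sketch of the elbow method, assuming scikit-learn’s `KMeans`, whose `inertia_` attribute is the within-cluster sum of squared distances, and synthetic data generated around three centers for illustration:

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs

# Synthetic 2-D data drawn around 3 centers (an illustrative assumption).
X, _ = make_blobs(n_samples=300, centers=3, cluster_std=0.8, random_state=42)

# Fit k-means for k = 1..8 and record the inertia (within-cluster sum of squares).
inertias = {}
for k in range(1, 9):
    km = KMeans(n_clusters=k, n_init=10, random_state=42).fit(X)
    inertias[k] = km.inertia_
    print(f"k={k}: inertia={inertias[k]:.1f}")
```

Plotting inertia against k (for example with matplotlib) should show steep drops up to k = 3 and a much flatter curve afterwards; that bend is the elbow.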

4. What is feature engineering? How does it affect the model’s performance?

Feature engineering refers to creating new features from existing ones. Sometimes there is a subtle mathematical relationship between features which, if explored properly, can be used to derive new features through those mathematical operations.

Also, multiple pieces of information are sometimes clubbed together and provided as a single data column. In such cases, developing new features helps us gain deeper insights into the data, and if the derived features are significant enough, they can improve the model’s performance considerably.
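The two situations above can be sketched with pandas. The column names and values are hypothetical: body mass index is derived from height and weight through a known formula, and a combined timestamp column is split into month, hour, and a weekend flag.

```python
import pandas as pd

# Hypothetical raw data: two columns hide a useful ratio, and one column
# clubs several pieces of information together.
df = pd.DataFrame({
    "height_m": [1.60, 1.75, 1.82],
    "weight_kg": [55.0, 80.0, 95.0],
    "order_timestamp": ["2024-01-15 08:30", "2024-03-02 19:45", "2024-07-20 12:00"],
})

# 1) A mathematical relation between existing features: body mass index.
df["bmi"] = df["weight_kg"] / df["height_m"] ** 2

# 2) Splitting a combined column into separate, more informative features.
ts = pd.to_datetime(df["order_timestamp"])
df["order_month"] = ts.dt.month
df["order_hour"] = ts.dt.hour
df["is_weekend"] = ts.dt.dayofweek >= 5  # Monday=0, so 5 and 6 are Saturday/Sunday

print(df[["bmi", "order_month", "order_hour", "is_weekend"]])
```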

5. What is a hypothesis in machine learning?

A hypothesis is a term generally used in supervised machine learning. Given independent features and a target variable, we try to find a function that approximately maps the feature space to the target variable; this approximate mapping is known as a hypothesis.
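In symbols, taking a linear model purely as an example, the hypothesis is a function $h_\theta$ from the feature space $\mathcal{X}$ to the target space $\mathcal{Y}$, and training chooses the parameters $\theta$ so that $h_\theta(\mathbf{x})$ approximates the target $y$:

```latex
h_\theta : \mathcal{X} \to \mathcal{Y}, \qquad
h_\theta(\mathbf{x}) = \theta_0 + \theta_1 x_1 + \theta_2 x_2 + \dots + \theta_n x_n \approx y
```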

6. How do we measure the effectiveness of the clusters?

Metrics include inertia, or the sum of squared errors (SSE), the silhouette score, and L1 and L2 scores. Of these, inertia (SSE) and the silhouette score are the most commonly used for measuring the effectiveness of clusters.

The silhouette score, however, is quite expensive to compute, since it relies on distances between pairs of points. The score is high when the clusters formed are dense and well separated.
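Both metrics are available in scikit-learn. The sketch below (assuming scikit-learn and synthetic three-center data) computes inertia and the silhouette score for several values of k; the silhouette score is only defined for at least two clusters.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.metrics import silhouette_score

# Synthetic 2-D data drawn around 3 centers (an illustrative assumption).
X, _ = make_blobs(n_samples=300, centers=3, cluster_std=0.8, random_state=42)

scores = {}
for k in range(2, 7):  # silhouette needs at least 2 clusters
    km = KMeans(n_clusters=k, n_init=10, random_state=42).fit(X)
    scores[k] = {"inertia": km.inertia_,
                 "silhouette": silhouette_score(X, km.labels_)}
    print(f"k={k}: inertia={scores[k]['inertia']:.1f}, "
          f"silhouette={scores[k]['silhouette']:.3f}")

# Higher silhouette means denser, better-separated clusters.
best_k = max(scores, key=lambda k: scores[k]["silhouette"])
print("k with the highest silhouette score:", best_k)
```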

7. Why do we use smaller values of the learning rate?

Smaller values of the learning rate help the training process converge slowly and gradually toward the global optimum instead of fluctuating around it. A smaller learning rate results in smaller updates to the model weights at each iteration, which helps keep the updates precise and stable. If the learning rate is too large, the weights can change too much in a single step, causing the training process to overshoot the global optimum and miss it entirely.

So, to avoid this oscillation of the error value and reach the best weights for the model, it is necessary to use a sufficiently small learning rate, keeping in mind that a rate that is too small makes training very slow.
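The effect can be seen on a toy loss. This sketch assumes the function f(w) = w², whose minimum is at w = 0 and whose gradient is 2w, and runs plain gradient descent with three learning rates:

```python
def gradient_descent(learning_rate, w0=5.0, steps=20):
    """Minimize the toy loss f(w) = w**2 (gradient 2*w); return the trajectory of w."""
    w = w0
    history = [w]
    for _ in range(steps):
        w = w - learning_rate * 2 * w  # update rule: w <- w - lr * f'(w)
        history.append(w)
    return history

for lr in (0.1, 0.9, 1.1):
    path = gradient_descent(lr)
    print(f"lr={lr}: first steps {[round(v, 2) for v in path[:4]]}, final w = {path[-1]:.4g}")
```

Each update multiplies w by (1 - 2 × learning rate). With 0.1 the factor is 0.8 and w shrinks steadily; with 0.9 it is -0.8, so w flips sign at every step and oscillates around the minimum while still converging; with 1.1 it is -1.2, so each step overshoots further and the process diverges.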

8. What is overfitting in machine learning, and how can it be avoided?

Overfitting happens when the model learns the noise present in the data as well as the underlying patterns. This leads to high performance on the training data but poor performance on data the model has not seen before. There are multiple methods we can use to avoid overfitting:

- Early stopping: halt training when the performance on the validation set stops improving even though the training performance keeps improving.

- Regularization: L1 or L2 regularization penalizes large model weights to discourage overfitting.
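Early stopping is usually driven by a patience counter. The function below is a hypothetical, framework-independent sketch: it scans per-epoch validation losses and reports when training would stop and which epoch’s weights would be kept. Deep learning libraries provide built-in equivalents, which are not shown here.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return (stop_epoch, best_epoch) for a sequence of per-epoch validation losses.

    Training stops once the validation loss has failed to improve by more than
    min_delta for `patience` consecutive epochs; best_epoch is the epoch whose
    weights would be kept.
    """
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch
    # Patience never ran out: training used every epoch.
    return len(val_losses) - 1, best_epoch

# Hypothetical validation losses: improving at first, then rising as the model overfits.
val_losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61, 0.67]
print(early_stopping_epoch(val_losses, patience=3))  # (6, 3)
```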

9. Why can’t we use linear regression for a classification task?

The main reason we cannot use linear regression for a classification task is that the output of linear regression is continuous and unbounded, while classification requires discrete and bounded output values.
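A small sketch of the problem, assuming scikit-learn and a made-up one-dimensional dataset: linear regression fit on 0/1 labels produces values below 0 and above 1 for inputs outside the training range, whereas logistic regression’s `predict_proba` stays within [0, 1].

```python
import numpy as np
from sklearn.linear_model import LinearRegression, LogisticRegression

# Hypothetical 1-D binary classification data: label 1 for larger x.
X = np.array([[1.0], [2.0], [3.0], [4.0], [6.0], [7.0], [8.0], [9.0]])
y = np.array([0, 0, 0, 0, 1, 1, 1, 1])

linear_model = LinearRegression().fit(X, y)
logit_model = LogisticRegression().fit(X, y)

X_new = np.array([[-10.0], [5.0], [30.0]])
lin_out = linear_model.predict(X_new)             # unbounded: can leave [0, 1]
log_out = logit_model.predict_proba(X_new)[:, 1]  # probabilities of class 1, in [0, 1]

print("linear regression outputs:", np.round(lin_out, 2))
print("logistic regression P(y=1):", np.round(log_out, 3))
```

For this data the fitted line is y = x/6 - 1/3, so it predicts -2.0 at x = -10 and about 4.67 at x = 30, neither of which is a valid class label or probability.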