
What is data modeling? A Visual Introduction with Examples

In their simplest form, data models are diagrams that show 3 dimensions: 1. what data an organization collects, 2. in which section of the organization it is collected, and 3. how each section’s data relates to others. Data modeling (modelling) is the process of creating those data models.

But for most people, this description isn’t very helpful, and it makes things seem more complex than they really are. I believe data models are easiest to understand in context, so let’s consider a business.

Businesses consist of three fundamental units: suppliers, products, and customers. Each of these units consists of data collected independently. For example, the sales team records customer name, location, and sale amount, whereas the production team records product name, price, and size.

Businesses usually want to compile this data in a company database, which allows them to analyze it and better serve their customers.

To do so, they develop data models to understand what data they have, where it is, and how it all relates. Only then are they confident enough to put it in a database. Since every business is different, data modeling is the process of creating a data model that meets the specific structure of the business in question.

Data models: data tables, data objects, & databases

To understand data models and how to build them, you need to know about data tables, data objects, and databases.

A data table is a table of columns and rows in which the leftmost column is a unique ID (aka primary key), and the columns to its right are characteristics of that unique ID. Data tables are what most people think of when they hear “data.” For example, look at this data table of vendor data:

| Vendor ID | Avg. sale ($) | Last Purchase | Number of items purchased |
|---|---|---|---|
| MetalOne Inc. | 100 | September 4, 2020 | 5 |
| Dynamic Metal Inc. | 50 | February 10, 2020 | 2 |
| ForceFive Metals Inc. | 75 | March 25, 2020 | 3 |

A database consists of many data tables either compiled into one big table or stored individually. The reason we consider multiple individual tables as part of one database is that they relate to each other. If not, they’re just different tables in space.
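To make the idea of a data table with a primary key concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The table name `vendor` and the column names are my own illustrative choices, not part of any real system; the rows come from the vendor table above.

```python
import sqlite3

# In-memory database; the table and column names are illustrative assumptions.
conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE vendor (
        vendor_id       TEXT PRIMARY KEY,  -- the unique ID (primary key)
        avg_sale_usd    REAL,
        last_purchase   TEXT,              -- date stored as an ISO string
        items_purchased INTEGER
    )
    """
)
rows = [
    ("MetalOne Inc.", 100, "2020-09-04", 5),
    ("Dynamic Metal Inc.", 50, "2020-02-10", 2),
    ("ForceFive Metals Inc.", 75, "2020-03-25", 3),
]
conn.executemany("INSERT INTO vendor VALUES (?, ?, ?, ?)", rows)


def try_insert_duplicate(connection):
    """Return True if the database rejects a repeated primary key."""
    try:
        connection.execute(
            "INSERT INTO vendor VALUES (?, ?, ?, ?)",
            ("MetalOne Inc.", 10, "2021-01-01", 1),
        )
    except sqlite3.IntegrityError:
        return True
    return False


duplicate_rejected = try_insert_duplicate(conn)
vendor_count = conn.execute("SELECT COUNT(*) FROM vendor").fetchone()[0]
```

The point of the sketch is the last few lines: because `vendor_id` is the primary key, the database refuses a second row with the same ID, which is what makes it a reliable "unique ID."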


The important thing to see here is that one database can also have multiple data models. As we said, a data model is just a combination of data tables that relate to each other. Once you store them together, they’re a model. And since a database can store a huge number of data tables, it can store multiple models. This sounds complicated, but it’s easy to understand with a picture:

Data object is another name for a data table within a database. The reason we use a separate name is that, at more advanced levels, data models contain objects other than tables (but that’s outside the scope of this article). You will often hear or read data analysts refer to “data objects.” Nine times out of ten, they’re referring to tables, but you should be aware that there are others as you progress as a data analyst.

Types of Data Models with Real-World Example

So, we know that data models are pictorial representations of the contents and relationships of data tables. But there is no one-size-fits-all data model, especially in a business.

C-level executives don’t want to see the gritty details behind the model. They just want to see the high-level relationships. At the same time, a database analyst wants as much detail as possible to ensure the relationships are correct.

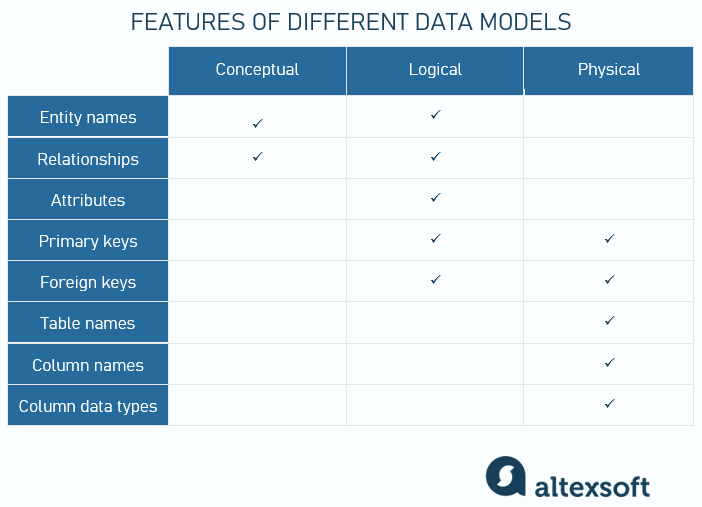

The need for different views has led to three primary types of data models:

- Conceptual Data Models. High-level.

- Logical Data Models. Mid-level.

- Physical Data Models. Low-level.

Though they differ, each of these models consists of at least one of these elements:

- Entities. Entities represent data tables (or more generally, data objects) that contain data relevant for comparison.

- Primary keys. A primary key is the unique ID of the data table within an entity.

- Attributes. Attributes show additional information stored under each unique ID in an entity.

- Relationships. Relationships are shown by lines and symbols and explain how entities interact. The most common are “one to many,” “one to one,” and “many to many.” The number of records on each side of a relationship is also referred to as cardinality. The notation for these relationships is called crow’s foot notation, and it’s very simple. Here are the most important examples:
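If the relationship types feel abstract, a small sketch may help. The function below is a hypothetical helper (the name `classify_relationship` is my own) that looks at a sample of links between two entities and reports which cardinality the sample shows. It only describes the data it is given, so treat the result as an observation rather than a design rule.

```python
from collections import defaultdict


def classify_relationship(pairs):
    """Classify cardinality from (a_id, b_id) pairs linking entity A to entity B."""
    b_per_a = defaultdict(set)
    a_per_b = defaultdict(set)
    for a_id, b_id in pairs:
        b_per_a[a_id].add(b_id)
        a_per_b[b_id].add(a_id)
    # Does any A link to several Bs? Does any B link to several As?
    a_has_many = any(len(linked) > 1 for linked in b_per_a.values())
    b_has_many = any(len(linked) > 1 for linked in a_per_b.values())
    if a_has_many and b_has_many:
        return "many to many"
    if a_has_many:
        return "one to many"
    if b_has_many:
        return "many to one"
    return "one to one"


# Hypothetical vendor-to-product links: vendor v1 supplies two products.
vendor_product_links = [("v1", "p1"), ("v1", "p2"), ("v2", "p3")]
observed = classify_relationship(vendor_product_links)
```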

Real-World Example

Imagine you own a wholesale e-commerce company that sells watches, and it’s called Batch Watch. Your three business units are vendors, products, and customers. You buy metal and glass from the vendors to build your high-quality watches, then sell them to boutiques and other retail stores. And let me tell you… people love your watches!

Let’s look at conceptual, logical, and physical data models using this example.

Conceptual Data Model

Conceptual models are the most general of the three. You don’t need to be a data analyst to understand them. Conceptual data models show the high-level business units that collect data, but do not show any information about the contents. They sometimes include pictures to more easily communicate their structure.

Using our Batch Watch example, a conceptual model may look as simple as this:

Logical Data Model

Logical models go a step further than conceptual models to show the primary key and attributes within each entity, as well as the relationships between them. Logical data models are the most common type of data model.

To understand logical data models, let’s look at our three entities in Batch Watch and their primary keys and attributes. Then we can see how they’re related with crow’s foot notation.

As you can see, the primary key (PK) for each entity is a unique ID of the key component. The attributes under them provide a view on what data is stored within these data tables.

We saw a simplistic view of relationships in the conceptual model, and now we’ll add more detail with crow’s foot notation. What will this help us understand? It will show how many primary keys in each entity link to primary keys in the other entities. Remember, the most common of these is “one to many.”

In the case of Batch Watch, each vendor supplies general materials for many of our watches (products), or for only one of our watches. This is because one watch requires a special kind of glass. Each product then sells to many retailers.

Using crow’s foot notation, it looks like this in a data model:

The logical model thus helps us understand that “one and only one” Vendor ID (along with its attributes) links to “one or many” Product IDs. Then, one and only one Product ID links to many Retailer IDs.
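For readers who prefer code to diagrams, here is a rough sketch of the same logical model as three linked tables in SQLite. The table names, column names, and sample rows are assumptions for illustration only; the structure (one vendor to many products, one product to many retailers) follows the description above.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite checks foreign keys only when enabled
conn.executescript(
    """
    CREATE TABLE vendor   (vendor_id   INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE product  (product_id  INTEGER PRIMARY KEY, name TEXT,
                           vendor_id   INTEGER NOT NULL REFERENCES vendor(vendor_id));
    CREATE TABLE retailer (retailer_id INTEGER PRIMARY KEY, name TEXT,
                           product_id  INTEGER NOT NULL REFERENCES product(product_id));
    """
)
conn.executemany("INSERT INTO vendor VALUES (?, ?)",
                 [(1, "Metal vendor"), (2, "Glass vendor")])
conn.executemany("INSERT INTO product VALUES (?, ?, ?)",
                 [(10, "Classic watch", 1), (11, "Sport watch", 1), (12, "Crystal watch", 2)])
conn.executemany("INSERT INTO retailer VALUES (?, ?, ?)",
                 [(100, "Boutique A", 10), (101, "Boutique B", 10),
                  (102, "Store C", 11), (103, "Store D", 12)])

# One vendor links to one or many products.
products_per_vendor = dict(conn.execute(
    "SELECT v.name, COUNT(p.product_id) FROM vendor v "
    "JOIN product p ON p.vendor_id = v.vendor_id GROUP BY v.vendor_id"
))


def orphan_rejected(connection):
    """Return True if a product pointing at a non-existent vendor is refused."""
    try:
        connection.execute("INSERT INTO product VALUES (13, 'Ghost watch', 99)")
    except sqlite3.IntegrityError:
        return True
    return False
```

Each "many" side simply carries the ID of its "one" side as an extra column. That extra column is the line you see between two entities in the diagram.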

Note: “many to many” relationships do not exist in data models

When analysts learn about crow’s foot relationships, they often get stuck on the idea of “many to many” relationships. After all, if one PK in Entity A links to many PKs in Entity B, aren’t there already “many” combinations? The answer is yes, “many to many” relationships exist, but they’re already accounted for in multiple “one to many” relationships.

Don’t let this confuse you (most professionals have a hard time explaining it). Just know that in data modeling, we do not draw “many to many” relationships directly. Instead, whenever many PKs on one side link to many PKs on the other, we simplify: we break the relationship into two “one to many” relationships, typically through an intermediate table that sits between the two entities.
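One common way to express this in a database is an intermediate table, often called a junction table. The sketch below assumes a hypothetical case where each product can sell to many retailers and each retailer can stock many products; the names `product_retailer` and the sample rows are illustrative only.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE product  (product_id  INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE retailer (retailer_id INTEGER PRIMARY KEY, name TEXT);
    -- Junction table: each row links exactly one product to exactly one retailer.
    CREATE TABLE product_retailer (
        product_id  INTEGER REFERENCES product(product_id),
        retailer_id INTEGER REFERENCES retailer(retailer_id),
        PRIMARY KEY (product_id, retailer_id)
    );
    INSERT INTO product  VALUES (1, 'Classic watch'), (2, 'Sport watch');
    INSERT INTO retailer VALUES (1, 'Boutique A'), (2, 'Store B');
    INSERT INTO product_retailer VALUES (1, 1), (1, 2), (2, 2);
    """
)

# Product 1 reaches many retailers (one to many, read left to right).
retailers_of_classic = [row[0] for row in conn.execute(
    "SELECT r.name FROM retailer r "
    "JOIN product_retailer pr ON pr.retailer_id = r.retailer_id "
    "WHERE pr.product_id = 1 ORDER BY r.name"
)]

# Retailer 2 stocks many products (one to many, read right to left).
products_at_store_b = [row[0] for row in conn.execute(
    "SELECT p.name FROM product p "
    "JOIN product_retailer pr ON pr.product_id = p.product_id "
    "WHERE pr.retailer_id = 2 ORDER BY p.name"
)]
```

Read from either side, the junction table is the "many" end of a one-to-many relationship, so the model never needs a direct many-to-many line.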

From Logical Model to Database

The logical model is great, but it’s difficult to understand without seeing what it looks like once all of the entities’ data tables are linked together in a database.

Imagine we have only two vendors, three products, and four retail partners. Even with so few players, the database becomes complex, and quickly.

Here’s what it would look like using our example. I leave out the attributes in this picture so it’s easier to understand how this database is compiled:

This complexity is arguably the most important reason for using data models. They simplify the relationships between business units and entities so that they’re digestible and easy to act on. Without them, it would be difficult to work with databases at all!

Why use a data model?

If they’re so complex, why even bother with databases and data models at all? The most obvious reason is that data is key to extracting insights and improving a company’s competitive edge.

When companies ignore data, they miss out on opportunities to understand their operations, markets, and customers better.

Moreover, data is becoming a compliance necessity. Businesses must be able to show how their company operates through data to be compliant with growing government data regulations.

Physical Data Model

Once you understand logical models, physical data models are easy. Physical data model entities are exactly the same as logical model entities, but they add the data type that each PK and attribute uses, as well as the number of characters. Here’s an example:

As you can see, the added value of physical models is the detail they provide on data in its tables. Experienced data modelers are able to quickly understand how the data model translates to the database and make decisions based on this knowledge.
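In practice, a physical model maps almost one to one onto a table definition. The sketch below declares a hypothetical `product` table with a type (and, where relevant, a length) for each column, then reads those declarations back. The column names and sizes are assumptions for illustration. Note that SQLite records the declared length but does not enforce it, whereas many other database systems do.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE product (
        product_id INTEGER PRIMARY KEY,   -- PK with its data type
        name       VARCHAR(50) NOT NULL,  -- text, up to 50 characters
        price_usd  DECIMAL(8,2),          -- number with 2 decimal places
        in_stock   BOOLEAN                -- true/false
    )
    """
)

# PRAGMA table_info yields one row per column: (cid, name, type, notnull, default, pk)
table_info = list(conn.execute("PRAGMA table_info(product)"))
column_types = {row[1]: row[2] for row in table_info}
primary_key_columns = [row[1] for row in table_info if row[5] > 0]
```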

Types of data are seemingly endless. The most common types include text, numeric, and boolean (true/false), but they can be as complex as the following list (exact names and limits vary by database system):

- Integer – a whole number with no fractional part. Examples include -11, 34, 0, 100.

- Tinyint – an integer, but only numbers from 0 to 255

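- Bigint – a 64-bit integer, for whole numbers too large for a standard integer (up to roughly 9.2 quintillion)

- Float – an approximate number with a floating decimal point, often written in scientific notation when very large or very small

- Real – a floating-point number like float, typically with lower precision

- Date – the date, displayed in different formats, including “mm/dd/yyyy” (US), “dd/mm/yyyy” (Europe), “mmmm dd, yyyy”, and “mm-dd-yy” among many more.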

- Time – the time of day, broken down as far as milliseconds

- Date time – the date and time value of an event

- Timestamp – stores the number of seconds passed since 1970-01-01 00:00:00 UTC

- Year – stores years ranging from 1901 to 2155 in two-digit or four-digit ranges

- Char – fixed length of characters, with a maximum of 8,000

- Varchar – max of 8,000 characters like char, but each entry can differ in length (variable)

- Text – similar to varchar, but the maximum is 2GB instead of a specific length

- nchar – fixed-length Unicode text, with a maximum of 4,000 characters (in SQL Server)

- nvarchar – variable-length Unicode text, with a maximum of 4,000 characters (in SQL Server)

- ntext – variable length storage, only now the maximum is 1GB rather than a specific length

- binary – fixed length with maximum of 8,000 bytes

- varbinary – variable length storage with maximum bytes, topped at 8,000

- clob – also known as Character Large Object, a type that carries large Unicode texts up to 2GB

- blob – also known as Binary Large Object, carries big binary objects

- xml – a specific data type that stores XML data. XML stands for Extensible Markup Language, and is common in databases
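A few of these types are easier to grasp with numbers. The sketch below is plain Python, not a database, and the helper names (`fits`, `as_char`, `to_unix_timestamp`) are my own. It assumes the common definitions of an unsigned 8-bit tinyint and a signed 64-bit bigint; check your own database's documentation for its exact limits.

```python
from datetime import datetime, timezone

# Ranges as commonly defined; exact limits depend on the database system.
TINYINT_RANGE = (0, 2**8 - 1)          # 0 to 255 (unsigned 8-bit)
BIGINT_RANGE = (-(2**63), 2**63 - 1)   # signed 64-bit integer


def fits(value, value_range):
    """Return True if value lies inside the inclusive (low, high) range."""
    low, high = value_range
    return low <= value <= high


def as_char(text, length):
    """Mimic a fixed-length char column: pad with spaces up to `length`."""
    if len(text) > length:
        raise ValueError("text longer than the fixed column length")
    return text.ljust(length)


def to_unix_timestamp(moment):
    """Whole seconds elapsed since 1970-01-01 00:00:00 UTC."""
    return int(moment.timestamp())


one_day_after_epoch = datetime(1970, 1, 2, tzinfo=timezone.utc)
seconds_in_first_day = to_unix_timestamp(one_day_after_epoch)
```

A char column always occupies its full length, while a varchar column stores only what you give it. That difference is why `as_char("abc", 5)` returns five characters rather than three.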

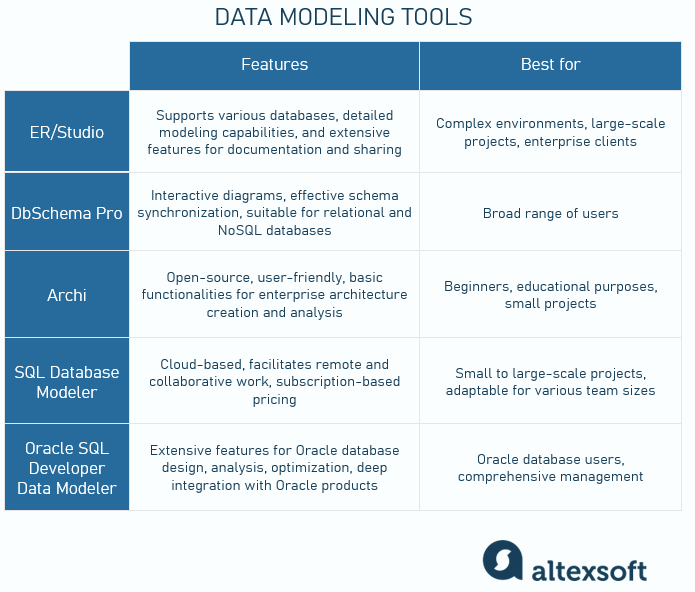

Data Modeling tools

To build all three types of data models, you will need a tool. The most important one to have in your toolbox is Microsoft PowerPoint. While it is heavily manual, it’s available in almost every professional setting. Especially as a consultant, you need to be flexible.

With that said, the best tools for data models are Entity Relationship (E-R) modeling programs and UML (Unified Modeling Language). They’re common among systems administrators and software engineers, for whom structures similar to data models are an everyday event.

- Entity Relationship (E-R) Model programs. As you can imagine, structural models are not unique to data models. In fact, the idea of entities and relationships is a driving principle in engineering. That’s where E-R models surfaced, as well as the programs to automate them. These programs are user friendly and require minimal coding skills that you can learn as you go. While a tutorial on E-R technology is outside the scope of this article, you can check out a trial account with Lucidchart for free if you want to get your feet wet. It’s common in big companies.

- UML (Unified Modeling Language). UML is a “coding” language for entity relationship models. I put “coding” in quotes because it’s actually a modeling language, but the principle is the same. You use a program to write code that becomes the model. For many people, coding separately to build a model that represents an underlying database feels like overkill. For this reason, UML is considered an advanced technique.

Data modeling steps

We’ve talked a lot about types of data models, their content, and why we should use them, but what about actually building one? What are the steps needed to build a data model, or “do” data modeling?

To answer this question, let’s take the perspective of an external data consultant rather than an internal analyst (since internal specialists can sometimes be biased).

The most important and first step is understanding the organization and its data collection capabilities and desires. Without data, there isn’t much to model.

Then, the consultant must understand the goals of the organization and set up a data collection plan to be approved by business decision-makers. That’s the bird’s eye view.

More specifically, the 12 steps of data modeling are:

1. Understand what kinds of data analysis and data insights the company is looking for. This is a crucial step. It consists of speaking with decision-makers to better understand what they need.

2. Identify key business units. This step consists of identifying the most important business units. These are not necessarily departments, as products are a key unit as well. A good test is to ask yourself: which units do decision-makers usually refer to when they ask “why”?

3. Perform a data collection audit. This step consists of identifying which business units need data in order to build the data model. In almost all cases, they will be the units identified in step 2, but not always. Business units without data collection, or without sufficient data collection, should be noted in a text document. A good test is to ask yourself: what data dimensions will this unit need to answer the “why” questions from business decision-makers?

4. Perform a data collection gap analysis. This step consists of identifying what technical and non-technical changes must be made in order to execute the data collection requirements identified in step 3.

5. Build a draft conceptual data model. This is the first model you build. It’s the conceptual model mentioned earlier in the article.

6. Get feedback on this structure from management. In this step you get a critical view on how well your work responds to the decision-maker requirements identified in step 1.

7. Adapt to feedback from management. Make changes to your conceptual model based on feedback from decision-makers.

8. Build a logical data model. This step consists of building a logical model with the information gathered and feedback. We move on from the conceptual model even without managerial approval because it’s better to adapt progressively than get stuck on the conceptual model.

9. Get feedback and make adjustments. Repeat step 6, but with the logical model. While decision-makers may not want to see PKs and attributes, the conceptual structure remains, and it’s useful to get additional feedback and approval.

10. Create a physical data model to share with database management teams and BI. Using either an E-R modeling program or UML, build a physical model to share with relevant teams.

11. Implement data collection improvements. This step consists of implementing the technical and non-technical requirements identified in step 4’s gap analysis. You may need to work with external providers for this.

12. Build a dashboard. Build a dashboard to show the conceptual, logical, and physical models in a user-friendly framework for everyone in the company. Dashboard creation is often possible through the database management system the company stores its data in.
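The data collection audit and gap analysis boil down to comparing what each business unit needs with what it already collects. Here is a minimal sketch of that comparison; the business units, field names, and the helper `collection_gaps` are all hypothetical, and a real gap analysis would also cover non-technical changes that code cannot capture.

```python
# Fields each unit needs to answer decision-makers' "why" questions (assumed).
required_fields = {
    "vendors": {"vendor_id", "material", "unit_cost"},
    "products": {"product_id", "price", "size"},
    "customers": {"customer_id", "location", "sale_amount"},
}

# Fields each unit actually collects today (assumed).
collected_fields = {
    "vendors": {"vendor_id", "material"},
    "products": {"product_id", "price", "size"},
}


def collection_gaps(required, collected):
    """Return, per business unit, the required fields not yet collected."""
    gaps = {}
    for unit, fields in required.items():
        missing = fields - collected.get(unit, set())
        if missing:
            gaps[unit] = missing
    return gaps


gaps = collection_gaps(required_fields, collected_fields)
```

Units that appear in the result are the ones to note in the audit document; units that do not appear are already covered.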

Techniques and best practices

Data modeling best practices include the following items:

- Where possible, use a single Enterprise Resource Planning (ERP) program to ensure ongoing integrity of data collection and modeling process.

- Always document decision-maker requirements to ensure coherence throughout the implementation process.

- Do not use both UML and E-R model programs. Choose one and stick with it. Since data collection and data modeling are an ongoing effort, you want to keep the process as user friendly as possible.

Advantages and disadvantages

While data modeling is an industry standard, it has its disadvantages. We’ve spoken a lot about the good parts, but here’s an overview of the advantages and limitations of data modeling:

Advantages:

- Easy to access

- Creates structure for an organization

- Flexible to the needs of any organization

- Ensures the integrity of data by splitting

Disadvantages:

- Compounding complexity. As company entities and the data stored in them become more complex, so does the data model. In fact, it can become so complex that it loses its simplistic appeal.

- Rigidity. Once a data model is put in place, it is incredibly difficult to modify. This article described the steps to set up a data model, but maintaining and modifying one is another story.

- Dependence with growth. Just like any web of logic, any change to one element has an impact on many others. While the purpose of the model is to limit this risk, as the business units grow, so too does the difficulty of maintaining entity independence.

Data modeling is a must-know technique for any good data analyst. It’s a window into the complex database that hosts any company’s data. While it may seem intimidating at first, you’ll quickly adjust to the logic as you spend more time with different materials.

At AnalystAnswers.com, I believe that data analysis is becoming more and more critical in our digital world. Learning it shouldn’t break the bank, and everyone should have access to understanding the data that’s growing under our fingertips every day.


About the Author

Noah is the founder & Editor-in-Chief at AnalystAnswers. He is a transatlantic professional and entrepreneur with 5+ years of corporate finance and data analytics experience, as well as 3+ years in consumer financial products and business software. He started AnalystAnswers to provide aspiring professionals with accessible explanations of otherwise dense finance and data concepts. Noah believes everyone can benefit from an analytical mindset in a growing digital world. When he's not busy at work, Noah likes to explore new European cities, exercise, and spend time with friends and family.


Data modelling: a guide to techniques, models and best practices

Are you looking to streamline your data for better analysis and decision-making? Data modelling is the critical first step. This practice shapes raw data into a clear structure, optimising not just storage and retrieval, but also comprehension across diverse teams. Through this guide, you’ll uncover the key principles of data modelling, explore its various forms, and access the tools to refine your data strategy.

Key takeaways

- Data modelling is a strategic process that creates a visual representation of an information system, critical for simplifying, organising, and optimising databases, and supports business analysis and decision-making.

- There are various types of data models, including conceptual, logical, and physical models, each serving different purposes and providing different levels of abstraction, suitable for various audiences within an organisation.

- Data modelling techniques and processes are essential to accurately represent and organise data, with a range of available tools specifically designed to enhance the efficiency and effectiveness of database management and support evolving industry trends.

What is data modelling and why is it important?

Data modelling is the process of creating a visual representation of an information system, illustrating the linkages among data points and organisational structures.

It serves as a blueprint for both the structure and the flow of data within an organisation. This visual representation aids in understanding and managing data, but its importance extends far beyond its graphical nature.

At its core, data modelling is about creating a system that not only stores information efficiently but also allows for effective retrieval and innovative use of that data. It helps organisations make sense of complex data landscapes, enabling them to harness the full potential of their information assets.

Furthermore, data modelling is not just a technical exercise; it is a strategic business activity. It supports business analysis and decision-making by providing a clear framework for data collection, storage, and use. It lays the groundwork for data-centric initiatives.


What are the different types of data models?

Delving deeper into the labyrinth of data modelling, we encounter three distinct types of models:

- conceptual,

- logical,

- and physical models.

Each of these models serves different purposes and caters to diverse audiences within a company. From how data is physically stored in a database to the levels of data abstraction, these models pave the way for diverse approaches to data representation and organisation.

Conceptual data modelling

Conceptual data modelling is akin to painting a broad picture of a company’s data landscape. It sets up and defines business rules and concepts, providing an abstract representation of the data needed to support business requirements without being tied to any specific technology implementation.

This high-level approach focuses on how different data elements interconnect, and the overarching relationships that define the business domain. By doing so, it acts as a bridge between the technical data modelers and stakeholders, translating complex data structures into a language that everyone can understand.

Conceptual data modelling is the cornerstone of a robust data management strategy, paving the way for future growth and adaptation.

Logical data modelling

Logical data modelling is the process of creating a visual representation of the structure of an organisation’s data. Logical data models (LDMs) typically use diagrams to illustrate the relationships between different data entities, attributes, and the rules governing those relationships.

Logical data modelling is a vital step in bridging the gap between abstract concepts and tangible database structures. Its benefits, ranging from improved data quality to enhanced communication and better risk management, make it an indispensable tool for any organisation looking to leverage data effectively.

By investing in logical data modelling, businesses can ensure that their data systems are aligned with their goals, adaptable to change, and capable of supporting complex, data-driven processes.

Physical data modelling

A physical data model is the tangible manifestation of data organisation in the realm of data modelling. Often referred to as a physical model, it outlines the actual implementation of the database, including:

- constraints

- relationships between tables

The design elements in physical data models, such as tables, columns, and relationships, directly influence the efficiency of business intelligence systems by effectively managing data elements.

Creating narrower tables helps to minimise long scans or reads, proving valuable for handling large data volumes or when operating across multiple tables. This is just one of the many ways physical data models contribute to optimal database performance.


Data modelling techniques

A toolbox for data modelling would be incomplete without an array of techniques to tackle diverse data scenarios. These techniques include:

- Relational models

- Hierarchical models

- Network models

- Entity-relationship models

- Dimensional models

- Object-oriented models

- Graph models

Relational data modelling

Stepping into the realm of relational data modelling, we encounter a technique that was introduced by E. F. Codd in 1970 and has dominated commercial databases for decades. In this model, data is organised in a table-like structure where each table represents a specific entity and each row a specific record.

What sets relational data models apart is their ability to depict entities with various relationships, including:

- One-to-many

- Many-to-one

- Many-to-many

The overarching purpose of the relational model is to describe different relationships between data entities, making it a popular choice in many database systems.

Hierarchical data modelling

Hierarchical data modelling arranges data in a tree structure consisting of one root and multiple connected data nodes. Within the model, relationships are represented as single one-to-many relationships between different levels of data.

A practical application of the hierarchical model can be seen in a supermarket where one parent department has several child aisles under it. Though they originated in mainframe databases, hierarchical data models are still utilised by businesses today through systems like IMS.
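The supermarket example can be sketched as a simple tree. This is an illustrative in-memory structure, not how a hierarchical database such as IMS stores data; the class `Node`, the helper `find_path`, and the department and aisle names are assumptions.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    name: str
    children: List["Node"] = field(default_factory=list)

    def add_child(self, name):
        """Attach a new child; each child has exactly one parent."""
        child = Node(name)
        self.children.append(child)
        return child


def find_path(node, target, path=()):
    """Return the names from the root down to `target`, or None if absent."""
    path = path + (node.name,)
    if node.name == target:
        return list(path)
    for child in node.children:
        found = find_path(child, target, path)
        if found is not None:
            return found
    return None


store = Node("Supermarket")  # the single root
produce = store.add_child("Produce department")
produce.add_child("Fruit aisle")
produce.add_child("Vegetable aisle")
bakery = store.add_child("Bakery department")
bakery.add_child("Bread aisle")

bread_path = find_path(store, "Bread aisle")
```

Because every node has a single parent, there is exactly one path from the root to any record, which is both the strength and the main limitation of the hierarchical model.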

Network data modelling

Unlike hierarchical database models, the network model allows for each record to have multiple parent and child records, thereby forming a more complex structure.

Adopted by the CODASYL Data Base Task Group in 1969, the network model was considered to offer a more natural way to model relationships between entities compared to the hierarchical model. Despite its heyday in the 1970s, the network model continues to influence modern database systems.

Entity relationship data modelling (E-R model)

The Entity-Relationship (ER) Model is a visual storyteller of data structures. It uses a diagrammatic approach to represent the structure of database systems, illustrating the relationships between different entities.

The ER Diagram is composed of entities, attributes, and relationships which are depicted using specialised symbols such as rectangles, ellipses, diamonds, and lines.

Introduced by Peter Chen in 1976, ER Diagrams provide a standardised modelling approach for conceptual database design. They include:

- entities (represented by rectangles),

- attributes (classified into key, composite, multivalued, and derived attributes; represented by different shapes)

- and relationships (represented by diamonds).

Dimensional data modelling

Dimensional data modelling aims to simplify data structures for better performance and speed of data retrieval in a data warehouse environment. This modelling technique supports data reading, analysis, and summarisation processes.

While dimensional data models have numerous benefits, they also present certain limitations, such as the need for domain knowledge in designing schemas and challenges in maintaining data integrity during warehouse loading.
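A common form of dimensional model is the star schema: one central fact table of measurements surrounded by dimension tables that describe them. The following is a minimal sketch under that assumption, using SQLite; the table names (`fact_sales`, `dim_product`, `dim_date`) and the figures are invented for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE dim_product (product_key INTEGER PRIMARY KEY, product_name TEXT);
    CREATE TABLE dim_date    (date_key    INTEGER PRIMARY KEY, year INTEGER, month INTEGER);
    -- Fact table: one row per sale, pointing at its dimensions.
    CREATE TABLE fact_sales (
        product_key INTEGER REFERENCES dim_product(product_key),
        date_key    INTEGER REFERENCES dim_date(date_key),
        amount_usd  REAL
    );
    INSERT INTO dim_product VALUES (1, 'Classic watch'), (2, 'Sport watch');
    INSERT INTO dim_date    VALUES (20200101, 2020, 1), (20200201, 2020, 2);
    INSERT INTO fact_sales  VALUES (1, 20200101, 100.0), (1, 20200201, 50.0),
                                   (2, 20200201, 75.0);
    """
)

# Summarise the same facts along two different dimensions.
sales_by_product = dict(conn.execute(
    "SELECT p.product_name, SUM(f.amount_usd) FROM fact_sales f "
    "JOIN dim_product p ON p.product_key = f.product_key GROUP BY p.product_name"
))
sales_by_month = dict(conn.execute(
    "SELECT d.month, SUM(f.amount_usd) FROM fact_sales f "
    "JOIN dim_date d ON d.date_key = f.date_key GROUP BY d.month"
))
```

Each summary needs only one join from the fact table to a dimension, which is the kind of simple read path the technique is designed to support.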

Object-oriented data modelling

Object-oriented data modelling (OODM) is a data modelling approach that uses object-oriented concepts to represent data structures and their relationships.

This methodology integrates the principles of object-oriented programming (OOP), where data is encapsulated within objects, and objects are instances of classes that define the attributes and behaviors (methods) they can have.

It provides numerous benefits, including natural mapping to real-world concepts, reusability, flexibility, and improved maintainability.
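As a brief illustration, the sketch below models an order as an object that holds both its data (attributes) and its behaviour (methods). The classes `Product` and `Order` and their fields are hypothetical examples, not part of any particular object database.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Product:
    """A class defines the attributes every product object carries."""
    name: str
    price_usd: float


@dataclass
class Order:
    retailer: str
    lines: List[Tuple[Product, int]] = field(default_factory=list)

    def add(self, product, quantity):
        """Behaviour lives next to the data it operates on."""
        self.lines.append((product, quantity))

    def total(self):
        return sum(product.price_usd * quantity for product, quantity in self.lines)


classic = Product("Classic watch", 100.0)
sport = Product("Sport watch", 75.0)

order = Order("Boutique A")
order.add(classic, 2)
order.add(sport, 1)
order_total = order.total()
```

The same `Product` objects can be reused across many orders, which hints at the reusability benefit mentioned above.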

Graph data modelling

Graph data modelling is like a map of a city, depicting various landmarks (nodes) and the paths (relationships) connecting them. Nodes in a graph data model represent entities with a unique identity, and can contain properties that hold name-value pairs of data.

Relationships in a graph model connect nodes and are directional, representing actions or verbs between entities. Graph databases enforce the rule of no broken links, ensuring relationships always point to existing endpoints.

Properties in a graph model are attributes stored on nodes or relationships, which can answer specific queries about the data.
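These ideas can be sketched with a tiny in-memory graph. The class below is an illustrative assumption, not the API of any graph database; it shows nodes with properties, directional relationships, and a check that refuses relationships pointing at missing nodes.

```python
class Graph:
    def __init__(self):
        self.nodes = {}          # node_id -> dict of properties
        self.relationships = []  # (start_id, rel_type, end_id, properties)

    def add_node(self, node_id, **properties):
        self.nodes[node_id] = properties

    def relate(self, start_id, rel_type, end_id, **properties):
        """Add a directed relationship; both endpoints must already exist."""
        if start_id not in self.nodes or end_id not in self.nodes:
            raise KeyError("relationship endpoints must be existing nodes")
        self.relationships.append((start_id, rel_type, end_id, properties))

    def outgoing(self, node_id, rel_type):
        """Follow relationships of one type in their stated direction."""
        return [end for start, kind, end, _ in self.relationships
                if start == node_id and kind == rel_type]


graph = Graph()
graph.add_node("vendor_1", name="Glass vendor")
graph.add_node("watch_1", name="Crystal watch")
graph.relate("vendor_1", "SUPPLIES", "watch_1", since=2020)

supplied = graph.outgoing("vendor_1", "SUPPLIES")
reverse = graph.outgoing("watch_1", "SUPPLIES")  # direction matters
```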

Data modelling: process and best practices

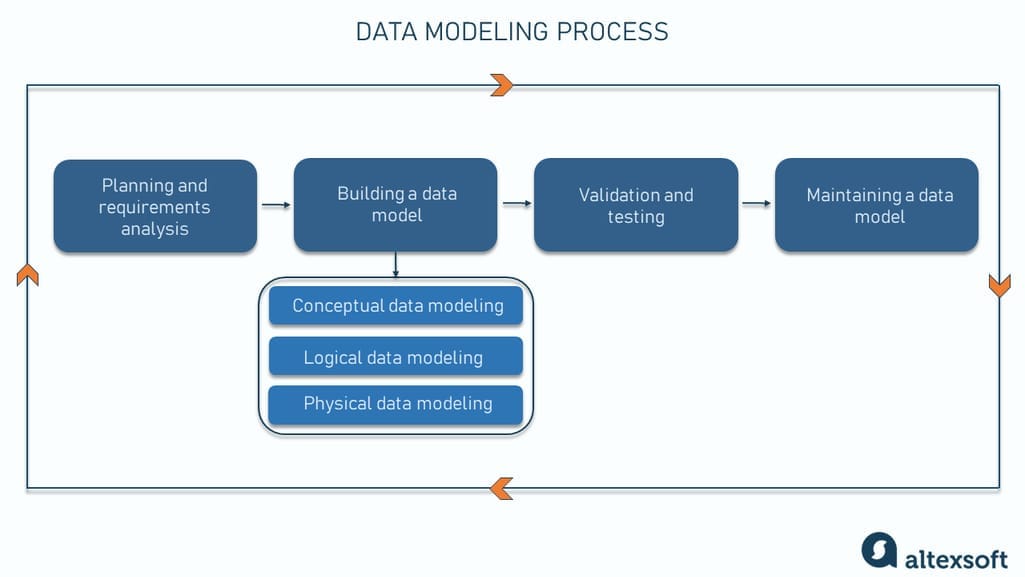

The data modelling process involves six steps:

- Identifying business entities

- Defining key properties

- Creating a draft ER model

- Identifying data attributes

- Mapping attributes to entities

- Finalising and validating the data model

This iterative nature of the data modelling process allows for continuous improvement and adaptation.

It is also worth noting that data modelers adhere to certain best practices to ensure the overall quality of the database. Data models should emphasise:

- Data completeness

- Traceability

- Consistency

Applying data normalisation techniques can minimise redundancy and enhance flexibility in data models to support evolving business requirements.
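To show what normalisation means in practice, the sketch below splits a flat table that repeats retailer details on every order into two related tables. The data and the helper `normalise` are hypothetical, and this covers only one simple step of normalisation rather than the full set of normal forms.

```python
# Flat table: the retailer's city is repeated on every order row.
orders_flat = [
    {"order_id": 1, "retailer": "Boutique A", "retailer_city": "Paris", "amount": 100},
    {"order_id": 2, "retailer": "Boutique A", "retailer_city": "Paris", "amount": 50},
    {"order_id": 3, "retailer": "Store B", "retailer_city": "Lyon", "amount": 75},
]


def normalise(rows):
    """Split flat rows into a retailer table and an order table."""
    retailers = {}
    orders = []
    for row in rows:
        # Retailer details are stored once, keyed by retailer name.
        retailers[row["retailer"]] = {"city": row["retailer_city"]}
        # Orders keep only a reference to the retailer.
        orders.append({
            "order_id": row["order_id"],
            "retailer": row["retailer"],
            "amount": row["amount"],
        })
    return retailers, orders


retailers, orders = normalise(orders_flat)
```

After the split, a change of city for a retailer touches one record instead of every order that retailer ever placed.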

Building data models around business processes facilitates easier navigation, evaluation of data, and appropriate placement within the model. To mitigate data bias and improve fairness in model predictions, techniques such as over-sampling, under-sampling, SMOTE, and population adjustment can be implemented.

Selecting the right data modelling tools

A variety of data modelling tools are available, offering broad compatibility and specialised features for SQL, NoSQL, and Cloud databases.

Here are the key steps to consider when selecting the right data modelling tools:

- identify your requirements (scope of modelling, complexity, scale and collaboration needs),

- evaluate key features (e.g. user interface, usability, integrations, customisation, reverse and forward engineering),

- assess performance and scalability,

- consider collaboration features and security,

- evaluate cost and licensing (pricing models and total cost of ownership),

- support and community,

- review case studies and testimonials.

By considering these factors, you can choose a tool that not only meets your current requirements but also scales with your organisation’s evolving needs.


What is Data Modeling?

May 31, 2019 | 4 Min Read

Author: Michael Nixon


Data modeling is the process of organizing and mapping data using simplified diagrams, symbols, and text to represent data associations and flow.

Engineers use these models to develop new software and to update legacy software. Data modeling also helps ensure the consistency and quality of data. A data model differs from a database schema: a schema is a database blueprint, while a data model is an overarching design that determines what can exist in the schema.

Benefits of Data Modeling

- Improved accuracy, standardization, consistency, and predictability of data

- Expanded access to actionable insights

- Smoother integration of data systems with less development time

- Faster, less expensive maintenance and updates of software

- Quicker identification of errors and omissions

- Reduced risk

- Better collaboration between teams, including non-developers

- Expedited training and onboarding for anyone accessing data

Types of Approaches

There are four primary approaches to data modeling.

1. Hierarchical

A hierarchical database model organizes data into tree-like structures with data stored as interconnected records with one-to-many arrangements. Hierarchical database models are standard in XML and GIS.
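As a rough illustration of the tree-like, one-to-many arrangement described above, the sketch below models each record as a node that owns a list of child records. The `Node` class, the `paths` helper, and the sample names are hypothetical and not taken from any particular product.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """One record in a hierarchy: a single parent, any number of children."""
    name: str
    children: list["Node"] = field(default_factory=list)

    def add(self, child: "Node") -> "Node":
        self.children.append(child)
        return child


def paths(node: Node, prefix: str = "") -> list[str]:
    """Return the full path of every record, walking the tree top-down."""
    here = f"{prefix}/{node.name}"
    result = [here]
    for child in node.children:
        result.extend(paths(child, here))
    return result


# Hypothetical sample data: a company with two departments.
root = Node("company")
sales = root.add(Node("sales"))
root.add(Node("production"))
sales.add(Node("orders"))

for p in paths(root):
    print(p)
```

Every record is reachable by exactly one path from the root, which is what makes hierarchical lookups simple and many-to-many relationships awkward.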

2. Relational

A relational data model, also known as a relational model, organizes data into tables (relations) of rows and columns and provides a methodology for specifying data and queries. Most relational data models use SQL as the data definition and query language.
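A minimal sketch of SQL used for both data definition and querying, run here through Python's built-in `sqlite3` module. The `customer` and `sale` tables and their rows are invented for illustration only.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # throwaway in-memory database

# Data definition: two tables linked by customer_id.
conn.executescript("""
    CREATE TABLE customer (
        customer_id INTEGER PRIMARY KEY,
        name        TEXT NOT NULL
    );
    CREATE TABLE sale (
        sale_id     INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customer(customer_id),
        amount      REAL NOT NULL
    );
    INSERT INTO customer VALUES (1, 'Ada'), (2, 'Grace');
    INSERT INTO sale VALUES (10, 1, 100.0), (11, 1, 50.0), (12, 2, 75.0);
""")

# Query: total sales per customer, joining the two tables.
totals = conn.execute("""
    SELECT c.name, SUM(s.amount)
    FROM customer AS c
    JOIN sale AS s ON s.customer_id = c.customer_id
    GROUP BY c.name
    ORDER BY c.name
""").fetchall()
print(totals)
```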

3. Entity-relationship

Entity-relationship models use diagrams to portray data and their relationships. Integrated with relational data models, entity-relationship models graphically depict data elements to understand underlying models.

4. Graph

Graph data models are visualizations of complex relationships within data sets, limited to a chosen domain.

Types of Data Models

There are three primary types of data models.

1. Conceptual, defining what the data system contains; used to organize, scope, and define business concepts and rules.

2. Logical, defining how the data system should be implemented; used to develop a technical map of rules and data structures.

3. Physical, defining how the data system will be implemented according to the specific use case.

Role of a Modeler

A data modeler maps complex software system designs into easy-to-understand diagrams, using symbols and text to represent proper data flows. Data modelers often build multiple models for the same data to ensure all data flows and processes have been properly mapped. Data modelers work closely with data architects.

Data Modeling versus Database Architecture

Data architecture defines a blueprint for managing data assets by aligning with organizational needs to establish data requirements and designs to meet these requirements.

Database architecture and data modeling align when new systems are integrated into an existing system, as part of the overall architecture. With data modeling, it’s possible to compare data from two systems and integrate smoothly.

Snowflake Data Cloud and Data Modeling

Snowflake’s platform is ANSI SQL-compliant, allowing customers to leverage a wide selection of data modeling tools tailored to specific needs and purposes.

Snowflake has introduced several features enhancing data modeling capabilities.

Snowpark Enhancements: The Snowpark ML Modeling API, now generally available, allows data modelers to use Python ML frameworks like scikit-learn and XGBoost for feature engineering and model training within Snowflake. This integration simplifies the data modeling process by enabling direct operation on the data stored in Snowflake, reducing the need for data movement.

Advanced Analytics with Snowflake Cortex: The new ML-based functions for forecasting and anomaly detection provide data modelers with powerful tools to perform complex analyses directly through SQL. This simplifies the process of incorporating advanced analytics into data models, making it accessible even to those with limited ML expertise.

Developer Experience with Snowflake Python API: In public preview, this API enhances Python's integration with Snowflake, making it easier for data modelers to manipulate and interact with data within Snowflake using familiar Python constructs.

What Is Data Modeling? Tips, Examples And Use Cases

May 4, 2023 21 mins read

Data modeling can be considered the foundation of data analytics and data science. It gives meaning to the enormous amount of data that organizations produce by creating an organized representation of that data, which helps organizations understand and analyze it.

Data is now used at a scale beyond what a person can process unaided, for purposes ranging from personalized social media advertising to discovering treatments for diseases. Software can read this data, and with a sound model it can generate significant results with high accuracy. Data modeling simplifies the data by assigning consistent rules to it.

Data modeling simplifies the task of getting the required data, transforming it into an understandable representation, and making it usable for the average user. It plays a pivotal role in transforming data into analytics that help organizations set business strategy and make essential decisions.

Although the process can be complex, data modeling provides in-depth insight into an organization’s day-to-day data and supports efficient, innovative business growth.

Data Modeling Definition

Data modeling conceptualizes data and the relationships among data entities in any domain. It describes the data structure, organization, storage methods, and constraints of the data.

- Data modeling promotes uniformity in naming, rules, meanings, and security, ultimately improving data analysis. These models represent data conceptually using symbols, text, or diagrams to visualize relationships. The main goal is to make the data available and organized however it is used.

- Data modeling helps store and organize data to fulfill business needs and allow for the processing and retrieving of information of use. Thus, it is a crucial element in designing and developing information systems.

Data modeling starts by describing how existing data is arranged. The process then defines the data structure, the relationships between entities, and a data scope that is reusable and can be encrypted.

Data modeling creates a conceptual representation of data and its relationships to other data within a specific domain. It involves defining the structure, relationships, constraints, and rules of data to understand and organize information meaningfully.

Data modeling is essential in software engineering, database design, and other fields that require the organization and analysis of large amounts of data. It enables developers to create accurate, efficient, and scalable systems by ensuring the data is properly structured, normalized, and stored to support the organization’s business requirements.

Importance of Data Modeling

Data modeling is the fundamental phase of the data management process. It lays the groundwork for achieving business objectives and supports decision-making driven by data analysis.

The following insights can help comprehend the importance of data modeling.

- Building a data model helps us comprehend the data structure, relationships, and limitations.

- It makes it easier to ensure that everyone working on the project is familiar with the data.

- It helps avoid uncertainties and inaccuracies.

- It improves data continuity, reliability, and validity by surfacing issues so they can be addressed.

- It provides a common language and a framework or schema for better data management practices.

- It supports processing raw data to discover patterns, trends, and relationships.

- It improves data storage efficiency by eliminating useless data.

- It streamlines data retrieval through organized storage.

- A good database schema design can significantly reduce data redundancy.

- Reduced and optimized data storage lowers costs and increases system performance.

Steps of the Data Modeling Process

The choice of data model depends mainly on the characteristics of the data and the individual business requirements. The steps of the data modeling process for data engineering include the following:

Step 1: Requirements gathering

Gather requirements from analysts, developers, and other stakeholders to understand what data they need, how they plan to use it, and any blockers they face regarding data quality or other data specifics.

Step 2: Conceptual data modeling

In this step, you map entities, attributes, and the relationships among them into a general, high-level understanding of the data.

Step 3: Logical data modeling

The third step of the data modeling process is to develop a logical interpretation of the data entities and the relationships among them. The logical rules are also defined in this step.

Step 4: Physical data modeling

The database is then implemented physically, based on the logical rules defined in the previous step. Each data entity becomes a table whose attributes are defined as columns, with primary and foreign keys.
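To make the physical step more concrete, here is a small sketch that implements two entities as tables with primary keys, a foreign key, and simple rules expressed as `CHECK` constraints. It uses Python's built-in `sqlite3` module; the `product` and `order_line` tables are hypothetical examples, not part of any real schema.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# SQLite only enforces foreign keys when this pragma is switched on.
conn.execute("PRAGMA foreign_keys = ON")
conn.executescript("""
    CREATE TABLE product (
        product_id INTEGER PRIMARY KEY,
        name       TEXT NOT NULL,
        price      REAL NOT NULL CHECK (price >= 0)
    );
    CREATE TABLE order_line (
        order_line_id INTEGER PRIMARY KEY,
        product_id    INTEGER NOT NULL REFERENCES product(product_id),
        quantity      INTEGER NOT NULL CHECK (quantity > 0)
    );
""")

conn.execute("INSERT INTO product VALUES (1, 'Widget', 9.99)")
conn.execute("INSERT INTO order_line VALUES (1, 1, 3)")  # refers to an existing product

try:
    # Product 999 does not exist, so the foreign key should reject this row.
    conn.execute("INSERT INTO order_line VALUES (2, 999, 1)")
    rejected = False
except sqlite3.IntegrityError:
    rejected = True

print("invalid row rejected:", rejected)
```

The point of the sketch is that rules agreed in the logical model become constraints the database itself enforces.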

Types of Data Modeling

Below are the types of data modeling that are being implemented:

1. Conceptual Data Modeling

Data entities are modeled as high-level entities with relationships when using this method. Rather than focusing on specific technologies or implementations, it focuses on business needs.

2. Logical Data Modeling

This type of data modeling goes beyond the high-level view of the data entities and relationships. It produces comprehensive data models in which entities, relationships, and attributes are specified in detail, along with constraints and implementation rules.

3. Physical Data Modeling

In this type of data modeling, the model is defined physically as tables, columns, indexes, and other database objects. It mainly focuses on the physical storage of data, data access requirements, and other database management concerns.

4. Dimensional Data Modeling

Dimensional data modeling arranges data into ‘facts’ and ‘dimensions’: facts are the metrics of interest, and dimensions are the attributes that give those facts context.
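A small star-schema sketch may help here: one fact table holding the metric (revenue) and two dimension tables that give it context. The table names `fact_sales`, `dim_product`, and `dim_date` and all sample rows are assumptions made for this illustration, run through Python's built-in `sqlite3` module.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Dimensions: descriptive attributes used to slice the facts.
    CREATE TABLE dim_product (product_key INTEGER PRIMARY KEY, category TEXT);
    CREATE TABLE dim_date    (date_key    INTEGER PRIMARY KEY, year INTEGER);

    -- Fact table: one row per sale, with keys pointing at each dimension.
    CREATE TABLE fact_sales (
        product_key INTEGER REFERENCES dim_product(product_key),
        date_key    INTEGER REFERENCES dim_date(date_key),
        revenue     REAL
    );

    INSERT INTO dim_product VALUES (1, 'metal'), (2, 'plastic');
    INSERT INTO dim_date    VALUES (20200101, 2020), (20210101, 2021);
    INSERT INTO fact_sales  VALUES
        (1, 20200101, 100.0), (1, 20210101, 40.0), (2, 20210101, 60.0);
""")

# Aggregate the fact (revenue) by two dimension attributes.
revenue_by_year_and_category = conn.execute("""
    SELECT d.year, p.category, SUM(f.revenue)
    FROM fact_sales AS f
    JOIN dim_product AS p ON p.product_key = f.product_key
    JOIN dim_date    AS d ON d.date_key    = f.date_key
    GROUP BY d.year, p.category
    ORDER BY d.year, p.category
""").fetchall()
print(revenue_by_year_and_category)
```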

5. Object-Oriented Data Modeling

This specific data model is based on realistic scenarios represented as objects and independent attributes, with several relationships in between.

Data Modeling Techniques

Several techniques are used to model data. Some of the most common, which also illustrate what data modeling is in general, are:

1. Entity-relationship Modeling

This technique uses entities and relationships to represent their associations to perform conceptual data modeling. Subtypes and supertypes represent hierarchies of entities that share common attributes but have distinct properties. Cardinality constraints, expressed as symbols, identify the number of entities that can take part in a relationship. Weak entities depend on another entity for their existence. Recursive relationships occur when an entity has a relationship with itself. Attributes are the properties that describe entities.
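Two of these ideas, recursive relationships and weak entities, can be sketched once the model reaches tables. The example below assumes a hypothetical `employee` entity that reports to another employee (a recursive one-to-many relationship) and a `dependent` entity that cannot exist without its employee (a weak entity). It uses Python's built-in `sqlite3` module.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # needed for ON DELETE CASCADE to apply
conn.executescript("""
    -- Recursive relationship: an employee may report to another employee.
    CREATE TABLE employee (
        employee_id INTEGER PRIMARY KEY,
        name        TEXT NOT NULL,
        manager_id  INTEGER REFERENCES employee(employee_id)
    );
    -- Weak entity: a dependent is identified only together with its employee
    -- and is removed when that employee is removed.
    CREATE TABLE dependent (
        employee_id INTEGER NOT NULL
            REFERENCES employee(employee_id) ON DELETE CASCADE,
        name        TEXT NOT NULL,
        PRIMARY KEY (employee_id, name)
    );
    INSERT INTO employee VALUES (1, 'Ada', NULL), (2, 'Grace', 1);
    INSERT INTO dependent VALUES (2, 'Sam');
""")

# Self-join: pair each employee with their manager.
reports = conn.execute("""
    SELECT e.name, m.name
    FROM employee AS e
    JOIN employee AS m ON e.manager_id = m.employee_id
""").fetchall()

# Deleting the owning employee also removes the weak entity rows.
conn.execute("DELETE FROM employee WHERE employee_id = 2")
remaining_dependents = conn.execute("SELECT COUNT(*) FROM dependent").fetchone()[0]
print(reports, remaining_dependents)
```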

2. Object-oriented Modeling

Object-oriented data modeling is often used alongside relational databases and is widely used in software development and data engineering. It represents data as objects with attributes and behaviors, and relationships between objects are defined by inheritance, composition, or association.
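The three kinds of relationship named above can be shown in a few lines. This is a sketch with hypothetical classes (`Address`, `Party`, `Customer`, `Order`) chosen only to illustrate the idea.

```python
from dataclasses import dataclass


@dataclass
class Address:
    city: str


@dataclass
class Party:
    name: str
    address: Address  # composition: a party is built from an address

    def label(self) -> str:
        return f"{self.name} ({self.address.city})"


@dataclass
class Customer(Party):  # inheritance: a customer is a kind of party
    loyalty_points: int = 0

    def add_points(self, amount: float) -> None:
        self.loyalty_points += int(amount)


@dataclass
class Order:
    customer: Customer  # association: an order refers to a customer
    amount: float

    def confirm(self) -> None:
        # Behavior lives with the data: confirming an order rewards the customer.
        self.customer.add_points(self.amount)


ada = Customer("Ada", Address("London"))
Order(ada, 120.0).confirm()
print(ada.label(), ada.loyalty_points)
```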

3. NoSQL Modeling

NoSQL modeling is a technique that uses non-relational databases to store flexible, semi-structured or unstructured data, usually as key-value pairs, documents, column families, or graph structures. Since the database is non-relational, the modeling technique differs from relational database modeling techniques. With column-family modeling, data is stored in columns, where each column family is a group of related columns. With graph modeling, data is stored as nodes and edges, which represent entities and the relationships between entities, respectively.
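The shapes these models take can be sketched with plain Python structures. Nothing below talks to a real NoSQL database; the keys, field names, and the `neighbours` helper are made up for illustration.

```python
import json

# Key-value: an opaque value looked up by a single key.
key_value_store = {"session:42": "user=ada;cart=3"}

# Document: a self-describing, nested record; fields may differ per document.
documents = [
    {"_id": 1, "name": "Ada", "orders": [{"sku": "A1", "qty": 2}]},
    {"_id": 2, "name": "Grace", "email": "grace@example.com"},
]

# Column family: each row key maps to named groups of related columns.
column_families = {
    "user:1": {"profile": {"name": "Ada"}, "activity": {"last_login": "2023-05-04"}},
}

# Graph: nodes are entities, edges are relationships between them.
nodes = {"ada": {"type": "customer"}, "A1": {"type": "product"}}
edges = [("ada", "BOUGHT", "A1")]


def neighbours(node_id: str, relation: str) -> list[str]:
    """Follow edges of one relation type outward from a node."""
    return [dst for src, rel, dst in edges if src == node_id and rel == relation]


# Documents need no shared schema, so queries must tolerate missing fields.
with_orders = [d["name"] for d in documents if "orders" in d]
print(json.dumps(documents[0]))
print(with_orders, neighbours("ada", "BOUGHT"))
```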

4. Unified Modeling Language (UML) Modeling

UML is a visual modeling technique that describes software systems with diagrams and models. It is used for complex data flow modeling and for defining relationships between multiple data entities. As a standard for visualizing, designing, and documenting systems, it includes diagrams such as sequence, class, and use case diagrams, which model data and system behavior. One way to apply UML to data modeling is to use class diagrams to represent data entities and their attributes.

5. Data Flow Modeling

The data flow modeling technique describes how data moves among different processes. It consists of diagrams showing how a process and its sub-processes are interlinked and how data flows between them.

6. Data Warehousing Modeling

This technique is used to design data warehouses and data marts, which are used for business intelligence and reporting. It involves creating dimensional models that organize data into facts and dimensions and creating a star or snowflake schema that supports efficient querying and reporting.

Each technique has its own pros and cons. Make sure the technique you use fits your project’s requirements and the data available.

Data Modeling Use Cases

Data modeling is used across many industries and contexts to support a range of business objectives. Some common use cases of data modeling include:

- Predictive Modeling: Creating a statistical or mathematical model to predict the future based on data, for sales forecasting, resource allocation, quality control, and demand planning. Identifying new patterns and relationships can lead to new insights and possibly better opportunities.

- Customer Segmentation: Dividing customers into groups on the basis of behaviors, preferences, demographics, or other characteristics is a popular data modeling use case.

- Fraud Detection: Data models can identify fraudulent activity by analyzing patterns and inconsistencies in data, such as an individual filing multiple claims immediately after taking out a policy.

- Recommendation Engines: Recommendation engines for eCommerce, search engines, movies, TV shows, and many other industries use data models that rely on quick data access, storage, and manipulation, which keeps them up to date without affecting performance or user experience.

- Natural Language Processing: Topic modeling, which learns to identify word clusters in text, and Named Entity Recognition (NER), which detects and classifies significant information in text, make it possible to perform Natural Language Processing (NLP) on social media, messaging apps, and other data sources.

- Data governance: The process of ensuring that a company’s data is extracted, stored, processed, and discarded in line with data governance policies. It includes data quality management to monitor and improve data gathering, data lineage tracking from the original state to the final state, metadata maintenance that keeps a record of the data’s accuracy and completeness, and data security and compliance. Data stewards are responsible for the integrity and accuracy of specific data sets.

- Data integration: When data is ambiguous or inconsistent, data integration helps identify those gaps and model the data entities, attributes, and relationships into a database.

- Application development: Data modeling plays a key role in data management, intelligence reports, data filtering, and other uses when developing web applications, mobile apps, and dynamic user interfaces such as business intelligence applications and data dashboards.

Data modeling is a versatile tool supporting various business objectives, from database design to data governance and application development.
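The fraud pattern mentioned in the list, several claims filed right after a policy starts, can be expressed as a simple rule once claims and policies are modeled together. The sketch below is only illustrative: the record layout, the 30-day window, and the two-claim threshold are assumptions, not industry rules.

```python
from datetime import date

# Hypothetical records: (policy_holder, policy_start, claim_date)
claims = [
    ("p1", date(2023, 1, 1), date(2023, 1, 3)),
    ("p1", date(2023, 1, 1), date(2023, 1, 5)),
    ("p2", date(2022, 6, 1), date(2023, 2, 1)),
    ("p3", date(2023, 3, 1), date(2023, 3, 2)),
]


def flag_early_repeat_claimants(rows, window_days=30, min_claims=2):
    """Flag holders with at least `min_claims` claims within `window_days` of policy start."""
    early_counts = {}
    for holder, start, claimed in rows:
        if 0 <= (claimed - start).days <= window_days:
            early_counts[holder] = early_counts.get(holder, 0) + 1
    return sorted(h for h, n in early_counts.items() if n >= min_claims)


print(flag_early_repeat_claimants(claims))
```

A real system would treat such a flag as a prompt for review rather than proof of fraud.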

Tips for Effective Data Modeling

Practical data modeling tips are as follows:

1. Identify the purpose and scope of the data model

To build a data model that not only addresses users’ needs but is also high-performing and scalable, you need to know what problem it is solving, the data sources for the model, the type of data the model will store, who will be using the model and the level of detail they require, and the key entities, attributes, and their relationships. You also need to address the data quality requirements of all stakeholders.

2. Involve stakeholders and subject matter experts

Involving stakeholders and subject matter experts is crucial when designing a data model as they provide valuable insight into the business needs and can help identify potential issues early on.

3. Follow best practices and standards

When creating a data model, a few things need to be right and up to standard. Above all, consistently use an industry-accepted, standardized modeling notation, such as Entity-Relationship (ER) diagrams, Unified Modeling Language (UML), or Business Process Model and Notation (BPMN), so that the model is clear and understandable.

4. Use a collaborative approach

Encourage stakeholders to share their thoughts and opinions so that all viewpoints are considered. Make sure all stakeholders, including IT staff, subject matter experts, and end users, are represented to maintain group diversity. Use diagrams and flowcharts to help stakeholders understand the data model and give feedback efficiently. Schedule regular meetings to discuss progress, review blockers or concerns, and update all stakeholders.

5. Document and communicate the data model

Documenting business requirements plays a vital role when a project is initiated. In the first step, when requirements are gathered and analyzed, it is important to record them in official documents. Similarly, documenting a data model is important when implementing a collaborative approach because it provides coherent guidelines to the teammates working on a project.

Avoid technical jargon and acronyms that not all stakeholders are familiar with. Instead, use clear and concise language to define the data model and its components. Use diagrams and flowcharts with a standardized notation to explain to stakeholders how the data model relates to business processes.

Official data model documents bridge the communication gap between application developers and stakeholders. They give everyone a shared understanding of what has been implemented, including all data entities, attributes, relationships, and the rules defined on the logical layer of the data model. Overall, documenting and communicating the data model is an essential aspect of data modeling and helps to ensure its effectiveness and long-term viability.

Data Modeling Tools

A wide range of tools is available for data modeling. Six of them are described below:

1. ERwin:

A popular data modeling tool. Through its API, developers can create custom data modeling tools that integrate with ERwin to provide additional functionality, which allows users to customize the tool to their needs.

2. SAP PowerDesigner:

SAP PowerDesigner is a tool meant to be customized and used according to the user’s specific needs. It supports scripts in VBScript, JScript, and PerlScript to automate tasks, apply validation rules, and perform complex calculations. Macros can be added to automate repetitive tasks. Add-ins can be custom-developed in .NET or Java and interact with the tool via its API. Data model templates define entities, attributes, relationships, and other key elements. With model extensions, a user can store domain-specific concepts and customize the tool as needed.

3. Oracle SQL Developer Data Modeler:

Oracle SQL Developer Data Modeler is a powerful tool for designing and managing data models. It allows the user to create and alter data structures such as ER diagrams, data types, and constraints. Custom plug-ins can be developed in Java to support custom reports, implement specific data modeling conventions, and so on, and can be shared across teams for easier collaboration and a consistent data model.

4. Toad Data Modeler:

This tool supports relational and NoSQL data modeling, including entity relationship diagramming, reverse engineering, and database schema generation. It also integrates with other data management tools such as Toad for Oracle. According to DB-Engines, Oracle is the most used database management system.

5. Microsoft Visio:

Microsoft Visio is a general-purpose diagramming tool that can be used for data modeling. It includes templates for entity relationship diagrams, data flow diagrams, and other diagram types commonly used in data modeling.

6. MySQL Workbench:

MySQL Workbench is an open-source tool designed to let users create and interact with MySQL databases. Its features include Entity-Relationship diagrams, forward and reverse engineering, and database schema generation.

Many other data modeling tools are available, and the choice of tool depends on the project’s specific requirements and the user’s preferences.

Benefits of Data Modeling

Data modeling has several benefits. It helps ensure that the database is designed to accommodate future growth and changes in business requirements, and it assists in identifying data redundancies, errors, and irregularities for better insights.

It equips data scientists with an in-depth understanding of the data’s structure, attributes, relationships, and constraints. Data modeling also helps optimize data storage, which plays a significant role in minimizing storage costs.

Final Remarks

Data modeling is the fundamental phase of the data management process, supporting business objectives and decision-making driven by data analysis. Building a data model helps us comprehend the data structure, relationships, and limitations, and makes it easier to ensure that everyone working on the project is familiar with the data.

It helps avoid uncertainties and inaccuracies, improves data continuity, reliability, and validity, and provides a common language and a framework or schema for better data management practices.

The examples and discussion in this article showed how data modeling supports processing raw data to discover patterns, trends, and relationships. It also improves data storage efficiency by eliminating useless data and streamlines data retrieval through organized storage.

By adopting best practices and leveraging the right tools and techniques, data professionals can help organizations unlock their data’s full potential, driving business growth and innovation.

The Computing Tutor

"inspiring students to succeed".

100% Student Pass Rate at AQA A Level!

STEM Learning Support Specialist in GCSE Maths, L2 & L3 BTEC IT and A Level Computer Science. Online 1:1 support available to the whole of the UK!

NEW ARRIVAL! A Complete Scheme of Work Resource for the

Level 3 BTEC IT Unit 2 Exam Parts A and B

"Well worth the money . "

Mrs J. Martin-Johnson, Teacher of Business and IT, Thomas Tallis School, London.

The BTEC Unit 5 Data Modelling Unit is an optional unit for all certification levels from the Extended Certificate upwards. Here is a complete 27-lesson scheme of work for the BTEC IT Unit 5 Data Modelling Unit. The resource has been developed with a primary focus on using Microsoft Excel, with over 70 exercises, class tasks, discussion and research activities with suggested answers covering Learning Aims A, B and C for the Unit 5 Specification. The Scheme of Work includes a brand new scenario that students can use to practise the coursework requirements for Learning Aims B and C before attempting the Assignment.

The resources include:

Learning Aim A lessons covering all specification content theory, including:

- Class tasks covering stages in the decision making process.

- Class tasks covering spreadsheet features to support data modelling.

- Lots of data model examples for analysis in the assignment.

- Consistent use of data models within the lessons to promote student understanding.

- Lots of practice data model questions with answers.

Learning Aim B lessons covering all specification content theory, including:

- How to create a functional specification, with suggested answers.

- How to design a data model to support a given scenario, with suggested answers.

- How to review and refine data model designs, with suggested answers.

- A new scenario with full worked answers, where students can practise their design and documentation skills before attempting the assignment.

Learning Aim C lessons covering all specification content theory, including:

- Advanced spreadsheet features as detailed in the specification.

- A fully worked original and revised solution for the new example scenario.

- Answers for carrying out a test plan.

- How to optimise a data model from testing, feedback and documentation.

- How to carry out a data model review and evaluation.

Each lesson includes:

- A teacher presentation with learning objectives, lesson content and end of lesson review.

- A range of class tasks, from worked examples to discussion activities.

- Student Worksheets for all class tasks.

- Suggested answers for the class tasks to encourage and promote discussion and further learning.

- Resource links to relevant websites and videos that can be used in lesson.

Also included is a full outline Scheme of Work for all suggested 27 lessons, which includes:

- Learning objectives.

- Lesson overview.

- Assessment opportunities.

- EDI considerations.

- Homework suggestions.

- A format that can be used for SLT inspections.

- Teacher notes on resource content with suggested ideas for unit delivery.

Available for purchase - a full model answer for the Pearson Authorised Assignment 2 'The Cheese Shop'.

TheComputingTutor is pleased to announce the release of a full worked model answer exemplar for the BTEC IT Unit 5 Data Modelling Assignment 2 "The Cheese Shop", one of the more challenging assignments from Pearson.

Available for purchase - a full model answer for the Pearson Authorised Assignment 2 'The BMI Tracker'

TheComputingTutor is pleased to announce the release of a full worked model Answer exemplar for the BTEC IT Unit 5 Data Modelling Assignment 2 "The BMI Tracker".

More Information

Both of these exemplars are fully worked model answers which include Named Ranges, Macros, VBA code, Graphs, Functions and Formulas as well as cell Styles and Formatting, all of which are designed to answer the Assignment 2 Authorised Assignment Brief from Pearson. In addition to the spreadsheet, there is also a Teacher Guide showing how the solution was designed along with an explanation of key functionality. You will have full access to the worksheets, the macros and the functions so you can see how it works and then use this to help guide your students. The sample downloads are only available as a limited functionality Excel sheet. All macros and functions have been removed and these will only be available in the full version.

Assignment Resources for Assignments 1 and 2 are now available!

The Unit 05 Assignments are relatively straightforward, except that there is a lot of content to cover which could get missed. As part of the RQF BTEC assessment procedures, assessors are not allowed to give students a list of tasks to cover to achieve a grade once the assignment is running. This means that learners can often forget to include key pieces of evidence, or fail to provide evidence in the right format. TheComputingTutor is pleased to announce the release of a full set of Student Guides for the Edexcel BTEC IT Unit 05 Data Modelling. The resources include:

- A full set of Student Guide Assignment Resources covering what is required for Assignments 1 & 2.

- A Teacher presentation showing your students how to approach each of the Assignments.

- A Teacher resource document, with suggested hints and ideas for Unit delivery and how to structure the assignment content.

- An editable PowerPoint and a printable PDF for each Student Guide.

- A tracking sheet for your students to monitor their own progress based on COMPLETE, IN PROGRESS and NOT STARTED for all tasks.

Each Student Guide covers all the marking requirements for Pass criteria for Learning Aims A, B and C as well as showing extensive opportunities for where to include Merit and Distinction criteria evidence. The Student Guides are ideal for weaker candidates, or students struggling to organise their work, as they give a simple, easy to follow checklist of all required Assignment criteria.

Please note: this is not a theory resource; this resource focuses entirely on making sure that your students are able to answer the Assignment tasks independently. Theory content is covered in the associated Scheme of Work.

Each guide has references on every page to where the required content is covered in the 2016 Authorised Pearson textbook. It also includes continual assessment opportunities, so students can identify the areas they are struggling with and you can support them where allowed. You can use these learning resources for individual students who are finding the Assignment procedure challenging, or you can give the Guides to your entire group before they start each Assignment. The choice is yours. The Student Guides only contain the required Assignment content headings; the actual theory content taught is entirely down to you. You have full control of each Guide PowerPoint, so you can edit the slides and move them around to suit your teaching and the needs of your classroom. These resources will help your learners know exactly what they have to do to achieve their grades independently.

Documents included in the teaching resource sample download include:

- 3x Teaching presentations, 1 each for Learning Aims A, B and C.

- 3x Student Guides, 1 each for Learning Aims A, B and C as an editable PowerPoint.

- 3x Student Guides, 1 each for Learning Aims A, B and C as a read-only PDF.

- 2x Student Checklists for Learning Aim A and Learning Aim B&C.

- 1x Student Tracking Document.

- 1x Teacher Notes with ideas and information about Unit Delivery.

In addition, documents in the data model sample include:

- 1x Technical Information about the Data Model.

- 1x reduced functionality spreadsheet.

To view a free sample of files available for the following resources, click on the links below.

See What People Think Of Our Resources!

"The resources for Unit 5 Data Modelling are excellent and are really saving me a lot of time particularly with the current climate. The resources have helped to differentiate the class and their learning abilities whilst at the same time encouraging students to work independently, as well as stretching and challenging them. Well worth the money!!"

Mrs. J Martin-Johnson, Teacher of Business and IT, The Thomas Tallis School, London

What is Data Modeling? Types, Process, and Tools

- 14 min read

- Data Science

- Published: 29 Dec, 2023

Whatever domain you operate in, data is the blood that keeps the heart of your business pumping. If you don’t have enough information or if it’s there but you don’t know how to make sense of it, you will be far behind your rivals.

Mastering data modeling ensures you have reliable data and the expertise to apply it strategically, keeping you ahead in the competitive landscape.

This article explains data modeling, exploring its types, components, techniques, tools, and practical steps for effective design.

What is data modeling?

Data modeling is the process of discovering, analyzing, and representing data requirements for business operations and software applications. It starts with identifying and scoping data needs, followed by visualizing and precisely communicating these needs through a data model. This model serves as a blueprint, detailing the connections and structures of the organization's data.

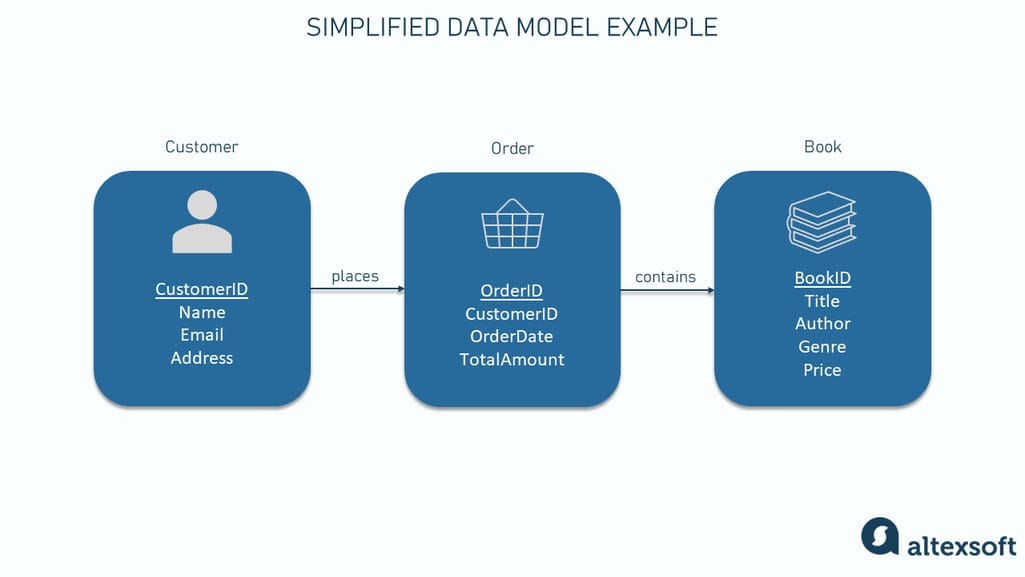

A simple data model in the form of an Entity-Relationship Diagram (ERD) for the online bookstore

For example, the diagram above represents a simplified data model for an online bookstore, featuring three entities "Customer," "Order," and "Book," each with their listed attributes and relationships.

Benefits of data modeling

Among its benefits, data modeling

- provides a shared vocabulary for discussing data across the organization;

- captures and documents essential information about the organization's data and systems;

- serves as a primary communication tool, especially useful during projects involving business process design, software development, and database structuring; and

- offers a foundational starting point for system customization, integration, or replacement.

This process streamlines data management by making it more accessible and understandable, ensuring its integrity and efficient utilization within databases and systems. Additionally, it guides the development and optimization of data architecture and database design, supporting the effective use and flow of data in various applications.

Speaking of data architecture, many people assume that it is the same thing as data modeling. It is not.

Data modeling vs data architecture

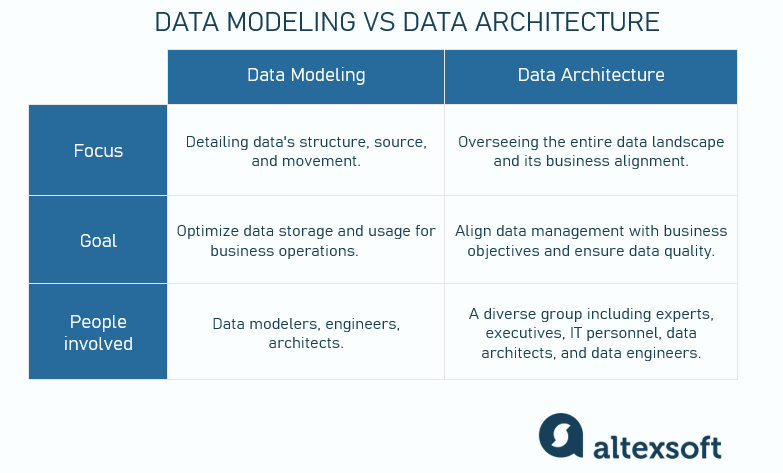

While data modeling and data architecture are both crucial in managing an organization's data, they serve different purposes and operate at different levels.

Data modeling vs. data architecture in a nutshell

Data modeling focus. Data modeling is about creating detailed diagrams or models of data. It deals with identifying what data is needed, where it comes from, how it moves, and how it should be structured.

Data modeling goal. The primary aim is to align the business's core rules with data definitions, optimizing how data is stored and used for business activities.

People involved in data modeling. In most cases, these are data modelers (we’ll explain who they are later). However, other technical professionals such as software engineers, data architects, and sometimes data scientists can also take part in data modeling. They may use AI tools to assist in this process.

Data architecture focus. Data architecture takes a broader view: It encompasses not just the data itself but also how it aligns with the business's overall strategy. It involves planning and overseeing the entire data landscape of an organization.

Data architecture goal. The aim is to ensure data quality, manage data governance, and align data management with business objectives.

People involved in data architecture. Data architecture involves many participants, including IT personnel, data architects, data engineers, nontechnical industry experts, executives, data consumers, and producers.

Data modeling generally focuses on the detailed design and structure of specific data sets. In contrast, data architecture looks at the bigger picture, organizing and governing the entire data ecosystem of an organization.

Data modeling concepts

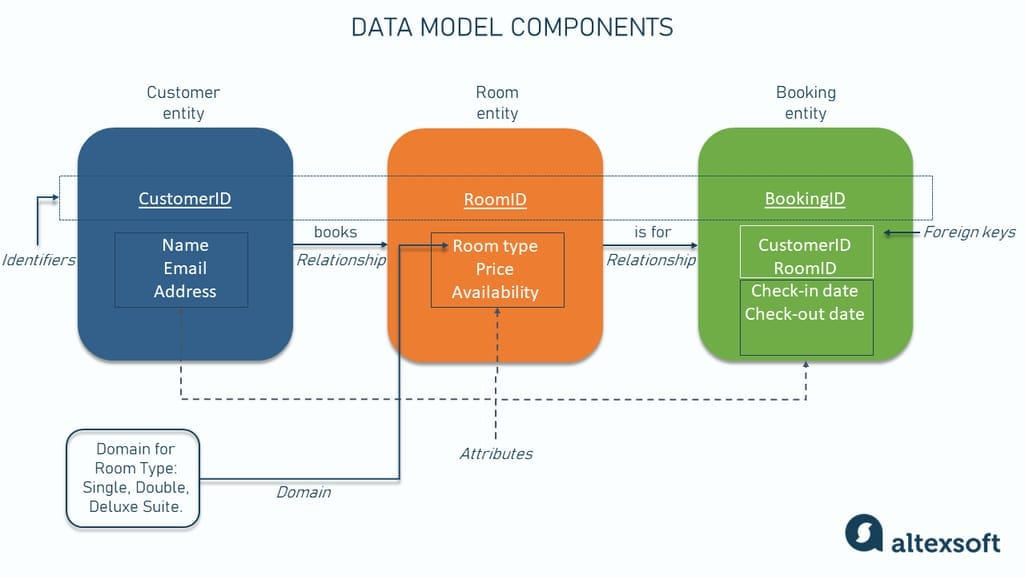

Different data models may use various conventions to represent data, but they fundamentally consist of the same basic building blocks: entities, relationships, attributes, and domains. Let’s take a look at each of them.

Data model components

Entities. An entity, typically depicted as a rectangle in data models, represents a category or object an organization collects information about. Entities are the “nouns” of a data model, answering fundamental questions like who, what, when, where, why, or how. For example, a “who” may be a person or organization of interest, a “what” — a product or service, and a “when” — some time interval like a date of purchase, etc.

In a data model for a hotel property management system, “Customer” is an entity represented as a rectangle labeled “Customer” containing information about hotel guests.

Relationships. Graphically represented as lines, relationships illustrate the associations between entities. They can show high-level interactions between conceptual entities, detailed interactions between logical entities, or constraints between physical entities. We’ll explain conceptual, logical, and physical data modeling types in the next section.

For example, a line connecting the “Customer” and “Room” entities indicates a relationship, such as which customer booked which room.

Attributes. These are properties or characteristics of an entity, usually depicted as a list inside the entity's rectangle. They describe, identify, or measure aspects of the entity.

Returning to our hotel example, attributes like CustomerID, Name, and Contact Information will be listed inside the “Customer” rectangle, providing specific details about each customer.

Identifiers/Primary keys. Identifiers or primary keys, often underlined in the entity, are unique attributes that distinctly identify each instance of an entity. The CustomerID within the “Customer” entity is an identifier, uniquely distinguishing each customer.

Foreign keys. The goal of a foreign key is to create connections between entities. For instance, a foreign key in the “Booking” entity might reference the CustomerID from the “Customer” entity, linking a booking to a specific customer.
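The identifier and foreign key ideas can be sketched with Python's built-in `sqlite3` module. This is only an illustration: the table and column names (`customer`, `booking`, `customer_id`, `booking_id`) and the sample row are assumptions loosely based on the hotel example, not a schema from a real property management system.

```python
import sqlite3

# In-memory database; SQLite enforces foreign keys only when this pragma is on.
conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")

conn.executescript("""
CREATE TABLE customer (
    customer_id INTEGER PRIMARY KEY,  -- identifier: unique per customer
    name        TEXT NOT NULL
);
CREATE TABLE booking (
    booking_id  INTEGER PRIMARY KEY,
    -- foreign key: every booking must point at an existing customer
    customer_id INTEGER NOT NULL REFERENCES customer(customer_id)
);
""")

conn.execute("INSERT INTO customer (customer_id, name) VALUES (1, 'Ada Example')")
conn.execute("INSERT INTO booking (booking_id, customer_id) VALUES (100, 1)")

# A booking that references a nonexistent customer is rejected.
try:
    conn.execute("INSERT INTO booking (booking_id, customer_id) VALUES (101, 999)")
    orphan_rejected = False
except sqlite3.IntegrityError:
    orphan_rejected = True
```

Here the primary key guarantees that each customer row is unique, while the foreign key is what actually ties a booking to one of those rows.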

Domains. These components define the set of possible values for an attribute, providing a means of standardizing characteristics.

The domain for the “Room Type” attribute in the “Room” entity might include specific room categories like “Single,” “Double,” and “Deluxe Suite.”
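One way to express such a domain in code is an enumeration. The sketch below is a minimal illustration in Python; the `Room` class, its `room_number` attribute, and the `parse_room_type` helper are hypothetical names introduced here.

```python
from dataclasses import dataclass
from enum import Enum


class RoomType(Enum):
    """Domain of allowed values for the Room Type attribute."""
    SINGLE = "Single"
    DOUBLE = "Double"
    DELUXE_SUITE = "Deluxe Suite"


@dataclass
class Room:
    room_number: int      # hypothetical attribute, for illustration only
    room_type: RoomType   # restricted to the domain above


def parse_room_type(raw: str) -> RoomType:
    """Convert raw text to a RoomType; raises ValueError if outside the domain."""
    return RoomType(raw)


room = Room(room_number=12, room_type=parse_room_type("Double"))
```

Any value outside the three listed categories fails at the point of entry instead of ending up in the data.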

These components collectively form the structure of a data model, making it a vital tool for organizing and understanding a company's data, such as in a hotel's customer management system.

Data modeling types based on their abstraction levels

In the data modeling process, a data model passes through three phases, evolving in complexity and detail: conceptual, logical, and physical. At each stage, it needs input from both business users and data management professionals.

Different features of conceptual, logical, and physical data models

Conceptual data model

This high-level, simplified representation defines key entities and their relationships according to the business requirements. It's abstract and not tied to technical specifics, aiming at understanding the “what” of the business data, e.g., “What are the key things the business deals with, and how are they related?”

At the conceptual data modeling phase, entity-relationship (ER) diagrams are often utilized. These diagrams help you identify the main entities (like customers, products, etc.) and illustrate how they interact. (We’ll explain ER and other schemas along with their use cases later.)

For example, in a hotel's conceptual data model, you might have entities like "Guest," "Room," and "Reservation," showing fundamental relationships such as guests making room reservations.
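At this level a model is little more than a list of entity names and the links between them. A rough sketch in Python, assuming relationship verbs ("makes", "is for") that are chosen here purely for illustration:

```python
# Conceptual level: only entity names and named relationships,
# with no attributes, data types, or storage details.
entities = {"Guest", "Room", "Reservation"}

# (subject, verb, object) triples; the verbs are illustrative assumptions.
relationships = [
    ("Guest", "makes", "Reservation"),
    ("Reservation", "is for", "Room"),
]


def related_to(entity: str) -> set[str]:
    """Return every entity directly connected to the given one."""
    linked = set()
    for left, _verb, right in relationships:
        if left == entity:
            linked.add(right)
        elif right == entity:
            linked.add(left)
    return linked
```

Calling `related_to("Reservation")` returns both "Guest" and "Room", reflecting that a reservation sits between the two.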

Logical data model

This model is more detailed than the conceptual one. It further refines specific data structures, including entities, their attributes, and relationships, while still not addressing physical storage details.

As far as our hotel example goes, the logical model would detail attributes for "Guest" (like name, contact information), "Room" (like room type, rate), and "Reservation" (like reservation dates, room assigned), providing a more comprehensive understanding of how data elements interrelate within the hotel's operations.
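The attributes named above could be written down as plain Python dataclasses. This is a sketch under assumptions: the field names, the choice of `Decimal` for the rate, and the `nights` helper are illustrative, and nothing here says how or where the data would be stored.

```python
from dataclasses import dataclass
from datetime import date
from decimal import Decimal


@dataclass
class Guest:
    name: str
    contact_info: str


@dataclass
class Room:
    room_type: str
    rate: Decimal


@dataclass
class Reservation:
    guest: Guest     # relationship: which guest made the reservation
    room: Room       # relationship: which room is assigned
    check_in: date
    check_out: date

    def nights(self) -> int:
        """Length of the stay in nights."""
        return (self.check_out - self.check_in).days


reservation = Reservation(
    guest=Guest("Ada Example", "ada@example.com"),
    room=Room("Double", Decimal("120.00")),
    check_in=date(2024, 3, 1),
    check_out=date(2024, 3, 4),
)
```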

Physical data model

As the most detailed model, the physical data model outlines how to store and access data in a particular database. It includes data types, sizes, constraints, and table relationships. In the hotel context, this model would lay out the database schema, describing how guest information, room details, and reservation data are stored, with specific table structures. Additionally, it includes optimization strategies for database performance.
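A physical model for the same hotel might look like the sketch below, written against SQLite through Python's `sqlite3` module. The table names, column sizes, and the index are assumptions made for illustration. Note also that SQLite accepts declarations such as `VARCHAR(100)` but does not enforce the length, so another database system would behave differently on that point.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite needs this to enforce REFERENCES

conn.executescript("""
CREATE TABLE guest (
    guest_id INTEGER PRIMARY KEY,
    name     VARCHAR(100) NOT NULL,
    email    VARCHAR(254) UNIQUE
);
CREATE TABLE room (
    room_id   INTEGER PRIMARY KEY,
    room_type VARCHAR(20) NOT NULL
              CHECK (room_type IN ('Single', 'Double', 'Deluxe Suite')),
    rate      NUMERIC NOT NULL CHECK (rate > 0)
);
CREATE TABLE reservation (
    reservation_id INTEGER PRIMARY KEY,
    guest_id  INTEGER NOT NULL REFERENCES guest(guest_id),
    room_id   INTEGER NOT NULL REFERENCES room(room_id),
    check_in  TEXT NOT NULL,  -- ISO 8601 date string, e.g. '2024-03-01'
    check_out TEXT NOT NULL,
    CHECK (check_out > check_in)
);
-- Index to speed up lookups of reservations by guest (an optimization choice).
CREATE INDEX idx_reservation_guest ON reservation(guest_id);
""")
```

Data types, constraints, table relationships, and one performance-oriented index all appear here, which is the level of detail that separates a physical model from a logical one.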

It’s worth noting that each model serves a specific purpose within the phases of the data modeling process: The conceptual model establishes the overall framework, the logical model details specific data structures and relationships, and the physical model translates these into an actual database schema. You can’t just go with one and ignore the others.

Data modeling techniques: examples and applications

There are a few core data modeling techniques or schemas: relational, entity-relationship, hierarchical, network, dimensional, object-oriented database, and object-relational models. They provide frameworks for organizing, storing, and managing data, each suited to different business needs and data peculiarities.

You can determine the most appropriate technique at the logical data modeling stage once the entities and relationships are clearly defined. Different factors influence the decision, such as the characteristics of the business operations, the complexity of queries and reports needed, the nature of the data (structured, unstructured, or semi-structured), performance considerations, and the intended database management system.

Relational model

In this model, you structure your data in tables, each representing a different entity. Every row in a table is a record with a unique identifier (key), and each column keeps an entity's attribute. Foreign keys establish relationships between tables by referencing primary keys in other tables. This simple and flexible model allows easy data retrieval and manipulation through SQL queries.
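As a small illustration of retrieval through SQL, the sketch below joins two tables on a foreign key and counts reservations per guest. It uses Python's built-in `sqlite3` module; the tables, columns, and sample rows are invented for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE guest (
    guest_id INTEGER PRIMARY KEY,
    name     TEXT NOT NULL
);
CREATE TABLE reservation (
    reservation_id INTEGER PRIMARY KEY,
    guest_id  INTEGER NOT NULL REFERENCES guest(guest_id),
    room_type TEXT NOT NULL
);
INSERT INTO guest VALUES (1, 'Ada Example'), (2, 'Ben Sample');
INSERT INTO reservation VALUES
    (10, 1, 'Double'), (11, 1, 'Single'), (12, 2, 'Double');
""")

# Join the two tables through the foreign key and aggregate per guest.
rows = conn.execute("""
    SELECT g.name, COUNT(r.reservation_id) AS reservations
    FROM guest AS g
    JOIN reservation AS r ON r.guest_id = g.guest_id
    GROUP BY g.guest_id, g.name
    ORDER BY g.name
""").fetchall()
```

Each row of `rows` pairs a guest name with the number of reservations that reference that guest.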

Application: Relational models are fundamental for any application that relies on a relational database. They are essential for accurately representing and understanding data relationships in a wide range of solutions, from enterprise resource planning systems to customer relationship management software.

Entity-relationship model

As we said earlier, this schema is foundational in conceptual data modeling. But it can be used at later phases as well, especially if you work with relational database systems. It employs entities (representing data objects) and relationships (connections between entities) to map out data structures.