10 Real World Data Science Case Studies Projects with Example

Top 10 Data Science Case Studies Projects with Examples and Solutions in Python to inspire your data science learning in 2023.

Data science has been a trending buzzword in recent times. With wide applications in sectors like healthcare, education, retail, transportation, media, and banking, data science is at the core of pretty much every industry out there. The possibilities are endless, from fraud analysis in the finance sector to personalized recommendations in eCommerce. We have developed ten exciting data science case studies to explain how data science is leveraged across various industries to make smarter decisions and develop innovative personalized products tailored to specific customers.


Table of Contents

Data science case studies in retail, data science case study examples in entertainment industry, data analytics case study examples in travel industry, case studies for data analytics in social media, real world data science projects in healthcare, data analytics case studies in oil and gas, what is a case study in data science, how do you prepare a data science case study, 10 most interesting data science case studies with examples.

So, without much ado, let's get started with data science business case studies!

1) Walmart

With humble beginnings as a simple discount retailer, Walmart today operates about 10,500 stores and clubs in 24 countries, along with eCommerce websites, and employs around 2.2 million people around the globe. For the fiscal year ended January 31, 2021, Walmart's total revenue was $559 billion, showing growth of $35 billion with the expansion of the eCommerce sector. Walmart is a data-driven company that works on the principle of 'Everyday low cost' for its consumers. To achieve this goal, it depends heavily on its data science and analytics department for research and development, also known as Walmart Labs. Walmart is home to the world's largest private cloud, which can manage 2.5 petabytes of data every hour! To analyze this humongous amount of data, Walmart has created 'Data Café,' a state-of-the-art analytics hub located within its Bentonville, Arkansas headquarters. The Walmart Labs team invests heavily in building and managing technologies like cloud, data, DevOps, infrastructure, and security.

Walmart is experiencing massive digital growth as the world's largest retailer. Walmart has been leveraging big data and advances in data science to build solutions that enhance, optimize, and customize the shopping experience and serve its customers better. At Walmart Labs, data scientists focus on creating data-driven solutions that power the efficiency and effectiveness of complex supply chain management processes. Here are some of the applications of data science at Walmart:

i) Personalized Customer Shopping Experience

Walmart analyzes customer preferences and shopping patterns to optimize the stocking and displaying of merchandise in its stores. Analysis of big data also helps it understand new item sales, decide which products to discontinue, and track the performance of brands.

ii) Order Sourcing and On-Time Delivery Promise

Millions of customers view items on Walmart.com, and Walmart provides each customer a real-time estimated delivery date for the items purchased. Walmart runs a backend algorithm that estimates this based on the distance between the customer and the fulfillment center, inventory levels, and shipping methods available. The supply chain management system determines the optimum fulfillment center based on distance and inventory levels for every order. It also has to decide on the shipping method to minimize transportation costs while meeting the promised delivery date.
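To make the sourcing decision above concrete, here is a minimal sketch of choosing a fulfillment center by distance and inventory. It is not Walmart's algorithm: the `FulfillmentCenter` class, the planar coordinates, and the `pick_center` helper are all hypothetical names made up for illustration, and shipping-method costs are left out entirely.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class FulfillmentCenter:
    name: str
    x: float     # hypothetical planar coordinates, in km
    y: float
    stock: dict  # item id -> units on hand


def pick_center(centers, customer_xy, order):
    """Return the nearest center that can fill the whole order, or None."""
    cx, cy = customer_xy
    # Keep only centers with enough inventory for every line of the order.
    eligible = [
        c for c in centers
        if all(c.stock.get(item, 0) >= qty for item, qty in order.items())
    ]
    if not eligible:
        return None
    # Among eligible centers, choose the one closest to the customer.
    return min(eligible, key=lambda c: hypot(c.x - cx, c.y - cy))


centers = [
    FulfillmentCenter("north", 0.0, 10.0, {"tv": 0, "cable": 50}),
    FulfillmentCenter("south", 0.0, -40.0, {"tv": 5, "cable": 20}),
]
choice = pick_center(centers, (0.0, 0.0), {"tv": 1, "cable": 2})
print(choice.name)
```

In this toy data the nearer center is out of stock for one item, so the farther one is selected. A real system would also weigh shipping methods and cost against the promised delivery date.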


iii) Packing Optimization

Packing optimization, also known as box recommendation, is a daily occurrence in the shipping of items in retail and eCommerce business. Whenever items of an order, or of multiple orders placed by the same customer, are picked from the shelf and are ready for packing, Walmart's box recommendation system determines, within a fixed amount of time, the best-sized box to hold all the ordered items with a minimum of in-box space wasted. This is an instance of the Bin Packing Problem, a classic NP-Hard problem familiar to data scientists.
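Because bin packing is NP-Hard, practical systems usually rely on heuristics. The sketch below is a simplified illustration that treats items and boxes as volumes only (real packing also has to respect dimensions and orientation). The function names are hypothetical, and first-fit decreasing is a standard textbook heuristic, not necessarily what Walmart uses.

```python
def recommend_box(item_volumes, box_volumes):
    """Smallest available box whose volume covers the whole order, or None."""
    needed = sum(item_volumes)
    fitting = [b for b in box_volumes if b >= needed]
    return min(fitting) if fitting else None


def first_fit_decreasing(item_volumes, box_volume):
    """Pack items into identical boxes: largest first, first box with room."""
    free = []     # remaining capacity of each opened box
    packing = []  # item volumes placed in each opened box
    for v in sorted(item_volumes, reverse=True):
        if v > box_volume:
            raise ValueError("item does not fit in an empty box")
        for i, room in enumerate(free):
            if v <= room:
                free[i] -= v
                packing[i].append(v)
                break
        else:
            # No opened box has room, so open a new one.
            free.append(box_volume - v)
            packing.append([v])
    return packing


print(recommend_box([3, 4], [5, 8, 12]))
print(first_fit_decreasing([4, 8, 1, 4, 2, 1], 10))
```

The first function answers the single-box question (which box wastes the least space), while the second shows the general heuristic when an order has to be split across several boxes.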

Here is a link to a sales prediction data science case study to help you understand the applications of data science in the real world. The Walmart Sales Forecasting Project uses historical sales data for 45 Walmart stores located in different regions. Each store contains many departments, and you must build a model to project the sales for each department in each store. You can also try your hand at the Inventory Demand Forecasting Data Science Project to develop a machine learning model that forecasts inventory demand accurately based on historical sales data.
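Before fitting a machine learning model to a store-and-department forecasting task like this one, it helps to have a simple baseline to beat. The sketch below is one such baseline under assumed inputs: the row layout `(store, dept, week_index, weekly_sales)` and the function name are hypothetical, not the project's actual schema.

```python
from collections import defaultdict


def moving_average_forecast(rows, window=4):
    """Forecast next-period sales per (store, dept) as a trailing average.

    rows: iterable of (store, dept, week_index, weekly_sales) tuples.
    """
    history = defaultdict(list)
    # Sort by week so each series is in chronological order.
    for store, dept, _week, sales in sorted(rows, key=lambda r: r[2]):
        history[(store, dept)].append(sales)
    return {
        key: sum(values[-window:]) / len(values[-window:])
        for key, values in history.items()
    }


rows = [
    (1, 1, 1, 100.0),
    (1, 1, 2, 200.0),
    (1, 2, 1, 50.0),
]
forecast = moving_average_forecast(rows, window=2)
print(forecast)
```

Any model you build should do better than this baseline on held-out weeks; if it does not, the extra complexity is not paying for itself.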

2) Amazon

Amazon is an American multinational technology company based in Seattle, USA. It started as an online bookseller, but today it focuses on eCommerce, cloud computing, digital streaming, and artificial intelligence. It hosts an estimated 1,000,000,000 gigabytes of data across more than 1,400,000 servers. Through its constant innovation in data science and big data, Amazon stays ahead in understanding its customers. Here are a few data analytics case study examples at Amazon:

i) Recommendation Systems

Data science models help Amazon understand customers' needs and recommend products to them before they even search for them; this model uses collaborative filtering. Amazon uses data on 152 million customer purchases to help users decide which products to buy. The company generates 35% of its annual sales through recommendation-based systems (RBS).

Here is a Recommender System Project to help you build a recommendation system using collaborative filtering.
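As a rough illustration of collaborative filtering, the sketch below scores unseen items for a user by their cosine similarity to items the user has already rated (the item-based variant). The toy `ratings` data and all function names are hypothetical, and this is a teaching sketch rather than Amazon's production method.

```python
from math import sqrt

# Hypothetical toy data: user -> {item: rating}
ratings = {
    "ann": {"book": 5, "lamp": 4},
    "bob": {"book": 4, "lamp": 5, "desk": 5},
    "cat": {"book": 1, "desk": 2},
}


def item_vector(item):
    """Ratings for one item, keyed by the users who rated it."""
    return {user: r[item] for user, r in ratings.items() if item in r}


def cosine(a, b):
    """Cosine similarity between two sparse rating vectors."""
    shared = set(a) & set(b)
    if not shared:
        return 0.0
    dot = sum(a[u] * b[u] for u in shared)
    norm_a = sqrt(sum(v * v for v in a.values()))
    norm_b = sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b)


def recommend(user, top_n=1):
    """Rank items the user has not rated by similarity-weighted ratings."""
    seen = ratings[user]
    all_items = {item for r in ratings.values() for item in r}
    scores = {}
    for candidate in all_items - set(seen):
        cand_vec = item_vector(candidate)
        scores[candidate] = sum(
            cosine(cand_vec, item_vector(item)) * rating
            for item, rating in seen.items()
        )
    return sorted(scores, key=scores.get, reverse=True)[:top_n]


print(recommend("ann"))
```

With real purchase data you would typically use implicit feedback (bought or not) rather than explicit ratings, and a library built for sparse matrices.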

ii) Retail Price Optimization

Amazon product prices are optimized using a predictive model that determines the best price so that users do not refuse to buy a product because of its price. The model determines the optimal price by considering the customer's likelihood of purchasing the product and how the price will affect the customer's future buying patterns. The price of a product is determined according to your activity on the website, competitors' pricing, product availability, item preferences, order history, expected profit margin, and other factors.

Check out this Retail Price Optimization Project to build a Dynamic Pricing Model.

iii) Fraud Detection

As a major eCommerce business, Amazon remains at high risk of retail fraud. As a preemptive measure, the company collects historical and real-time data for every order and uses machine learning algorithms to find transactions with a higher probability of being fraudulent. This proactive measure has helped the company restrict clients with an excessive number of product returns.

You can look at this Credit Card Fraud Detection Project to implement a fraud detection model to classify fraudulent credit card transactions.
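A trained classifier is the usual tool for fraud detection, but a statistical screen is a useful first step for spotting unusual transactions. The sketch below flags amounts by z-score; it is a deliberately simple stand-in for a machine learning model, and the function name and threshold are assumptions chosen for illustration.

```python
from statistics import mean, pstdev


def flag_outliers(amounts, threshold=3.0):
    """Return indexes of amounts more than `threshold` std devs from the mean."""
    mu = mean(amounts)
    sigma = pstdev(amounts)
    if sigma == 0:
        return []  # all amounts identical, nothing stands out
    return [
        i for i, amount in enumerate(amounts)
        if abs(amount - mu) / sigma > threshold
    ]


# Twenty ordinary purchases followed by one very large one.
amounts = [20.0] * 20 + [5000.0]
print(flag_outliers(amounts))
```

A screen like this only looks at one feature. Real fraud models combine many signals (device, location, order history) and are evaluated on precision and recall because fraudulent cases are rare.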


Let us explore data analytics case study examples in the entertainment industry.

3) Netflix

Netflix started as a DVD rental service in 1997 and has since expanded into the streaming business. Headquartered in Los Gatos, California, Netflix is the largest content streaming company in the world. Netflix currently has over 208 million paid subscribers worldwide, and with streaming supported on thousands of smart devices, around 3 billion hours are watched every month. The secret to this massive growth and popularity is Netflix's advanced use of data analytics and recommendation systems to provide personalized and relevant content recommendations to its users. Data is collected from over 100 billion events every day. Here are a few examples of data analysis case studies applied at Netflix:

i) Personalized Recommendation System

Netflix uses over 1,300 recommendation clusters based on consumer viewing preferences to provide a personalized experience. The data that Netflix collects from its users includes viewing time, platform searches for keywords, and metadata related to content abandonment, such as when content is paused, rewound, or rewatched. Using this data, Netflix can predict what a viewer is likely to watch and give each user a personalized watchlist. Some of the algorithms used by the Netflix recommendation system are the Personalized Video Ranker, the Trending Now ranker, and the Continue Watching ranker.

ii) Content Development using Data Analytics

Netflix uses data science to analyze the behavior and patterns of its users to recognize themes and categories that the masses prefer to watch. This data is used to produce shows like The Umbrella Academy, Orange Is the New Black, and The Queen's Gambit. These shows may seem like a huge risk, but they are grounded in data analytics that assured Netflix they would succeed with its audience. Data analytics is helping Netflix come up with content that its viewers want to watch even before they know they want to watch it.

iii) Marketing Analytics for Campaigns

Netflix uses data analytics to find the right time to launch shows and ad campaigns to have maximum impact on the target audience. Marketing analytics helps it come up with different trailers and thumbnails for different groups of viewers. For example, the House of Cards Season 5 trailer with a giant American flag was launched during the American presidential elections, as it would resonate well with the audience.

Here is a Customer Segmentation Project using association rule mining to understand the primary grouping of customers based on various parameters.
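Association rule mining rests on three standard measures: support, confidence, and lift. The sketch below computes them for a single rule over a toy set of viewing baskets. The data and the `rule_metrics` name are hypothetical; a full miner such as Apriori would additionally search for all frequent itemsets.

```python
def rule_metrics(transactions, antecedent, consequent):
    """Support, confidence, and lift for the rule antecedent -> consequent."""
    a, c = set(antecedent), set(consequent)
    n = len(transactions)
    n_a = sum(1 for t in transactions if a <= set(t))
    n_c = sum(1 for t in transactions if c <= set(t))
    n_ac = sum(1 for t in transactions if (a | c) <= set(t))
    support = n_ac / n                       # P(A and C)
    confidence = n_ac / n_a if n_a else 0.0  # P(C | A)
    lift = confidence / (n_c / n) if n_c else 0.0  # P(C | A) / P(C)
    return support, confidence, lift


# Hypothetical genres watched by four customers.
baskets = [
    {"drama", "thriller"},
    {"drama", "thriller"},
    {"drama"},
    {"comedy"},
]
support, confidence, lift = rule_metrics(baskets, {"drama"}, {"thriller"})
print(support, confidence, lift)
```

A lift above 1 suggests the two genres appear together more often than chance would predict, which is the kind of grouping signal a segmentation analysis looks for.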

4) Spotify

In a world where purchasing music is a thing of the past and streaming music is the current trend, Spotify has emerged as one of the most popular streaming platforms. With 320 million monthly users, around 4 billion playlists, and approximately 2 million podcasts, Spotify leads the pack among well-known streaming platforms like Apple Music, Wynk, Songza, Amazon Music, etc. The success of Spotify has mainly depended on data analytics. By analyzing massive volumes of listener data, Spotify provides real-time and personalized services to its listeners. Most of Spotify's revenue comes from paid premium subscriptions. Here are some examples of data analytics case studies used by Spotify to provide enhanced services to its listeners:

i) Personalization of Content using Recommendation Systems

Spotify uses BaRT (Bandits for Recommendations as Treatments) to generate music recommendations for its listeners in real time. BaRT ignores any song a user listens to for less than 30 seconds. The model is retrained every day to provide updated recommendations. A patent granted to Spotify covers an AI application that identifies a user's musical tastes based on audio signals, gender, age, and accent to make better music recommendations.

Spotify creates daily playlists for its listeners based on their taste profiles. These 'Daily Mixes' contain songs the user has added to their playlists, or songs by artists the user has included in their playlists. They also include new artists and songs that the user might be unfamiliar with but that fit the playlist. Similar to these are the weekly 'Release Radar' playlists, which contain newly released songs from artists that the listener follows or has liked before.

ii) Targeted Marketing through Customer Segmentation

Beyond enhancing personalized song recommendations, Spotify uses its massive user dataset for targeted ad campaigns and personalized service recommendations. Spotify uses ML models to analyze listeners' behavior and group them based on music preferences, age, gender, ethnicity, etc. These insights help it create ad campaigns for a specific target audience. One of its well-known ad campaigns was the meme-inspired ads for potential target customers, which was a huge success globally.

iii) CNNs for Classification of Songs and Audio Tracks

Spotify builds audio models to evaluate songs and tracks, which helps develop better playlists and recommendations for its users. These models allow Spotify to filter new tracks based on their lyrics and rhythms and recommend them to users who like similar tracks (collaborative filtering). Spotify also uses NLP (natural language processing) to scan articles and blogs and analyze the words used to describe songs and artists. These analytical insights help group and identify similar artists and songs, which can then be used to build playlists.

Here is a Music Recommender System Project for you to start learning. We have listed another music recommendations dataset for you to use for your projects: Dataset1. You can use this dataset of Spotify metadata to classify songs based on artists, mood, and liveliness. Plot histograms and heatmaps to get a better understanding of the dataset. Use classification algorithms like logistic regression and SVM, along with dimensionality reduction techniques such as principal component analysis, to generate valuable insights from the dataset.


Below you will find case studies for data analytics in the travel and tourism industry.

5) Airbnb

Airbnb was born in 2007 in San Francisco and has since grown to 4 million hosts and 5.6 million listings worldwide, which have welcomed more than 1 billion guest arrivals in almost every country across the globe. Airbnb is active in every country on the planet except for Iran, Sudan, Syria, and North Korea. That is around 97.95% of the world. Treating data as the voice of its customers, Airbnb uses the large volume of customer reviews and host inputs to understand trends across communities, rate user experiences, and make informed decisions to build a better business model. The data scientists at Airbnb are developing exciting new solutions to boost the business and find the best match between its customers and hosts. Airbnb's data servers handle approximately 10 million requests a day and process around one million search queries. This lets Airbnb offer personalized services by creating a good match between guests and hosts for a better customer experience.

i) Recommendation Systems and Search Ranking Algorithms

Airbnb helps people find 'local experiences' in a place with the help of search algorithms that make searches and listings precise. Airbnb uses a 'listing quality score' to rank homes based on their proximity to the searched location and on previous guest reviews. Airbnb uses deep neural networks to build models that take the guest's earlier stays and area information into account to find a good match. The search algorithms are optimized based on guest and host preferences, rankings, pricing, and availability to understand users' needs and provide the best match possible.

ii) Natural Language Processing for Review Analysis

Airbnb characterizes data as the voice of its customers. Customer and host reviews give direct insight into the experience, but star ratings alone are not enough to understand it. Hence, Airbnb uses natural language processing to understand reviews and the sentiments behind them. The NLP models are developed using convolutional neural networks.

Practice this Sentiment Analysis Project for analyzing product reviews to understand the basic concepts of natural language processing.

iii) Smart Pricing using Predictive Analytics

The Airbnb host community uses the service as a source of supplementary income. The vacation homes and guest houses rented to customers raise local community earnings, as Airbnb guests stay 2.4 times longer and spend approximately 2.3 times as much money as hotel guests. These earnings have a significant positive impact on the local neighborhood community. Airbnb uses predictive analytics to predict the prices of listings and help hosts set a competitive and optimal price. The overall profitability of an Airbnb host depends on factors like the time invested by the host and responsiveness to changing demand across seasons. The factors that affect real-time smart pricing are the location of the listing, proximity to transport options, season, and amenities available in the neighborhood of the listing.

Here is a Price Prediction Project to help you understand the concept of predictive analysis, which is common in case studies for data analytics.

6) Uber

Uber is the biggest global taxi service provider. As of December 2018, Uber had 91 million monthly active consumers and 3.8 million drivers, and it completed 14 million trips each day. Uber uses data analytics and big data-driven technologies to optimize its business processes and provide enhanced customer service. The data science team at Uber is constantly exploring futuristic technologies to provide better service. Machine learning and data analytics help Uber make data-driven decisions that enable benefits like ride-sharing, dynamic price surges, better customer support, and demand forecasting. Here are some of the real world data science projects used by Uber:

i) Dynamic Pricing for Price Surges and Demand Forecasting

Uber prices change at peak hours based on demand. Uber uses surge pricing to encourage more drivers to get on the road and meet the demand from passengers. When prices increase, both the driver and the passenger are informed about the surge in price. Uber uses a patented predictive model for price surging called 'Geosurge'. It is based on the demand for rides and the location.
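The details of Geosurge are not public in this article, so the sketch below only illustrates the general idea of demand-based pricing: a multiplier that rises with the ratio of ride requests to available drivers in an area. The function name, the cap of 3.0, and the ratio rule are all assumptions made for illustration.

```python
def surge_multiplier(open_requests, available_drivers, cap=3.0):
    """Toy surge rule: price scales with demand/supply, between 1.0 and cap."""
    if available_drivers <= 0:
        return cap  # no supply at all, so apply the maximum multiplier
    ratio = open_requests / available_drivers
    # Never discount below the base fare, never exceed the cap.
    return round(min(cap, max(1.0, ratio)), 2)


print(surge_multiplier(10, 20))   # supply exceeds demand
print(surge_multiplier(30, 20))   # moderate shortage
print(surge_multiplier(100, 10))  # severe shortage, capped
```

A production system would forecast demand per location ahead of time rather than react to the current ratio, which is where the demand forecasting models mentioned above come in.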

ii) One-Click Chat

Uber has developed a machine learning and natural language processing solution called one-click chat, or OCC, for coordination between drivers and users. This feature anticipates responses to commonly asked questions, making it easy for drivers to respond to customer messages. Drivers can reply with the click of just one button. One-click chat is built on Uber's machine learning platform, Michelangelo, to perform NLP on rider chat messages and generate appropriate responses to them.

iii) Customer Retention

Failure to meet customer demand for cabs could lead users to opt for other services. Uber uses machine learning models to bridge this demand-supply gap. By using prediction models to predict the demand in any location, Uber retains its customers. Uber also uses a tier-based reward system, which segments customers into different levels based on usage. The higher the level a user achieves, the better the perks. Uber also provides personalized destination suggestions based on the user's history and frequently traveled destinations.

You can take a look at this Python Chatbot Project and build a simple chatbot application to better understand the techniques used for natural language processing. You can also practice building a demand forecasting model with this project using time series analysis. Another project uses time series forecasting and clustering on a dataset containing geospatial data to forecast customer demand for Ola rides.


7) LinkedIn

LinkedIn is the largest professional social networking site with nearly 800 million members in more than 200 countries worldwide. Almost 40% of the users access LinkedIn daily, clocking around 1 billion interactions per month. The data science team at LinkedIn works with this massive pool of data to generate insights to build strategies, apply algorithms and statistical inferences to optimize engineering solutions, and help the company achieve its goals. Here are some of the real world data science projects at LinkedIn:

i) Search Algorithms and Recommendation Systems in LinkedIn Recruiter

LinkedIn Recruiter helps recruiters build and manage a talent pool to optimize the chances of hiring candidates successfully. This sophisticated product is built on search and recommendation engines. LinkedIn Recruiter handles complex queries and filters on a large, constantly growing dataset, and the results delivered have to be relevant and specific. The initial search model was based on linear regression but was eventually upgraded to gradient boosted decision trees to capture non-linear correlations in the dataset. In addition to these models, LinkedIn Recruiter also uses the Generalized Linear Mixed (GLMix) model to improve the results of prediction problems and give personalized results.

ii) Recommendation Systems Personalized for News Feed

The LinkedIn news feed is the heart and soul of the professional community. A member's newsfeed is a place to discover conversations among connections, career news, posts, suggestions, photos, and videos. Every time a member visits LinkedIn, machine learning algorithms identify the best exchanges to be displayed on the feed by sorting through posts and ranking the most relevant results on top. The algorithms help LinkedIn understand member preferences and help provide personalized news feeds. The algorithms used include logistic regression, gradient boosted decision trees and neural networks for recommendation systems.

iii) CNNs to Detect Inappropriate Content

Providing a safe community where people can trust each other and express themselves professionally has been a critical goal at LinkedIn. LinkedIn has invested heavily in building solutions to detect fake accounts and abusive behavior on its platform. Any form of spam, harassment, or inappropriate content is immediately flagged and taken down. Such content can range from profanity to advertisements for illegal services. LinkedIn uses a machine learning model based on convolutional neural networks. This classifier is trained on a dataset containing accounts labeled as either "inappropriate" or "appropriate." The inappropriate list consists of accounts having content from "blocklisted" phrases or words and a small portion of manually reviewed accounts reported by the user community.
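The labeling step described above, marking content that contains blocklisted phrases, can be sketched in a few lines. This covers only the construction of training labels, not the CNN classifier itself; the phrases in `BLOCKLIST` and the `label_text` helper are hypothetical placeholders.

```python
import re

# Hypothetical blocklisted phrases, for illustration only.
BLOCKLIST = {"free money", "click here now"}


def label_text(text, blocklist=BLOCKLIST):
    """Label text 'inappropriate' if it contains any blocklisted phrase."""
    # Lowercase and collapse whitespace so simple spacing tricks do not evade the check.
    normalized = re.sub(r"\s+", " ", text.lower())
    if any(phrase in normalized for phrase in blocklist):
        return "inappropriate"
    return "appropriate"


print(label_text("Get FREE   money today"))
print(label_text("Hiring a data engineer in Pune"))
```

Labels produced this way are noisy, which is why the text mentions combining them with manually reviewed accounts before training a classifier.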

Here is a Text Classification Project to help you understand NLP basics for text classification. You can find a news recommendation system dataset to help you build a personalized news recommender system. You can also use this dataset to build a classifier using logistic regression, Naive Bayes, or Neural networks to classify toxic comments.

8) Pfizer

Pfizer is a multinational pharmaceutical company headquartered in New York, USA. It is one of the largest pharmaceutical companies globally, known for developing a wide range of medicines and vaccines in disciplines like immunology, oncology, cardiology, and neurology. Pfizer became a household name in 2020 when its COVID-19 vaccine was the first to receive emergency use authorization from the FDA. In early November 2021, the CDC approved the Pfizer vaccine for kids aged 5 to 11. Pfizer has been using machine learning and artificial intelligence to develop drugs and streamline trials, which played a massive role in developing and deploying the COVID-19 vaccine. Here are a few data analytics case studies by Pfizer:

i) Identifying Patients for Clinical Trials

Artificial intelligence and machine learning are used to streamline and optimize clinical trials to increase their efficiency. Natural language processing and exploratory data analysis of patient records can help identify suitable patients for clinical trials, including patients with distinct symptoms. These techniques can also help examine interactions among potential trial members' specific biomarkers and predict drug interactions and side effects, which can help avoid complications. Pfizer's AI implementation helped rapidly identify signals within the noise of millions of data points across its 44,000-candidate COVID-19 clinical trial.

ii) Supply Chain and Manufacturing

Data science and machine learning techniques help pharmaceutical companies better forecast demand for vaccines and drugs and distribute them efficiently. Machine learning models can help identify efficient supply systems by automating and optimizing the production steps. This will help supply drugs customized to small pools of patients in specific gene pools. Pfizer uses machine learning to predict the maintenance cost of the equipment it uses. Predictive maintenance using AI is the next big step for pharmaceutical companies to reduce costs.

iii) Drug Development

Computer simulations of proteins, tests of their interactions, and yield analysis help researchers develop and test drugs more efficiently. In 2016, Watson Health and Pfizer announced a collaboration to utilize IBM Watson for Drug Discovery to help accelerate Pfizer's research in immuno-oncology, an approach to cancer treatment that uses the body's immune system to help fight cancer. Deep learning models have recently been used for bioactivity and synthesis prediction for drugs and vaccines, in addition to molecular design. Deep learning has been a revolutionary technique for drug discovery, as it factors in everything from new applications of medications to possible toxic reactions, which can save millions in drug trials.

You can create a machine learning model to predict molecular activity to help design medicine using this dataset. You may build a CNN or a deep neural network for this data analyst case study project.


9) Shell Data Analyst Case Study Project

Shell is a global group of energy and petrochemical companies with over 80,000 employees in around 70 countries. Shell uses advanced technologies and innovations to help build a sustainable energy future. As the world needs more and cleaner energy solutions, Shell is going through a significant transition with the aim of being a clean energy company by 2050. This requires substantial changes in the way energy is used. Digital technologies, including AI and machine learning, play an essential role in this transformation. They enable efficient exploration and energy production, more reliable manufacturing, more nimble trading, and a personalized customer experience. Using AI in various phases of the organization will help Shell achieve this goal and stay competitive in the market. Here are a few data analytics case studies in the petrochemical industry:

i) Precision Drilling

Shell is involved in the entire oil and gas supply chain, ranging from mining hydrocarbons to refining the fuel to retailing it to customers. Recently, Shell has started using reinforcement learning to control the drilling equipment used in mining. Reinforcement learning works on a reward-based system driven by the outcome of the AI model's actions. The algorithm is designed to guide the drills as they move through the subsurface, based on historical data from drilling records. This data includes information such as the size of drill bits, temperatures, pressures, and knowledge of seismic activity. The model helps the human operator understand the environment better, leading to better and faster results with minimal damage to the machinery used.

ii) Efficient Charging Terminals

Due to climate change, governments have encouraged people to switch to electric vehicles to reduce carbon dioxide emissions. However, the lack of public charging terminals has deterred people from switching to electric cars. Shell uses AI to monitor and predict the demand for terminals to provide efficient supply. Multiple vehicles charging from a single terminal may create a considerable grid load, and predictions of demand can help make this process more efficient.

iii) Monitoring Service and Charging Stations

Another Shell initiative, trialed in Thailand and Singapore, is the use of computer vision cameras that watch for potentially hazardous activities, like lighting cigarettes in the vicinity of the pumps while refueling. The model is built to process the content of the captured images and to label and classify it. The algorithm can then alert the staff and hence reduce the risk of fires. The model could be trained further to detect rash driving or thefts in the future.

Here is a project to help you understand multiclass image classification. You can use the Hourly Energy Consumption Dataset to build an energy consumption prediction model. You can use time series with XGBoost to develop your model.
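Tree-based models such as XGBoost do not understand time order on their own, so a time series is usually turned into a supervised table of lagged values first. The sketch below shows that step in plain Python; the `make_lag_features` name and the choice of lags are assumptions, and the resulting `X` and `y` could then be passed to any regressor.

```python
def make_lag_features(series, lags=(1, 24)):
    """Turn a series into (X, y): each row holds the values `lag` steps back."""
    max_lag = max(lags)
    features, targets = [], []
    # Start at max_lag so every requested lag exists for each row.
    for t in range(max_lag, len(series)):
        features.append([series[t - lag] for lag in lags])
        targets.append(series[t])
    return features, targets


# Tiny hourly-consumption example with lags of 1 and 2 hours.
consumption = [10, 11, 12, 13, 14]
X, y = make_lag_features(consumption, lags=(1, 2))
print(X)
print(y)
```

For hourly energy data, lags such as 24 (same hour yesterday) and 168 (same hour last week) are common choices, along with calendar features like hour of day and day of week.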

10) Zomato Case Study on Data Analytics

Zomato was founded in 2010 and is currently one of the most well-known food tech companies. Zomato offers services like restaurant discovery, home delivery, online table reservation, online payments for dining, etc. Zomato partners with restaurants to provide tools to acquire more customers while also providing delivery services and easy procurement of ingredients and kitchen supplies. Currently, Zomato has over 2 lakh (200,000) restaurant partners and around 1 lakh (100,000) delivery partners. Zomato has closed over ten crore (100 million) delivery orders to date. Zomato uses ML and AI to boost its business growth, drawing on the massive amount of data collected over the years from food orders and user consumption patterns. Here are a few examples of data analyst case study projects developed by the data scientists at Zomato:

i) Personalized Recommendation System for Homepage

Zomato uses data analytics to create personalized homepages for its users. Zomato uses data science to provide order personalization, like giving recommendations to the customers for specific cuisines, locations, prices, brands, etc. Restaurant recommendations are made based on a customer's past purchases, browsing history, and what other similar customers in the vicinity are ordering. This personalized recommendation system has led to a 15% improvement in order conversions and click-through rates for Zomato.

You can use the Restaurant Recommendation Dataset to build a restaurant recommendation system to predict what restaurants customers are most likely to order from, given the customer location, restaurant information, and customer order history.

ii) Analyzing Customer Sentiment

Zomato uses natural language processing and machine learning to understand customer sentiment from social media posts and customer reviews. This helps the company gauge the inclination of its customer base towards the brand. Deep learning models analyze the sentiment of brand mentions on social networking sites like Twitter, Instagram, LinkedIn, and Facebook. These analytics give the company insights that help it build the brand and understand the target audience.

iii) Predicting Food Preparation Time (FPT)

Food preparation time is an essential variable in the estimated delivery time of an order placed by a customer on Zomato. It depends on numerous factors like the number of dishes ordered, time of the day, footfall in the restaurant, day of the week, etc. Accurate prediction of the food preparation time leads to a better estimated delivery time, which makes delivery partners less likely to breach it. Zomato uses a bidirectional LSTM-based deep learning model that considers all these features and predicts the food preparation time for each order in real time.

Data scientists are companies' secret weapons for analyzing customer sentiment and behavior and leveraging those insights to drive conversion, loyalty, and profits. These 10 data science case study projects with examples and solutions show you how various organizations use data science technologies to succeed and stay at the top of their field. To summarize, data science has not only accelerated the performance of companies but has also made it easier for them to manage and sustain that performance.

FAQs on Data Analysis Case Studies

What is a case study in data science?

A case study in data science is an in-depth analysis of a real-world problem using data-driven approaches. It involves collecting, cleaning, and analyzing data to extract insights and solve challenges, offering practical insights into how data science techniques can address complex issues across various industries.

How do you prepare a data science case study?

To create a data science case study, identify a relevant problem, define objectives, and gather suitable data. Clean and preprocess data, perform exploratory data analysis, and apply appropriate algorithms for analysis. Summarize findings, visualize results, and provide actionable recommendations, showcasing the problem-solving potential of data science techniques.
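The workflow described above can be sketched end to end on a toy dataset. The records, the variable names, and the choice of a one-variable least-squares fit are all assumptions made purely for illustration; a real case study would use far richer data and models.

```python
from statistics import mean

# Hypothetical raw records: (advertising spend, sales); None marks a missing value.
raw = [(1.0, 3.1), (2.0, 5.0), (None, 4.2), (3.0, 6.9), (4.0, 9.1), (5.0, None)]

# 1. Clean: drop rows that contain a missing value.
clean = [(x, y) for x, y in raw if x is not None and y is not None]

# 2. Explore: basic summary statistics.
xs = [x for x, _ in clean]
ys = [y for _, y in clean]
summary = {"n": len(clean), "mean_x": mean(xs), "mean_y": mean(ys)}


# 3. Model: ordinary least squares for y = intercept + slope * x.
def fit_line(xs, ys):
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


slope, intercept = fit_line(xs, ys)

# 4. Report: turn the fit into a statement a stakeholder can act on.
print(summary)
print(f"Each extra unit of spend is associated with about {slope:.2f} more units of sales.")
```

Keeping each stage as a separate, named step makes it easier to explain in the written case study which decisions were made where.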

About the Author

ProjectPro is the only online platform designed to help professionals gain practical, hands-on experience in big data, data engineering, data science, and machine learning related technologies. Having over 270+ reusable project templates in data science and big data with step-by-step walkthroughs,

© 2024 Iconiq Inc.

Statistics Case Study and Dataset Resources

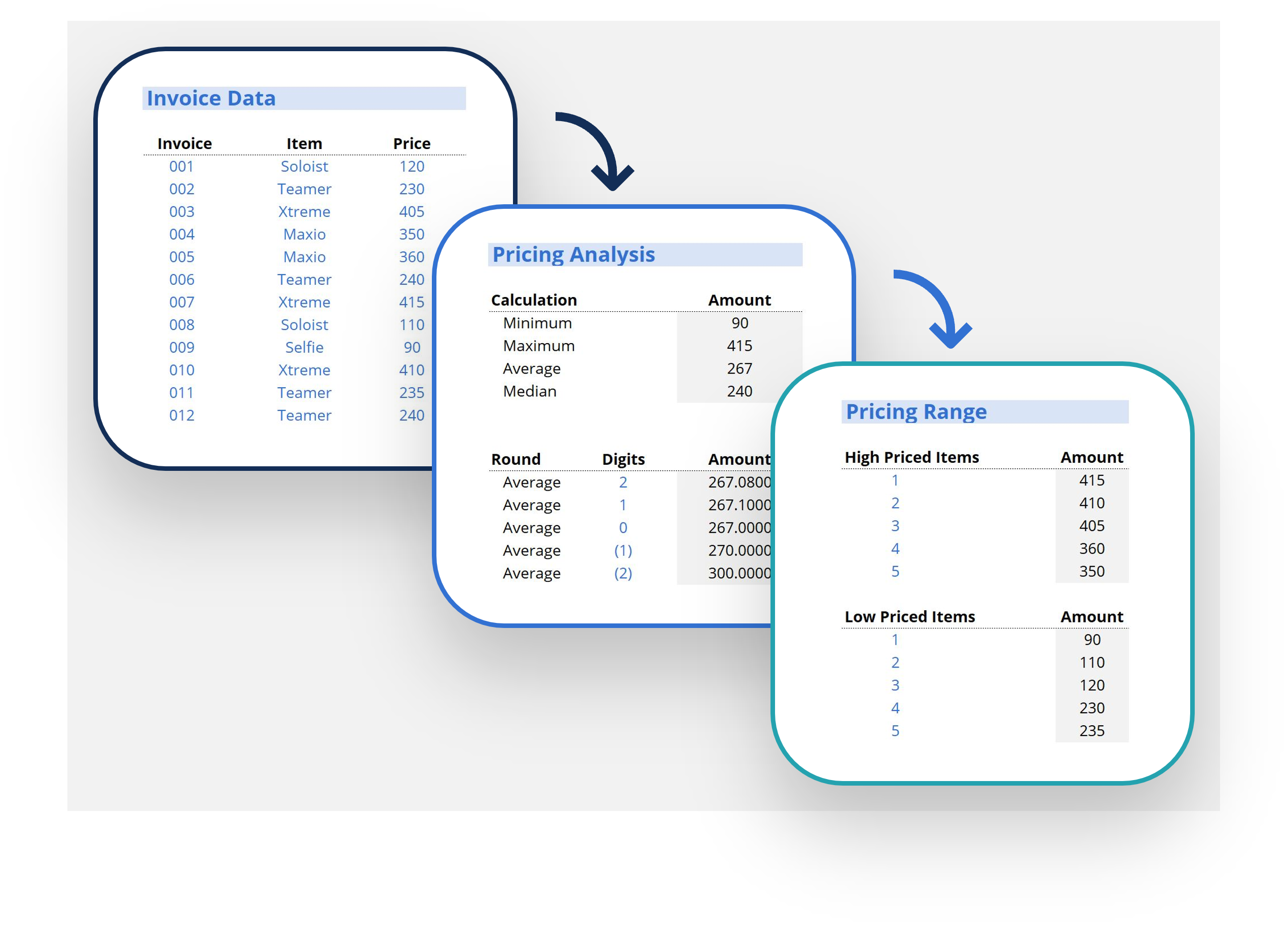

The philosophies of transparency and open access are becoming more widespread, more popular, and—with the ever-increasing expansion of the Internet—more attainable. Governments and institutions around the world are working to make more and more of their accumulated data available online for free. The datasets below are just a small sample of what is available. If you have a particular interest, do not hesitate to search for datasets on that topic. The table below provides a quick visual representation of what each resource offers, while the annotated links below the table provide further information on each website. Links to additional data sets are also provided.

| Biology, Climate, Environment, Geography, Health, Medicine, Methodology, Physics, Population, Sociology |
| Biology, Culture, Economics, Education, Geography, Health, History, Inequality, Medicine, Methodology, Physics, Sports, Sociology |
| Economics, Education, Environment, Health, History, Labour, Law, Media, Politics, Population, Psychology, Sociology, Technology, Travel |
| Computer Science, Crime, Economics, Health, Human Resources, Medicine, Psychology |
| Agriculture, Crime, Development, Economics, Education, Energy, Environment, Food, Health, Labour, Population, Sociology, Technology |
| Agriculture, Culture, Crime, Development, Economics, Education, Energy, Environment, Food, Geography, Government, Health, History, Labour, Law, Military, Population, Sociology, Technology |
| Economics, Population, Sociology |
| Climate, Geography, Weather |
| Geography, GIS, Topography |
| Economics, Population, Poverty, Sociology |
| Biology, Climate, Economics, Health, History Education, Law, Media, Medicine, Politics, Sociology, Transportation |
| Economics, Environment, Health, Physics, Science, Sociology |
| Biology, Physics, Transportation |

Annotated Links and Further Data Sources

The links below follow a general-to-specific trajectory and have been marked with a maple leaf where content is Canada-specific. At the top of the list are datasets that have been created with post-secondary statistics students in mind.

Approximately two case studies per year have been featured at the Statistical Society of Canada Annual Meetings. This website includes all case studies since 1996. Case Studies vary widely in subject matter, from the cod fishery in Newfoundland, to the gender gap in earnings among young people, to the effect of genetic variation on the relationship between diet and cardiovascular disease risk. The data is contextualized, provided for download in multiple formats, and includes questions to consider as well as references for each data set. The case studies for the current year can be found by clicking on the “Meetings” tab in the navigation sidebar, or by searching for “case study” in the search bar.

Journal of Statistics Education

This international journal, published and accessible online for free, includes at least two data sets with each volume. All volumes back to 1993 are archived and available online. Each data set includes the context, methodology, questions asked, analysis, and relevant references. The data are included in the journal’s data archive, which is linked from both the webpage sidebar and the end of each data set.

UK Data Service, Economic and Social Data Service Teaching Datasets

The Economic and Social Data Service (run by the government of the United Kingdom) has an online catalogue of over 5,000 datasets, including over 35 sampler survey datasets tailor-made to be easier for students to use. Study methods and data can be downloaded free of charge. These datasets use UK studies from the University of Essex and the University of Manchester. The datasets are formatted for Nesstar, not SPSS, but can also be downloaded in plain-text format.

The Rice Virtual Lab in Statistics, Case Studies

The Rice Virtual Lab in Statistics is an initiative by the National Science Foundation in the United States created to provide free online statistics help and practice. The online case studies are fantastic: they provide context, datasets, and downloadable raw data where appropriate, and they also let you search by the type of statistical analysis each case study requires, so you can focus on t-tests, histograms, regression, ANOVA, or whatever you need the most practice with. There are a limited number of case studies on this site.

The United Nations (UN) Statistics Division of the Department of Economic and Social Affairs has pooled major UN databases from the various divisions as accumulated over the past sixty or more years in order to allow users to access information from multiple UN sources simultaneously. This database of datasets includes over 60 million data points. The datasets can be searched, filtered, have columns changed, and downloaded for ease of use.

Open Data is an initiative by the Government of Canada to provide free, easily navigable access to data collected by the Canadian Government in areas such as health, environment, agriculture, and natural resources. You can browse the datasets by subject, file format, or department, or use an advanced search to filter using all of the above as well as keywords. The site also includes links to Provincial and Municipal-level open data sites available across Canada (accessible in the “Links” section of the left-hand sidebar).

The University of Toronto Library has prepared this excellent and exhaustive list of sources for Canadian statistics, organized by topic. Some have restricted access; you may or may not be able to access these through your university library, depending on which online databases your institution subscribes to. The restricted links are all clearly labelled in red. This resource also has an international section, accessible through the toolbar at the top left of the page.

CANSIM is Statistics Canada’s key socioeconomic database, providing fast and easy access to a large range of the latest statistics available in Canada. The data is sorted both by category and survey in which the data was collected. The site not only allows you to access tables of data, but lets you customize your own table of data based on what information you would like CANSIM to display. You can add or remove content, change the way in which the information is summarized, and download your personalized data table.

The National Climate Data and Information Archive provides historical climate data for major cities across Canada, both online and available for download, as collected by the Government of Canada Weather Office. The data can be displayed hourly for each day, or daily for each month. Other weather statistics including engineering climate datasets can be found at http://climate.weather.gc.ca/prods_servs/engineering_e.html .

GeoGratis is a portal provided by Natural Resources Canada which provides a single point of access to a broad collection of geospatial data, topographic and geoscience maps, images, and scientific publications that cover all of Canada at no cost and with no restrictions. Most of this data is in GIS format. You can use the Government of Canada’s GeoConnections website’s advanced search function to filter out only information that includes datasets available for download. Not all of the data that comes up on GeoConnections is available online for free, which is why we have linked to GeoGratis in this guide.

This website allows users to download datasets collected by the Canadian Association of Research Libraries (CARL) on collection size, emerging services, and salaries, by year, in Excel format.

Online Sources of International Statistics Guide, University of Maryland

This online resource, provided by the University of Maryland Libraries website, has an impressive list of links to datasets organized by country and region, as well as by category (Economic, Environmental, Political, Social, and Population). Some of the datasets are only available through subscriptions to sites such as ProQuest. Check with your institution’s library to see if you can access these resources.

Organization for Economic Co-Operation and Development (OECD) Better Life Index

The OECD’s mission is to promote policies that will improve the economic and social well-being of people around the world. Governments work together, using the OECD as a forum to share experiences and seek solutions to common problems. In service to this mission, the OECD created the Better Life Index, which uses United Nations statistics as well as national statistics to represent all 34 member countries of the OECD in a relational survey of life satisfaction. The index is interactive, allowing you to set your own levels of importance, and the website organizes the data to represent how each country does according to your rankings. The raw index data is also available for download on the website (see the link on the left-hand sidebar).

Human Development Index

The HDI, run by the United Nations Development Programme, combines indicators of life expectancy, educational attainment, and income into a composite index, providing a single statistic to serve as a frame of reference for both social and economic development. Under the “Getting and Using Data” tab in the left-hand sidebar, the HDI website provides downloads of the raw data sorted in various ways (including an option to build your own data table), as well as the statistical tables underlying the HDI report. In the “Tools and Rankings” section (also in the left-hand sidebar) you can see various visualizations of the data and tools for readjusting the HDI.

The World Bank DataBank

The World Bank is an international financial institution that provides loans to developing countries towards the goal of worldwide reduction of poverty. DataBank is an analysis and visualization tool that allows you to generate charts, tables, and maps based on the data available in several databases. You can also access the raw data by country, topic, or by source on their Data page.

Commission for Environmental Cooperation (CEC): North American Environmental Atlas

The CEC is a collaborative effort between Canada, the United States, and Mexico to address environmental issues of continental concern. The North American Environmental Atlas (first link above) is an interactive mapping tool to research, analyze, and manage environmental issues across the continent. You can also download the individual map files and data sets that comprise the interactive atlas on the CEC website. Most of the map layers are available in several mapping files, but also provide links to the source datasets that they use, which are largely available for download.

Population Reference Bureau DataFinder

The Population Reference Bureau informs people about population, health, and the environment, and empowers them to use that information to advance the well-being of current and future generations. It is based in the United States but has international data. The DataFinder website combines US Census Bureau data with international data from national surveys. It allows users to search and create custom tables comparing countries and variables of their choice.

Mathematics-in-Industry Case Studies Journal

This international online journal (run by the Fields Institute for Research in Mathematical Sciences, Toronto) is dedicated to stimulating innovative mathematics through the modelling and analysis of problems across the physical, biological, and social sciences. The journal focuses on the process of modelling industry-related issues, so it does not explicitly provide case study data sets for students to explore on their own. It does, however, provide examples of problems worked on by mathematicians in industry, and can give you an understanding of the myriad ways in which statistics and modelling are applied across industries.

UCLA Department of Statistics Case Studies

The University of California Los Angeles offers HTML-based case studies for student perusal. Many of these include small datasets, a problem, and a worked solution. They are short and easy to use, but not formatted to allow students to try their hand before seeing the answer. This website has not been updated since 2001.

National Center for Case Study Teaching in Science

This website, maintained by the National Center for Case Study Teaching in Science out of the University at Buffalo, is a collection of over 450 peer-reviewed cases at the high school, undergraduate, and graduate school levels. The cases can be filtered by subject, and several are listed under “statistics.” In order to access the answer keys, you must be an instructor affiliated with an educational institution. If you would like the answer to a particular case study, you can ask your professor to register for access to the answer key, provided they will not be marking your case study themselves.

The DHS Program

The Demographic and Health Surveys Program collects and provides ready-to-use data for over 90 countries from over 300 surveys. The website is very comprehensive and contains detailed information on the survey data available for each participating country, a guide to the DHS statistics and recode manual, and tips on working with the different data sets. Registration is required to access the data, but it is free.

Top 25 Data Science Case Studies [2024]

In an era where data is the new gold, harnessing its power through data science has led to groundbreaking advancements across industries. From personalized marketing to predictive maintenance, the applications of data science are not only diverse but transformative. This compilation of the top 25 data science case studies showcases the profound impact of intelligent data utilization in solving real-world problems. These examples span various sectors, including healthcare, finance, transportation, and manufacturing, illustrating how data-driven decisions shape the future of business operations, enhance efficiency, and optimize user experiences. As we delve into these case studies, we witness the incredible potential of data science to innovate and drive success in today’s data-centric world.

Case Study 1 – Personalized Marketing (Amazon)

Challenge: Amazon aimed to enhance user engagement by tailoring product recommendations to individual preferences, requiring the real-time processing of vast data volumes.

Solution: Amazon implemented a sophisticated machine learning algorithm known as collaborative filtering, which analyzes users’ purchase history, cart contents, product ratings, and browsing history, along with the behavior of similar users. This approach enables Amazon to offer highly personalized product suggestions.

Overall Impact:

- Increased Customer Satisfaction: Tailored recommendations improved the shopping experience.

- Higher Sales Conversions: Relevant product suggestions boosted sales.

Key Takeaways:

- Personalized Marketing Significantly Enhances User Engagement: Demonstrating how tailored interactions can deepen user involvement and satisfaction.

- Effective Use of Big Data and Machine Learning Can Transform Customer Experiences: These technologies redefine the consumer landscape by continuously adapting recommendations to changing user preferences and behaviors.

This strategy has proven pivotal in increasing Amazon’s customer loyalty and sales by making the shopping experience more relevant and engaging.
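The collaborative filtering idea described above can be illustrated with a minimal user-based sketch. The users, products, ratings, and the similarity-weighted scoring rule are hypothetical choices for illustration only; they are not a description of Amazon's production system.

```python
from math import sqrt

# Hypothetical user -> {product: rating} data.
ratings = {
    "ana": {"kettle": 5, "toaster": 4, "blender": 1},
    "ben": {"kettle": 4, "toaster": 5, "mixer": 4},
    "chloe": {"blender": 5, "mixer": 2, "juicer": 5},
}


def cosine(a, b):
    """Cosine similarity between two sparse rating vectors."""
    shared = set(a) & set(b)
    if not shared:
        return 0.0
    dot = sum(a[i] * b[i] for i in shared)
    norm_a = sqrt(sum(v * v for v in a.values()))
    norm_b = sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b)


def recommend(user, ratings, top_n=2):
    """Rank products the user has not rated by a similarity-weighted sum of other users' ratings."""
    scores = {}
    for other, other_ratings in ratings.items():
        if other == user:
            continue
        sim = cosine(ratings[user], other_ratings)
        if sim <= 0:
            continue  # ignore users with no overlap in taste
        for item, rating in other_ratings.items():
            if item not in ratings[user]:
                scores[item] = scores.get(item, 0.0) + sim * rating
    return sorted(scores, key=scores.get, reverse=True)[:top_n]


print(recommend("ana", ratings))
```

Here "ana" is most similar to "ben", so the product that "ben" rated highly and "ana" has not yet seen rises to the top of the list.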

Case Study 2 – Real-Time Pricing Strategy (Uber)

Challenge: Uber needed to adjust its pricing dynamically to reflect real-time demand and supply variations across different locations and times, aiming to optimize driver incentives and customer satisfaction without manual intervention.

Solution: Uber introduced a dynamic pricing model called “surge pricing.” This system uses data science to automatically calculate fares in real time based on current demand and supply data. The model incorporates traffic conditions, weather forecasts, and local events to adjust prices appropriately.

Overall Impact:

- Optimized Ride Availability: The model reduced customer wait times by incentivizing more drivers to be available during high-demand periods.

- Increased Driver Earnings: Drivers benefitted from higher earnings during surge periods, aligning their incentives with customer demand.

Key Takeaways:

- Efficient Balance of Supply and Demand: Dynamic pricing matches ride availability with customer needs.

- Importance of Real-Time Data Processing: The real-time processing of data is crucial for responsive and adaptive service delivery.

Uber’s implementation of surge pricing illustrates the power of using real-time data analytics to create a flexible and responsive pricing system that benefits both consumers and service providers, enhancing overall service efficiency and satisfaction.
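As a rough illustration of demand-based pricing, the sketch below derives a fare multiplier from the ratio of ride requests to available drivers. The rule, the cap of 3.0, and the function names are assumptions for illustration; the source does not describe Uber's actual formula, which also draws on signals such as traffic, weather, and local events.

```python
def surge_multiplier(ride_requests, available_drivers, cap=3.0):
    """Return a fare multiplier from a demand/supply ratio (hypothetical rule)."""
    if available_drivers <= 0:
        return cap  # no supply at all: apply the maximum multiplier
    ratio = ride_requests / available_drivers
    # No surge while supply covers demand; otherwise scale with the ratio, up to the cap.
    return round(min(cap, max(1.0, ratio)), 2)


def fare(base_fare, ride_requests, available_drivers):
    """Apply the multiplier to a base fare."""
    return round(base_fare * surge_multiplier(ride_requests, available_drivers), 2)


print(fare(10.0, ride_requests=150, available_drivers=100))
```

Capping the multiplier is one simple way to keep prices within a predictable range during extreme demand spikes.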

Case Study 3 – Fraud Detection in Banking (JPMorgan Chase)

Challenge: JPMorgan Chase faced the critical need to enhance its fraud detection capabilities to safeguard the institution and its customers from financial losses. The primary challenge was detecting fraudulent transactions swiftly and accurately in a vast stream of legitimate banking activities.

Solution: The bank implemented advanced machine learning models that analyze real-time transaction patterns and customer behaviors. These models are continuously trained on vast amounts of historical fraud data, enabling them to identify and flag transactions that significantly deviate from established patterns, which may indicate potential fraud.

Overall Impact:

- Substantial Reduction in Fraudulent Transactions: The advanced detection capabilities led to a marked decrease in fraud occurrences.

- Enhanced Security for Customer Accounts: Customers experienced greater security and trust in their transactions.

Key Takeaways:

- Effectiveness of Machine Learning in Fraud Detection: Machine learning models are highly effective at identifying fraudulent activity within large datasets.

- Importance of Ongoing Training and Updates: Continuous training and updating of models are crucial to adapt to evolving fraudulent techniques and maintain detection efficacy.

JPMorgan Chase’s use of machine learning for fraud detection demonstrates how financial institutions can leverage advanced analytics to enhance security measures, protect financial assets, and build customer trust in their banking services.
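The idea of flagging transactions that deviate significantly from established patterns can be shown with a simple statistical baseline. The z-score rule, the threshold of 3, and the sample amounts are assumptions for illustration; this is a much simpler technique than the machine learning models the case study refers to.

```python
from statistics import mean, stdev


def flag_unusual(history, new_amounts, threshold=3.0):
    """Flag amounts more than `threshold` standard deviations from the customer's historical mean."""
    mu, sigma = mean(history), stdev(history)
    flagged = []
    for amount in new_amounts:
        z = (amount - mu) / sigma if sigma > 0 else 0.0
        if abs(z) > threshold:
            flagged.append((amount, round(z, 1)))
    return flagged


# Hypothetical past card payments for one customer.
history = [42.0, 38.5, 51.0, 45.2, 40.3, 47.8, 44.1]
print(flag_unusual(history, [46.0, 49.9, 900.0]))
```

A production system would combine many more features (merchant, location, time of day) and retrain regularly, as the takeaways above note.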

Case Study 4 – Optimizing Healthcare Outcomes (Mayo Clinic)

Challenge: The Mayo Clinic aimed to enhance patient outcomes by predicting diseases before they reach critical stages. This involved analyzing large volumes of diverse data, including historical patient records and real-time health metrics from various sources like lab results and patient monitors.

Solution: The Mayo Clinic employed predictive analytics to integrate and analyze this data to build models that predict patient risk for diseases such as diabetes and heart disease, enabling earlier and more targeted interventions.

Overall Impact:

- Improved Patient Outcomes: Early identification of at-risk patients allowed for timely medical intervention.

- Reduction in Healthcare Costs: Preventing disease progression reduces the need for more extensive and costly treatments later.

Key Takeaways:

- Early Identification of Health Risks: Predictive models are essential for identifying at-risk patients early, improving the chances of successful interventions.

- Integration of Multiple Data Sources: Combining historical and real-time data provides a comprehensive view that enhances the accuracy of predictions.

Case Study 5 – Streamlining Operations in Manufacturing (General Electric)

Challenge: General Electric needed to optimize its manufacturing processes to reduce costs and downtime by predicting when machines would likely require maintenance to prevent breakdowns.

Solution: GE leveraged data from sensors embedded in machinery to monitor their condition continuously. Data science algorithms analyze this sensor data to predict when a machine is likely to fail, facilitating preemptive maintenance and scheduling.

Overall Impact:

- Reduction in Unplanned Machine Downtime: Predictive maintenance helped avoid unexpected breakdowns.

- Lower Maintenance Costs and Improved Machine Lifespan: Regular maintenance based on predictive data reduced overall costs and extended the life of machinery.

Key Takeaways:

- Predictive Maintenance Enhances Operational Efficiency: Using data-driven predictions for maintenance can significantly reduce downtime and operational costs.

- Value of Sensor Data: Continuous monitoring and data analysis are crucial for forecasting equipment health and preventing failures.
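One simple way to turn sensor readings into a maintenance forecast is to fit a trend line and extrapolate it to a failure threshold. The hourly vibration readings, the threshold, and the linear-trend assumption below are hypothetical and serve only to illustrate the idea; the source does not describe GE's actual algorithms.

```python
def hours_until_threshold(readings, threshold):
    """Fit a least-squares line to hourly readings and estimate the hours left
    until the trend crosses `threshold`. Returns None if the trend is flat or falling."""
    n = len(readings)
    t_mean = (n - 1) / 2
    r_mean = sum(readings) / n
    sxx = sum((t - t_mean) ** 2 for t in range(n))
    sxy = sum((t - t_mean) * (r - r_mean) for t, r in enumerate(readings))
    slope = sxy / sxx
    if slope <= 0:
        return None
    intercept = r_mean - slope * t_mean
    crossing_time = (threshold - intercept) / slope
    # Measure remaining time from the most recent reading (t = n - 1).
    return max(0.0, crossing_time - (n - 1))


# Hypothetical vibration readings (mm/s), one per hour, with an alarm level of 3.0.
vibration = [2.0, 2.1, 2.2, 2.3, 2.4]
print(hours_until_threshold(vibration, threshold=3.0))
```

Maintenance can then be scheduled inside the estimated window rather than after a breakdown.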

Case Study 6 – Enhancing Supply Chain Management (DHL)

Challenge: DHL sought to optimize its global logistics and supply chain operations to decrease expenses and enhance delivery efficiency. This required handling complex data from various sources for better route planning and inventory management.

Solution: DHL implemented advanced analytics to process and analyze data from its extensive logistics network. This included real-time tracking of shipments, analysis of weather conditions, traffic patterns, and inventory levels to optimize route planning and warehouse operations.

Overall Impact:

- Enhanced Efficiency in Logistics Operations: More precise route planning and inventory management improved delivery times and reduced resource wastage.

- Reduced Operational Costs: Streamlined operations led to significant cost savings across the supply chain.

Key Takeaways:

- Critical Role of Comprehensive Data Analysis: Effective supply chain management depends on integrating and analyzing data from multiple sources.

- Benefits of Real-Time Data Integration: Real-time data enhances logistical decision-making, leading to more efficient and cost-effective operations.
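Route planning can be illustrated with a greedy nearest-neighbor heuristic: from the current position, always drive to the closest unvisited stop. The coordinates are hypothetical, and this heuristic is a teaching sketch that does not guarantee the shortest route; it is not presented as DHL's method.

```python
from math import dist


def nearest_neighbor_route(depot, stops):
    """Greedy route: repeatedly visit the closest unvisited stop, then return to the depot."""
    route, current, remaining = [depot], depot, list(stops)
    while remaining:
        nxt = min(remaining, key=lambda p: dist(current, p))
        remaining.remove(nxt)
        route.append(nxt)
        current = nxt
    route.append(depot)
    return route


def route_length(route):
    """Total straight-line distance along consecutive points of the route."""
    return sum(dist(a, b) for a, b in zip(route, route[1:]))


# Hypothetical depot and delivery stops on a flat coordinate grid.
route = nearest_neighbor_route((0, 0), [(5, 0), (1, 0), (3, 0)])
print(route, route_length(route))
```

In practice, straight-line distance would be replaced with travel times that reflect the traffic and weather data mentioned above.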

Case Study 7 – Predictive Maintenance in Aerospace (Airbus)

Challenge: Airbus faced the challenge of predicting potential failures in aircraft components to enhance safety and reduce maintenance costs. The key was to accurately forecast the lifespan of parts under varying conditions and usage patterns, which is critical in the aerospace industry where safety is paramount.

Solution: Airbus tackled this challenge by developing predictive models that utilize data collected from sensors installed on aircraft. These sensors continuously monitor the condition of various components, providing real-time data that the models analyze. The predictive algorithms assess the likelihood of component failure, enabling maintenance teams to schedule repairs or replacements proactively before actual failures occur.

Overall Impact:

- Increased Safety: The ability to predict and prevent potential in-flight failures has significantly improved the safety of Airbus aircraft.

- Reduced Costs: By optimizing maintenance schedules and minimizing unnecessary checks, Airbus has been able to cut down on maintenance expenses and reduce aircraft downtime.

Key Takeaways:

- Enhanced Safety through Predictive Analytics: The use of predictive analytics in monitoring aircraft components plays a crucial role in preventing failures, thereby enhancing the overall safety of aviation operations.

- Valuable Insights from Sensor Data: Real-time data from operational use is critical for developing effective predictive maintenance strategies. This data provides insights for understanding component behavior under various conditions, allowing for more accurate predictions.

This case study demonstrates how Airbus leverages advanced data science techniques in predictive maintenance to ensure higher safety standards and more efficient operations, setting an industry benchmark in the aerospace sector.

Case Study 8 – Enhancing Film Recommendations (Netflix)

Challenge: Netflix aimed to improve customer retention and engagement by enhancing the accuracy of its recommendation system. This task involved processing and analyzing vast amounts of data to understand diverse user preferences and viewing habits.

Solution: Netflix employed collaborative filtering techniques, analyzing user behaviors (like watching, liking, or disliking content) and similarities between content items. This data-driven approach allows Netflix to refine and personalize recommendations continuously based on real-time user interactions.

Overall Impact:

- Increased Viewer Engagement: Personalized recommendations led to longer viewing sessions.

- Higher Customer Satisfaction and Retention Rates: Tailored viewing experiences improved overall customer satisfaction, enhancing loyalty.

Key Takeaways:

- Tailoring User Experiences: Machine learning is pivotal in personalizing media content, significantly impacting viewer engagement and satisfaction.

- Importance of Continuous Updates: Regularly updating recommendation algorithms is essential to maintain relevance and effectiveness in user engagement.
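The "similarities between content items" mentioned in the solution can be sketched with item-based collaborative filtering over like and dislike signals. The titles, users, and feedback values are hypothetical, and the cosine measure is one common choice rather than a confirmed detail of Netflix's system.

```python
from math import sqrt

# Hypothetical feedback: 1 = liked, -1 = disliked; a missing entry means not watched.
feedback = {
    "u1": {"Drama A": 1, "Drama B": 1, "Comedy C": -1},
    "u2": {"Drama A": 1, "Drama B": 1},
    "u3": {"Drama A": -1, "Comedy C": 1, "Comedy D": 1},
    "u4": {"Comedy C": 1, "Comedy D": 1},
}


def item_vectors(feedback):
    """Invert user -> title feedback into title -> {user: value} vectors."""
    vectors = {}
    for user, items in feedback.items():
        for title, value in items.items():
            vectors.setdefault(title, {})[user] = value
    return vectors


def similarity(a, b):
    """Cosine similarity between two titles based on who liked or disliked them."""
    shared = set(a) & set(b)
    dot = sum(a[u] * b[u] for u in shared)
    norm = sqrt(sum(v * v for v in a.values())) * sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def similar_titles(title, feedback):
    """Return the other titles ordered from most to least similar."""
    vectors = item_vectors(feedback)
    others = [t for t in vectors if t != title]
    return sorted(others, key=lambda t: similarity(vectors[title], vectors[t]), reverse=True)


print(similar_titles("Drama A", feedback))
```

Because the vectors are rebuilt from the latest feedback on every call, new likes and dislikes change the ranking immediately, which mirrors the continuous refinement described above.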

Case Study 9 – Traffic Flow Optimization (Google)

Challenge: Google needed to optimize traffic flow within its Google Maps service to reduce congestion and improve routing decisions. This required real-time analysis of extensive traffic data to predict and manage traffic conditions accurately.

Solution: Google Maps integrates data from multiple sources, including satellite imagery, sensor data, and real-time user location data. These data points are used to model traffic patterns and predict future conditions dynamically, which informs updated routing advice.

Overall Impact:

- Reduced Traffic Congestion: More efficient routing reduced overall traffic buildup.

- Enhanced Accuracy of Traffic Predictions and Routing: Improved predictions led to better user navigation experiences.

Key Takeaways:

- Integration of Multiple Data Sources: Combining various data streams enhances the accuracy of traffic management systems.

- Advanced Modeling Techniques: Sophisticated models are crucial for accurately predicting traffic patterns and optimizing routes.
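To show how predicted traffic conditions can change routing advice, the sketch below runs Dijkstra's shortest-path algorithm on a small road graph whose edge weights are travel times in minutes. The graph, the travel times, and the slowdown are hypothetical; the source does not state which routing algorithm Google Maps uses.

```python
import heapq


def fastest_route(graph, start, goal):
    """Dijkstra's algorithm over travel times (minutes). Returns (total_minutes, path)."""
    queue = [(0.0, start, [start])]
    best = {start: 0.0}
    while queue:
        minutes, node, path = heapq.heappop(queue)
        if node == goal:
            return minutes, path
        if minutes > best.get(node, float("inf")):
            continue  # stale queue entry; a faster way to this node was already found
        for neighbor, travel in graph.get(node, {}).items():
            candidate = minutes + travel
            if candidate < best.get(neighbor, float("inf")):
                best[neighbor] = candidate
                heapq.heappush(queue, (candidate, neighbor, path + [neighbor]))
    return float("inf"), []


# Hypothetical road network under free-flowing traffic.
free_flow = {"A": {"B": 5, "C": 10}, "B": {"D": 5}, "C": {"D": 4}, "D": {}}
# The same network with a predicted slowdown on the B -> D segment.
congested = {**free_flow, "B": {"D": 20}}

print(fastest_route(free_flow, "A", "D"))
print(fastest_route(congested, "A", "D"))
```

Updating only the edge weights as new traffic predictions arrive is enough to make the suggested route switch from one path to the other.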

Case Study 10 – Risk Assessment in Insurance (Allstate)

Challenge: Allstate sought to refine its risk assessment processes to offer more accurately priced insurance products, challenging the limitations of traditional actuarial models through more nuanced data interpretations.

Solution: Allstate enhanced its risk assessment framework by integrating machine learning, allowing for granular risk factor analysis. This approach utilizes individual customer data such as driving records, home location specifics, and historical claim data to tailor insurance offerings more accurately.

Overall Impact:

- More Precise Risk Assessment: Improved risk evaluation led to more tailored insurance offerings.

- Increased Market Competitiveness: Enhanced pricing accuracy boosted Allstate’s competitive edge in the insurance market.

Key Takeaways:

- Nuanced Understanding of Risk: Machine learning provides a deeper, more nuanced understanding of risk than traditional models, leading to better risk pricing.

- Personalized Pricing Strategies: Leveraging detailed customer data in pricing strategies enhances customer satisfaction and business performance.
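For context, the classical baseline that machine learning models refine is the pure premium: expected claim cost per policy, computed as claim frequency times average claim severity for each risk segment. The segments and claim figures below are hypothetical, and this sketch shows the traditional calculation rather than Allstate's models.

```python
from collections import defaultdict

# Hypothetical policy-year records: (segment, number_of_claims, total_claim_cost).
policies = [
    ("clean_record", 0, 0.0),
    ("clean_record", 0, 0.0),
    ("clean_record", 1, 2000.0),
    ("clean_record", 0, 0.0),
    ("prior_accident", 1, 3000.0),
    ("prior_accident", 0, 0.0),
    ("prior_accident", 2, 5000.0),
    ("prior_accident", 0, 0.0),
]


def pure_premiums(policies):
    """Expected claim cost per policy-year for each segment: frequency x severity."""
    counts = defaultdict(int)
    claims = defaultdict(int)
    costs = defaultdict(float)
    for segment, n_claims, cost in policies:
        counts[segment] += 1
        claims[segment] += n_claims
        costs[segment] += cost
    result = {}
    for segment in counts:
        frequency = claims[segment] / counts[segment]  # claims per policy-year
        severity = costs[segment] / claims[segment] if claims[segment] else 0.0  # cost per claim
        result[segment] = frequency * severity
    return result


print(pure_premiums(policies))
```

A more granular model would replace the coarse segments with predictions based on individual attributes such as driving records and location.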

Case Study 11 – Energy Consumption Reduction (Google DeepMind)

Challenge: Google DeepMind aimed to significantly reduce the high energy consumption required for cooling Google’s data centers, which are crucial for maintaining server performance but also represent a major operational cost.

Solution: DeepMind implemented advanced AI algorithms to optimize the data center cooling systems. These algorithms predict temperature fluctuations and adjust cooling processes accordingly, saving energy and reducing equipment wear and tear.

Overall Impact:

- Reduction in Energy Consumption: Achieved a 40% reduction in energy used for cooling.

- Decrease in Operational Costs and Environmental Impact: Lower energy usage resulted in cost savings and reduced environmental footprint.

Key Takeaways:

- AI-Driven Optimization: AI can significantly decrease energy usage in large-scale infrastructure.

- Operational Efficiency Gains: Efficiency improvements in operational processes lead to cost savings and environmental benefits.

Case Study 12 – Improving Public Safety (New York City Police Department)

Challenge: The NYPD needed to enhance its crime prevention strategies by better predicting where and when crimes were most likely to occur, requiring sophisticated analysis of historical crime data and environmental factors.

Solution: The NYPD implemented a predictive policing system that utilizes data analytics to identify potential crime hotspots based on trends and patterns in past crime data. Officers are preemptively dispatched to these areas to deter criminal activities.

- Reduction in Crime Rates: Areas targeted by predictive policing saw a notable decrease in crime.

- More Efficient Use of Police Resources: Enhanced allocation of resources where needed.

- Effectiveness of Data-Driven Crime Prevention: Targeting resources based on data analytics can significantly reduce crime.

- Proactive Law Enforcement: Predictive analytics enable a shift from reactive to proactive law enforcement strategies.
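
One simple way to find hotspots in past incident data is to bin incident locations into grid cells and rank the cells by count. The sketch below assumes incidents arrive as (latitude, longitude) pairs; the function name, the cell size, and the sample coordinates are hypothetical, and a production system would also weigh time of day and environmental factors.

```python
import math
from collections import Counter


def top_hotspots(incidents, cell_size=1.0, k=3):
    """Bin (lat, lon) points into square grid cells and return the k busiest cells."""
    counts = Counter(
        (math.floor(lat / cell_size), math.floor(lon / cell_size))
        for lat, lon in incidents
    )
    return counts.most_common(k)


# Made-up coordinates, purely for illustration.
incidents = [(0.2, 0.3), (0.8, 0.1), (0.5, 0.5), (3.1, 4.2), (3.9, 4.9), (7.5, 7.5)]
print(top_hotspots(incidents, k=2))  # [((0, 0), 3), ((3, 4), 2)]
```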

Case Study 13 – Enhancing Agricultural Yields (John Deere)

Challenge: John Deere aimed to help farmers increase agricultural productivity and sustainability by optimizing various farming operations from planting to harvesting.

Solution: Utilizing data from sensors on equipment and satellite imagery, John Deere developed algorithms that provide actionable insights for farmers on optimal planting times, water usage, and harvest schedules.

- Increased Crop Yields: More efficient farming methods led to higher yields.

- Enhanced Sustainability of Farming Practices: Improved resource management contributed to more sustainable agriculture.

- Precision Agriculture: Significantly improves productivity and resource efficiency.

- Data-Driven Decision-Making: Enables better farming decisions through timely and accurate data.

Case Study 14 – Streamlining Drug Discovery (Pfizer)

Challenge: Pfizer needed to accelerate the drug discovery process and improve the success rates of clinical trials.

Solution: Pfizer employed data science to simulate and predict outcomes of drug trials using historical data and predictive models, optimizing trial parameters and improving the selection of drug candidates.

- Accelerated Drug Development: Reduced time to market for new drugs.

- Increased Efficiency and Efficacy in Clinical Trials: More targeted trials led to better outcomes.

- Reduction in Drug Development Time and Costs: Data science streamlines the R&D process.

- Improved Clinical Trial Success Rates: Predictive modeling enhances the accuracy of trial outcomes.

Case Study 15 – Media Buying Optimization (Procter & Gamble)

Challenge: Procter & Gamble aimed to maximize the ROI of their extensive advertising budget by optimizing their media buying strategy across various channels.

Solution: P&G analyzed extensive data on consumer behavior and media consumption to identify the most effective times and channels for advertising, allowing for highly targeted ads that reach the intended audience at optimal times.

- Improved Effectiveness of Advertising Campaigns: More effective ads increased campaign impact.

- Increased Sales and Better Budget Allocation: Enhanced ROI from more strategic media spending.

- Enhanced Media Buying Strategies: Data analytics significantly improves media buying effectiveness.

- Insights into Consumer Behavior: Understanding consumer behavior is crucial for optimizing advertising ROI.

Case Study 16 – Reducing Patient Readmission Rates with Predictive Analytics (Mount Sinai Health System)

Challenge: Mount Sinai Health System sought to reduce patient readmission rates, a significant indicator of healthcare quality and a major cost factor. The challenge involved identifying patients at high risk of being readmitted within 30 days of discharge.

Solution: The health system implemented a predictive analytics platform that analyzes real-time patient data and historical health records. The system detects patterns and risk factors contributing to high readmission rates by utilizing machine learning algorithms. Factors such as past medical history, discharge conditions, and post-discharge care plans were integrated into the predictive model.

- Reduced Readmission Rates: Early identification of at-risk patients allowed for targeted post-discharge interventions, significantly reducing readmission rates.

- Enhanced Patient Outcomes: Patients received better follow-up care tailored to their health risks.

- Predictive Analytics in Healthcare: Effective for managing patient care post-discharge.

- Holistic Patient Data Utilization: Integrating various data points provides a more accurate prediction and better healthcare outcomes.
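
A readmission risk model of the kind described above can be approximated with logistic regression. The sketch below is a minimal pure-Python version trained by gradient descent on invented data. The two features (number of prior admissions, and whether a post-discharge care plan exists) and every value in the toy dataset are assumptions for illustration, not Mount Sinai's actual features or model.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(rows, labels, lr=0.1, epochs=2000):
    """Fit logistic regression weights with batch gradient descent."""
    n_features = len(rows[0])
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(rows, labels):
            err = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) - y
            for j, xi in enumerate(x):
                grad_w[j] += err * xi
            grad_b += err
        weights = [w - lr * g / len(rows) for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / len(rows)
    return weights, bias


def readmission_risk(model, x):
    """Predicted probability of readmission for one patient."""
    weights, bias = model
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


# Toy data: [prior_admissions, has_care_plan]; label 1 = readmitted within 30 days.
rows = [[0, 1], [1, 1], [0, 1], [3, 0], [4, 0], [2, 0], [1, 0], [5, 1]]
labels = [0, 0, 0, 1, 1, 1, 0, 1]
model = train_logistic(rows, labels)

high = readmission_risk(model, [4, 0])  # many prior admissions, no care plan
low = readmission_risk(model, [0, 1])   # no prior admissions, care plan in place
```

Patients whose score exceeds a chosen threshold would then be routed to targeted post-discharge follow-up.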

Case Study 17 – Enhancing E-commerce Customer Experience with AI (Zalando)

Challenge: Zalando aimed to enhance the online shopping experience by improving the accuracy of size recommendations, a common issue that leads to high return rates in online apparel shopping.

Solution: Zalando developed an AI-driven size recommendation engine that analyzes past purchase and return data in combination with customer feedback and preferences. This system utilizes machine learning to predict the best-fit size for customers based on their unique body measurements and purchase history.

- Reduced Return Rates: More accurate size recommendations reduced returns caused by poor fit.

- Improved Customer Satisfaction: Customers experienced a more personalized shopping journey, enhancing overall satisfaction.

- Customization Through AI: Personalizing customer experience can significantly impact satisfaction and business metrics.

- Data-Driven Decision-Making: Utilizing customer data effectively can improve business outcomes by reducing costs and enhancing the user experience.
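
To see how purchase and return data can drive a size suggestion, consider a deliberately simple frequency heuristic: recommend the size a customer has most often kept. This is not Zalando's engine (which the case study describes as machine learning based); the function name and the order format are assumptions for the example.

```python
from collections import Counter


def recommend_size(orders):
    """orders: list of (size, was_returned) tuples for one customer and category.

    Returns the size most often kept, or None if nothing was kept.
    Ties go to the size that was kept first.
    """
    kept = Counter(size for size, was_returned in orders if not was_returned)
    if not kept:
        return None
    return kept.most_common(1)[0][0]


history = [("M", True), ("L", False), ("L", False), ("M", False)]
print(recommend_size(history))  # L
```

A learned model would go further by pooling signals across customers and products, but the input data is the same.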

Case Study 18 – Optimizing Energy Grid Performance with Machine Learning (Enel Group)

Challenge: Enel Group, one of the largest power companies, faced challenges in managing and optimizing the performance of its vast energy grids. The primary goal was to increase the efficiency of energy distribution and reduce operational costs while maintaining reliability in the face of fluctuating supply and demand.

Solution: Enel Group implemented a machine learning-based system that analyzes real-time data from smart meters, weather stations, and IoT devices across the grid. This system is designed to predict peak demand times, potential outages, and equipment failures before they occur. By integrating these predictions with automated grid management tools, Enel can dynamically adjust energy flows, allocate resources more efficiently, and schedule maintenance proactively.

- Enhanced Grid Efficiency: Improved distribution management, reduced energy wastage, and optimized resource allocation.

- Reduced Operational Costs: Predictive maintenance and better grid management decreased the frequency and cost of repairs and outages.

- Predictive Maintenance in Utility Networks: Advanced analytics can preemptively identify issues, saving costs and enhancing service reliability.

- Real-Time Data Integration: Leveraging data from various sources in real-time enables more agile and informed decision-making in energy management.
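
Flagging equipment problems before they cause outages often starts with basic anomaly detection on sensor readings. The sketch below uses a z-score rule: a reading is flagged when it sits far from the mean relative to the standard deviation. The function name, the 2.5 threshold, and the sample readings are assumptions, and a grid operator's real system would be considerably more sophisticated.

```python
from statistics import mean, stdev


def flag_anomalies(readings, threshold=2.5):
    """Return indices of readings more than `threshold` standard deviations from the mean."""
    if len(readings) < 2:
        return []
    mu = mean(readings)
    sigma = stdev(readings)
    if sigma == 0:
        return []  # all readings identical, nothing stands out
    return [i for i, r in enumerate(readings) if abs(r - mu) / sigma > threshold]


# Made-up transformer temperature readings with one spike at the end.
readings = [50, 51, 49, 50, 52, 48, 50, 51, 49, 95]
print(flag_anomalies(readings))  # [9]
```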

Case Study 19 – Personalizing Movie Streaming Experience (WarnerMedia)

Challenge: WarnerMedia sought to enhance viewer engagement and subscription retention rates on its streaming platforms by providing more personalized content recommendations.

Solution: WarnerMedia deployed a sophisticated data science strategy, utilizing deep learning algorithms to analyze viewer behaviors, including viewing history, ratings given to shows and movies, search patterns, and demographic data. This analysis helped create highly personalized viewer profiles, which were then used to tailor content recommendations, homepage layouts, and promotional offers specifically to individual preferences.

- Increased Viewer Engagement: Personalized recommendations resulted in extended viewing times and increased interactions with the platform.

- Higher Subscription Retention: Tailored user experiences improved overall satisfaction, leading to lower churn rates.

- Deep Learning Enhances Personalization: Deep learning algorithms allow a more nuanced understanding of consumer preferences and behavior.

- Data-Driven Customization is Key to User Retention: Providing a customized experience based on data analytics is critical for maintaining and growing a subscriber base in the competitive streaming market.
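
The core idea behind recommendations from viewing history can be shown without deep learning. The sketch below swaps in a much simpler overlap-based technique: titles watched by viewers with similar histories are scored by how much those viewers overlap with the target viewer. The function name and the sample titles are hypothetical.

```python
from collections import Counter


def recommend(target_history, other_histories, k=2):
    """Suggest unseen titles, weighted by overlap with similar viewers."""
    seen = set(target_history)
    scores = Counter()
    for history in other_histories:
        overlap = len(seen & set(history))
        if overlap == 0:
            continue  # this viewer shares nothing with the target
        for title in set(history) - seen:
            scores[title] += overlap
    # Sort by score, then title, so ties are resolved deterministically.
    return sorted(scores, key=lambda t: (-scores[t], t))[:k]


others = [["A", "B", "C"], ["A", "D"], ["E", "F"]]
print(recommend(["A", "B"], others))  # ['C', 'D']
```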

Case Study 20 – Improving Online Retail Sales through Customer Sentiment Analysis (Zappos)

Challenge: Zappos, an online shoe and clothing retailer, aimed to enhance customer satisfaction and boost sales by better understanding customer sentiments and preferences across various platforms.

Solution: Zappos implemented a comprehensive sentiment analysis program that utilized natural language processing (NLP) techniques to gather and analyze customer feedback from social media, product reviews, and customer support interactions. This data was used to identify emerging trends, customer pain points, and overall sentiment towards products and services. The insights derived from this analysis were subsequently used to customize marketing strategies, enhance product offerings, and improve customer service practices.

- Enhanced Product Selection and Marketing: Insight-driven adjustments to inventory and marketing strategies increased relevancy and customer satisfaction.

- Improved Customer Experience: By addressing customer concerns and preferences identified through sentiment analysis, Zappos enhanced its overall customer service, increasing loyalty and repeat business.

- Power of Sentiment Analysis in Retail: Understanding and reacting to customer emotions and opinions can significantly impact sales and customer satisfaction.

- Strategic Use of Customer Feedback: Leveraging customer feedback to drive business decisions helps align product offerings and services with customer expectations, fostering a positive brand image.
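
At its simplest, sentiment analysis of customer feedback can be done with a word lexicon: count positive and negative words and compare. The sketch below is a minimal lexicon-based scorer, far simpler than the NLP techniques the case study refers to. The word lists and function names are invented for the example.

```python
import re

# Tiny hypothetical lexicons; real ones contain thousands of entries.
POSITIVE = {"love", "great", "comfortable", "fast", "perfect"}
NEGATIVE = {"late", "broken", "tight", "poor", "refund"}


def sentiment_score(text):
    """Positive word count minus negative word count."""
    words = re.findall(r"[a-z']+", text.lower())
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)


def sentiment_label(text):
    score = sentiment_score(text)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(sentiment_label("Love these shoes, great fit and fast shipping!"))  # positive
print(sentiment_label("Arrived late and the heel was broken."))           # negative
```

Aggregating these labels by product or by week is one way to surface the pain points and trends mentioned above.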

Case Study 21 – Streamlining Airline Operations with Predictive Analytics (Delta Air Lines)

Challenge: Delta Air Lines faced operational challenges, including flight delays, maintenance scheduling inefficiencies, and customer service issues, which impacted passenger satisfaction and operational costs.

Solution: Delta implemented a predictive analytics system that integrates data from flight operations, weather reports, aircraft sensor data, and historical maintenance records. The system predicts potential delays using machine learning models and suggests optimal maintenance scheduling. Additionally, it forecasts passenger load to optimize staffing and resource allocation at airports.

- Reduced Flight Delays: Predictive insights allowed for better planning and reduced unexpected delays.

- Enhanced Maintenance Efficiency: Maintenance could be scheduled proactively, decreasing the time planes spend out of service.

- Improved Passenger Experience: With better resource management, passenger handling became more efficient, enhancing overall customer satisfaction.

- Operational Efficiency Through Predictive Analytics: Leveraging data for predictive purposes significantly improves operational decision-making.

- Data Integration Across Departments: Coordinating data from different sources provides a holistic view crucial for effective airline management.
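
Proactive maintenance scheduling from sensor data can be illustrated with a trend extrapolation: fit a straight line to a wear metric and estimate when it will cross a service limit. The sketch below uses ordinary least squares in pure Python. The function names, the wear metric, and the limit are assumptions, not details of Delta's system.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit; returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def flights_until_limit(readings, limit):
    """readings[i] is a wear metric recorded after flight i (needs at least 2 readings).

    Returns the estimated number of further flights before the metric
    reaches `limit`, or None if there is no upward trend.
    """
    slope, intercept = fit_line(list(range(len(readings))), readings)
    if slope <= 0:
        return None
    crossing = (limit - intercept) / slope
    return max(0.0, crossing - (len(readings) - 1))


remaining = flights_until_limit([1.0, 2.0, 3.0, 4.0], limit=10.0)  # about 6 flights
```

Scheduling the service slot before the estimated crossing is what keeps the aircraft from being pulled out of rotation unexpectedly.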

Case Study 22 – Enhancing Financial Advisory Services with AI (Morgan Stanley)

Challenge: Morgan Stanley sought to offer clients more personalized and effective financial guidance. The challenge was seamlessly integrating vast financial data with individual client profiles to deliver tailored investment recommendations.

Solution: Morgan Stanley developed an AI-powered platform that utilizes natural language processing and machine learning to analyze financial markets, client portfolios, and historical investment performance. The system identifies patterns and predicts market trends while considering each client’s financial goals, risk tolerance, and investment history. This integrated approach enables financial advisors to offer highly customized advice and proactive investment strategies.

- Improved Client Satisfaction: Clients received more relevant and timely investment recommendations, enhancing their overall satisfaction and trust in the advisory services.

- Increased Efficiency: Advisors were able to manage client portfolios more effectively, using AI-driven insights to make faster and more informed decisions.

- Personalization through AI: Advanced analytics and AI can significantly enhance the personalization of financial services, leading to better client engagement.

- Data-Driven Decision Making: Leveraging diverse data sets provides a comprehensive understanding crucial for tailored financial advising.

Case Study 23 – Optimizing Inventory Management in Retail (Walmart)

Challenge: Walmart sought to improve inventory management across its vast network of stores and warehouses to reduce overstock and stockouts, which affect customer satisfaction and operational efficiency.

Solution: Walmart implemented a robust data analytics system that integrates real-time sales data, supply chain information, and predictive analytics. This system uses machine learning algorithms to forecast demand for thousands of products at a granular level, considering factors such as seasonality, local events, and economic trends. The predictive insights allow Walmart to dynamically adjust inventory levels, optimize restocking schedules, and manage distribution logistics more effectively.
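
Seasonality is the easiest of these demand factors to illustrate. The sketch below forecasts each future period as the average of past sales at the same position in the seasonal cycle (for example, the same day of the week). It is a simple seasonal-average baseline, not Walmart's machine learning system; the function name and the sample figures are assumptions.

```python
def seasonal_forecast(history, season_length=7, horizon=7):
    """Forecast each future step as the mean of past values at the same seasonal position."""
    if len(history) < season_length:
        raise ValueError("need at least one full season of history")
    forecast = []
    for step in range(horizon):
        position = (len(history) + step) % season_length
        same_position = history[position::season_length]
        forecast.append(sum(same_position) / len(same_position))
    return forecast


# Two made-up "seasons" of three periods each.
sales = [10, 20, 30, 12, 22, 32]
print(seasonal_forecast(sales, season_length=3, horizon=3))  # [11.0, 21.0, 31.0]
```

A baseline like this is also a useful yardstick: a more elaborate model that accounts for local events or economic trends should at least beat it.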