
What Is a Case-Control Study? | Definition & Examples

Published on February 4, 2023 by Tegan George. Revised on June 22, 2023.

A case-control study is an observational study design that compares a group of participants possessing a condition of interest to a very similar group lacking that condition. Here, the participants possessing the attribute of study, such as a disease, are called the “cases,” and those without it are called the “controls.”

It’s important to remember that the case group is chosen because its members already possess the attribute of interest. The point of the control group is to facilitate investigation, e.g., studying whether the cases were systematically more exposed to a suspected risk factor than the controls were.


Case-control studies are a type of observational study often used in fields like medical research, environmental health, or epidemiology. Although observational research can be qualitative, case-control studies are usually quantitative, particularly in healthcare settings. Case-control studies can be used for both exploratory and explanatory research, and they are a good choice for studying research topics like disease exposure and health outcomes.

A case-control study may be a good fit for your research if it meets the following criteria.

- Data on exposure (e.g., to a chemical or a pesticide) are difficult to obtain or expensive.

- The disease associated with the exposure you’re studying has a long incubation period or is rare or under-studied (e.g., AIDS in the early 1980s).

- The population you are studying is difficult to contact for follow-up questions (e.g., asylum seekers).

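Case-control studies are sometimes confused with retrospective cohort studies. Retrospective cohort studies use existing secondary research data, such as medical records or databases, to identify a group of people with a common exposure or risk factor and to observe their outcomes over time. Case-control studies work in the opposite direction: they begin by selecting a group of participants possessing a condition of interest and a very similar group lacking that condition, then look back to compare the two groups’ past exposures, using either existing records or newly collected data such as interviews.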


Case-control studies are common in fields like epidemiology, healthcare, and psychology.

Example: Epidemiology case-control study
You are interested in whether contaminated drinking water is associated with gastrointestinal illness. Here, the case group would be individuals diagnosed with a gastrointestinal illness, while the control group would be individuals without one.

You would then collect data on your participants’ exposure to contaminated drinking water, focusing on variables such as the source of the water and the duration of exposure, for both groups. You could then compare the two groups to determine whether there is a relationship between drinking water contamination and the risk of developing a gastrointestinal illness.

Example: Healthcare case-control study
You are interested in the relationship between the dietary intake of a particular vitamin (e.g., vitamin D) and the risk of developing osteoporosis later in life. Here, the case group would be individuals who have been diagnosed with osteoporosis, while the control group would be individuals without osteoporosis.

You would then collect information on dietary intake of vitamin D for both the cases and controls and compare the two groups to determine whether there is a relationship between vitamin D intake and the risk of developing osteoporosis.

Example: Psychology case-control study
You are studying the relationship between early-childhood stress and the likelihood of later developing post-traumatic stress disorder (PTSD). Here, the case group would be individuals who have been diagnosed with PTSD, while the control group would be individuals without PTSD.

Case-control studies can be a solid choice of research method, but, like any design, they come with distinct advantages and disadvantages.

Advantages of case-control studies

- Case-control studies are a great choice when ethical considerations would preclude a traditional experimental design, for example when it would be unethical to expose participants deliberately to a suspected risk factor.

- Case-control studies are time-efficient and fairly inexpensive to conduct because they require fewer subjects than many other research methods.

- Case-control studies can examine multiple exposures for a single outcome. They are especially well suited to studying rare outcomes or outbreaks of a particular disease.

Disadvantages of case-control studies

- Like other observational studies, case-control studies run a high risk of research bias. They are particularly susceptible to observer bias, recall bias, and interviewer bias.

- When the exposure of interest is very rare, a case-control study can be time-consuming and inefficient, because few participants in either group will have been exposed.

- Case-control studies generally have lower internal validity than experimental designs, so their findings are not always credible on their own.

By design, case-control studies focus on a single outcome. This makes them rigid and limits their generalizability: they cannot be used to draw conclusions about other outcomes, such as the risk of recurrence or future exposure threats. As a result, their findings can be less informative than those of other research designs.


A case-control study differs from a cohort study because cohort studies are more longitudinal in nature and do not necessarily require a control group.

While one may be added if the investigator so chooses, members of the cohort are primarily selected because of a shared characteristic among them. In particular, retrospective cohort studies are designed to follow a group of people with a common exposure or risk factor over time and observe their outcomes.

Case-control studies, in contrast, require both a case group and a control group, as suggested by their name, and usually are used to identify risk factors for a disease by comparing cases and controls.

A case-control study differs from a cross-sectional study because case-control studies are retrospective, looking backward in time to identify exposures that may have occurred before the development of the disease.

On the other hand, cross-sectional studies collect data on a population at a single point in time. The goal here is to describe the characteristics of the population, such as their age, gender identity, or health status, and understand the distribution and relationships of these characteristics.

Cases and controls are selected for a case-control study based on their inherent characteristics. Participants already possessing the condition of interest form the “case,” while those without form the “control.”

Keep in mind that, by definition, the case group is chosen because its members already possess the attribute of interest. The control group provides a point of comparison, e.g., for studying whether the cases were systematically more exposed to a suspected risk factor than the controls were.

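The strength of the association between an exposure and a disease in a case-control study is usually measured with the odds ratio (OR). Because a case-control study does not follow a full population, it cannot estimate risk directly and so cannot calculate the relative risk (RR); however, when the disease is rare, the odds ratio closely approximates the relative risk.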

No, case-control studies cannot establish causality as a standalone measure.

As observational studies, they can suggest associations between an exposure and a disease, but they cannot prove without a doubt that the exposure causes the disease. In particular, issues arising from timing, research biases like recall bias, and the selection of variables lead to low internal validity and the inability to determine causality.

Sources in this article

We strongly encourage students to use sources in their work. You can cite our article (APA Style) or take a deep dive into the articles below.

George, T. (2023, June 22). What Is a Case-Control Study? | Definition & Examples. Scribbr. Retrieved August 26, 2024, from https://www.scribbr.com/methodology/case-control-study/

Schlesselman, J. J. (1982). Case-Control Studies: Design, Conduct, Analysis (Monographs in Epidemiology and Biostatistics, 2) (Illustrated). Oxford University Press.


What Is A Case Control Study?

Julia Simkus

Editor at Simply Psychology

BA (Hons) Psychology, Princeton University

Julia Simkus is a graduate of Princeton University with a Bachelor of Arts in Psychology. She began studying for a Master's Degree in Counseling for Mental Health and Wellness in September 2023. Julia's research has been published in peer-reviewed journals.


Saul McLeod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul McLeod, PhD, is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.

Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.


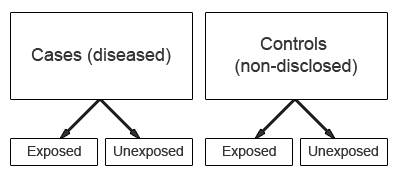

A case-control study is a research method where two groups of people are compared – those with the condition (cases) and those without (controls). By looking at their past, researchers try to identify what factors might have contributed to the condition in the ‘case’ group.

Explanation

A case-control study looks at people who already have a certain condition (cases) and people who don’t (controls). By comparing these two groups, researchers try to figure out what might have caused the condition. They look into the past to find clues, like habits or experiences, that are different between the two groups.

The “cases” are the individuals with the disease or condition under study, and the “controls” are similar individuals without the disease or condition of interest.

The controls should have similar characteristics (e.g., age, sex, demographics, health status) to the cases to mitigate the effects of confounding variables.

Case-control studies can identify associations between an exposure and an outcome and help researchers form hypotheses about a particular population.

Researchers will first identify the two groups and then look back in time to investigate which subjects in each group were exposed to the suspected risk factor.

If the exposure is found more commonly in the cases than the controls, the researcher can hypothesize that the exposure may be linked to the outcome of interest.
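As a minimal sketch of that comparison, the Python snippet below tallies exposure among cases and controls from a few made-up records. The record layout and the `exposure_proportions` helper are hypothetical, and a higher share among cases only suggests a hypothesis; it does not establish a cause.

```python
# Hypothetical records: (is_case, was_exposed) for each participant.
records = [
    (True, True), (True, True), (True, False), (True, True),         # cases
    (False, False), (False, True), (False, False), (False, False),   # controls
]


def exposure_proportions(rows):
    """Return (share of cases exposed, share of controls exposed)."""
    case_exposure = [exposed for is_case, exposed in rows if is_case]
    control_exposure = [exposed for is_case, exposed in rows if not is_case]
    # True counts as 1 and False as 0, so sum() counts exposed participants.
    return (sum(case_exposure) / len(case_exposure),
            sum(control_exposure) / len(control_exposure))


p_cases, p_controls = exposure_proportions(records)
print(f"Exposed: {p_cases:.0%} of cases vs {p_controls:.0%} of controls")
if p_cases > p_controls:
    print("Exposure is more common among cases: a possible link worth investigating.")
```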

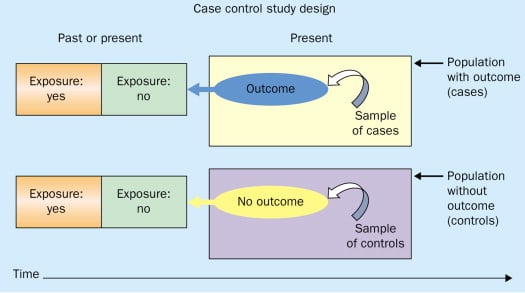

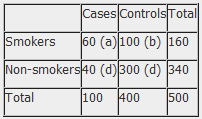

Figure: Schematic diagram of case-control study design. Kenneth F. Schulz and David A. Grimes (2002) Case-control studies: research in reverse. The Lancet Volume 359, Issue 9304, 431-434

Quick, inexpensive, and simple

Because these studies use already existing data and do not require any follow-up with subjects, they tend to be quicker and cheaper than other types of research. Case-control studies also do not require large sample sizes.

Beneficial for studying rare diseases

Researchers in case-control studies start with a population of people known to have the target disease instead of following a population and waiting to see who develops it. This enables researchers to identify current cases and enroll a sufficient number of patients with a particular rare disease.

Useful for preliminary research

Case-control studies are beneficial for an initial investigation of a suspected risk factor for a condition. The information obtained from case-control studies then enables researchers to conduct further data analyses to explore any relationships in more depth.

Limitations

Subject to recall bias

Participants might be unable to remember when they were exposed or might omit other details that are important for the study. In addition, those with the outcome are more likely than those without it to recall and report their exposures clearly.

Difficulty finding a suitable control group

It is important that the case group and the control group have almost the same characteristics, such as age, gender, demographics, and health status.

Forming an accurate control group can be challenging, so sometimes researchers enroll multiple control groups to bolster the strength of the case-control study.

Do not demonstrate causation

Case-control studies may reveal an association between exposures and outcomes, but they cannot demonstrate causation.

A case-control study is an observational study in which researchers analyze two groups of people (cases and controls) to look at factors associated with particular diseases or outcomes.

Below are some examples of case-control studies:

- Investigating the impact of exposure to daylight on the health of office workers (Boubekri et al., 2014).

- Comparing serum vitamin D levels in individuals who experience migraine headaches with their matched controls (Togha et al., 2018).

- Analyzing correlations between parental smoking and childhood asthma (Strachan and Cook, 1998).

- Studying the relationship between elevated concentrations of homocysteine and an increased risk of vascular diseases (Ford et al., 2002).

- Assessing the magnitude of the association between Helicobacter pylori and the incidence of gastric cancer (Helicobacter and Cancer Collaborative Group, 2001).

- Evaluating the association between breast cancer risk and saturated fat intake in postmenopausal women (Howe et al., 1990).

Frequently asked questions

1. What’s the difference between a case-control study and a cross-sectional study?

Case-control studies are different from cross-sectional studies in that case-control studies compare groups retrospectively while cross-sectional studies analyze information about a population at a specific point in time.

In cross-sectional studies , researchers are simply examining a group of participants and depicting what already exists in the population.

2. What’s the difference between a case-control study and a longitudinal study?

Case-control studies compare groups retrospectively, while longitudinal studies can compare groups either retrospectively or prospectively.

In a longitudinal study, researchers monitor a population over an extended period of time; such studies can be used to study developmental shifts and understand how certain things change as we age.

In addition, case-control studies select participants based on whether they already have the outcome of interest, whereas longitudinal studies typically follow a group of subjects and observe outcomes as they occur.

3. What’s the difference between a case-control study and a retrospective cohort study?

Case-control studies are retrospective as researchers begin with an outcome and trace backward to investigate exposure; however, they differ from retrospective cohort studies.

In a retrospective cohort study, researchers use existing records to examine a group from a point before any of the subjects had developed the disease, then examine any factors that differed between the individuals who developed the condition and those who did not.

Thus, retrospective cohort studies start from exposure and trace forward to the outcome, whereas case-control studies start from the outcome and trace back to the exposure.

Boubekri, M., Cheung, I., Reid, K., Wang, C., & Zee, P. (2014). Impact of windows and daylight exposure on overall health and sleep quality of office workers: A case-control pilot study. Journal of Clinical Sleep Medicine, 10(6), 603-611.

Ford, E. S., Smith, S. J., Stroup, D. F., Steinberg, K. K., Mueller, P. W., & Thacker, S. B. (2002). Homocyst(e)ine and cardiovascular disease: A systematic review of the evidence with special emphasis on case-control studies and nested case-control studies. International Journal of Epidemiology, 31(1), 59-70.

Helicobacter and Cancer Collaborative Group. (2001). Gastric cancer and Helicobacter pylori: A combined analysis of 12 case control studies nested within prospective cohorts. Gut, 49(3), 347-353.

Howe, G. R., Hirohata, T., Hislop, T. G., Iscovich, J. M., Yuan, J. M., Katsouyanni, K., … & Shunzhang, Y. (1990). Dietary factors and risk of breast cancer: Combined analysis of 12 case-control studies. JNCI: Journal of the National Cancer Institute, 82(7), 561-569.

Lewallen, S., & Courtright, P. (1998). Epidemiology in practice: Case-control studies. Community Eye Health, 11(28), 57-58.

Strachan, D. P., & Cook, D. G. (1998). Parental smoking and childhood asthma: Longitudinal and case-control studies. Thorax, 53(3), 204-212.

Tenny, S., Kerndt, C. C., & Hoffman, M. R. (2021). Case control studies. In StatPearls. StatPearls Publishing.

Togha, M., Razeghi Jahromi, S., Ghorbani, Z., Martami, F., & Seifishahpar, M. (2018). Serum vitamin D status in a group of migraine patients compared with healthy controls: A case-control study. Headache, 58(10), 1530-1540.

Further Information

- Schulz, K. F., & Grimes, D. A. (2002). Case-control studies: research in reverse. The Lancet, 359(9304), 431-434.

- What is a case-control study?


Statistics By Jim


Case Control Study: Definition, Benefits & Examples

By Jim Frost

What is a Case Control Study?

A case control study is a retrospective, observational study that compares two existing groups. Researchers form these groups based on the existence of a condition in the case group and the lack of that condition in the control group. They evaluate the differences in the histories between these two groups looking for factors that might cause a disease.

By evaluating differences in exposure to risk factors between the case and control groups, researchers can learn which factors are associated with the medical condition.

For example, medical researchers study disease X and use a case-control study design to identify risk factors. They create two groups using available medical records from hospitals. Individuals with disease X are in the case group, while those without it are in the control group. If the case group has more exposure to a risk factor than the control group, that exposure is a potential cause of disease X. However, case-control studies establish only correlation and not causation. Be aware of spurious correlations!

Case-control studies are observational studies because researchers do not control the risk factors—they only observe them. They are retrospective studies because the scientists create the case and control groups after the outcomes for the subjects (e.g., disease vs. no disease) are known.

This post explains the benefits and limitations of case-control studies, controlling confounders, and analyzing and interpreting the results. I close with an example case control study showing how to calculate and interpret the results.

Learn more about Experimental Design: Definition, Types, and Examples .

Related posts : Observational Studies Explained and Control Groups in Experiments

Benefits of a Case Control Study

A case control study is a relatively quick and simple design. They frequently use existing patient data, and the experimenters form the groups after the outcomes are known. Researchers do not conduct an experiment. Instead, they look for differences between the case and control groups that are potential risk factors for the condition. Small groups and individual facilities can conduct case-control studies, unlike more intensive study designs.

Case-control studies are perfect for evaluating outbreaks and rare conditions. Researchers simply need to let a sufficient number of known cases accumulate in an established database. The alternative would be to select a large random sample and hope that the condition afflicts it eventually.

A case control study can provide rapid results during outbreaks where the researchers need quick answers. They are ideal for the preliminary investigation phase, where scientists screen potential risk factors. As such, they can point the way for more thorough, time-consuming, and expensive studies. They are especially beneficial when the current state of science knows little about the connection between risk factors and the medical condition. And when you need to identify potential risk factors quickly!

Cohort studies are another type of observational study that are similar to case-control studies, but there are some important differences. To learn more, read my post about Cohort Studies .

Limitations of a Case Control Study

Because case-control studies are observational, they cannot establish causality and provide lower quality evidence than experimental designs, such as randomized controlled trials. Additionally, as you’ll see in the next section, this type of study is susceptible to confounding variables unless experimenters correctly match traits between the two groups.

A case-control study typically depends on health records. If the necessary data exist in sources available to the researchers, all is good. However, the investigation becomes more complicated if the data are not readily available.

Case-control studies can incorporate biases from the underlying data sources. For example, researchers frequently obtain patient data from hospital records. The population of hospital patients is likely to differ from the general population. Even the control patients are in the hospital for some reason—they likely have serious health problems. Consequently, the subjects in case-control studies are likely to differ from the general population, which reduces the generalizability of the results.

A case-control study cannot estimate incidence or prevalence rates for the disease. The data from these studies do not allow you to calculate the probability of a new person contracting the condition in a given period or how common it is in the population. This limitation occurs because the researchers choose how many cases and controls to include, so the proportion of cases in the study reflects the design rather than the population.
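A quick Python illustration of this point, using invented numbers (100 cases, 60 of them exposed, and controls assumed to be 30% exposed): doubling the number of controls per case changes the share of cases in the sample, yet leaves the odds ratio unchanged. The `odds_ratio` helper is hypothetical, written only for this sketch.

```python
def odds_ratio(a, b, c, d):
    """a = exposed cases, b = exposed controls, c = unexposed cases, d = unexposed controls."""
    return (a * d) / (b * c)


N_CASES = 100
CASES_EXPOSED, CASES_UNEXPOSED = 60, 40    # hypothetical counts

results = []
for controls_per_case in (1, 2):
    n_controls = N_CASES * controls_per_case
    controls_exposed = n_controls * 3 // 10          # assume 30% of controls exposed
    controls_unexposed = n_controls - controls_exposed
    case_share = N_CASES / (N_CASES + n_controls)    # set by the design, not by nature
    or_ = odds_ratio(CASES_EXPOSED, controls_exposed, CASES_UNEXPOSED, controls_unexposed)
    results.append((controls_per_case, case_share, or_))
    print(f"{controls_per_case}:1 design -> cases are {case_share:.0%} of the sample, OR = {or_:.2f}")
```

The share of cases in the sample (50% versus about 33%) is an artifact of the chosen control ratio, which is why it cannot be read as a measure of how common the disease is.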

Case-control studies cannot determine the time between exposure and onset of the medical condition. In fact, case-control studies cannot reliably assess each subject’s exposure to risk factors over time. Longitudinal studies, such as prospective cohort studies, are better suited to those types of assessments.

Related post : Causation versus Correlation in Statistics

Use Matching to Control Confounders

Because case-control studies are observational studies, they are particularly vulnerable to confounding variables and spurious correlations. A confounder correlates with both the risk factor and the outcome variable. Because observational studies don't use random assignment to equalize confounders between the case and control groups, the confounders can end up unbalanced between the groups and distort the results.

Unfortunately, confounders can be the actual cause of the medical condition rather than the risk factor that the researchers identify. If a case-control study does not account for confounding variables, it can bias the results and make them untrustworthy.
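The Python sketch below uses invented counts to show how an unbalanced confounder can create an association out of nothing. Age is the hypothetical confounder: older people are both more often exposed and more often cases, yet within each age stratum the odds ratio is exactly 1. The strata, counts, and `odds_ratio` helper are assumptions for illustration only.

```python
def odds_ratio(a, b, c, d):
    """a = exposed cases, b = exposed controls, c = unexposed cases, d = unexposed controls."""
    return (a * d) / (b * c)


# Hypothetical counts per age stratum, in (a, b, c, d) order.
strata = {
    "older": (60, 30, 20, 10),    # 80 cases, 40 controls, 75% of controls exposed
    "younger": (5, 20, 15, 60),   # 20 cases, 80 controls, 25% of controls exposed
}

# Within each stratum, age is held constant.
stratum_ors = {name: odds_ratio(*cells) for name, cells in strata.items()}

# Pooling the strata ignores age entirely.
crude_cells = [sum(cells[i] for cells in strata.values()) for i in range(4)]
crude_or = odds_ratio(*crude_cells)

print("Stratum-specific ORs:", stratum_ors)
print("Crude OR (age ignored):", round(crude_or, 2))
```

Ignoring age yields a crude odds ratio of 2.6, an association produced entirely by the age imbalance between cases and controls.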

Case-control studies typically use trait matching to control confounders. This technique involves selecting study participants for the case and control groups with similar characteristics, which helps equalize the groups for potential confounders. Equalizing confounders limits their impact on the results.

Ultimately, the goal is to create case and control groups that have equal risks for developing the condition/disease outside the risk factors the researchers are explicitly assessing. Matching facilitates valid comparisons between the two groups because the controls are similar to cases. The researchers use subject-area knowledge to identify characteristics that are critical to match.

Note that you cannot assess matching variables as potential risk factors. You’ve intentionally equalized them across the case and control groups and, consequently, they do not correlate with the condition. Hence, do not use the risk factors you want to evaluate as trait matching variables.
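Trait matching can be sketched in a few lines of Python. This is a simplified, hypothetical example of individual matching on two assumed traits (sex and age band) at two controls per case; real studies choose matching variables from subject-area knowledge, and the risk factor under study must not be one of them. The participant records and the `match_controls` function are illustrative only.

```python
import random
from collections import defaultdict

# Hypothetical participants: (id, sex, age_band, is_case)
people = [
    ("p1", "F", "40-49", True), ("p2", "M", "50-59", True),
    ("p3", "F", "40-49", False), ("p4", "F", "40-49", False),
    ("p5", "F", "40-49", False), ("p6", "M", "50-59", False),
    ("p7", "M", "50-59", False), ("p8", "M", "60-69", False),
]


def match_controls(records, controls_per_case=2, seed=0):
    """Match each case to controls with the same sex and age band.

    Controls are drawn without replacement, so no control is reused.
    Cases with too few eligible controls get as many as are available.
    """
    rng = random.Random(seed)
    pool = defaultdict(list)  # (sex, age_band) -> unused control ids
    for pid, sex, age_band, is_case in records:
        if not is_case:
            pool[(sex, age_band)].append(pid)

    matches = {}
    for pid, sex, age_band, is_case in records:
        if is_case:
            candidates = pool[(sex, age_band)]
            chosen = rng.sample(candidates, min(controls_per_case, len(candidates)))
            for control_id in chosen:
                candidates.remove(control_id)  # remove so it cannot be matched again
            matches[pid] = chosen
    return matches


print(match_controls(people))
```

Because each case's controls share its sex and age band, those traits are balanced across the groups by construction, which is also why they can no longer be evaluated as risk factors.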

Learn more about confounding variables .

Statistical Analysis of a Case Control Study

Researchers frequently include two controls for each case to increase statistical power for a case-control study. Adding even more controls per case provides few statistical benefits, so studies usually do not use more than a 2:1 control to case ratio.

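For statistical results, case-control studies typically produce an odds ratio for each potential risk factor. The equation below shows how to calculate an odds ratio for a case-control study, where a and c are the numbers of exposed and unexposed cases, and b and d are the numbers of exposed and unexposed controls.

$$\text{Odds ratio} = \frac{a/c}{b/d} = \frac{a \times d}{b \times c}$$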

Notice how this ratio takes the exposure odds in the case group and divides it by the exposure odds in the control group. Consequently, it quantifies how much higher the odds of exposure are among cases than the controls.

In general, odds ratios greater than one flag potential risk factors because they indicate that the odds of exposure were higher in the case group than in the control group. Furthermore, higher ratios signify stronger associations between exposure and the medical condition.

An odds ratio of one indicates that exposure was the same in the case and control groups. Nothing to see here!

Ratios less than one might identify protective factors.

Learn more about Understanding Ratios .

Now, let’s bring this to life with an example!

Example Odds Ratio in a Case-Control Study

The Kent County Health Department in Michigan conducted a case-control study in 2005 to investigate an outbreak of vomiting and diarrhea linked to a company lunch. Out of multiple lunch ingredients, researchers found the following exposure rates for lettuce consumption.

| | Cases (symptoms) | Controls (no symptoms) |
|---|---|---|
| Ate lettuce | 53 | 33 |
| Did not eat lettuce | 1 | 7 |

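By plugging these numbers into the odds ratio formula, we can calculate the odds ratio for lettuce in this case-control study:

$$\text{Odds ratio} = \frac{53/1}{33/7} = \frac{53 \times 7}{33 \times 1} = \frac{371}{33} \approx 11.2$$

The study determined that the odds ratio for lettuce is 11.2.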

This ratio indicates that the odds of having eaten lettuce were about 11.2 times higher among people with symptoms than among people without symptoms. These results raise a big red flag for contaminated lettuce being the culprit!
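One common way to gauge the precision of an odds ratio is a 95% confidence interval computed on the log scale (Woolf's method). The Python sketch below is an illustration, not the health department's own analysis: it reads the table as 53 cases and 33 controls who ate lettuce and 1 case and 7 controls who did not, and the function name `odds_ratio_with_ci` is hypothetical.

```python
import math


def odds_ratio_with_ci(a: int, b: int, c: int, d: int, z: float = 1.96):
    """Odds ratio with a Woolf (log-based) confidence interval.

    a = exposed cases, b = exposed controls,
    c = unexposed cases, d = unexposed controls.
    Assumes every cell is non-zero.
    """
    or_ = (a * d) / (b * c)
    se_log = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)  # standard error of ln(OR)
    lower = math.exp(math.log(or_) - z * se_log)
    upper = math.exp(math.log(or_) + z * se_log)
    return or_, lower, upper


# Lettuce counts from the Kent County table
or_, lower, upper = odds_ratio_with_ci(a=53, b=33, c=1, d=7)
print(f"OR = {or_:.1f}, 95% CI {lower:.1f} to {upper:.1f}")
```

With these counts the interval runs from roughly 1.3 to about 95, so it excludes 1 but is very wide. With a cell as small as 1, this normal approximation is rough, and an exact method (such as one based on Fisher's exact test) may be preferable.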

Learn more about Odds Ratios.

Epidemiology in Practice: Case-Control Studies (NIH)

Interpreting Results of Case-Control Studies (CDC)


Case-Control Studies

Introduction

Cohort studies have an intuitive logic to them, but they can be very problematic when one is investigating outcomes that only occur in a small fraction of exposed and unexposed individuals. They can also be problematic when it is expensive or very difficult to obtain exposure information from a cohort. In these situations a case-control design offers an alternative that is much more efficient. The goal of a case-control study is the same as that of cohort studies, i.e., to estimate the magnitude of association between an exposure and an outcome. However, case-control studies employ a different sampling strategy that gives them greater efficiency.

Learning Objectives

After completing this module, the student will be able to:

- Define and explain the distinguishing features of a case-control study

- Describe and identify the types of epidemiologic questions that can be addressed by case-control studies

- Define what is meant by the term "source population"

- Describe the purpose of controls in a case-control study

- Describe differences between hospital-based and population-based case-control studies

- Describe the principles of valid control selection

- Explain the importance of using specific diagnostic criteria and explicit case definitions in case-control studies

- Estimate and interpret the odds ratio from a case-control study

- Identify the potential strengths and limitations of case-control studies

Overview of Case-Control Design

In the module entitled Overview of Analytic Studies it was noted that Rothman describes the case-control strategy as follows:

"Case-control studies are best understood by considering as the starting point a source population , which represents a hypothetical study population in which a cohort study might have been conducted. The source population is the population that gives rise to the cases included in the study. If a cohort study were undertaken, we would define the exposed and unexposed cohorts (or several cohorts) and from these populations obtain denominators for the incidence rates or risks that would be calculated for each cohort. We would then identify the number of cases occurring in each cohort and calculate the risk or incidence rate for each. In a case-control study the same cases are identified and classified as to whether they belong to the exposed or unexposed cohort. Instead of obtaining the denominators for the rates or risks, however, a control group is sampled from the entire source population that gives rise to the cases. Individuals in the control group are then classified into exposed and unexposed categories. The purpose of the control group is to determine the relative size of the exposed and unexposed components of the source population. Because the control group is used to estimate the distribution of exposure in the source population, the cardinal requirement of control selection is that the controls be sampled independently of exposure status."

To illustrate this, consider the following hypothetical scenario in which the source population is the state of Massachusetts. Diseased individuals are red, and non-diseased individuals are blue. Exposed individuals are indicated by a whitish midsection. Note the following aspects of the depicted scenario:

- The disease is rare.

- There is a fairly large number of exposed individuals in the state, but most of these are not diseased.

If we somehow had exposure and outcome information on all of the subjects in the source population and looked at the association using a cohort design, we might find the data summarized in the contingency table below.

| | Diseased | Non-diseased | Total |
|---|---|---|---|
| Exposed | 700 | 999,300 | 1,000,000 |
| Non-exposed | 600 | 4,999,400 | 5,000,000 |

In this hypothetical example, we have data on all 6,000,000 people in the source population, and we could compute the probability of disease (i.e., the risk or incidence) in both the exposed group and the non-exposed group, because we have the denominators for both the exposed and non-exposed groups.

The table above summarizes all of the necessary information regarding exposure and outcome status for the population and enables us to compute a risk ratio as a measure of the strength of the association. Intuitively, we compute the probability of disease (the risk) in each exposure group and then compute the risk ratio as follows:

$$\text{Risk ratio} = \frac{700/1{,}000{,}000}{600/5{,}000{,}000} = \frac{0.00070}{0.00012} \approx 5.83$$

The problem, of course, is that we usually don't have the resources to get the data on all subjects in the population. If we took a random sample of even 5-10% of the population, we would have few diseased people in our sample, certainly not enough to produce a reasonably precise measure of association. Moreover, we would expend an inordinate amount of effort and money collecting exposure and outcome data on a large number of people who would not develop the outcome.

We need a method that allows us to retain all the people in the numerator of disease frequency (diseased people or "cases") but allows us to collect information from only a small proportion of the people that make up the denominator (population, or "controls"), most of whom do not have the disease of interest. The case-control design allows us to accomplish this. We identify and collect exposure information on all the cases, but identify and collect exposure information on only a sample of the population. Once we have the exposure information, we can assign subjects to the numerator and denominator of the exposed and unexposed groups. This is what Rothman means when he says,

"The purpose of the control group is to determine the relative size of the exposed and unexposed components of the source population."

In the above example, we would have identified all 1,300 cases, determined their exposure status, and ended up categorizing 700 as exposed and 600 as unexposed. We might have randomly sampled 6,000 members of the population (instead of 6 million) in order to determine the exposure distribution in the total population. If our sampling method was random, we would expect about 1,000 to be exposed and 5,000 unexposed (the same ratio as in the overall population). We then calculate a measure similar to the risk ratio above, but substituting a sample of the population ("controls") for the whole population in the denominator:

(700/1,000) / (600/5,000) = 0.70/0.12 = 5.83
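To see that a random sample of controls recovers the exposure distribution of the source population, the sketch below draws 6,000 people at random from the hypothetical population of 6 million (labelling the first 1,000,000 as exposed) and computes the case-control measure. The function names are illustrative assumptions, and the seed is arbitrary; a different seed gives a slightly different estimate because of sampling variation.

```python
import random

# Hypothetical Massachusetts source population (from the cohort table)
EXPOSED_POP = 1_000_000
UNEXPOSED_POP = 5_000_000
EXPOSED_CASES = 700
UNEXPOSED_CASES = 600

def sample_controls(n_controls: int, seed: int = 0) -> tuple:
    """Draw controls at random from the whole source population and
    return the numbers of exposed and unexposed controls."""
    rng = random.Random(seed)
    # People are labelled 0..N-1; labels below EXPOSED_POP are the exposed
    ids = rng.sample(range(EXPOSED_POP + UNEXPOSED_POP), n_controls)
    exposed = sum(1 for i in ids if i < EXPOSED_POP)
    return exposed, n_controls - exposed

def case_control_ratio(exp_cases, unexp_cases, exp_controls, unexp_controls):
    """Same form as the risk ratio, with controls in place of population denominators."""
    return (exp_cases / exp_controls) / (unexp_cases / unexp_controls)

exp_ctrl, unexp_ctrl = sample_controls(6_000, seed=1)
estimate = case_control_ratio(EXPOSED_CASES, UNEXPOSED_CASES, exp_ctrl, unexp_ctrl)
print(exp_ctrl, unexp_ctrl, round(estimate, 2))
```

With the expected split of exactly 1,000 and 5,000 controls, `case_control_ratio(700, 600, 1000, 5000)` returns 5.83, the same value as the risk ratio.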

Note that when we take a sample of the population, we no longer have a measure of disease frequency, because the denominator no longer represents the population. Therefore, we can no longer compute the probability or rate of disease incidence in each exposure group. We also can't calculate a risk or rate difference measure for the same reason. However, as we have seen, we can compute the relative probability of disease in the exposed vs. unexposed group. The term generally used for this measure is an odds ratio, described in more detail later in the module.

Consequently, when the outcome is uncommon, as in this case, the risk ratio can be estimated much more efficiently by using a case-control design. One would focus first on finding an adequate number of cases in order to determine the ratio of exposed to unexposed cases. Then, one only needs to take a sample of the population in order to estimate the relative size of the exposed and unexposed components of the source population. Note that if one can identify all of the cases that were reported to a registry or other database within a defined period of time, then it is possible to compute an estimate of the incidence of disease if the size of the population is known from census data. While this is conceptually possible, it is rarely done, and we will not discuss it further in this course.

A Nested Case-Control Study

Suppose a prospective cohort study were conducted among almost 90,000 women for the purpose of studying the determinants of cancer and cardiovascular disease. After enrollment, the women provide baseline information on a host of exposures, as well as baseline blood and urine samples that are frozen for possible future use. The women are then followed, and after about eight years the investigators want to test the hypothesis that past exposure to pesticides such as DDT is a risk factor for breast cancer. By this point, 1,439 women in the cohort have developed breast cancer. Since the investigators froze blood samples at baseline, they have the option of analyzing all of the samples to ascertain exposure to DDT at the beginning of the study, before any cancers occurred. The problem is that there are almost 90,000 women, and it would cost $20 to analyze each blood sample. If the investigators could have analyzed all 90,000 samples, they would have found the results in the table below.

Table of Breast Cancer Occurrence Among Women With or Without DDT Exposure

| | Breast Cancer | No Breast Cancer | Total |
|---|---|---|---|
| DDT exposed | 360 | 13,276 | 13,636 |
| Unexposed | 1,079 | 75,234 | 76,313 |
| Total | 1,439 | 88,510 | 89,949 |

If they had been able to afford analyzing all of the baseline blood specimens in order to categorize the women as having had DDT exposure or not, they would have found a risk ratio = 1.87 (95% confidence interval: 1.66-2.10). The problem is that this would have cost almost $1.8 million, and the investigators did not have the funding to do this.
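The reported risk ratio and confidence interval can be reproduced from the full-cohort table. The sketch below assumes a standard Wald-type interval computed on the log scale (the source does not state which method was used, but this one matches the reported 1.66-2.10); the function name is illustrative.

```python
import math

def risk_ratio_ci(a, n1, c, n0, z=1.96):
    """Risk ratio with a Wald-type 95% CI on the log scale.

    a, n1: cases and total among the exposed
    c, n0: cases and total among the unexposed
    """
    rr = (a / n1) / (c / n0)
    se_log_rr = math.sqrt(1 / a - 1 / n1 + 1 / c - 1 / n0)
    lower = math.exp(math.log(rr) - z * se_log_rr)
    upper = math.exp(math.log(rr) + z * se_log_rr)
    return rr, lower, upper

rr, lo, hi = risk_ratio_ci(360, 13_636, 1_079, 76_313)
print(f"RR = {rr:.2f} (95% CI {lo:.2f}-{hi:.2f})")  # RR = 1.87 (95% CI 1.66-2.10)

# Assaying every stored sample at $20 each
cost_all = 89_949 * 20
print(f"Cost to assay every sample: ${cost_all:,}")  # Cost to assay every sample: $1,798,980
```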

While 1,439 breast cancers is a disturbing number, it is only 1.6% of the entire cohort, so the outcome is relatively rare, and most of the cost of analyzing every blood specimen would be spent on women who did not develop breast cancer. There is, however, a more efficient alternative: a case-control sampling strategy. One could analyze the blood samples from all of the women who developed breast cancer, but only a sample of the whole cohort in order to estimate the exposure distribution in the population that produced the cases.

If one were to analyze the blood samples of 2,878 of the non-diseased women (twice as many as the number of cases), one would obtain results that would look something like those in the next table.

| | Breast Cancer | No Breast Cancer |
|---|---|---|
| DDT exposed | 360 | 432 |
| Unexposed | 1,079 | 2,446 |
| Total | 1,439 | 2,878 |

Odds of exposure: 360/1,079 in the cases versus 432/2,446 in the non-diseased controls, giving an odds ratio of (360/1,079) / (432/2,446) ≈ 1.89.

Total samples analyzed = 1,439 + 2,878 = 4,317

Total cost = 4,317 x $20 = $86,340

With this approach, a similar estimate of the association was obtained at a fraction of the cost, by analyzing blood samples from only a small sample of the cohort, with little loss in precision. In essence, a case-control strategy was used, but it was conducted within the context of a prospective cohort study. This is referred to as a case-control study "nested" within a cohort study.
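A sketch of the nested case-control analysis, assuming the usual Woolf (log-scale) confidence interval for an odds ratio; the function name is illustrative. It uses the 1,439 cases and 2,878 non-diseased controls from the table, so the interval can be compared with the full-cohort risk ratio of 1.87 (95% CI 1.66-2.10): the estimate is close and the interval only slightly wider.

```python
import math

def odds_ratio_ci(a, b, c, d, z=1.96):
    """Odds ratio with a Woolf (log-scale) 95% CI.

    a, b: exposed and unexposed cases
    c, d: exposed and unexposed controls
    """
    or_ = (a / b) / (c / d)  # exposure odds in cases / exposure odds in controls
    se_log_or = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    lower = math.exp(math.log(or_) - z * se_log_or)
    upper = math.exp(math.log(or_) + z * se_log_or)
    return or_, lower, upper

or_, lo, hi = odds_ratio_ci(360, 1_079, 432, 2_446)
print(f"OR = {or_:.2f} (95% CI {lo:.2f}-{hi:.2f})")  # OR = 1.89 (95% CI 1.61-2.21)

samples = 1_439 + 2_878
print(f"Samples: {samples:,}; cost: ${samples * 20:,}")  # Samples: 4,317; cost: $86,340
```

Because the controls here were sampled from the non-diseased women, the odds ratio approximates the risk ratio only because breast cancer is relatively rare in this cohort (1.6%).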

Rothman states that one should look upon all case-control studies as being "nested" within a cohort. In other words, the cohort represents the source population that gave rise to the cases. With a case-control sampling strategy, one simply takes a sample of the population in order to obtain an estimate of the exposure distribution within the population that gave rise to the cases. Obviously, this is a much more efficient design.

It is important to note that, unlike cohort studies, case-control studies do not follow subjects through time. Cases are enrolled at the time they develop disease and controls are enrolled at the same time. The exposure status of each is determined, but they are not followed into the future for further development of disease.

As with cohort studies, case-control studies can be prospective or retrospective. If all cases have already occurred at the start of the study, it is a retrospective case-control study. Alternatively, if none of the cases have occurred yet, new cases are enrolled prospectively as they arise. Epidemiologists generally prefer the prospective approach because it is subject to fewer biases, but it is more expensive and sometimes not possible. When a case-control study is conducted prospectively, or is nested in a prospective cohort study, it is straightforward to select controls from the population at risk. In retrospective case-control studies, however, it can be difficult to sample from the population at risk, so controls are instead selected from those in the population who did not develop the disease. Controls drawn only from the non-diseased estimate the exposure distribution among non-diseased people rather than in the whole source population, but when the disease is rare, the two distributions are nearly identical, so the odds ratio will be very similar to the estimate obtained when controls are sampled from the entire population. This is known as the rare-disease assumption. When case-control studies were first developed, most were conducted retrospectively, and it is sometimes assumed that the rare-disease assumption applies to all case-control studies. However, it applies only to case-control studies in which controls are sampled from the non-diseased rather than from the whole population.
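The rare-disease assumption can be checked with the hypothetical Massachusetts numbers. The sketch below uses expected counts, i.e., it assumes controls reproduce exactly the exposure split of the group they are drawn from, so sampling noise is ignored.

```python
# Hypothetical Massachusetts source population (from the cohort table)
exp_cases, exp_total = 700, 1_000_000
unexp_cases, unexp_total = 600, 5_000_000

risk_ratio = (exp_cases / exp_total) / (unexp_cases / unexp_total)

# Controls sampled from the whole population: their exposure odds equal
# exp_total / unexp_total
or_whole_population = (exp_cases / unexp_cases) / (exp_total / unexp_total)

# Controls sampled only from the non-diseased (survivor sampling)
exp_noncases = exp_total - exp_cases
unexp_noncases = unexp_total - unexp_cases
or_non_diseased = (exp_cases / unexp_cases) / (exp_noncases / unexp_noncases)

print(f"{risk_ratio:.4f} {or_whole_population:.4f} {or_non_diseased:.4f}")
```

Because only about 0.02% of this population is diseased, excluding the cases from the control pool barely changes the exposure odds, and both odds ratios are within 0.1% of the risk ratio.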

The difference between sampling from the whole population and sampling only from the non-diseased is that the whole population contains people both with and without the disease of interest. This means that a sampling strategy that uses the whole population as its source must allow people who develop the disease of interest to be selected as controls. Students often have a difficult time with this concept. It helps to remember that in a cohort study it seems natural for the population denominator to include people who develop the disease. If a case-control study is simply a more efficient way to obtain the information from a cohort study, then it is not so strange that the denominator in a case-control study can also include people who develop the disease. This topic is covered in more detail in EP813 Intermediate Epidemiology.

Retrospective and Prospective Case-Control Studies

Students usually think of case-control studies as being only retrospective, since the investigators enroll subjects who have already developed the outcome of interest. However, case-control studies, like cohort studies, can be either retrospective or prospective. In a prospective case-control study, the investigator still enrolls subjects based on outcome status, but must wait for the cases to occur.

When is a Case-Control Study Desirable?

Given the greater efficiency of case-control studies, they are particularly advantageous in the following situations:

- When the disease or outcome being studied is rare.

- When the disease or outcome has a long induction and latent period (i.e., a long time between exposure and the eventual causal manifestation of disease).

- When exposure data is difficult or expensive to obtain.

- When the study population is dynamic.

- When little is known about the risk factors for the disease, case-control studies provide a way of testing associations with multiple potential risk factors. (This isn't really a unique advantage to case-control studies, however, since cohort studies can also assess multiple exposures.)

Another advantage of their greater efficiency, of course, is that they are less time-consuming and much less costly than prospective cohort studies.

The DES Case-Control Study

A classic example of the efficiency of the case-control approach is the study (Herbst et al., N. Engl. J. Med. 1971;284:878-81) that linked in-utero exposure to diethylstilbestrol (DES) with the subsequent development of vaginal cancer 15-22 years later. In the late 1960s, physicians at MGH identified a very unusual cancer cluster: eight young women between the ages of 15 and 22 were found to have cancer of the vagina, a cancer that is uncommon even in elderly women. The cluster of cases in young women was initially reported as a case series, but there were no strong hypotheses about the cause.

In retrospect, the cause was in-utero exposure to DES. After World War II, DES started being prescribed for women who were having trouble with a pregnancy: if there were signs suggesting the possibility of a miscarriage, DES was frequently prescribed. It has been estimated that between 1945 and 1950, DES was prescribed for about 20% of all pregnancies in the Boston area. Thus, the unborn fetus was exposed to DES in utero, and in a very small percentage of cases this resulted in vaginal cancer when the child was 15-22 years old (a very long latent period). There were several reasons why a case-control study was the only feasible way to identify this association: the disease was extremely rare (even in subjects who had been exposed to DES), there was a very long latent period between exposure and development of disease, and initially the investigators had no idea what was responsible, so there were many possible exposures to consider.

In this situation, a case-control study was the only reasonable approach to identify the causative agent. Given how uncommon the outcome was, even a large prospective study would have been unlikely to have more than one or two cases, even after 15-20 years of follow-up. Similarly, a retrospective cohort study might have been successful in enrolling a large number of subjects, but the outcome of interest was so uncommon that few, if any, subjects would have had it. In contrast, a case-control study was conducted in which eight known cases and 32 age-matched controls provided information on many potential exposures. This strategy ultimately allowed the investigators to identify a highly significant association between the mother's treatment with DES during pregnancy and the eventual development of adenocarcinoma of the vagina in their daughters (in-utero at the time of exposure) 15 to 22 years later.

For more information see the DES Fact Sheet from the National Cancer Institute.

An excellent summary of this landmark study and the long-range effects of DES can be found in a Perspective article in the New England Journal of Medicine. A cohort of both mothers who took DES and their children (daughters and sons) was later formed to look for more common outcomes. Members of the faculty at BUSPH are on the team of investigators that follow this cohort for a variety of outcomes, particularly reproductive consequences and other cancers.

Selecting & Defining Cases and Controls

The "case" definition.

Careful thought should be given to the case definition. A broad or vague definition makes it easier to capture people with the outcome of interest, but it will also capture people who do not have the disease. On the other hand, if an overly restrictive case definition is used, fewer cases will be captured, and the sample size may be limited. Investigators frequently wrestle with this problem during outbreak investigations. Initially, they will often use a somewhat broad definition in order to identify potential cases. As an outbreak investigation progresses, however, there is a tendency to narrow the case definition to make it more precise and specific, for example by requiring laboratory confirmation of the diagnosis. In general, investigators conducting case-control studies should construct a definition that is as clear and specific as possible without being overly restrictive.

Investigators studying chronic diseases generally prefer newly diagnosed cases, because these patients tend to be more motivated to participate and may remember relevant exposures more accurately, and because using them avoids complications related to selecting cases of longer duration (i.e., prevalent cases). However, it is sometimes impossible to achieve an adequate sample size if only recent cases are enrolled.

Sources of Cases

Typical sources for cases include:

- Patient rosters at medical facilities

- Death certificates

- Disease registries (e.g., cancer or birth defect registries; the SEER Program [Surveillance, Epidemiology and End Results] is a federally funded program that identifies newly diagnosed cases of cancer in population-based registries across the US)

- Cross-sectional surveys (e.g., NHANES, the National Health and Nutrition Examination Survey)

Selection of the Controls

As noted above, it is always useful to think of a case-control study as being nested within some sort of a cohort, i.e., a source population that produced the cases that were identified and enrolled. In view of this, there are two key principles that should be followed in selecting controls:

- The comparison group ("controls") should be representative of the source population that produced the cases.

- The "controls" must be sampled in a way that is independent of the exposure, meaning that their selection should not be more (or less) likely if they have the exposure of interest.

If either of these principles is not adhered to, selection bias can result (as discussed in detail in the module on Bias).

Note that in the earlier example of a case-control study conducted in the Massachusetts population, we specified that our sampling method was random, so that exposed and unexposed members of the population had an equal chance of being selected. Therefore, we would expect about 1,000 controls to be exposed and 5,000 unexposed (the same ratio as in the whole population), and we obtained an odds ratio that was the same as the hypothetical risk ratio we would have obtained by collecting exposure information from the whole population of six million:

Odds ratio = (700/1,000) / (600/5,000) = 0.70/0.12 = 5.83

What if we had instead been more likely to sample those who were exposed, so that we found 1,500 exposed and 4,500 unexposed among the 6,000 controls? Then the odds ratio would have been:

Odds ratio = (700/1,500) / (600/4,500) = (700 x 4,500) / (600 x 1,500) = 3.5

This odds ratio is biased because it differs from the true value of 5.83. In this case, the bias arose because we violated the second principle of control selection: exposed people were more likely than unexposed people to be sampled as controls. Depending on which category is over- or under-sampled, this type of bias can result in either an underestimate or an overestimate of the true association.
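The mechanism behind this bias can be sketched by giving exposed and unexposed members of the source population different sampling fractions. The fractions below (0.1% for everyone versus 0.15% for the exposed and 0.09% for the unexposed) are illustrative assumptions chosen to reproduce the 1,000/5,000 and 1,500/4,500 splits; expected counts are used, so sampling noise is ignored.

```python
def odds_ratio(a, b, c, d):
    """a, b: exposed/unexposed cases; c, d: exposed/unexposed controls."""
    return (a * d) / (b * c)

def expected_controls(exposed_pop, unexposed_pop, frac_exposed, frac_unexposed):
    """Expected numbers of exposed and unexposed controls when each group is
    sampled with its own (hypothetical) sampling fraction."""
    return exposed_pop * frac_exposed, unexposed_pop * frac_unexposed

cases = (700, 600)             # exposed, unexposed cases
pop = (1_000_000, 5_000_000)   # exposed, unexposed source population

# Sampling independent of exposure: the same fraction for everyone
c_fair, d_fair = expected_controls(*pop, 0.001, 0.001)    # about 1,000 and 5,000
# Sampling that favors the exposed (0.0015 vs. 0.0009, about 1.67 times as likely)
c_bias, d_bias = expected_controls(*pop, 0.0015, 0.0009)  # about 1,500 and 4,500

or_fair = odds_ratio(*cases, c_fair, d_fair)
or_biased = odds_ratio(*cases, c_bias, d_bias)
print(f"{or_fair:.2f} {or_biased:.2f}")  # 5.83 3.50
```

The biased odds ratio equals the true one multiplied by frac_unexposed / frac_exposed (0.0009 / 0.0015 = 0.6), so over-sampling the exposed pulls the estimate toward the null, and over-sampling the unexposed would push it away.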

A hypothetical case-control study was conducted to determine whether lower socioeconomic status (the exposure) is associated with a higher risk of cervical cancer (the outcome). The "cases" consisted of 250 women with cervical cancer who were referred to Massachusetts General Hospital for treatment from all over the state. The cases were asked a series of questions about socioeconomic status (household income, employment, education, etc.). The investigators identified control subjects by going door-to-door in the community around MGH between 9:00 AM and 5:00 PM. Many residents were not at home, but the investigators persisted and eventually enrolled enough controls. The problem is that the controls were selected by a different mechanism than the cases, AND the selection mechanism may have favored individuals of a different socioeconomic status, since women who were at home during the day may have been more likely to be unemployed. In other words, the controls were more likely to be enrolled (selected) if they had the exposure of interest (lower socioeconomic status).

Sources for "Controls"

Population controls:

A population-based case-control study is one in which the cases come from a precisely defined population, such as a fixed geographic area, and the controls are sampled directly from the same population. In this situation, cases might be identified from a state cancer registry, for example, and the comparison group would logically be selected at random from the same source population. Population controls can be identified from voter registration lists, tax rolls, driver's license lists, and telephone directories, or by "random digit dialing". Population controls may also be more difficult to obtain, however, because of lack of interest in participating, and there may be recall bias, since population controls are generally healthy and may remember past exposures less accurately.

Random Digit Dialing

Random digit dialing has been popular in the past, but it is becoming less useful because of caller ID, answering machines, and greater reliance on cell phones instead of land lines. Ken Rothman points out that random digit dialing gives every phone an equal probability of being dialed, but does not give eligible control subjects an equal probability of being reached, because households vary in the number of residents and in the likelihood that someone will be home. In addition, random digit dialing makes no distinction between residential and business phones.
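Rothman's point can be made concrete with a small sketch of per-person selection probabilities. Everything here is a labelled assumption for illustration: the households are invented, `p_answer` is a hypothetical probability that someone answers, the number of phone lines per household is our own addition, and we assume one eligible resident is chosen at random in each household that is reached.

```python
from dataclasses import dataclass

@dataclass
class Household:
    name: str
    phone_lines: int         # each line is equally likely to be dialed
    eligible_residents: int  # people who could serve as controls
    p_answer: float          # hypothetical chance someone answers when called

def relative_selection_prob(h: Household) -> float:
    """Relative chance that a given eligible resident ends up as a control:
    the household is reached in proportion to lines * p_answer, and then one
    eligible resident is picked at random (an assumption of this sketch)."""
    return h.phone_lines * h.p_answer / h.eligible_residents

households = [
    Household("single adult, one line, often home", 1, 1, 0.8),
    Household("four adults, one line, often home", 1, 4, 0.8),
    Household("single adult, two lines, rarely home", 2, 1, 0.3),
]
for h in households:
    print(f"{h.name}: {relative_selection_prob(h):.2f}")
```

Even though every number is equally likely to be dialed, a person living alone who is often home is four times as likely to be selected as each adult in a four-adult household (0.80 versus 0.20).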

Example of a Population-based Case-Control Study: Rollison et al. reported on a "Population-based Case-Control Study of Diabetes and Breast Cancer Risk in Hispanic and Non-Hispanic White Women Living in US Southwestern States". (Citation: Am J Epidemiol 2008;167:447–456).

"Briefly, a population-based case-control study of breast cancer was conducted in Colorado, New Mexico, Utah, and selected counties of Arizona. For investigation of differences in the breast cancer risk profiles of non-Hispanic Whites and Hispanics, sampling was stratified by race/ethnicity, and only women who self-reported their race as non-Hispanic White, Hispanic, or American Indian were eligible, with the exception of American Indian women living on reservations. Women diagnosed with histologically confirmed breast cancer between October 1999 and May 2004 (International Classification of Diseases for Oncology codes C50.0–C50.6 and C50.8–C50.9) were identified as cases through population-based cancer registries in each state."

"Population-based controls were frequency-matched to cases in 5-year age groups. In New Mexico and Utah, control participants under age 65 years were randomly selected from driver's license lists; in Arizona and Colorado, controls were randomly selected from commercial mailing lists, since driver's license lists were unavailable. In all states, women aged 65 years or older were randomly selected from the lists of the Centers for Medicare and Medicaid Services (Social Security lists). Of all women contacted, 68 percent of cases and 42 percent of controls participated in the study."

"Odds ratios and 95% confidence intervals were calculated using logistic regression, adjusting for age, body mass index at age 15 years, and parity. Having any type of diabetes was not associated with breast cancer overall (odds ratio = 0.94, 95% confidence interval: 0.78, 1.12). Type 2 diabetes was observed among 19% of Hispanics and 9% of non-Hispanic Whites but was not associated with breast cancer in either group."

In this example, the controls were clearly selected from the source population (principle 1), but it is less clear that they were enrolled independently of exposure status (principle 2), both because driver's license lists were used for selection and because the participation rate among controls was low. These factors would bias the results only if they distorted the estimate of the proportion of the source population that had diabetes, for example if women with diabetes were more or less likely than other women to be on the lists or to agree to participate.
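A sketch of why a low participation rate matters only when it is related to exposure. The pool of 1,000 diabetic and 9,000 non-diabetic eligible women and the 30% versus 45% participation split are hypothetical; only the 42% figure echoes the control participation rate quoted above. We also assume case participation is unrelated to diabetes.

```python
def participating_control_odds(exposed, unexposed, p_exposed, p_unexposed):
    """Exposure odds among controls who actually take part, when exposed and
    unexposed women participate with (possibly) different probabilities."""
    return (exposed * p_exposed) / (unexposed * p_unexposed)

# Hypothetical pool of eligible control women: 1,000 with diabetes, 9,000 without
true_odds = 1_000 / 9_000

# Participation unrelated to diabetes (42% in both groups): odds preserved
same_rate = participating_control_odds(1_000, 9_000, 0.42, 0.42)

# Participation related to diabetes (hypothetical 30% vs. 45%): odds understated
diff_rate = participating_control_odds(1_000, 9_000, 0.30, 0.45)

print(f"{same_rate / true_odds:.2f} {diff_rate / true_odds:.2f}")  # 1.00 0.67
```

Because the odds ratio divides by the control exposure odds, understating those odds by a factor of 0.67 would inflate the odds ratio by a factor of 1.5, whereas a uniformly low participation rate leaves it unchanged.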

Hospital or Clinic Controls:

When controls are drawn from patients at the same hospital or clinic as the cases, they should be chosen so that:

- They have diseases that are unrelated to the exposure being studied. For example, in a study of the association between smoking and lung cancer, it would not be appropriate to include patients with cardiovascular disease as controls, since smoking is a risk factor for cardiovascular disease. Including such patients as controls would result in an underestimate of the true association.

- They have diseases with referral patterns similar to those of the cases, in order to minimize selection bias. For example, if the cases are women with cervical cancer who have been referred from all over the state, it would be inappropriate to use controls consisting of women with diabetes who had been referred primarily from local health centers in the immediate vicinity of the hospital. Similarly, it would be inappropriate to use patients from the emergency room, because the selection of a hospital for an emergency is different than for cancer, and this difference might be related to the exposure of interest.

The advantages of using controls who are patients from the same facility are:

- They are easier to identify

- They are more likely to participate than general population controls.

- They minimize selection bias because they generally come from the same source population (provided referral patterns are similar).

- Recall bias would be minimized, because they are sick, but with a different diagnosis.

Example: Several years ago the vascular surgeons at Boston Medical Center wanted to study risk factors for severe atherosclerosis of the lower extremities. The cases were patients who were referred to the hospital for elective surgery to bypass severe atherosclerotic blockages in the arteries to the legs. The controls consisted of patients who were admitted to the same hospital for elective joint replacement of the hip or knee. The patients undergoing joint replacement were similar in age and they also were following the same referral pathways. In other words, they met the "would" criterion: if one of the joint replacement surgery patients had developed severe atherosclerosis in their leg arteries, they would have been referred to the same hospital.

Friend, Neighbor, Spouse, and Relative Controls:

Occasionally investigators will ask cases to nominate controls who are in one of these categories, because they have similar characteristics, such as genotype, socioeconomic status, or environment, i.e., factors that can cause confounding, but are hard to measure and adjust for. By matching cases and controls on these factors, confounding by these factors will be controlled. However, one must be careful that the controls satisfy the two fundamental principles. Often, they do not.

How Many Controls?

Since case-control studies are often used for uncommon outcomes, investigators often have a limited number of cases but a plentiful supply of potential controls. In this situation the statistical power of the study can be increased somewhat by enrolling more controls than cases. However, the additional power that is achieved diminishes as the ratio of controls to cases increases, and ratios greater than 4:1 have little additional impact on power. Consequently, if it is time-consuming or expensive to collect data on controls, the ratio of controls to cases should be no more than 4:1. However, if the data on controls is easily obtained, there is no reason to limit the number of controls.
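The diminishing return from extra controls can be sketched with a widely used approximation: with k controls per case, and assuming each case and each control contributes roughly equal statistical information, the efficiency relative to an unlimited number of controls is about k / (k + 1). This is an approximation, not an exact power calculation.

```python
def relative_efficiency(k: int) -> float:
    """Approximate efficiency of k controls per case relative to an unlimited
    number of controls, using the k / (k + 1) approximation."""
    return k / (k + 1)

for k in range(1, 7):
    # Extra efficiency bought by adding the k-th control per case
    gain = relative_efficiency(k) - relative_efficiency(k - 1)
    print(f"{k}:1  efficiency {relative_efficiency(k):.2f}  gain {gain:.2f}")
```

Going from 1:1 to 2:1 raises the approximate efficiency from 0.50 to 0.67, whereas going from 4:1 (0.80) to 5:1 (0.83) adds only about 0.03, which is why ratios beyond 4:1 are rarely worthwhile when controls are costly.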

Methods of Control Sampling

There are three strategies for selecting controls, which are best explained by considering the nested case-control study described earlier in this module:

- Survivor sampling: This is the most common method. Controls consist of individuals from the source population who do not have the outcome of interest.

- Case-base sampling (also known as "case-cohort" sampling): Controls are selected from the population at risk at the beginning of the follow-up period in the cohort study within which the case-control study was nested.

- Risk Set Sampling: In the nested case-control study a control would be selected from the population at risk at the point in time when a case was diagnosed.
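The three strategies can be sketched against a small simulated cohort. The cohort itself (2,000 people, 30% exposed, hypothetical incidence rates of 2% and 1% per year, 10 years of follow-up) and all function names are illustrative assumptions; the point is which pool each strategy draws controls from.

```python
import random
from dataclasses import dataclass
from typing import List, Optional, Tuple

@dataclass
class Person:
    pid: int
    exposed: bool
    event_time: Optional[float]  # None means no event during follow-up

def make_cohort(n: int, follow_up: float, seed: int = 0) -> List[Person]:
    """Simulate a hypothetical cohort with exponential times to disease."""
    rng = random.Random(seed)
    cohort = []
    for pid in range(n):
        exposed = rng.random() < 0.3
        rate = 0.02 if exposed else 0.01  # hypothetical incidence rates per year
        t = rng.expovariate(rate)
        cohort.append(Person(pid, exposed, t if t <= follow_up else None))
    return cohort

def survivor_sampling(cohort, n_controls, rng):
    """Controls are drawn only from people who never developed the outcome."""
    pool = [p for p in cohort if p.event_time is None]
    return rng.sample(pool, n_controls)

def case_base_sampling(cohort, n_controls, rng):
    """Controls are drawn from everyone at risk at baseline, so people who
    later become cases can also be selected as controls."""
    return rng.sample(cohort, n_controls)

def risk_set_sampling(cohort, cases, controls_per_case, rng):
    """For each case, controls are drawn from people still at risk (not yet
    diseased) at the time that case was diagnosed."""
    matched: List[Tuple[Person, List[Person]]] = []
    for case in cases:
        at_risk = [p for p in cohort
                   if p.pid != case.pid
                   and (p.event_time is None or p.event_time > case.event_time)]
        matched.append((case, rng.sample(at_risk, controls_per_case)))
    return matched

rng = random.Random(1)
cohort = make_cohort(2_000, follow_up=10.0)
cases = [p for p in cohort if p.event_time is not None]

survivor_controls = survivor_sampling(cohort, 2 * len(cases), rng)
case_base_controls = case_base_sampling(cohort, 2 * len(cases), rng)
risk_sets = risk_set_sampling(cohort, cases, 2, rng)
print(len(cases), len(survivor_controls), len(case_base_controls), len(risk_sets))
```

In risk set sampling, a person who develops the disease later in follow-up can still serve as a control for an earlier case, because that person was at risk when the earlier case was diagnosed.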

The Rare Outcome Assumption

It is often said that an odds ratio provides a good estimate of the risk ratio only when the outcome of interest is rare, but this is true only when survivor sampling is used. With case-base sampling, the odds ratio estimates the risk ratio, and with risk set sampling it estimates the incidence rate ratio, regardless of the frequency of the outcome, because the controls provide an accurate estimate of the exposure distribution in the source population (i.e., not just in non-diseased people).
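The difference between survivor and case-base sampling shows up clearly when the outcome is common. The cohort below is hypothetical (40% risk in the exposed, 20% in the unexposed, 1,000 people in each group), and expected counts are used, so each control group is assumed to mirror exactly the exposure split of its source.

```python
# Hypothetical cohort with a COMMON outcome (numbers invented for illustration)
exp_cases, exp_total = 400, 1_000
unexp_cases, unexp_total = 200, 1_000

risk_ratio = (exp_cases / exp_total) / (unexp_cases / unexp_total)

# Case-base sampling: controls mirror the exposure split of the whole cohort
or_case_base = (exp_cases / unexp_cases) / (exp_total / unexp_total)

# Survivor sampling: controls mirror the exposure split of the non-diseased only
or_survivor = (exp_cases / unexp_cases) / (
    (exp_total - exp_cases) / (unexp_total - unexp_cases))

print(f"{risk_ratio:.2f} {or_case_base:.2f} {or_survivor:.2f}")  # 2.00 2.00 2.67
```

With case-base controls the odds ratio equals the risk ratio of 2.0, while survivor controls give 2.67, overstating the association because the disease is not rare.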

More on Selection Bias

Always consider the source population for case-control studies, i.e., the "population" that generated the cases. The cases are always identified and enrolled by some method or a set of procedures or circumstances. For example, cases with a certain disease might be referred to a particular tertiary hospital for specialized treatment. Alternatively, if there is a database or a disease registry for a geographic area, cases might be selected at random from the database. The key to avoiding selection bias is to select the controls by a similar, if not identical, mechanism in order to ensure that the controls provide an accurate representation of the exposure status of the source population.

Example 1: In the first example above, in which cases were randomly selected from a geographically defined database, the source population is also defined geographically, so it would make sense to select population controls by some random method. In contrast, if one enrolled controls from a particular hospital within the geographic area, one would have to at least consider whether the controls were inherently more or less likely to have the exposure of interest. If so, they would not provide an accurate estimate of the exposure distribution of the source population, and selection bias would result.

Example 2: In the second example above, the source population was defined by the patterns of referral to a particular hospital for a particular disease. In order for the controls to be representative of the "population" that produced those cases, the controls should be selected by a similar mechanism, e.g., by contacting the referring health care providers and asking them to provide the names of potential controls. By this mechanism, one can ensure that the controls are representative of the source population, because if they had had the disease of interest they would have been just as likely as the cases to have been included in the case group (thus fulfilling the "would" criterion).

Example 3: A food handler at a delicatessen who is infected with hepatitis A virus is responsible for an outbreak of hepatitis that is largely confined to the surrounding community from which most of the customers come. Many (but not all) of the infected cases are identified by passive and active surveillance. How should controls be selected? In this situation, one might guess that the likelihood of people going to the delicatessen would be heavily influenced by their proximity to it, and this would to a large extent define the source population. In a case-control study undertaken to identify the source, the delicatessen is one of the exposures being tested. Consequently, even if the cases were reported to the state-wide surveillance system, it would not be appropriate to randomly select controls from the state, the county, or even the town where the delicatessen is located. In other words, the "would" criterion doesn't work here, because anyone in the state with clinical hepatitis would end up in the surveillance system, but someone who lived far from the deli would have a much lower likelihood of having the exposure. A better approach would be to select controls who were matched to the cases by neighborhood, age, and gender. These controls would have similar access to the deli if they chose to go there, and they would therefore be more representative of the source population.

Analysis of Case-Control Studies

The computation and interpretation of the odds ratio in a case-control study has already been discussed in the modules on Overview of Analytic Studies and Measures of Association. Additionally, one can compute the confidence interval for the odds ratio, and statistical significance can also be evaluated by using a chi-square test (or a Fisher's Exact Test if the sample size is small) to compute a p-value. These calculations can be done using the Case-Control worksheet in the Excel file called EpiTools.XLS.
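For readers without the EpiTools spreadsheet, the sketch below implements both tests using only the Python standard library: a Pearson chi-square without continuity correction (its 1-degree-of-freedom p-value is erfc(sqrt(chi2 / 2))) and a two-sided Fisher's exact test that sums the probabilities of all tables with the same margins that are no more likely than the observed one. The chi-square is applied to the nested DDT data; the small table for Fisher's test is hypothetical.

```python
import math

def chi_square_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) and its 1-df p-value.
    a, b: exposed/unexposed cases; c, d: exposed/unexposed controls."""
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    p = math.erfc(math.sqrt(chi2 / 2))  # upper tail of chi-square with 1 df
    return chi2, p

def fisher_exact_2x2(a, b, c, d):
    """Two-sided Fisher's exact test for a 2x2 table (same layout as above)."""
    row1, col1, n = a + b, a + c, a + b + c + d
    denom = math.comb(n, row1)

    def prob(x):  # hypergeometric probability that the top-left cell equals x
        return math.comb(col1, x) * math.comb(n - col1, row1 - x) / denom

    p_obs = prob(a)
    lo, hi = max(0, row1 + col1 - n), min(row1, col1)
    # Small relative tolerance guards against floating-point ties
    return sum(p for p in map(prob, range(lo, hi + 1)) if p <= p_obs * (1 + 1e-7))

chi2, p_chi = chi_square_2x2(360, 1_079, 432, 2_446)
print(f"chi-square = {chi2:.1f}, p = {p_chi:.1e}")

# A small hypothetical table, where Fisher's exact test is preferable
p_fisher = fisher_exact_2x2(6, 2, 4, 12)
print(f"Fisher exact p = {p_fisher:.3f}")  # Fisher exact p = 0.032
```

In practice, SciPy's `scipy.stats.chi2_contingency` (which applies Yates' continuity correction to 2x2 tables by default) and `scipy.stats.fisher_exact` provide tested implementations of the same tests.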

Advantages and Disadvantages of Case-Control Studies

Advantages:

- They are efficient for rare diseases or diseases with a long latency period between exposure and disease manifestation.

- They are less costly and less time-consuming; they are advantageous when exposure data is expensive or hard to obtain.

- They are advantageous when studying dynamic populations in which follow-up is difficult.

Disadvantages:

- They are subject to selection bias.

- They are inefficient for rare exposures.

- Information on exposure is subject to observation bias.

- They generally do not allow calculation of incidence (absolute risk).

Introduction to study designs - case-control studies

Introduction.

Learning objectives: You will learn the basics of case-control studies, including their analysis and the interpretation of their results. Case-control studies are among the most frequently used study designs because they are relatively easy to carry out compared with other designs. This section introduces the basic concepts, applications, and strengths of case-control studies. It also covers:

1. Issues in the design of case-control studies
2. Common sources of bias in a case-control study
3. Analysis of case-control studies
4. Strengths and weaknesses of case-control studies
5. Nested case-control studies

Read the resource text below.

Resource text

Case-control studies start with the identification of a group of cases (individuals with a particular health outcome) in a given population and a group of controls (individuals without the health outcome) to be included in the study.

In a case-control study, the prevalence of exposure to one or more potential risk factors is compared between cases and controls. If exposure is more common among cases than among controls, it may be a risk factor for the outcome under investigation. A major characteristic of case-control studies is that data on potential risk factors are collected retrospectively, which may give rise to bias. This is a particular problem for case-control studies and therefore needs to be carefully considered during the design and conduct of the study.
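As a small illustration of this comparison, assuming hypothetical counts of 60 exposed out of 100 cases and 100 exposed out of 400 controls, the sketch below computes the prevalence of exposure in each group. It also shows that the ratio of the odds of exposure in the two groups is the odds ratio (OR) used to analyze case-control studies.

```python
def prevalence(exposed, total):
    """Proportion of a group with the exposure."""
    return exposed / total


def odds(p):
    return p / (1 - p)


# Hypothetical counts: 60 of 100 cases and 100 of 400 controls were exposed.
prev_cases = prevalence(60, 100)
prev_controls = prevalence(100, 400)
print(f"Exposure prevalence: cases {prev_cases:.0%}, controls {prev_controls:.0%}")

# The ratio of the odds of exposure (cases vs controls) is the odds ratio.
exposure_or = odds(prev_cases) / odds(prev_controls)
print(f"Odds ratio of exposure: {exposure_or:.2f}")
```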

1. Issues in the design of case-control studies

Formulation of a clearly defined hypothesis

As with all epidemiological investigations, a case-control study should begin with the formulation of a clearly defined hypothesis.

Case definition

The case definition must be clearly specified at the outset of the investigation to ensure that all cases included in the study are based on the same diagnostic criteria.

Source of cases

The source of cases needs to be clearly defined.

Selection of cases

Case-control studies may use incident or prevalent cases.

Incident cases comprise cases newly diagnosed during a defined time period. Incident cases are generally preferred, as recall of past exposure(s) may be more accurate among newly diagnosed cases. In addition, the temporal sequence of exposure and disease is easier to assess among incident cases.

Prevalent cases comprise individuals who have had the outcome under investigation for some time. The use of prevalent cases may give rise to recall bias, as prevalent cases may be less likely to report past exposure(s) accurately. As a result, results based on prevalent cases may be harder to interpret, because it may be more difficult to ensure that reported events relate to a time before the development of disease rather than being a consequence of the disease process itself. For example, individuals may modify their exposure following the onset of disease. In addition, unless the effect of exposure on duration of illness is known, it will not be possible to determine the extent to which a particular characteristic is related to the prognosis of the disease once it develops rather than to its cause.
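A sketch of how cases might be classified as incident or prevalent, assuming each case has a recorded date of diagnosis and the study defines a fixed recruitment window. The dates, the window and the `classify_case` helper are hypothetical.

```python
from datetime import date

# Hypothetical diagnosis dates and recruitment window (illustrative only).
diagnoses = {
    "A": date(2021, 3, 2),
    "B": date(2019, 11, 20),
    "C": date(2021, 8, 15),
}
window_start, window_end = date(2021, 1, 1), date(2021, 12, 31)


def classify_case(diagnosed, start, end):
    """Label a case by when it was diagnosed relative to the study window."""
    if start <= diagnosed <= end:
        return "incident"   # newly diagnosed during the defined period
    if diagnosed < start:
        return "prevalent"  # already had the outcome before the period began
    return "outside window"  # diagnosed after recruitment closed


status = {pid: classify_case(d, window_start, window_end) for pid, d in diagnoses.items()}
incident_cases = [pid for pid, s in status.items() if s == "incident"]
print(status)
print("Incident cases:", incident_cases)
```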

Source of cases

Cases may be recruited from a number of sources: for example, from a hospital, a clinic or GP registers, or they may be population based. Population-based case-control studies are generally more expensive and difficult to conduct.

Selection of controls

A particular problem inherent in case-control studies is the selection of a comparable control group. Controls are used to estimate the prevalence of exposure in the population that gave rise to the cases. Therefore, the ideal control group would comprise a random sample from the general population that gave rise to the cases. However, this is not always possible in practice. The goal is to select individuals in whom the distribution of exposure status would be the same as that of the cases in the absence of an exposure-disease association. That is, if there is no true association between exposure and disease, the cases and controls should have the same distribution of exposure. The source of controls depends on the source of cases. To minimize bias, controls should be selected as a representative sample of the population that produced the cases. For example, if cases are selected from a defined population such as a GP register, then controls should comprise a sample from the same GP register.
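A minimal sketch of drawing controls from the same defined population as the cases (here a hypothetical GP register of 500 patient IDs), using a simple random sample that excludes the cases themselves. Excluding all cases is a simplifying assumption; the register contents and the `sample_register_controls` helper are illustrative.

```python
import random


def sample_register_controls(register, cases, n_controls, seed=None):
    """Simple random sample of controls from the register that produced the cases.

    Anyone who is a case is excluded (a simplifying assumption).
    """
    case_set = set(cases)
    eligible = [pid for pid in register if pid not in case_set]
    rng = random.Random(seed)  # seeded generator for a reproducible draw
    return rng.sample(eligible, n_controls)


# Hypothetical GP register of 500 patients and three cases identified from it.
register = [f"patient{i:03d}" for i in range(1, 501)]
cases = ["patient007", "patient042", "patient311"]
controls = sample_register_controls(register, cases, n_controls=12, seed=1)
print(controls)
```

Because controls come from the same register as the cases, any factor that determines who is on the register affects both groups alike, which is the comparability the paragraph above asks for.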

In case-control studies where cases are hospital based, it is common to recruit controls from the hospital population. However, controls chosen from a hospital setting should not include individuals with an outcome related to the exposure(s) being studied. For example, in a case-control study of the association between smoking and lung cancer, including controls being treated for a condition related to smoking (e.g., chronic bronchitis) may result in an underestimate of the strength of the association between exposure (smoking) and outcome, because smokers would be over-represented among these controls. Recruiting more than one control per case may improve the statistical power of the study, though recruiting more than four controls per case is generally considered to yield little further gain in efficiency.
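The diminishing return from extra controls is often summarized by the approximation that k controls per case give about k/(k + 1) of the statistical efficiency achievable with an unlimited number of controls (an approximation that holds best when the true association is weak). The sketch below tabulates that approximation; it is a rule of thumb, not a power calculation for any particular study.

```python
def relative_efficiency(k):
    """Approximate efficiency of k controls per case, relative to unlimited controls."""
    return k / (k + 1)


for k in range(1, 9):
    gain = relative_efficiency(k) - relative_efficiency(k - 1)
    print(f"{k} control(s) per case: ~{relative_efficiency(k):.0%} efficiency "
          f"(+{gain:.1%} over {k - 1})")
```

Under this approximation, going from one to four controls per case raises efficiency from 50% to 80%, while each additional control beyond four adds only a few percentage points.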

Measuring exposure status

Exposure status is measured to assess the presence or level of exposure for each individual during the period before the onset of the disease or condition under investigation, when the exposure would have acted as a causal factor. Note that in case-control studies exposure is measured after the disease has developed and is therefore prone to both recall and observer bias. Various methods can be used to ascertain exposure status. These include:

- Standardized questionnaires

- Biological samples

- Interviews with the subject

- Interviews with spouse or other family members

- Medical records

- Employment records

- Pharmacy records

The procedures used for the collection of exposure data should be the same for cases and controls.
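One way to apply the same exposure rule to cases and controls is to define a fixed exposure window relative to each person's index date (the date of diagnosis for a case, and typically the corresponding case's date for its matched controls). The sketch below, using hypothetical pharmacy dispensing dates, counts a person as exposed if any dispensing falls in a look-back window; the 365-day look-back, the optional lag (which ignores exposure just before onset, when early symptoms may have prompted it) and the `exposed_in_window` helper are illustrative assumptions.

```python
from datetime import date, timedelta


def exposed_in_window(dispensing_dates, index_date, lookback_days=365, lag_days=0):
    """True if any dispensing falls in the window [index - lookback, index - lag).

    index_date is the diagnosis date for a case (or the assigned index date for a
    control). lookback_days and lag_days are illustrative assumptions.
    """
    start = index_date - timedelta(days=lookback_days)
    end = index_date - timedelta(days=lag_days)
    return any(start <= d < end for d in dispensing_dates)


# Hypothetical pharmacy records for one person with an index date of 1 March 2021.
records = [date(2021, 2, 20)]
index = date(2021, 3, 1)
print(exposed_in_window(records, index))               # True: within the past year
print(exposed_in_window(records, index, lag_days=30))  # False: falls inside the 30-day lag
```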

2. Common sources of bias in case-control studies

Because of their retrospective nature, case-control studies are particularly susceptible to bias, which may be introduced by poor study design or during the collection of exposure and outcome data. Because the disease and exposure have already occurred at the outset of a case-control study, there may be differential reporting of exposure information between cases and controls based on their disease status. For example, cases and controls may recall past exposure differently (recall bias). Similarly, the recording of exposure information may vary depending on the investigator's knowledge of an individual's disease status (interviewer/observer bias). The design and conduct of the study must therefore be carefully considered, as there are limited options for controlling bias during the analysis.

Selection bias in case-control studies

Selection bias is a particular problem in case-control studies, where it gives rise to non-comparability between cases and controls. Selection bias in case-control studies may occur when 'cases (or controls) are included in (or excluded from) a study because of some characteristic they exhibit which is related to exposure to the risk factor under evaluation' [1]. The aim of a case-control study is to select controls who are representative of the population that produced the cases, because controls provide an estimate of the exposure rate in that population. Selection bias may therefore occur when the individuals selected as controls are unrepresentative of the population that produced the cases.
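The effect of the differential reporting described at the start of this section (recall or interviewer bias) can be worked through with expected proportions. The sketch below assumes exposure is misclassified with a given sensitivity and specificity in each group (all values hypothetical) and shows that when cases recall true exposures more completely than controls, a study with no true association can still produce an odds ratio above 1.

```python
def reported_prevalence(true_prev, sensitivity, specificity=1.0):
    """Expected proportion reporting exposure, given imperfect recall or recording."""
    return true_prev * sensitivity + (1 - true_prev) * (1 - specificity)


def odds(p):
    return p / (1 - p)


def expected_observed_or(prev_cases, prev_controls, se_cases, se_controls,
                         sp_cases=1.0, sp_controls=1.0):
    """Odds ratio expected after exposure is misclassified separately in each group."""
    p1 = reported_prevalence(prev_cases, se_cases, sp_cases)
    p0 = reported_prevalence(prev_controls, se_controls, sp_controls)
    return odds(p1) / odds(p0)


# Hypothetical scenario with NO true association: 30% of cases and controls exposed.
# Cases recall 95% of their true exposures, controls only 70% (recall bias).
differential = expected_observed_or(0.30, 0.30, se_cases=0.95, se_controls=0.70)
# Same recall in both groups: the null result is preserved.
equal = expected_observed_or(0.30, 0.30, se_cases=0.95, se_controls=0.95)
print(f"differential recall: OR = {differential:.2f}; equal recall: OR = {equal:.2f}")
```

With these assumed values the expected OR is about 1.5 even though there is no true association, which illustrates why the text stresses prevention through design rather than correction during analysis.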

The potential for selection bias in case-control studies is a particular problem when cases and controls are recruited exclusively from hospitals or clinics. Hospital patients tend to have different characteristics from the general population; for example, they may have higher levels of alcohol consumption or cigarette smoking. If these characteristics are related to the exposures under investigation, then the estimate of exposure among controls may differ from that in the reference population, which may result in a biased estimate of the association between exposure and disease. Berksonian bias is a bias introduced in hospital-based case-control studies by differing hospital admission rates for people with different combinations of exposure and disease. Because selection bias is likely to be less of a problem in population-based case-control studies, neighbourhood controls may be a preferable choice when cases come from a hospital or clinic setting. Alternatively, the potential for selection bias may be minimized by selecting controls from more than one source, such as by using both hospital and neighbourhood controls. Selection bias may also be introduced in case-control studies when exposed cases are more likely to be selected than unexposed cases.
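Selection bias can be expressed numerically: if each cell of the 2x2 table is sampled with its own probability s, the observed odds ratio equals the true odds ratio multiplied by (s_a × s_d)/(s_b × s_c), where a = exposed cases, b = exposed controls, c = unexposed cases and d = unexposed controls. The sketch below uses a hypothetical source population and hypothetical selection probabilities in which exposed people are over-represented among hospital controls; it illustrates the mechanism rather than modeling Berksonian bias in detail.

```python
def odds_ratio(a, b, c, d):
    """a = exposed cases, b = exposed controls, c = unexposed cases, d = unexposed controls."""
    return (a * d) / (b * c)


def expected_selected(counts, selection_prob):
    """Expected counts after each cell is sampled with its own selection probability."""
    return {cell: n * selection_prob[cell] for cell, n in counts.items()}


# Hypothetical source population (true OR = 200*2000 / (1000*100) = 4.0).
population = {"a": 200, "b": 1000, "c": 100, "d": 2000}
# Hypothetical selection: cases enrolled regardless of exposure, but exposed people are
# twice as likely as unexposed people to end up among the (hospital) controls.
selection = {"a": 0.8, "b": 0.6, "c": 0.8, "d": 0.3}

sample = expected_selected(population, selection)
true_or = odds_ratio(**population)
observed_or = odds_ratio(**sample)
bias_factor = (selection["a"] * selection["d"]) / (selection["b"] * selection["c"])
print(f"true OR = {true_or:.2f}, observed OR = {observed_or:.2f}, bias factor = {bias_factor:.2f}")
```

Note that selection probabilities that differ only by disease status (cases vs controls) cancel out of the bias factor; bias arises when selection depends jointly on exposure and disease.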

3. Analysis of case-control studies

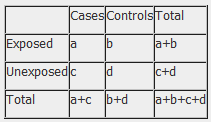

The odds ratio (OR) is used in case-control studies to estimate the strength of the association between exposure and outcome. Note that it is not possible to estimate the incidence of disease from a case-control study unless the study is population based and all cases in a defined population are obtained.

The results of a case-control study can be presented in a 2x2 table as follows:

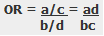

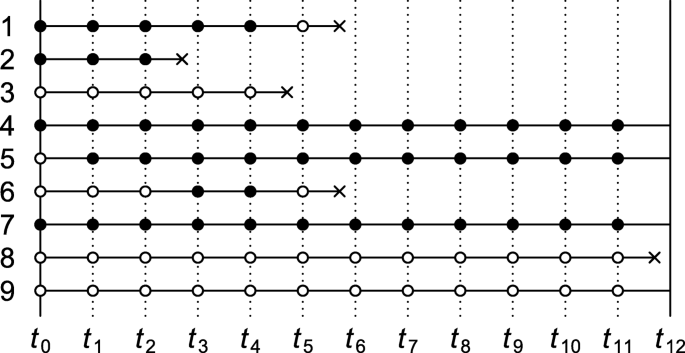

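The odds ratio compares the odds of exposure among cases with the odds of exposure among controls; this equals the odds of disease in the exposed compared with the odds of disease in the unexposed. With a = exposed cases, b = exposed controls, c = unexposed cases and d = unexposed controls, it is calculated as:

$$\mathrm{OR} = \frac{a/c}{b/d} = \frac{ad}{bc}$$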

Example: Calculation of the OR from a hypothetical case-control study of smoking and cancer of the pancreas among 100 cases and 400 controls.

Table 1. Hypothetical case-control study of smoking and cancer of the pancreas.

| | Cases | Controls |
|---|---|---|
| Smokers | 60 | 100 |
| Non-smokers | 40 | 300 |
| Total | 100 | 400 |

$$\mathrm{OR} = \frac{60 \times 300}{100 \times 40} = \frac{18000}{4000} = 4.5$$

The OR calculated from the hypothetical data in Table 1 indicates that the odds of smoking among cases are 4.5 times the odds among controls. Because cancer of the pancreas is rare, the OR approximates the relative risk, so smokers are estimated to be about 4.5 times as likely to develop cancer of the pancreas as non-smokers.

NB: This odds ratio was calculated without adjusting for potential confounders. Further analysis would involve stratifying by levels of potential confounders such as age. The 2x2 table can then be extended to calculate stratum-specific odds ratios for each level of the confounding variable(s) and, where appropriate, an overall summary measure adjusted for the effects of confounding, together with a statistical test of significance. Confidence intervals for the odds ratio would also be presented.
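A sketch of the stratified analysis described in the note above, using the Mantel-Haenszel summary odds ratio (one common choice of adjusted summary measure; the text does not name a specific method). The split of the counts behind the calculation above (60, 100, 40, 300) into two age strata is invented for illustration and is not data from the source.

```python
def odds_ratio(a, b, c, d):
    """a = exposed cases, b = exposed controls, c = unexposed cases, d = unexposed controls."""
    return (a * d) / (b * c)


def mantel_haenszel_or(strata):
    """Mantel-Haenszel summary odds ratio: sum(a*d/n) / sum(b*c/n) over strata."""
    numerator = sum(a * d / (a + b + c + d) for a, b, c, d in strata)
    denominator = sum(b * c / (a + b + c + d) for a, b, c, d in strata)
    return numerator / denominator


# INVENTED split of the counts (60, 100, 40, 300) into two age strata.
strata = [
    (15, 50, 15, 200),  # younger: (a, b, c, d)
    (45, 50, 25, 100),  # older
]
crude = odds_ratio(*[sum(cells) for cells in zip(*strata)])
for label, cells in zip(["younger", "older"], strata):
    print(f"{label}: OR = {odds_ratio(*cells):.2f}")
print(f"crude OR = {crude:.2f}, Mantel-Haenszel OR = {mantel_haenszel_or(strata):.2f}")
```

In this invented example both age-specific ORs (4.0 and 3.6) are below the crude 4.5, and the Mantel-Haenszel estimate (about 3.73) lies between them, suggesting that part of the crude association would be due to confounding by age.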

4. Strengths and weaknesses of case-control studies

Strengths:

- Cost-effective relative to other analytical studies such as cohort studies.

- Case-control studies are retrospective and cases are identified at the beginning of the study, so there is no long follow-up period (in contrast to cohort studies).

- Efficient for the study of diseases with long latency periods.

- Efficient for the study of rare diseases.

- Good for examining multiple exposures.

Weaknesses:

- Particularly prone to bias, especially selection, recall and observer bias.

- Case-control studies are limited to examining one outcome.

- Unable to estimate incidence rates of disease (unless the study is population based).

- Poor choice for the study of rare exposures.

- The temporal sequence between exposure and disease may be difficult to determine.

References

1. Hennekens CH, Buring JE. Epidemiology in Medicine. Lippincott Williams & Wilkins; 1987.

- Open access

- Published: 07 January 2022

Identification of causal effects in case-control studies

Bas B. L. Penning de Vries and Rolf H. H. Groenwold

BMC Medical Research Methodology, volume 22, article number 7 (2022)


Case-control designs are an important yet commonly misunderstood tool in the epidemiologist's arsenal for causal inference. We reconsider classical concepts, assumptions and principles and explore when the results of case-control studies can be endowed with a causal interpretation.

We establish how, and under which conditions, various causal estimands relating to intention-to-treat or per-protocol effects can be identified based on the data that are collected under popular sampling schemes (case-base, survivor, and risk-set sampling, with or without matching). We present a concise summary of our identification results that link the estimands to the (distribution of the) available data and articulate under which conditions these links hold.

The modern epidemiologist's arsenal for causal inference is well suited to making transparent, for case-control designs, which assumptions are necessary or sufficient to endow the respective study results with a causal interpretation and, in turn, to help resolve or prevent misunderstanding. Our approach may inform future research on different estimands, other variations of the case-control design, or settings with additional complexities.
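To make the three sampling schemes named above concrete, the sketch below draws controls from a small hypothetical cohort with recorded outcome times (all values invented, and assuming no loss to follow-up). It illustrates only the standard definitions of case-base, survivor and risk-set sampling, not the identification results of the paper; the helper names are hypothetical.

```python
import random

# Hypothetical cohort followed from time 0 to time 10 with no loss to follow-up:
# person id -> time of the outcome, or None if the outcome never occurred.
cohort = {1: 2.0, 2: None, 3: 7.5, 4: None, 5: None, 6: 4.0, 7: None, 8: None}
cases = [pid for pid, t in cohort.items() if t is not None]


def case_base_controls(cohort, n, rng):
    """Case-base sampling: controls drawn from the whole cohort at baseline,
    regardless of later outcome, so future cases can be sampled."""
    return rng.sample(sorted(cohort), n)


def survivor_controls(cohort, n, rng):
    """Survivor sampling: controls drawn from those still outcome-free
    at the end of follow-up."""
    survivors = [pid for pid, t in cohort.items() if t is None]
    return rng.sample(survivors, n)


def risk_set_controls(cohort, case_id, n, rng):
    """Risk-set sampling: controls drawn from those still at risk at the case's
    event time (excluding the case); people who become cases later are eligible."""
    t_case = cohort[case_id]
    at_risk = [pid for pid, t in cohort.items()
               if pid != case_id and (t is None or t > t_case)]
    return rng.sample(at_risk, n)


rng = random.Random(42)
print("cases:", cases)
print("case-base controls:", case_base_controls(cohort, 3, rng))
print("survivor controls:", survivor_controls(cohort, 3, rng))
for cid in cases:
    print(f"risk-set controls for case {cid} (t = {cohort[cid]}):",
          risk_set_controls(cohort, cid, 2, rng))
```

The only difference between the three functions is which people are eligible to be sampled, which is exactly what determines the causal estimand each design can target.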


Introduction

In causal inference, it is important that the causal question of interest is unambiguously articulated [ 1 ]. The causal question should dictate, and therefore be at the start of, investigation. When the target causal quantity, the estimand, is made explicit, one can start to question how it relates to the available data distribution and, as such, form a basis for estimation with finite samples from this distribution.