An official website of the United States government

Here’s how you know

The .gov means it’s official. Federal government websites often end in .gov or .mil. Before sharing sensitive information, make sure you’re on a federal government site.

The site is secure. The https:// ensures that you are connecting to the official website and that any information you provide is encrypted and transmitted securely.

Take action

- Report an antitrust violation

- File adjudicative documents

- Find banned debt collectors

- View competition guidance

- Competition Matters Blog

Slow the Roll-up: Help Shine a Light on Serial Acquisitions

View all Competition Matters Blog posts

We work to advance government policies that protect consumers and promote competition.

View Policy

Search or browse the Legal Library

Find legal resources and guidance to understand your business responsibilities and comply with the law.

Browse legal resources

- Find policy statements

- Submit a public comment

Vision and Priorities

Memo from Chair Lina M. Khan to commission staff and commissioners regarding the vision and priorities for the FTC.

Technology Blog

Avoiding outages and preventing widespread system failures.

View all Technology Blog posts

Advice and Guidance

Learn more about your rights as a consumer and how to spot and avoid scams. Find the resources you need to understand how consumer protection law impacts your business.

- Report fraud

- Report identity theft

- Register for Do Not Call

- Sign up for consumer alerts

Get Business Blog updates

- Get your free credit report

- Find refund cases

- Order bulk publications

- Consumer Advice

- Shopping and Donating

- Credit, Loans, and Debt

- Jobs and Making Money

- Unwanted Calls, Emails, and Texts

- Identity Theft and Online Security

- Business Guidance

- Advertising and Marketing

- Credit and Finance

- Privacy and Security

- By Industry

- For Small Businesses

- Browse Business Guidance Resources

- Business Blog

Servicemembers: Your tool for financial readiness

Visit militaryconsumer.gov

Get consumer protection basics, plain and simple

Visit consumer.gov

Learn how the FTC protects free enterprise and consumers

Visit Competition Counts

Looking for competition guidance?

- Competition Guidance

News and Events

Latest news, ftc staff issue report on multi-level marketing income disclosures.

View News and Events

Upcoming Event

Informal hearing on the proposed amendments to the energy labeling rule.

View more Events

Sign up for the latest news

Follow us on social media

Noncompete Rule: What You Should Know

Visit the Noncompetes feature page for more information, including factsheets featuring stories on how the rule can benefit Americans.

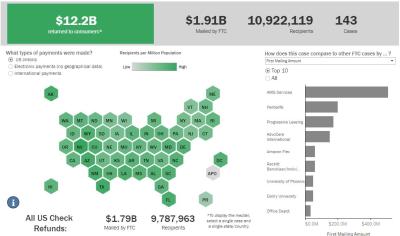

Latest Data Visualization

FTC Refunds to Consumers

Explore refund statistics including where refunds were sent and the dollar amounts refunded with this visualization.

About the FTC

Our mission is protecting the public from deceptive or unfair business practices and from unfair methods of competition through law enforcement, advocacy, research, and education.

Learn more about the FTC

Meet the Chair

Lina M. Khan was sworn in as Chair of the Federal Trade Commission on June 15, 2021.

Chair Lina M. Khan

Looking for legal documents or records? Search the Legal Library instead.

- Cases and Proceedings

- Premerger Notification Program

- Merger Review

- Anticompetitive Practices

- Competition and Consumer Protection Guidance Documents

- Warning Letters

- Consumer Sentinel Network

- Criminal Liaison Unit

- FTC Refund Programs

- Notices of Penalty Offenses

- Advocacy and Research

- Advisory Opinions

- Cooperation Agreements

- Federal Register Notices

- Public Comments

- Policy Statements

- International

- Office of Technology Blog

- Military Consumer

- Consumer.gov

- Bulk Publications

- Data and Visualizations

- Stay Connected

- Commissioners and Staff

- Bureaus and Offices

- Budget and Strategy

- Office of Inspector General

- Careers at the FTC

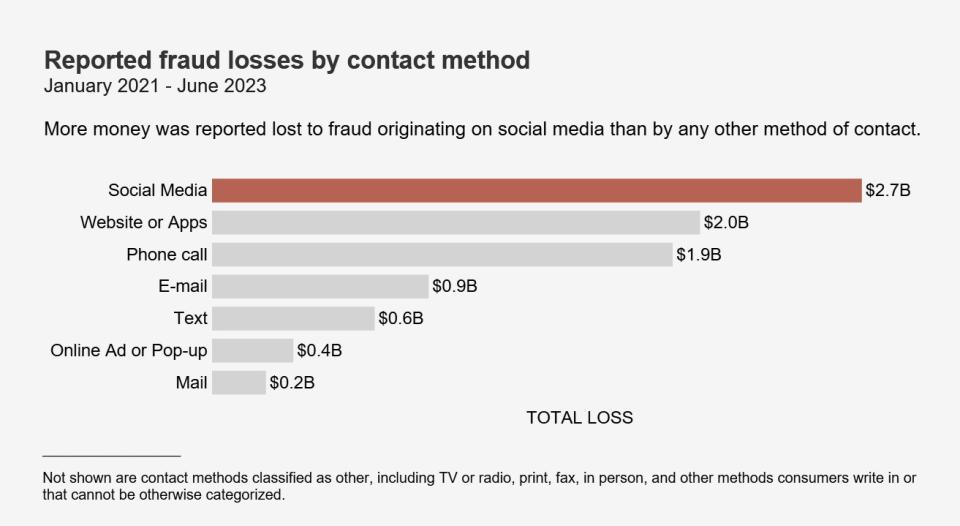

Social media: a golden goose for scammers

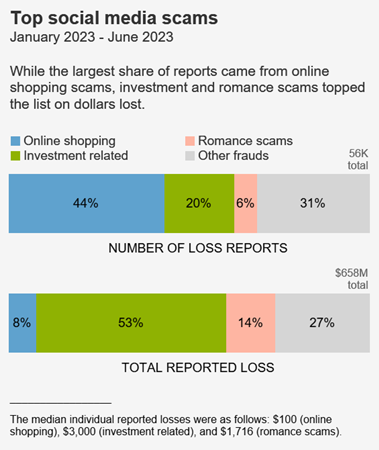

Scammers are hiding in plain sight on social media platforms and reports to the FTC’s Consumer Sentinel Network point to huge profits. One in four people who reported losing money to fraud since 2021 said it started on social media. [1] Reported losses to scams on social media during the same period hit a staggering $2.7 billion, far higher than any other method of contact. And because the vast majority of frauds are not reported, this figure reflects just a small fraction of the public harm. [2]

Social media gives scammers an edge in several ways. They can easily manufacture a fake persona, or hack into your profile, pretend to be you, and con your friends. They can learn to tailor their approach from what you share on social media. And scammers who place ads can even use tools available to advertisers to methodically target you based on personal details, such as your age, interests, or past purchases. All of this costs them next to nothing to reach billions of people from anywhere in the world.

Reports show that scams on social media are a problem for people of all ages, but the numbers are most striking for younger people. In the first six months of 2023, in reports of money lost to fraud by people 20-29, social media was the contact method more than 38% of the time. For people 18-19, that figure was 47%. [3] The numbers decrease with age, consistent with generational differences in social media use. [4]

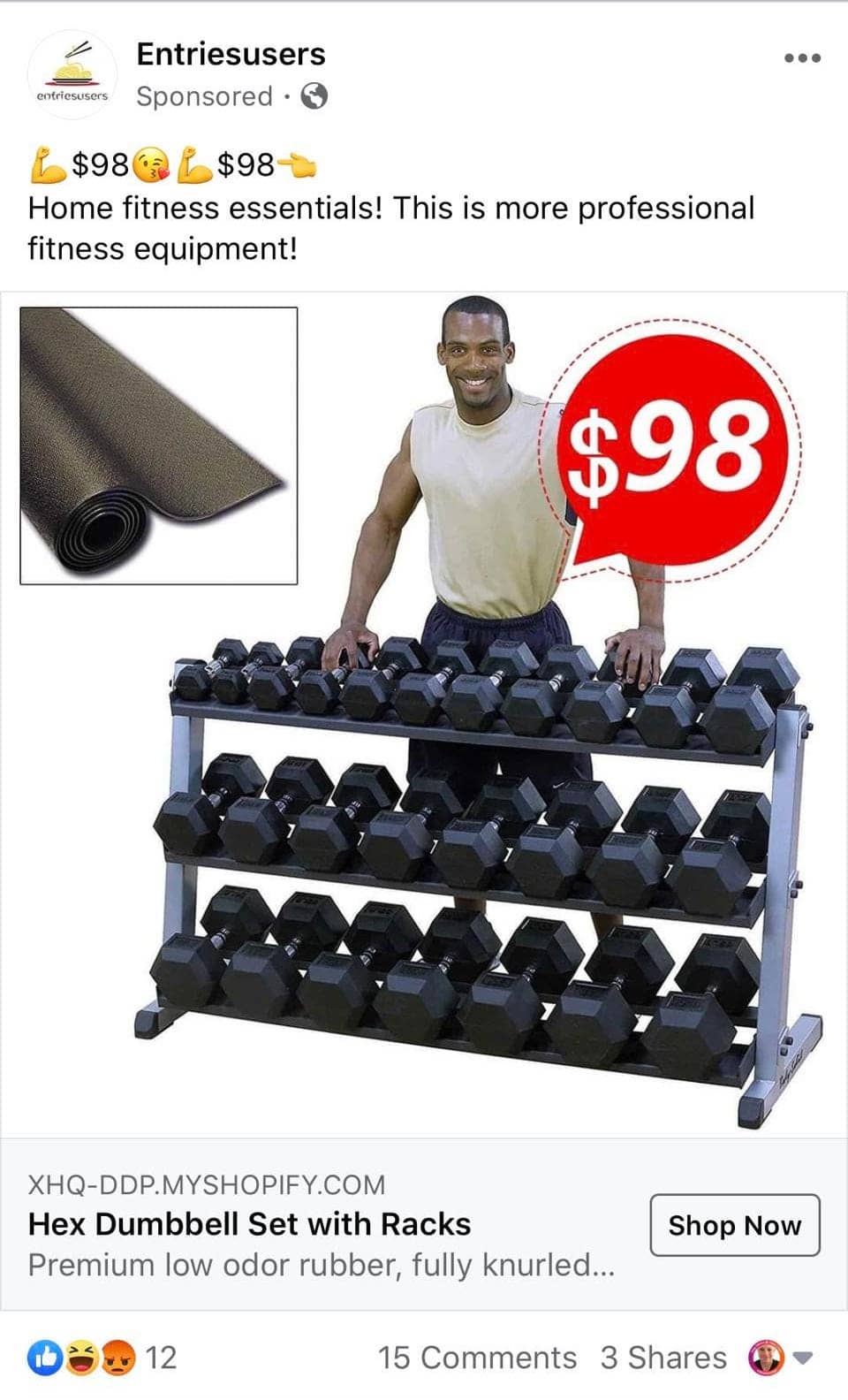

The most frequently reported fraud loss in the first half of 2023 was from people who tried to buy something marketed on social media, coming in at a whopping 44% of all social media fraud loss reports. Most of these reports are about undelivered goods, with no-show clothing and electronics topping the list. [5] According to reports, these scams most often start with an ad on Facebook or Instagram. [6]

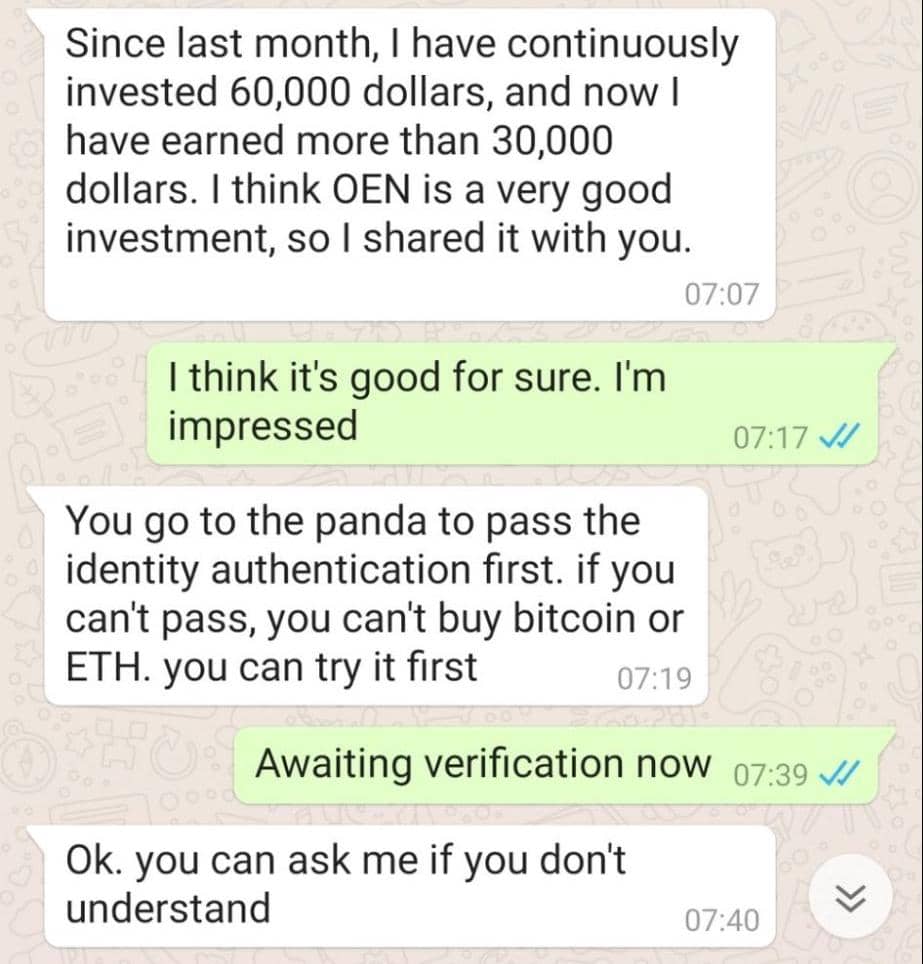

While online shopping scams have the highest number of reports, the largest share of dollar losses are to scams that use social media to promote fake investment opportunities. [7] In the first six months of 2023, more than half the money reported lost to fraud on social media went to investment scammers. To draw people in, these scammers promote their own supposed investment success, often trying to lure people to investment websites and apps that turn out to be bogus. They make promises of huge returns, and even make it look like an “investment” is growing. But if people invest, and reports say it’s usually in cryptocurrency, [8] they end up empty handed.

After investment scams, reports point to romance scams as having the second highest losses on social media. In the first six months of 2023, half of people who said they lost money to an online romance scam said it began on Facebook, Instagram, or Snapchat. [9] These scams often start with a seemingly innocent friend request from a stranger followed by love bombing and the inevitable request for money.

Here are some ways to steer clear of scams on social media:

- Limit who can see your posts and information on social media. All platforms collect information about you from your activities on social media, but visit your privacy settings to set some restrictions.

- If you get a message from a friend about an opportunity or an urgent need for money, call them. Their account may have been hacked—especially if they ask you to pay by cryptocurrency, gift card, or wire transfer. That’s how scammers ask you to pay.

- If someone appears on your social media and rushes you to start a friendship or romance, slow down. Read about romance scams. And never send money to someone you haven’t met in person.

- Before you buy, check out the company. Search online for its name plus “scam” or “complaint.”

[1] This figure excludes reports that did not specify a contact method. Including reports directly to the FTC and reports provided by Sentinel data contributors, 257,945 reports about money lost to fraud originating on social media were filed from January 2021 through June 2023.

[2] See Anderson, K. B., To Whom Do Victims of Mass-Market Consumer Fraud Complain? at 1 (May 2021), available at https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3852323 (study showed only 4.8% of people who experienced mass-market consumer fraud complained to a Better Business Bureau or a government entity).

[3] These figures exclude reports that did not specify a contact method and reports that did not include age information.

[4] See Pew Research Center, Social Media Use in 2021 (April 2021), available at https://www.pewresearch.org/internet/2021/04/07/social-media-use-in-2021/ (study showed people ages 18-29 reported the highest social media use at 84%, followed by ages 30-49 at 81%, ages 50-64 at 73% and 65 and over at 45%). In the first 6 months of 2023, the share of loss reports indicating social media as the contact method by age was as follows: 47% (18-19), 38% (20-29), 32% (30-39), 28% (40-49), 26% (50-59), 21% (60-69), 15% (70-79), 9% (80 and over). Social media was the top contact method ranked by fraud loss reports for all age groups under age 70, while phone call was the top contact method for the 70-79 and 80 and over age groups.

[5] The top undelivered items were identified by hand-coding a random sample of 400 reports that contained a narrative description identifying the items ordered.

[6] In the first 6 months of 2023, people reported undelivered merchandise in 61% of loss reports about online shopping fraud originating on social media. Facebook was identified as the social media platform in 60% of these reports, and Instagram was identified in 24%. This excludes reports that did not identify a platform.

[7] The top platforms identified in these reports were Instagram (30%), Facebook (26%), WhatsApp (13%), and Telegram (9%). Reports that did not indicate a platform are excluded from these calculations.

[8] In the first 6 months of 2023, cryptocurrency was identified as the payment method in 53% of investment-related fraud reports that indicated social media as the method of contact. This excludes reports that did not specify a payment method.

[9] Facebook and Instagram were each identified in 21% of these reports, followed by Snapchat at 8%. This excludes reports that did not specify the platform, website, or app.

Social Media Use and Fraud

Employment scams and the Telegram app.

Posted February 11, 2022 | Reviewed by Ekua Hagan

- Social media fraud is exploding: Per the FTC, 1 in 4 scams start on social media.

- Younger adults aged 18-39 were more than twice as likely as older adults to report losing money to social media scams in 2021.

- Young workers who are new to the job market can be especially vulnerable to employment scams.

A recent report by the Federal Trade Commission (FTC, January 25, 2022) highlights the shift to social media as the platform of choice for con artists. Per the FTC, 1 in 4 scams start on social media, and it is a more profitable venue for reaching victims than any other format.

These scams may start with an ad, a post, or a message using a number of platforms. Losses initiated on social media are estimated to be in the range of $770 million for 2021 alone. The scams range from romance scams to investment scams with a massive increase in cryptocurrency scams. As a result of this shift, younger adults aged 18-39 were more than twice as likely as older adults to report losing money to these scams in 2021.

Younger adults and employment scams

Younger adults have different vulnerabilities than seniors. For example, new workers who need jobs and have limited professional experience are more likely to fall prey to employment scams.

These scams often start with either a bogus job posting on legitimate job boards (like Indeed or ZipRecruiter) or are initiated by third parties that contact job seekers indicating they had seen their resume on Indeed or a similar platform. In a typical example, the scammer suggests using a messaging tool such as Telegram or WhatsApp and conducts most or all of the business using text messaging.

I was contacted by a recent college graduate who was willing to speak to me about their experience with this type of scam. The individual graduated last May and was still looking for work as a remote IT help desk technician or in a similar position. They were contacted by a recruitment manager who said they had seen the graduate's resume and falsely claimed to be from a well-known supplement company. The position was a remote IT-type job, and the pay rate was a tad higher than for other entry-level jobs but not out of the normal range.

The manager immediately indicated that the interview and training would take place on Telegram, and asked the applicant to download the app to proceed. Telegram is an instant messaging service similar to WhatsApp or Facebook messaging. It has been implicated in cryptocurrency scams and is also used by those seeking secrecy as individuals can communicate without exchanging phone numbers.

Apart from taking place on Telegram, the interview appeared routine and professional, with questions about work experience and logistics. Once “hired,” they were asked to give a Social Security number and other personal information. The big red flag, however, was the requirement that they deposit a $1,400 check in their bank account to be used to purchase expensive equipment. At this point, the individual contacted me, and I advised them to contact their banking institution to cancel the check and initiate a fraud investigation. I also recommended initiating a service to monitor credit reports and threats of identity theft.

If allowed to proceed, the scammer may ask the victim to “buy” expensive software with their own funds, and in the meantime, the initial check bounces. Or sometimes, they ask the individual to purchase crypto and send it to other accounts as part of the job, and the applicant becomes unwittingly involved in money laundering. Or both: the applicant loses money and also has their bank accounts used by the scammers for other purposes. Usually, the banking institution will catch the fraudulent transactions and freeze the account or block online banking after a few suspicious transactions.

Law enforcement recommends contacting your bank and credit card companies, contacting credit monitoring companies, and contacting the national consumer credit line in the US (1-888-567-8688) if you suspect you have been the victim of an employment scam.

Scam victims may also need support. Already discouraged from a difficult job search, younger consumers may become less confident and more anxious regarding their prospects. Like any crime victim, they need support and to be assured that these incidents could literally happen to anyone.

https://www.ftc.gov/news-events/blogs/data-spotlight/2022/01/social-med…

Stacey Wood, Ph.D. is the Molly Mason Jones Professor of Psychology at Scripps College and a national expert on elder fraud issues.

The Worst Social Media Scams of 2024 & How To Avoid Them

Social media scams are running rampant. Learn what to look out for and how to avoid the worst social media scams out there right now.

Yaniv Masjedi

Contributing Cybersecurity Writer

Yaniv Masjedi is the CMO at Nextiva, a provider of cloud-based, unified communication services. Previously, he headed the marketing department at Aura. Yaniv studied Political Science and History at UCLA. Follow him on Twitter: @YanivMasjedi.

Jory MacKay

Aura Cybersecurity Editor

Jory MacKay is a writer and award-winning editor with over a decade of experience for online and print publications. He has a bachelor's degree in journalism from the University of Victoria and a passion for helping people identify and avoid fraud.

Do You Know How To Spot a Social Media Scammer?

After Georgina’s husband passed away, she turned to Facebook to feel more connected to her family. Soon after joining, she received a friend request from a man named “Jim” — an attractive stranger who was serving in the military overseas [ * ].

The two hit it off, quickly building an online relationship. Jim had dreams of one day opening a gemstone business after his current service duty ended. But as that date drew closer, he started having serious legal troubles. He needed money to get home — and Georgina was more than happy to help.

When her family and the police finally found out what was going on, it was too late. There was no “Jim” — only a scammer to whom Georgina had sent more than $100,000.

Social media provides prime hunting grounds for scammers.

Last year, one out of every four fraud victims said the scam started with either a social media direct message (DM), ad, or post — with fraud losses hitting $770 million, according to the Federal Trade Commission (FTC) [ * ].

If you or a loved one use social media, you need to be more vigilant than ever to avoid scams.

In this guide, we’ll cover how social media scams work, how to quickly identify a scammer on social media, and 10 of the latest scams to watch out for.

What Are Social Media Scams? How Do People Get Scammed on Social Media?

Social media scams are a type of fraud that is committed on social networking sites. Scammers often create fake profiles, befriend innocent people, and send spam messages or links that lead to malicious websites.

But those are only a few of the ways that scammers can use social media to target you.

Other tactics include:

- Sending you malicious links that infect your devices with malware.

- Running online dating scams and coercing you into sending money or signing up for fake investment platforms. (The latest version of this scam, known as the “pig butchering scam,” has cost victims over $10 billion.)

- Posting ads to fake stores that steal your personal information or money.

- Using social engineering tactics to trick you into giving scammers access to your social media accounts or sending them money and cryptocurrency.

- Using surveys and quizzes to gather sensitive information that they can use to steal your identity.

- Impersonating brands, celebrities, and people you know — and tricking you into giving them money or personal information.

Scammers can create an endless number of fake profiles and ads, putting billions of social media users at risk. So, how do you spot a scammer before it’s too late?

Here’s How To Quickly Identify a Scammer on Social Media

- Their messages include a lot of grammar and spelling errors. Many scammers aren’t native English speakers and may use poor spelling, strange and unnatural language, or awkward formatting. Be especially cautious when someone’s claimed background (where they were born, education, etc.) doesn’t match up with how they write.

- It’s a brand-new social media profile with little content or few friends. The average Facebook user has around 200–250 friends [ * ]. Regardless of the platform, if an account has fewer followers than that or is very new, it could be a scammer.

- The profile belongs to someone with whom you thought you were already friends. Scammers create “cloned” profiles to impersonate your friends and contacts.

- You receive a random message with a link in it. Never click on links or engage with unsolicited direct messages (DMs). This is how scammers trick you into going to fake websites or downloading malware onto your device.

- You’re asked to send money online (via gift cards, wire transfers, payment apps, etc.) or invest in cryptocurrency. This is the #1 red flag that you’re dealing with a social media scammer.

- Posts or ads promoting a deal that seems too good to be true. Low prices or hard-to-find items that are readily available are major warning signs of a scam.

- You’re sent to an online store that shows signs of a scam. Beware of sites that offer good deals but are missing basic information (like shipping times and costs, the company’s address, and direct contact information). When in doubt, follow best practices on how to shop online safely.

- The person insists on taking the conversation off social media and asks you to text them. This allows them to bypass the security measures provided on most social media sites (or continue the scam if their account gets reported and banned).

The 10 Latest Social Media Scams in 2023

- Investment and cryptocurrency scams

- Romance scams

- Social media account takeover fraud

- Authentication code scams

- Social media ads promoting fake online stores

- Impersonator accounts

- “Is this you in this photo/video?” and other link scams

- Social media quizzes

- Lottery, sweepstakes, and giveaway scams

- Job scams on social media

Cybercriminals will stop at nothing to get you to give up your hard-earned money and personal data.

Keep an eye out for these common social media scams to help stay safe from fraudsters:

1. Investment and cryptocurrency scams

Fake cryptocurrency and investment opportunities are among the biggest scams happening on social media right now. It’s estimated that 37% of all social media scam losses last year were due to investment scams — with the majority being cryptocurrency scams [ * ].

The con starts when a scammer reaches out to you, typically via direct social media message. They’ll start off by trying to build a relationship but then quickly share information about a “great investment opportunity” that helped them “make so much money so fast.”

But if you invest, you’ll be sending money or crypto directly to a scammer.

Warning signs of a social media investment scam:

- Promises of high returns with zero risk.

- Professional-looking investment websites or crypto exchanges with little to no information about the company.

- The scammer offers to walk you through your first few trades and claims to have insider knowledge of the market.

Don’t get scammed. Do this instead:

- Conduct a thorough online search and/or contact your state’s Department of Financial Institutions (DFI) to see whether or not the person offering you this opportunity is a real investment banker.

- Don’t share any personal information until you’ve verified whether the company is legitimate or not.

- Do not send money to anyone who has reached out directly over social media.

2. Romance scams

Romance scams are common on dating sites, but many scammers also turn to social media to find victims.

In these scams, fraudsters create fake profiles using stolen photos of attractive people to lure in unsuspecting social media users. Once they initiate a relationship, they’re very forward and “love bomb” their victims — quickly telling them that they’re in love and want to meet up.

Eventually, the catfisher will mention financial troubles and ask for help. Too many people have fallen victim to this, with romance scams comprising 24% of all social media scams [ * ].

Warning signs of a romance scam:

- The person wants to quickly move from the social media site to WhatsApp or texting.

- They promise to meet in person but come up with excuses for why they can’t.

- They repeatedly ask for personal information, like your location or pet’s name.

- The scammer professes their love for you early in the conversation.

- They ask for money or gift cards.

Don’t get scammed. Do this instead:

- Be conscious of what you post publicly online. Scammers can use your posts, tweets, or updates to craft a personalized approach that makes you think you’ve found the “perfect partner.”

- Be safe: when you meet someone you know only from online, always do so in a public place.

- Don’t send money to people you haven’t met in person.

3. Social media account takeover fraud

Account takeover fraud occurs when hackers gain access to someone’s social media profile. They may trick you into giving up access, use a phishing attack to steal your password, or simply buy your login information off the Dark Web.

Once they gain access, scammers will use these accounts to:

- Post about fake investment opportunities.

- Share links to phishing sites or fake apps.

- Gather personal details from their victim’s friends and family members.

- Gain access to other online accounts (for example, by using “sign in with Facebook”).

Warning signs of an account takeover scam:

- Your friend is sending messages out of the blue that don’t sound like something they would say.

- Your friend is randomly posting about investment opportunities or great deals that they just found.

Secure your accounts with strong and unique passwords, and enable two-factor authentication (2FA) whenever possible.

If you receive a message or see a social media post from a friend that doesn’t seem quite right — no matter what platform it’s on — message them on a different platform (or via text/phone call) to double-check that their account didn’t get hacked.

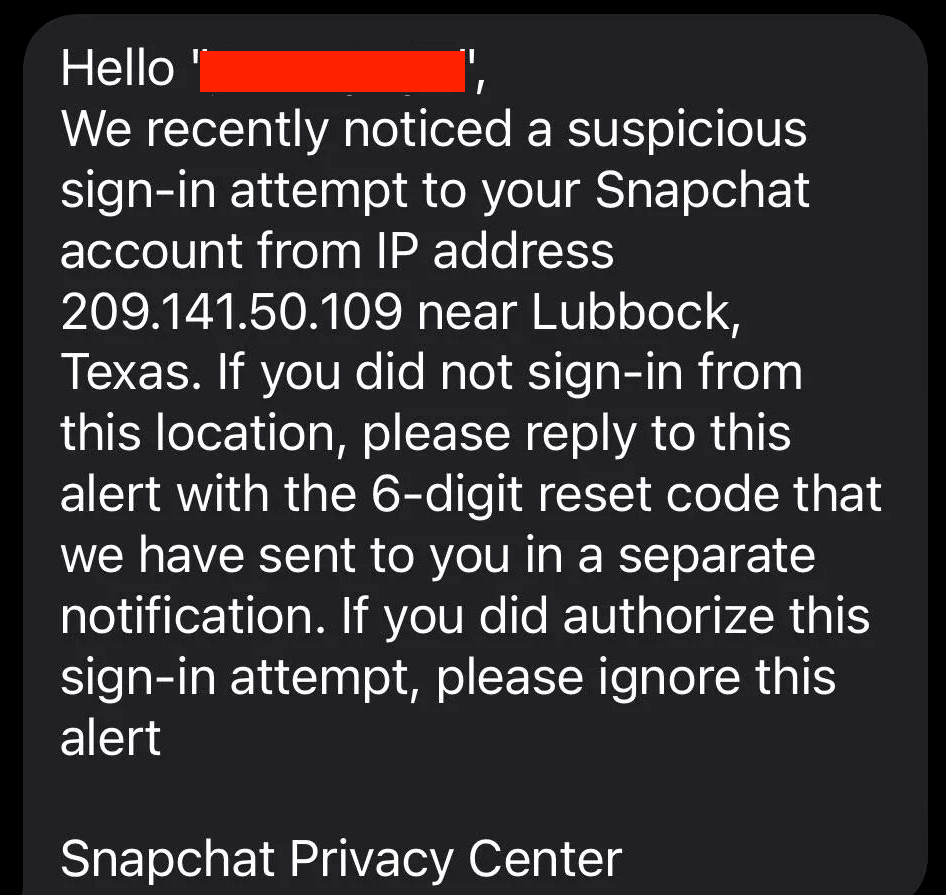

4. Authentication code scams

Two-factor and multi-factor authentication (2FA and MFA) offer additional security for your online accounts by requiring confirmation of a special code along with your password. These codes are usually sent via text or email, making it hard for hackers to steal them.

Scammers on social media pretend to be friends or contacts who need “help” getting their account back and will ask to send a code to your phone or email.

In reality, they’re requesting a 2FA code for your account. If you send the code back to them, they’ll gain access to your online accounts.

Warning signs of an authentication code scam:

- You’ve received a random text with an authentication code for one of your accounts.

- A stranger is texting or messaging you and asking for an authentication code.

- Some scammers claim the code is a way to “tell you’re legitimate” on Facebook Marketplace (or other platforms) as a ruse to get you to send them your code.

Don’t get scammed. Do this instead:

- Never give a stranger an authentication code that has been texted or emailed to you. Legitimate companies will never ask for your password or 2FA code.

- Ignore any requests for 2FA codes, and immediately change your passwords for the affected accounts.

5. Social media ads promoting fake online stores or counterfeit products

Scammers often use social media ads to promote fake products or stores. The Better Business Bureau (BBB) has received thousands of complaints about misleading Facebook and Instagram ads [ * ].

These online shopping ads try to capture your attention by saying the proceeds are going to charity or by listing items at unbelievable prices.

However, the ads are just fronts to get your money or information, and the scammers do not intend to fulfill the order.

Warning signs of a social media ad scam:

- Poor-quality product images are the center point of the ad.

- Prices are far lower than what other retailers are charging.

- There are spelling and grammatical errors in the ad copy.

If a deal seems too good to be true, it probably is. To be safe, do a Google search of the brand or product to check reviews. Consider searching for “[brand name] + [scam/reviews/legit]” to see if anything comes up.
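If you check brands often, the search pattern above can be scripted. The following is a minimal sketch, not an official tool: the function names `scam_check_queries` and `search_url` and the brand "Acme Gadgets" are made up for illustration, and the code only builds search links for you to open and read yourself.

```python
from urllib.parse import quote_plus

# Search terms taken from the advice above.
CHECK_TERMS = ("scam", "reviews", "legit")


def scam_check_queries(brand: str) -> list[str]:
    """Build one search query per check term for the given brand name."""
    return [f"{brand} {term}" for term in CHECK_TERMS]


def search_url(query: str) -> str:
    """Turn a query into a Google search link (spaces are encoded as '+')."""
    return "https://www.google.com/search?q=" + quote_plus(query)


if __name__ == "__main__":
    # "Acme Gadgets" is a hypothetical brand used only as an example.
    for query in scam_check_queries("Acme Gadgets"):
        print(search_url(query))
```

The results still need a human read: a lack of complaints does not prove a store is legitimate, especially if it is brand new.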

6. Impersonator accounts

Scammers create imposter social media accounts using someone else's name, photos, and other identifying information.

Impersonator accounts may request money, send links for phishing scams, or post fake giveaways and prizes.

Scammers have also started impersonating celebrities. Several people have shared their experiences on social media about celebrities supposedly contacting them for financial assistance [ * ] or claiming they’re raising money for charities.

Warning signs of an impersonator scam:

- The account is not verified — especially if it normally would be (i.e., a celebrity or influencer).

- A celebrity or someone you don’t know well is requesting money.

- A “lookalike” social media handle misleadingly seems like it could belong to the real person.

A celebrity or influencer is likely not messaging you to ask for financial help. Always conduct an additional search to see if you can find a verified account for this person, or an account that displays more followers, content, and engagement.
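To make the “lookalike handle” warning sign concrete, here is a rough sketch of how a handle could be compared against the real one. It is an illustration under stated assumptions, not how any platform actually detects impersonators: the character-swap table, the 0.85 threshold, and the handle `@example_star` are all hypothetical choices.

```python
from difflib import SequenceMatcher

# Character swaps often used to imitate a handle (illustrative, not exhaustive).
CONFUSABLES = str.maketrans({"0": "o", "1": "l", "3": "e", "5": "s", "_": "", ".": ""})


def normalize(handle: str) -> str:
    """Lowercase a handle, drop a leading @, and undo common character swaps."""
    return handle.lower().lstrip("@").translate(CONFUSABLES)


def looks_like(candidate: str, official: str, threshold: float = 0.85) -> bool:
    """Return True when candidate is not the official handle but closely resembles it."""
    if candidate.lower().lstrip("@") == official.lower().lstrip("@"):
        return False  # exact match: this is the official handle itself
    ratio = SequenceMatcher(None, normalize(candidate), normalize(official)).ratio()
    return ratio >= threshold


if __name__ == "__main__":
    official = "@example_star"  # hypothetical verified handle
    for handle in ("@examp1e_star", "@example_star", "@gardening_tips"):
        print(handle, looks_like(handle, official))
```

A high similarity score is only a hint. A fan account can have a similar name, and a scammer can pick a handle that looks nothing like the original, so the other warning signs still apply.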

7. “Is this you in this photo/video?” and other link scams

This scam is another version of a hacked account scam. You might receive a message from a friend or stranger that says something like, “Is this you in this photo?!” alongside a link.

While reading a message like this can be nerve-racking (or pique your curiosity), don’t click on the link. If you do, it will most likely take you to a fake social media login page designed to steal your password.

Warning signs of a link scam:

- You receive a random message with a strange-looking link or a threatening message.

- When clicking on a link, you’re prompted to log in to a website.

Never click on a suspicious-looking link. Check in with the friend from whom you received the link, but use a different platform or method of communication, either to see if it’s legitimate or to let them know that their social media account has been hacked.

If you’re ever asked to log in to an account via a link, check that the page is secure and has a valid security certificate (issued to the site that you think you’re logging in to).
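Part of that check is simply reading the address carefully. The sketch below shows the idea, assuming you already know the real domain of the site you mean to visit; the function name `is_expected_login_url` and the fake addresses are hypothetical. It only inspects the text of the link and does not verify the security certificate itself, which your browser does when you load the page.

```python
from urllib.parse import urlsplit


def is_expected_login_url(url: str, expected_domain: str) -> bool:
    """Return True only for an https URL whose host is the expected domain or a subdomain of it."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()
    expected = expected_domain.lower()
    if parts.scheme != "https":
        return False  # plain http (or anything else) is not an encrypted connection
    # Accept "facebook.com" and "www.facebook.com", but not "facebook.com.evil.example".
    return host == expected or host.endswith("." + expected)


if __name__ == "__main__":
    links = [
        "https://www.facebook.com/login",
        "http://www.facebook.com/login",
        "https://facebook.com.account-verify.example/login",  # made-up phishing-style address
        "https://facebook.com@evil.example/login",  # real host here is evil.example
    ]
    for link in links:
        print(link, is_expected_login_url(link, "facebook.com"))
```

The last two examples show why a quick glance is not enough: the familiar name appears in the link, but the host that actually receives your password is a different site.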

8. Social media quizzes

Scammers use quizzes on social media to steal your personal information and break into your accounts.

These quizzes start with innocent-sounding questions, such as “What car did you pass your driver’s test with?” or “What is your mother’s maiden name?” or “What street did you grow up on?”

But these are common security questions used to protect your bank account and other financial accounts.

Warning signs of a social media quiz scam:

- A quiz poses unrelated and deeply personal questions.

- You recognize the questions from options you’ve had for security questions.

- The quiz requests your phone number in order for you to view the results.

If a quiz starts asking strange questions, stop there. Don’t answer further questions, and immediately report the account to the social media platform.

9. Lottery, sweepstakes, and giveaway scams

In this type of scam, fraudsters DM you to say you've won a prize. But to receive it, you must first pay or provide financial information.

Everyone wants to win a big prize. But if you haven't entered any giveaways, you shouldn't receive congratulatory messages in your DMs.

Warning signs of a lottery, sweepstakes, or giveaway scam:

- You’re being asked to pay to receive your prize (e.g., taxes, shipping, processing fees).

- You’re told that paying increases your chances of winning.

- You’re asked to provide financial account information or a phone number to claim your prize.

Do not pay an account that DMs you. No credible lottery or sweepstakes requires you to pay. It’s illegal to request money for sweepstakes.

If you really have entered the lottery or sweepstakes, ensure that the person contacting you about your prize is not asking for money upfront.

10. Job scams on social media

The number of job scams has skyrocketed in the last few years as more Americans work from home or exclusively online.

Fraudsters create fake social media accounts to promote amazing remote job opportunities, promising that you can make tons of money. Scammers have two objectives when running a job scam:

- Get money from you. A scammer will give you the job, but only if you “buy the equipment” first.

- Get information from you. Scammers will send you a job application in hopes that you’ll fill it out and give away private information, such as your Social Security number and home address.

Warning signs of a job scam:

- The job pays extremely well for not much work.

- The supposed employer wants you to pay for your own equipment (legitimate companies should provide you with everything you need).

- You’re sent a check for a large amount and told to deposit it and then send some of the money back to the employer. This is a classic bank scam.

Always research companies to which you’re applying, and make sure they’re legitimate. You can check reviews on sites like Glassdoor, or search for the company name on the Better Business Bureau (BBB) website. In all cases, you should never pay for equipment, training, or supplies upfront for a new job.

Did You Fall for a Social Media Scam? Do This

With 25% of all fraud victims getting scammed on social media, there’s a good chance that you could become a victim. Here’s what to do if you’ve been scammed on social media.

If scammers took over your social media account:

- Request a password reset email from the social media service. Each site and app has a different process for recovering a hacked account. For example, here’s how to recover a hacked Instagram account.

- Once you regain access, force any unfamiliar sessions to log out. For example, check your “login activity” and look for devices or locations that you don’t recognize.

- Then, update the email and phone number associated with your account, and change your passwords.

- Enable 2FA on your account and use an authenticator app such as Authy (instead of SMS text messages).

If you sent a social media scammer money or crypto:

- Try to cancel the transaction by contacting the financial institution or crypto exchange that you used.

- Freeze your credit. This stops scammers from using your financial information to open new accounts or take out loans.

- Report the fraud to the social media platform and to the FTC at ReportFraud.ftc.gov.

- If you have any information that could lead to the arrest of the scammer, you should also file a police report with your local law enforcement.

If you clicked on a strange link or gave scammers personal information:

- Report the fraud to the social media platform. Collect as much information as you can, including screenshots of conversations and the scammer’s profile.

- File an official identity theft report with the FTC at IdentityTheft.gov. This is an essential step if you need to dispute fraudulent transactions or prove that you were the victim of identity theft.

- Report the fraud to the FBI’s Internet Crime Complaint Center (IC3). This will help the authorities track current scams and go after the fraudsters.

- Do a full scan of your device with antivirus software, and follow the steps of what to do if you think you’ve been hacked.

How To Stay Safe and Avoid Social Media Scams

With billions of people using social media, it’s impossible to completely avoid scammers. But if you’re vigilant and do your due diligence, you can stay safe and social at the same time.

Whenever you’re using social media, make sure to follow these best practices:

- Never click on pop-up messages or links from unsolicited, private messages.

- Don’t give out personal information unless you know the website you’re on is legitimate and secure.

- Adjust your social media privacy settings to ensure that your posts are not visible to strangers.

- Don’t respond to strangers messaging you on social media.

- Create strong, unique passwords for each social media account.

- Use a password manager to securely store your passwords and warn you if your account has been compromised.

- Activate two-factor authentication (2FA) for your accounts.

- If you suspect a friend or company has been hacked, contact them directly through trusted channels (such as their phone number).

- Never send money to someone you’ve only met on social media.

For added protection, consider signing up for Aura’s all-in-one digital security solution to keep you and your family safe from scams.

With Aura, you get #1-rated identity theft protection, 24/7 credit monitoring, proactive digital security tools — including antivirus software, virtual private network (VPN), password manager, and more — as well as $1 million in insurance coverage for eligible losses due to identity theft.

Editorial note: Our articles provide educational information for you to increase awareness about digital safety. Aura’s services may not provide the exact features we write about, nor may cover or protect against every type of crime, fraud, or threat discussed in our articles. Please review our Terms during enrollment or setup for more information. Remember that no one can prevent all identity theft or cybercrime.

The Psychology of Internet Fraud Victimisation: a Systematic Review

- Open access

- Published: 02 July 2019

- Volume 34, pages 231–245 (2019)

- Gareth Norris¹ (ORCID: orcid.org/0000-0002-7828-5857),

- Alexandra Brookes¹ &

- David Dowell²

Existing theories of fraud provide some insight into how criminals target and exploit people in the online environment; whilst reference to psychological explanations is common, the actual use of established behavioural theories and/or methods in these studies is often limited. In particular, there is less understanding of why certain people/demographics are likely to respond to fraudulent communications. This systematic review will provide a timely synthesis of the leading psychologically based literature to establish the key theories and empirical research that promise to impact on anti-fraud policies and campaigns. Relevant databases and websites were searched using terms related to psychology and fraud victimisation. A total of 44 papers were extracted and 34 included in the final analysis. The studies range in their scope and methods; overall, three main factors were identified: message (n = 6), experiential (n = 7), and dispositional (n = 21), although there was some overlap between these (for example, mapping message factors onto the dispositional traits of the victim). Despite a growing body of research, the total number of studies able to identify specific psychological processes associated with increased susceptibility to online fraud victimisation was limited. Messages are targeted to appeal to specific psychological vulnerabilities, the most successful linking message with human factors, for example, time-limited communications designed to enact peripheral rather than central information processing. Suggestions for future research and practical interventions are discussed.

Introduction

The FBI’s Internet Crime Complaint Center (IC3) recently reported figures that show Internet-enabled theft, fraud, and exploitation being responsible for $2.7 billion in financial losses in 2018 (FBI 2018). The annual Internet Crime Report shows that IC3 received 351,936 complaints that year (nearly 1000 per day), with non-payment/non-delivery scams, extortion, and personal data breaches the most frequently reported. The most financially costly were business email compromise, romance or confidence fraud, and investment scams. Internet-based fraud was the fastest growing crime in the UK in 2015–2016, with 3.25 million victims each year and an annual combined loss of £3.6 billion (Button et al. 2016). Estimates indicate 4.7 million incidents of fraud and computer misuse were experienced by adults aged 16 and over in England and Wales for the survey year ending September 2017 (ONS 2017). Button and Cross (2017, p. 23) provide a summary on the rising role of technology in perpetuating these crimes: ‘[i]ndeed it is estimated globally there are 29 billion spam emails daily and that the email virus rate is 1 in 196 and phishing emails are 1 in 392’. The ongoing infiltration of technology into our daily lives, and our reliance on it, is likely to see this trend increase in the short-to-medium term until we develop suitable strategies to stay secure online.

However, despite current efforts to educate individuals on the way in which criminals operate online, millions of these fraudulent activities, from phishing attempts to ‘lonely hearts’ scams, are responded to each year (NAO 2017); inherent human weaknesses for incentive-driven behaviours seemingly make many of these scams too alluring to resist. For example, priming individuals with images of money has been shown to reduce helpfulness towards others and increase isolation in tasks involving new acquaintances (Vohs et al. 2006). Similarly, financial decisions engage different brain structures from similar non-financial rewards (Knutson et al. 2000). Anecdotally, we know that fraud-related messages are designed to exploit certain behavioural and demographic ‘weaknesses’, for example, impulsiveness and/or loneliness (Duffield and Grabosky 2001). Button et al. (2009) note that when considering the perpetrators of fraud, ‘[…] there is only limited data available. Even the law enforcement community does not always know the background of the perpetrators.’ (p. 13). Significantly, the existing fraud literature is limited in scope in terms of exploring the ‘how’ and the ‘why’: in precisely what way do they influence individual decision-making processes? Thus, this systematic review aims to connect some of these methodological and conceptual links to establish how message, experiential, and dispositional factors may influence an individual’s cognitive processing associated with increased likelihood for Internet fraud victimisation.

Previous Reviews

There are a number of reviews in the wider online/consumer fraud area, although the focus for many is age as a risk factor. Jackson’s (2017) evaluation is predominantly aimed at methodological and prevalence issues and suggests a lack of knowledge of risk factors in the financial exploitation of older people increases propensity for fraud. More recently, a review by Burnes et al. (2017) expands upon many of these points to also include susceptibility to web scams. Incorporating the wider issue of consumer fraud, Ross et al. (2014) attempt to dispel some of the myths regarding age-related victimisation and increased vulnerability. They document six key areas where older people are more likely to be disproportionately exploited by fraudsters, for example, slower cognitive processing and increased trust. However, Ross et al. suggest that age can also act as a protective factor in the sense that older people are less likely to use the Internet for financial transactions. In particular, they caution that vulnerability does not equal prevalence; Ross et al. conclude that psychological research in this area must not overly stereotype older people, lest policies designed to reduce victimisation mistakenly create further opportunities for crime.

A recently published evaluation of fraud typologies and victims by the UK National Fraud Authority (NFA) highlights how victims are selected, approach strategies, and profiles of victims (Button et al. 2016). This report identifies a number of research articles which indicate that targeting individual susceptibility to fraud is a key feature of many scams; for example, using time-limited responses to limit the amount of deliberation. Risk taking and low self-control are also identified as additional personality factors linked to characteristics of fraud victims. The report also goes some way to dispel the myth that older people are more probable victims (although they are more likely to experience fraud than theft or robbery). Lower levels of reporting may be more apparent in older victims, whether they knew the fraud had taken place or not, with those who blamed themselves also being less likely to report. Significantly, active social networks encouraged reporting; these may be less extensive in some older populations. Ultimately, Button et al. caution that: ‘[…] what is striking about of [sic] the scams is that the profiles cover almost everybody; hence almost anyone could become the victim of a scam’ (p. 24). Consequently, although we can observe some small variations in demographics of fraud victims (e.g. age, gender, SES), it appears that individual psychological differences are likely to be the key factor in explaining why some people are more likely to arrive at erroneous decisions in responding to fraudulent online messages.

Theoretical and Conceptual Issues

Most previous research in this area focuses predominantly on the persuasive influence of the scam message employed by the fraudster (see Chang and Chong 2010) or the knowledge of scams held by the potential victim (see Harrison et al. 2016a). The purpose of this systematic review is to extend that focus to incorporate variables related to individual psychological differences, i.e. those which make people more vulnerable to being deceived by fraudulent communications (see Judges et al. 2017). Research by Modic and colleagues has highlighted individual differences in scam compliance through the lens of susceptibility to persuasion and wider theoretical links with social influence (see Modic et al. 2018; Modic and Lea 2013). The Susceptibility to Persuasion (StP) scale has demonstrated good construct validity in relation to self-report scam plausibility across large samples. The second iteration (StP-II; see Modic et al. 2018) incorporates 10 subscales measuring individual differences in a range of mechanisms, including sensation seeking, risk preferences, and social influence. However, we are still some way from achieving a robust and testable model of online fraud susceptibility.

Dispositional factors currently assessed in the literature predominantly focus on demographic factors, such as age, gender, income, and education (Purkait et al. 2014), in conjunction with individual characteristics, such as low self-control (Holtfreter et al. 2008), high levels of perceived loneliness (Buchanan and Whitty 2014), and impulsivity (Pattinson et al. 2011). The application of Petty and Cacioppo’s (1986) elaboration likelihood model (ELM) to explain how psychological mechanisms impact deception likelihood is common (see Vishwanath et al. 2011), although few have applied this theoretical model to explore how dispositional factors influence an individual’s cognitive processing associated with victimisation. Similarly, there are a limited number of experimental designs or uses of large secondary data sets in this field, both of which would provide the vital understanding of ‘how’ these influences occur. Upon reflection, much of the literature exploring dispositional factors and vulnerability to fraud is limited in scope in terms of understanding the psychological mechanisms that lead people to become victims of these scams. Without sufficient grounding in established psychological mechanisms, attempts to prevent or limit victimisation will likely underperform. The aim of this systematic review is to collate and analyse the key research in relation to the psychology of Internet fraud to ascertain the baseline theoretical and research knowledge in this growing area, focusing on established psychological theories and empirically based methodologies.

Methodology

The aim is to examine, through a systematic review of the literature, the extent to which psychological theories have been empirically tested to explain Internet fraud victimisation. The primary focus is upon understanding the literature which relates to how victims respond to fraudulent communications rather than on the offender. However, as Button, Lewis, and Tapley (2009, p. 15) note: ‘[t]he growing literature upon different types of fraud provides much information on the techniques of fraudsters. These diverse range of tactics used [can] be considered under three sub-headings, victim selection techniques, perpetration strategies and finally detection avoiding strategies’:

Victim selection techniques: the strategies that fraudsters use to contact their victims, e.g. email or virus.

Perpetration strategies: once the victim has been identified, these are the techniques used by fraudsters to secure money or identity, e.g. legitimate appearance of an email.

Detection avoidance techniques: techniques used by fraudsters that would minimise their risk of getting caught/sentenced, e.g. making reporting unlikely by asking for only a small sum of money.

The first two of these are the focus of this review; the primary aim is to consolidate our understanding of the psychological mechanisms by which perpetrator (message) and victim (respondent) interact.

Search Methods

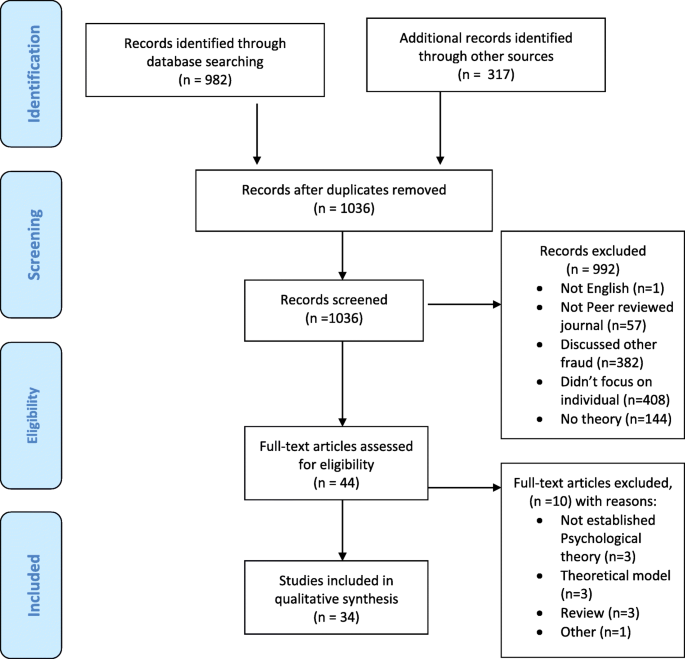

Two investigators (GN and AB) independently screened both titles and abstracts and relevant full-text articles from the following databases: PsycINFO, ProQuest, International Bibliography of the Social Sciences, Applied Social Science Index and Abstracts, Sociological Abstracts, Sage Criminology, and Criminal Justice Abstracts, alongside grey literature from Dissertation Abstracts Online and COS Conference Paper Index. Figure 1 shows the flow diagram outlining the search and exclusion process conforming to the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines (Moher et al. 2009). Full technical data for the systematic review are included in Appendix A.

Fig. 1 PRISMA flow diagram for identifying psychologically based studies into Internet-based fraud

Inclusion Criteria

The key inclusion criteria were that the paper should be an empirical examination of an established psychological theory relating to online fraud. In order to minimise more general commentary and published statistics articles, we restricted our search criteria to peer-reviewed journal articles, conference presentations, and book chapters in English. Both quantitative and qualitative studies were acceptable, but the latter should employ a recognised analysis technique, for example, interpretive phenomenological analysis (IPA), as opposed to more anecdotal commentaries of cases, scams, etc.

Exclusion Criteria

A large number of articles were extracted and screened in full text before being rejected as not fulfilling the inclusion criteria (n = 1036). The majority of these articles purported to include psychological theories and/or measures (for example, personality). Additional exclusions included other fraud types (e.g. corporate or academic fraud), those not focusing on the individual or individual factors (e.g. socialisation), and those that did not include at least one established and testable psychological theory (e.g. loneliness).

Data Collection and Analysis

Main results.

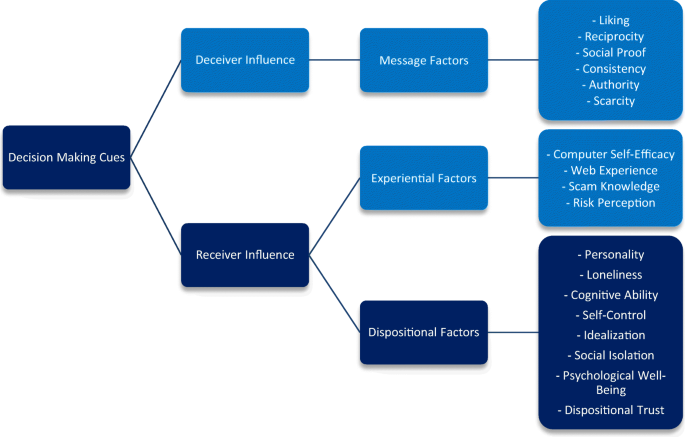

A total of 1299 initial papers were extracted; 39 papers were retained after the exclusion criteria were applied, and an additional 5 equivocal items were also added (n = 44) (see Fig. 1). From this, a further 10 were excluded by a third author (DD) because they did not include an established psychological theory and/or were theoretical models or existing reviews (i.e. not empirical studies). The final number of reviewed articles was 34. The studies range in their scope and methods; overall, three main factors were identified: message, experiential, and dispositional (see Fig. 2).

Fig. 2 Summary diagram of the variables and processes which influence an individual’s ability to correctly identify fraudulent communications
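The screening arithmetic reported in this section can be checked with a short sketch. All figures are taken from the text (the per-factor counts are those given in the abstract); the variable names are illustrative only and not part of the review's method.

```python
# Counts as reported in the review; names are illustrative assumptions.
initial_extracted = 1299
retained_after_exclusion = 39
equivocal_added = 5
excluded_by_third_author = 10

# 39 retained papers plus 5 equivocal items give the shortlist.
shortlisted = retained_after_exclusion + equivocal_added
# Removing the 10 non-empirical or non-theoretical papers gives the final set.
final_reviewed = shortlisted - excluded_by_third_author

# Papers per factor, as stated in the abstract.
papers_per_factor = {"message": 6, "experiential": 7, "dispositional": 21}


def counts_are_consistent() -> bool:
    """True if the stage totals and per-factor counts agree with each other."""
    return (
        shortlisted == 44
        and final_reviewed == 34
        and sum(papers_per_factor.values()) == final_reviewed
    )


print(shortlisted, final_reviewed, counts_are_consistent())
```

The three factor counts sum to the 34 reviewed papers, which matches the stage totals given above.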

Meta-analysis

Given the diverse nature of the theoretical background and unrelated outcome measures from each study, a meta-analysis of the findings is not appropriate.

Summary of Studies

Modic and Lea (2013) regard Internet fraud as a staged process, involving: ‘[…] plausibility, interaction with a fraudster, and losing utility to fraud’ (p. 15); once an offer is deemed plausible, the later stages are therefore more likely to be forthcoming. The review highlighted some broad groupings under which the empirical research in this area has been targeted. The key variables associated with deciding whether information received via the Internet is plausible can be divided into two key areas: deceiver and receiver influence (see Fig. 1). These categories represent both the content of the message and the way in which it interacts with the target. The receiver characteristics can also be further divided into two distinct elements: experiential and dispositional factors. Experiential factors relate to the person’s knowledge and experience of computers and knowledge of fraudulent activity. Dispositional factors include personality, heuristics, and cognitive ability.

Message Factors

The 6 papers classified into this category primarily focused on how the fraudulent message was framed in order to maximise the potential for enticing a victim (Table 1). In these articles, only limited mapping onto demographic or individual factors was made. Experimental designs included ‘fake’ phishing emails sent out to university staff and students purporting to be from ‘Information Services’ requesting account verification (Luo et al. 2013). Follow-up studies of respondent demographics and personality features in these ‘real-world’ experiments would potentially yield important results for understanding fraud victims’ behaviour, although ethically they may present some limitations.

Fischer et al. (2013) highlight four key factors that make people more likely to respond to fraudulent communications: (1) high motivation triggers in the size of the reward; (2) trust by focusing on interaction rather than message content, often generated by using ‘official’ notices, logos etc.; (3) social influence, including liking and reciprocation, designed to gain compliance; and (4) the scarcity or urgency of the opportunity. Utilising several waves of quantitative and qualitative studies, Fischer et al. found mixed support for these four message factors and concluded that a fifth factor, personality, may be more predictive of victimisation. Fischer et al. suggest that this could be in some way linked to ‘self-confidence’ and an increased belief in one’s ability to detect scams. Scam compliance was linked to decision-making errors, i.e. the exploitation of heuristics (judgement inaccuracies), which limits the value of message factors alone as a viable explanation of fraud. It appears that individual differences are more relevant to understanding the way messages are constructed and what processes they are likely to exploit. For example, in what way does a ‘time-limited’ message interact with certain individuals’ decision-making processes to make them more likely to respond?

The review highlighted that the content of the message was important to ‘hook’ the target into engaging with the deception. For example, Luo et al. (2013) demonstrated that messages with high source credibility and quality arguments are particularly effective in ‘spear phishing’ attacks. Wang et al. (2012) also found that ‘time-limited’ messages (those which required a quick response) were more likely to be responded to than those which appeared less urgent; this suggests that these ‘visceral triggers’ reduce the cognitive effort expended upon assessing the authenticity of the message. Vishwanath (2016) extends this perspective to the use of smartphones as a means of reducing cognitive involvement in email filtering, alongside usage variables such as habituation. Responding to fraudulent messages on smartphones was found to be more probable, potentially due to increased cognitive demands and further impacted by the presentation on smaller screens and routine engagement with continued email demands whilst on the move. Certainly, responding to fraudulent messages on smartphones is one potential additional variable to be included in future research.

Experiential Factors

A total of 7 papers were classified into the experiential category, focusing primarily on the experience and expertise of the end-user (Table 2). Knowledge of Internet scams was one way in which people showed some resilience to victimisation; for example, Wright and Marett (2010) indicated that people with higher levels of computer self-efficacy, web experience, and security knowledge were less susceptible to phishing attempts. However, Internet use itself was not a protective factor; for some, usage patterns predicted whether they were likely to respond to fraudulent requests, with those people dealing with significantly high email traffic more likely to respond to messages (van Wilsem 2011; see also Vishwanath 2015). Self-control was identified as a key predictor of whether people were able to withhold responses to fraudulent requests in van Wilsem’s study; what did emerge was a promising underlying pathway that linked low self-control to engaging in more online consumer behaviour generally. Interestingly, Vishwanath (2015) proposes that email behaviour, particularly habitual use, is linked to low social and emotional control and predictive of increased likelihood to respond to phishing emails.

Harrison et al. (2016a) demonstrate that individual processing styles were also indicative of the likelihood of fraud responding, although this link was moderated significantly by individual factors linked to email knowledge and experience. Similarly, Zielinska et al. (2015) compared experts and novices in their ability to conceptually link phishing characteristics, discovering that the latter used much simpler mental processes in evaluating how a message might be a phishing attempt. Using a novel neurological pathway design, Zielinska and colleagues demonstrate how semantic connections become more sophisticated following experience with how phishing attacks are executed and how to take steps to avoid fraud victimisation. The implications for interventions are evident; in addition, the prospect of mapping these novice reactions to phishing attempts enables a deeper understanding of the way in which people become victims, i.e. the personal factors that limit the way in which people optimise their decision-making strategies.

Hence, a person’s own competency with Internet safety cannot alone explain how they become victims of web-based fraud. Rather, it is the interaction between their ability and usage of the web and general dispositional factors, such as more deliberate and controlled information processing, that is possibly a more fruitful avenue of future research in this domain. Potentially, habitual email users are susceptible (feasibly through low social control) to the way in which fraudulent messages are framed, for example, through the use of time-limited rewards, particularly when using mobile devices. It appears, however, that whilst message content and Internet experience have some predictive ability, the key mediating influence is individual dispositional factors, which determine the way in which message and experiential factors are processed.

Dispositional Factors

In reviewing the literature in the previous sections, it becomes apparent that the individual is central to the fraud victimisation process. Fischer et al. (2013) pose the question: ‘[w]hy do so many people all over the world, so often, react to completely worthless scam offers?’ (p. 2060). Likewise, despite the investment in firewalls and anti-virus software, so-called semantic attacks exploit inherent weaknesses in the system (the individual) so that sensitive information is divulged (Harrison et al. 2016a, b; p. 265). Workman (2008) formalises this process of social engineering as: ‘[…] techniques used to manipulate people into performing actions or divulging confidential information’ (p. 662). Consequently, the key mediating factors between the message(s) and whether experience/expertise in detecting fraud is likely to be of practical use are individual and personality variables.

One of the most cited papers in this domain is an early examination by Langenderfer and Shimp (2001) (Table 3). Although not focused solely on Internet-based fraud, it nonetheless identifies the ‘visceral influences’ that make individuals vulnerable to scams, through a process that reduces the cognitive deliberation when faced with a message. Notably, Langenderfer and Shimp utilise Petty and Cacioppo’s (1986) theory of persuasion: the elaboration likelihood model (ELM). In essence, ELM suggests that individuals who are motivated to respond to the content contained in a fraudulent message are likely to focus on and be persuaded by the key messages. On the other hand, those less motivated by the content are more likely to be influenced by peripheral cues. Hence, motivation is likely to be negatively correlated with scam victimisation; the higher the level of motivation, the more likely it is that attention will be expended upon aspects of the message and cues to deception identified. However, although widely cited, Langenderfer and Shimp (2001) rely heavily on largely anecdotal evidence for their ELM-based theory of scam compliance. Additional studies examining the relevance of ELM have found mixed support for the relevance of this individual factor relating to fraud victimisation (see Whitty 2013; Chang 2008), although Vishwanath et al. (2011) do support the ELM approach in conjunction with message and experiential influences.

In the previous section, the link between computer knowledge and self-control is identified by van Wilsem ( 2011 ). Results from Dickman’s ( 1990 ) Impulsivity Inventory (DII), used as a measure of self-control, support the expected link between impulsivity and increased levels of fraud susceptibility. Pattinson et al. ( 2011 ) examine cognitive impulsivity alongside personality and computer familiarity. Personality, with the exception of agreeableness, was less predictive of fraud susceptibility than familiarity with computers generally (see the ‘Experiential Factors’ section). With regard to impulsivity, however, there was only a small relationship; generally speaking, less impulsive respondents are more able to manage potentially fraudulent messages. Using willingness to take risky investments as a proxy for low self-control, Chen et al. ( 2017 ) identify the role impulsivity has in susceptibility to responding to phishing messages, particularly those promising financial gains. Chen et al. advocate the ‘unpacking’ of the way in which Internet scams exploit impulsive individuals through financial rewards. Reisig and Holtfreter ( 2013 ) add further support for the notion that lower levels of self-control are correlated with fraud victimisation.

Wider ‘personality’ correlates of fraud susceptibility often feature in studies, yet many of these fail to incorporate established psychological theories from personality research and/or validated instruments. Of those studies that did meet the inclusion criteria, a number attempt more exploratory research into the Big 5 personality characteristics. Hong et al. ( 2013 ) report that respondents who were lower in openness to experience, and those who were more introverted, were more likely to delete legitimate emails. Hence, although these respondents were less prone to being victims of phishing messages, lower levels of trust (also measured) were predictive of general suspicion and potential rejection of genuine communication as a result. In contrast, only agreeableness was identified as a risk factor in Pattinson et al.’s ( 2011 ) research. Alternative personality inventories, for example, the HEXACO Personality Inventory (Judges et al. 2017 ) and the DISC Personality Questionnaire (Chuchuen and Chanvarasuth 2015 ), provide additional evidence for a general personality influence on fraud susceptibility. Whilst some small links between personality factors and the potential for increased victimisation emerge from these and other studies (for example, victims have lower conscientiousness scores), Chuchuen and Chanvarasuth ( 2015 ) caution that, given the wide range of phishing and fraudulent message content, no one personality feature is likely to predict susceptibility in isolation: ‘[…] there is relatively little information about the relationship between personality types and phishing techniques. However, there is some interesting literature on the relationship between decision-making that could reflect upon this area’ (p. 332).

The ELM/schema models suggest that central and peripheral decision strategies are key to understanding how cues to fraudulent messages are neglected (Langenderfer and Shimp 2001 ). Additional heuristics and potential judgement errors have also been examined: through a content analysis of phishing emails, Chang and Chong ( 2010 ) identify the representativeness, availability, and affect heuristics as possible sources of decision errors. Similarly, anchoring (the tendency to use previous information as a baseline for later decision processing) compromised the ability to identify fraudulent websites (Iuga et al. 2016 ). Other dispositional factors include executive functioning (Gavett et al. 2017 ), theory of deception (a decision-making model; Alseadoon et al. 2012 ; Alseadoon et al. 2013 ), and cognitive health and well-being (Lichtenberg et al. 2013 ; James et al. 2014 ). Despite the obvious links to fraud, judgement and decision-making would appear to be a relatively underexplored area of research that could link message and receiver factors in a meaningful way.

The preamble to this review highlighted the limited use of established psychological theories in explaining Internet fraud susceptibility. From the 34 papers that met our inclusion criteria, there was still a lack of coherence in the selection of appropriate psychological principles with which to explain the increased likelihood of victimisation. In addition, there was a lack of consistency in developing useful ways in which these established psychological constructs added to our understanding of fraud conducted via the Internet. In attempting to identify the methods used by criminals and how they are targeted at specific individuals, there is a need to accurately map aspects of the message to individual differences, including Internet usage and psychological factors. This task is made more complex due to many of the papers reviewed here incorporating two or more of the three identified decision-making factors (message, experiential, and/or dispositional).

Personality theories appear to tell us very little about which people are more likely to respond to fraudulent communication via the Internet. Extravert individuals might be prone to higher levels of risk taking, but there was no clear pathway linking extraversion and fraud susceptibility (Pattinson et al. 2011 ). Time-limited messages might appeal to those with lower levels of self-control (Reisig and Holtfreter 2013 ). Similarly, neuroticism increases fraud susceptibility (Cho et al. 2016 ), whereas conscientiousness decreases this tendency (Judges et al. 2017 ). These observations map only loosely onto plausible individual-level explanations. In reality, it seems that the targeting of fraudulent emails, whether for phishing attacks, romance scams, or bank frauds, is done largely at random, through a high volume of communications. However, the mass release of phishing scams somewhat disguises the purposely considered message that is designed to appeal to people of specific dispositions.

What is less clear is why these messages, which receivers negotiate several times per day, are only sometimes successful, even amongst rational and computer-savvy individuals. Central versus peripheral processing may provide the most useful way to understand why people fall for scams, particularly messages that emulate official and/or genuine communications. For example, Vishwanath et al. ( 2011 ) produce a convincing account of the way in which message factors are linked to individual processing through the ELM. In addition, domain-specific knowledge also regulates the ELM process, with increased scam knowledge being linked to the attention given to email cues, i.e. a high level of elaboration likelihood. Schwarz ( 1990 ) reviews the evidence on the effect of mood upon visual information processing more generally and concludes that sad moods decreased global processing, whereas those of a happier disposition focused more on local factors. Specifically, when faced with ambiguous stimuli, mood states influenced how quickly people were likely to process information, particularly when the information was relevant to them. Additionally, people in a happy mood are more likely to pay attention to positive messages (for example, fake lottery wins). Current theories of mood influences on information processing (e.g. the ELM; see Petty and Briñol 2015 ) suggest that happy individuals structure their response to stimuli in a top-down manner, relying more on heuristics and schemas to aid in understanding (Gasper 2004 ). The contrasting bottom-up approach of those in less happy mood states would focus more closely on the stimulus details. Hence, mood could be one key factor that influences how we process potentially fraudulent communications, and therefore our vulnerability to Internet fraud, but it has not yet received significant attention from researchers.

Practical implications concern the ability to identify individuals most at risk of fraud and provide targeted consumer education measures to help prevent victimisation. We know less about the financial situation and other background variables of fraud victims that might increase their risk of victimisation. For example, does financial hardship lead people to take bigger chances with regard to false promises of prizes? Similarly, are those with physical and/or mental health problems likely to engage in dialogue with fraudsters through social isolation, anxiety, and other similar issues? Perhaps people with a predisposition for extraversion and/or risk taking may be ‘happier’, less likely to attend to the peripheral aspects of messages (cues to deception), and therefore at greater risk of becoming fraud victims (Gasper 2004 ). Additional research with a theoretically and practically informed agenda is necessary in this important and growing field. The search terms and inclusion/exclusion criteria employed in this review clearly focused on a relatively narrow band of studies; wider reviews of what we know about offenders and how they target victims specifically can add value to this debate. It would appear, however, that the most used ‘spam/phishing’ email is aimed largely indiscriminately at a wide audience, in the hope of catching individuals who do not fully process the possibility that these communications are fraudulent.

Currently, issues arise in protecting specific groups of individuals, as a high proportion of any general awareness campaign may be targeted at people unlikely ever to fall victim, for example, elderly non-Internet users (Lea et al. 2009 ). This research may help bridge this gap, in that if the more vulnerable groups are identified, or are encouraged to self-identify, prevention material can be specifically targeted at them. One example is the UK National Policing ‘4 P’s’ to tackle fraud and cyber-crime, specifically the elements concerning ‘protection’ and ‘preparation’ of potential fraud victims (City of London Police 2015 ). Similarly, the current ‘Take 5’ campaign, developed by The Metropolitan Police with the support of Financial Fraud Action UK (FFA UK), highlights the importance of not immediately responding to messages. Creating a time-buffer to avoid the peripheral/heuristic interpretation of potentially fraudulent requests could limit the number of responses. Experimental examinations of how people can best control their responses would appear to be a fruitful avenue on the research agenda.

Methodological Limitations

Any systematic review will undoubtedly contain some bias in terms of the search parameters employed; hence, there may be papers not included here whose absence others might see as an omission. A number of papers were rejected, most notably through the stipulation that there be an established psychological theory. The question as to what was deemed ‘established’ is somewhat equivocal; for example, research by Van Wyk and Mason ( 2001 ) was not included because the measures for ‘risk taking’ and ‘socialisation’ were not from published scales. Similarly, Button et al. ( 2014 ) acknowledged that ‘[…] previous research studies have identified certain psychological traits […] This was beyond the remit of this research’ (p. 400). Notably, the research by Modic and colleagues is absent from the reviewed articles due to the search parameters employed here; the development of the StP-II did not fully match our criteria. Empirical examinations of the predictive validity of the StP-II are forthcoming (see Modic et al. 2018 , p. 16) and, if successful, will provide a way of understanding and mapping personality characteristics onto fraudulent activity.

There are also some methodological considerations to be accounted for with regard to the studies themselves, in particular their ecological validity in accounting for behaviour in the real world. Role play scenarios, in which participants are asked to access the account of a character and decide how they would deal with a number of emails, may suffer from expectancy/observer effects. Jones et al. ( 2015 ) argue:

[…] that the way in which these types of tasks are constructed may still prompt socially desirable responses. For example, when given the option ‘type the URL into a browser window’, may subsequently alert participants that this is the most sensible option compared to other options such as ‘click on the link’. Parsons et al. ( 2014 ) demonstrated—using a role-play task as a measure of susceptibility—that knowledge of the nature of the study affected behaviour. Participants identified phishing emails more successfully when they had been alerted to look out for them. Such subject expectancy effects might affect the integrity of a study even more than any socially desirable bias (p. 20).